- The paper presents a benchmark that unifies graph out-of-distribution detection, open-set recognition, and anomaly detection methodologies.

- It evaluates node-level and graph-level tasks using diverse real-world datasets to assess scalability and robustness under varying class distributions.

- Experiments show that the effectiveness of each method varies as the composition of seen and unseen classes changes, offering insights to guide future improvements to GNN models.

G-OSR: A Comprehensive Benchmark for Graph Open-Set Recognition

Introduction to Graph Open-Set Recognition

Graph Neural Networks (GNNs) have demonstrated remarkable capabilities across a range of machine learning tasks, particularly in domains such as social networks, bioinformatics, and chemistry. These successes have mostly been achieved in closed-set settings, where all classes are defined in advance. This paper instead targets a more realistic scenario in which previously unseen classes are likely to appear at test time, which calls for robust Graph Open-Set Recognition (GOSR) methods. Three closely related lines of work have so far evolved separately, with limited exploration of their interconnections: Graph Out-of-Distribution Detection (GOODD), GOSR itself, and Graph Anomaly Detection (GAD).
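To make the closed-set versus open-set distinction concrete, the sketch below contrasts the two decision rules for a single node or graph, given classifier logits over the known classes. It assumes a simple maximum-softmax-probability threshold. This is a common baseline in out-of-distribution detection, not a specific method from the benchmark. The `UNKNOWN` label and the `threshold` value are illustrative assumptions.

```python
import math
from typing import List

# Hypothetical label reserved for "belongs to no known class".
UNKNOWN = -1


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over the known-class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def closed_set_predict(logits: List[float]) -> int:
    """Closed-set rule: always assign one of the known classes."""
    return max(range(len(logits)), key=lambda i: logits[i])


def open_set_predict(logits: List[float], threshold: float) -> int:
    """Open-set rule (assumed MSP baseline): reject as UNKNOWN when confidence is low."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return best if probs[best] >= threshold else UNKNOWN


print(open_set_predict([4.0, 0.5, 0.2], threshold=0.5))  # confident -> class 0
print(open_set_predict([1.0, 1.0, 0.9], threshold=0.5))  # uncertain -> UNKNOWN (-1)
print(closed_set_predict([1.0, 1.0, 0.9]))               # still forced into class 0
```

With `threshold=0.5`, the logits `[4.0, 0.5, 0.2]` give a maximum probability of about 0.95, so the sample is assigned to class 0. The logits `[1.0, 1.0, 0.9]` peak at roughly 0.34, so the sample is rejected as unknown, whereas the closed-set rule would still force it into a known class.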

Existing Challenges and the Need for a Benchmark

The primary obstacle to advancing Graph Open-Set Recognition is the absence of a comprehensive benchmark that evaluates different methodologies across tasks. Several factors hinder progress in this area: limited evaluation coverage, a lack of cross-domain validation, the disconnection between related fields, and inconsistent technical configurations. This paper introduces the G-OSR benchmark, which addresses these gaps by providing a standardized evaluation framework built on datasets from diverse domains, such as citation networks, social media, and molecular graphs.

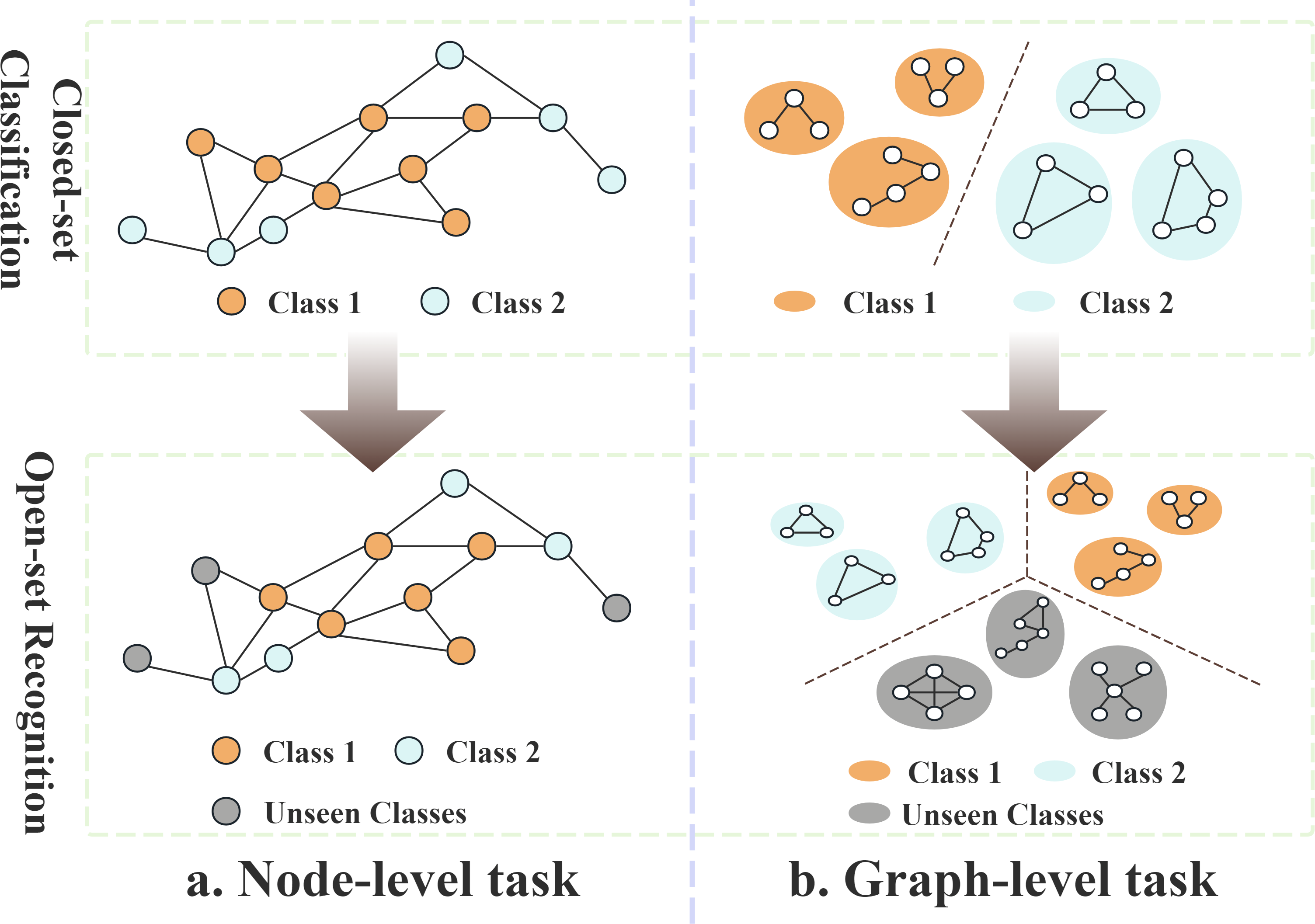

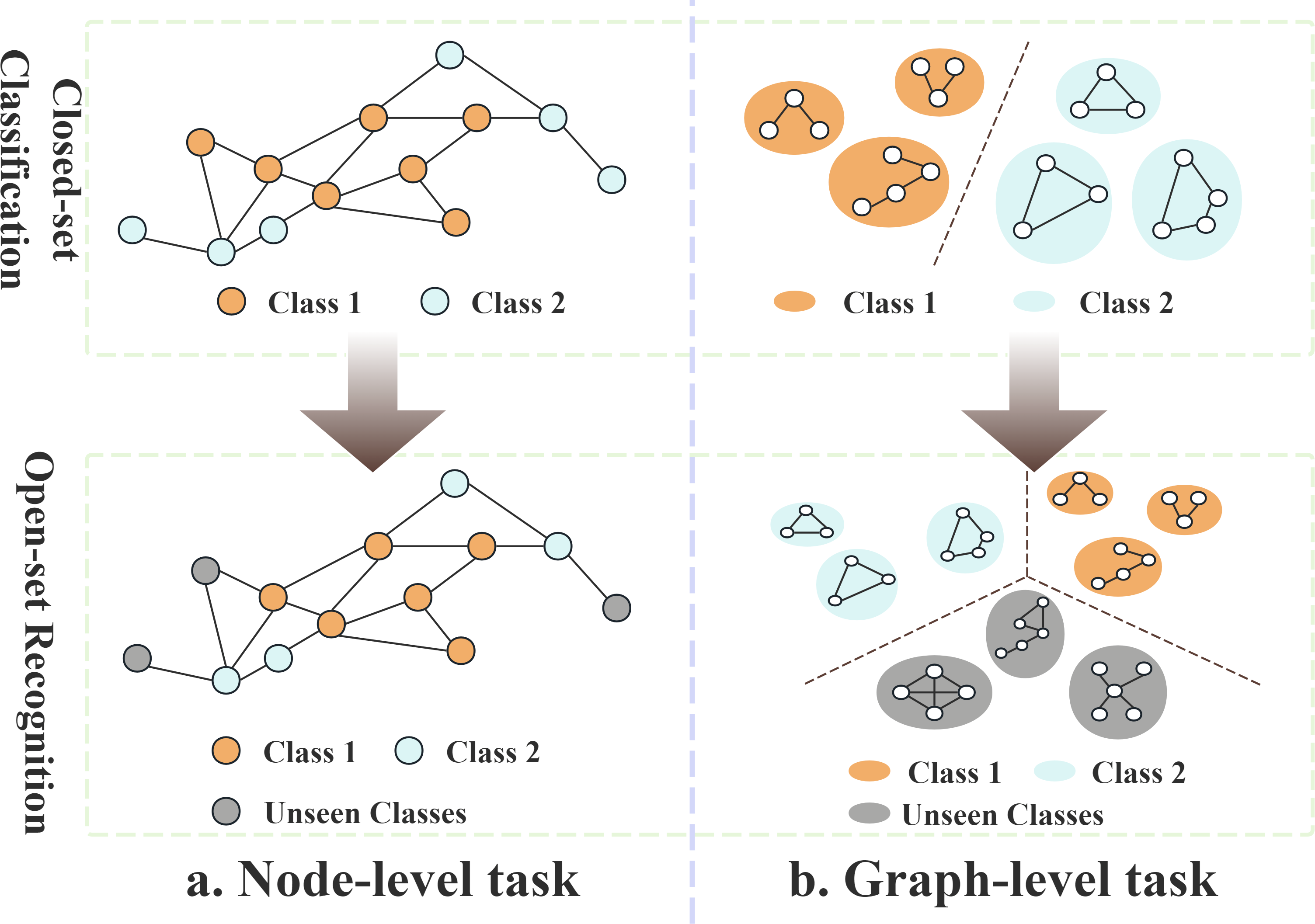

Figure 1: Examples illustrating the difference between closed-set classification and open-set recognition in node-level and graph-level tasks.

Methodological Contributions

The G-OSR benchmark covers both node-level and graph-level open-set recognition tasks. Node-level recognition involves identifying nodes from unseen categories, with an emphasis on each node's local properties and direct connections. Graph-level recognition, in contrast, operates on whole graphs and therefore depends on global properties such as topology and the relationships among nodes. The benchmark uses real-world datasets to ensure robust evaluation and establishes baselines for GOODD, GOSR, and GAD methods. The paper also examines how performance changes with the number of seen and unseen classes, offering insights into the scalability and adaptability of these approaches.
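Evaluating open-set recognition requires splitting the label space into seen and unseen classes. The following is a minimal sketch of one way to do this for node labels. It assumes a random class split and remaps the seen classes to contiguous ids. The function names, the `UNKNOWN` sentinel, and the splitting strategy are all hypothetical and are not taken from the G-OSR code. The same remapping would apply at the graph level, where each label belongs to an entire graph rather than a node.

```python
import random
from typing import Dict, List, Sequence, Tuple

# Hypothetical sentinel for samples whose class was held out as "unseen".
UNKNOWN = -1


def split_classes(num_classes: int, num_seen: int, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly partition class ids into seen and unseen sets (illustrative strategy)."""
    if not 0 < num_seen < num_classes:
        raise ValueError("num_seen must leave at least one seen and one unseen class")
    rng = random.Random(seed)
    classes = list(range(num_classes))
    rng.shuffle(classes)
    return sorted(classes[:num_seen]), sorted(classes[num_seen:])


def build_open_set_labels(labels: Sequence[int], seen: Sequence[int]) -> Tuple[List[int], List[int]]:
    """Remap seen classes to 0..k-1 and every unseen class to UNKNOWN.

    Returns the remapped labels and the indices of samples eligible for
    training, i.e. those that belong to a seen class.
    """
    remap: Dict[int, int] = {c: i for i, c in enumerate(seen)}
    open_labels = [remap.get(y, UNKNOWN) for y in labels]
    train_candidates = [i for i, y in enumerate(open_labels) if y != UNKNOWN]
    return open_labels, train_candidates


# Hypothetical toy example: 5 node classes, 3 of them treated as seen.
seen, unseen = split_classes(num_classes=5, num_seen=3, seed=0)
node_labels = [0, 1, 2, 3, 4, 0, 2]
open_labels, train_candidates = build_open_set_labels(node_labels, seen)
print(seen, unseen, open_labels, train_candidates)
```

Varying `num_seen` in such a split is one way to reproduce the kind of seen/unseen class-count analysis the paper describes. The exact protocol used by G-OSR may differ.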

Experimental Findings

The results obtained with G-OSR show that method effectiveness and robustness vary as the number of seen and unseen classes changes. GAD methods remained comparatively resilient as the class distribution grew more complex, whereas traditional methods required fine-tuning to maintain robust performance. Analyzing these variations provides insight into each method’s adaptability, which can inform future research directions and practical applications.
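The source does not list the metrics G-OSR reports. As an assumption, a common way to measure how well a method separates unseen-class samples from seen-class ones is the area under the ROC curve (AUROC), computed from a per-sample "unknown" score. The sketch below uses the pairwise (Mann-Whitney) formulation. It is quadratic in the number of samples and meant for illustration only. To study sensitivity to class composition, one would recompute it for each seen/unseen configuration.

```python
from typing import Sequence


def auroc(unknown_scores: Sequence[float], is_unknown: Sequence[bool]) -> float:
    """AUROC for unknown-vs-known separation; a higher score means 'more likely unknown'.

    Equals the probability that a randomly chosen unseen-class sample gets a
    higher score than a randomly chosen seen-class sample, counting ties as half.
    """
    pos = [s for s, u in zip(unknown_scores, is_unknown) if u]
    neg = [s for s, u in zip(unknown_scores, is_unknown) if not u]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one known and one unknown sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Example scores could be 1 - max softmax probability (an assumed scoring choice).
scores = [0.9, 0.3, 0.4, 0.2]
labels = [True, True, False, False]
print(auroc(scores, labels))  # 0.75: 3 of the 4 unknown/known pairs are ordered correctly
```

An AUROC of 0.5 corresponds to chance-level separation and 1.0 to perfect separation. This makes scores comparable across different seen/unseen splits, even when the proportion of unknown samples changes.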

Conclusion and Outlook

The paper’s main contribution is the G-OSR benchmark, which enables comprehensive and fair evaluation of GOSR methods across multiple task levels and domains. The connections between GOSR and related fields such as GOODD and GAD open opportunities for innovation in tackling the challenges inherent to open-set environments. Future research can build on this foundation to develop more sophisticated models that are more reliable in real-world applications involving graph-structured data.