Agentic Retrieval-Augmented Generation: A Survey on Agentic RAG

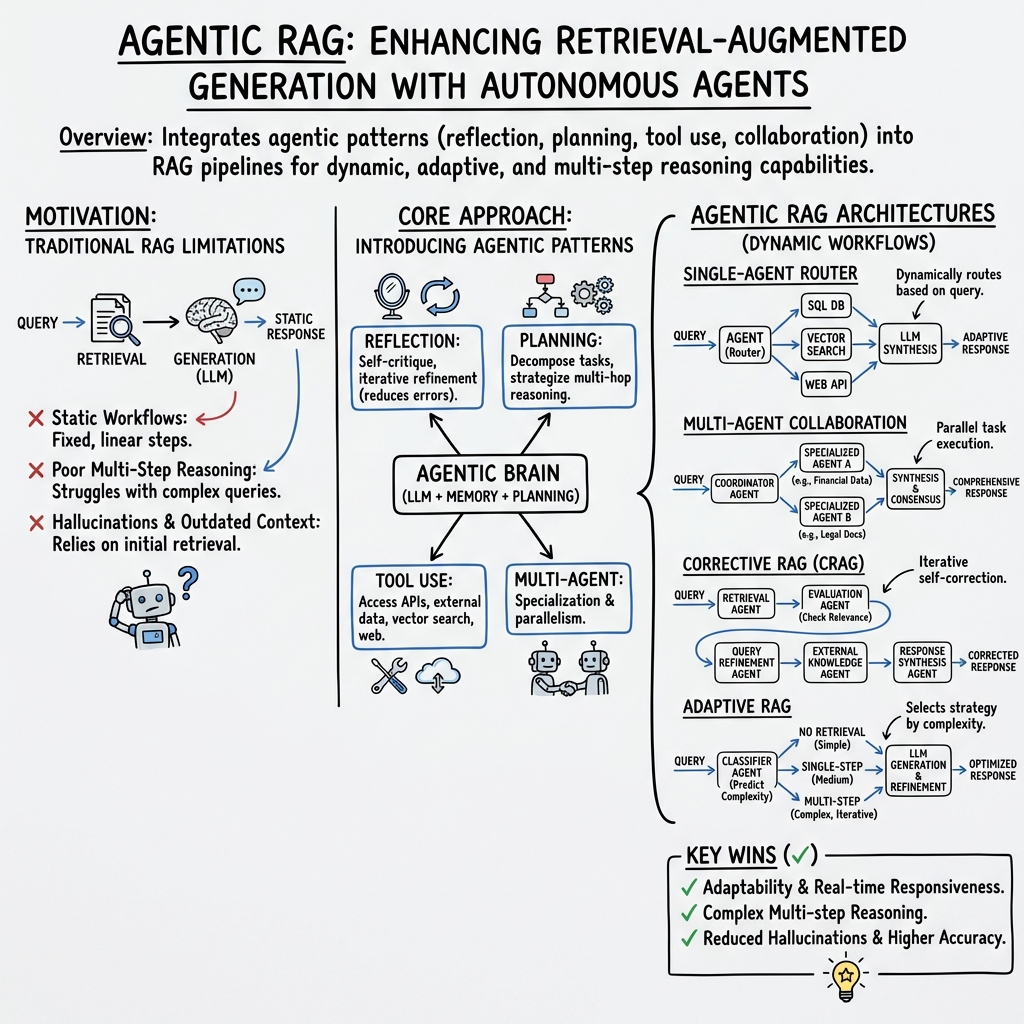

Abstract: Large language models (LLMs) have revolutionized AI by enabling human-like text generation and natural language understanding. However, their reliance on static training data limits their ability to respond to dynamic, real-time queries, resulting in outdated or inaccurate outputs. Retrieval-Augmented Generation (RAG) has emerged as a solution, enhancing LLMs by integrating real-time data retrieval to provide contextually relevant and up-to-date responses. Despite its promise, traditional RAG systems are constrained by static workflows and lack the adaptability required for multi-step reasoning and complex task management. Agentic Retrieval-Augmented Generation (Agentic RAG) transcends these limitations by embedding autonomous AI agents into the RAG pipeline. These agents leverage agentic design patterns (reflection, planning, tool use, and multi-agent collaboration) to dynamically manage retrieval strategies, iteratively refine contextual understanding, and adapt workflows to meet complex task requirements. This integration enables Agentic RAG systems to deliver unparalleled flexibility, scalability, and context awareness across diverse applications. This survey provides a comprehensive exploration of Agentic RAG, beginning with its foundational principles and the evolution of RAG paradigms. It presents a detailed taxonomy of Agentic RAG architectures, highlights key applications in industries such as healthcare, finance, and education, and examines practical implementation strategies. Additionally, it addresses challenges in scaling these systems, ensuring ethical decision-making, and optimizing performance for real-world applications, while providing detailed insights into frameworks and tools for implementing Agentic RAG.

Explain it Like I'm 14

Overview in Simple Terms

This paper is about making AI helpers smarter and more up-to-date. Big LLMs like GPT can write and understand text very well, but they only “know” what they were trained on, which can get old fast. Retrieval-Augmented Generation (RAG) fixes this by letting the AI look things up in real time, like checking websites or databases before answering. The paper goes one step further to explain “Agentic RAG,” where the AI doesn’t just look things up—it uses autonomous “agents” that can plan, reflect, use tools, and even work as a team to solve complex, multi-step problems.

In short: The paper surveys (reviews and organizes) how Agentic RAG works, how it evolved, where it’s used, tools to build it, and what challenges remain.

What Questions Is the Paper Trying to Answer?

The paper aims to answer a few big questions, restated here in everyday language:

- How do we make AI answers more accurate and current, not outdated?

- How can AI handle complicated questions that require multiple steps to solve?

- What are the different designs and patterns for building smarter RAG systems?

- Where is Agentic RAG useful (like health, finance, and education)?

- What are the challenges and best practices for building and scaling these systems safely and efficiently?

How Did the Authors Study It?

This is a survey paper. That means the authors:

- Collected and compared many existing research papers, systems, and tools about RAG and agent-based AI.

- Organized them into a clear “taxonomy” (a structured map) of different approaches.

- Explained core ideas using diagrams and simple breakdowns.

- Highlighted real-world applications, common design patterns, and trade-offs.

To explain the technical parts, think of these analogies:

- LLM: A very smart student who remembers a lot but from a fixed textbook that doesn’t update.

- RAG: The same student taking an open-book test with internet access, so they can look up the latest facts before answering.

- Agents: Helpful teammates who can plan, double-check work, use calculators and websites, and split tasks among themselves.

- Agentic patterns:

  - Reflection: The agent reviews its own answer and improves it (like proofreading and fixing mistakes).

  - Planning: The agent breaks a big problem into smaller steps and decides what to do first.

  - Tool use: The agent uses external tools (search engines, databases, APIs) to get facts or do calculations.

  - Multi-agent: Several agents with different specialties work together, like a team project.
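To make the patterns above a little more concrete, here is a minimal Python sketch of planning, tool use, and reflection working together on one toy question. Everything in it is an assumption made for illustration: the `FACTS` dictionary stands in for a real database, the plan is hard-coded instead of being produced by an LLM, and the function names (`lookup`, `plan`, `reflect`, `run_agent`) are hypothetical, not taken from the paper. The multi-agent pattern is left out to keep it short.

```python
from typing import List

# Hypothetical knowledge source standing in for a real database or search engine.
FACTS = {"boiling point of water (celsius)": 100.0}

def lookup(key: str) -> float:
    """Tool use: fetch a stored fact."""
    return FACTS[key]

def celsius_to_fahrenheit(celsius: float) -> float:
    """Tool use: a small calculator."""
    return celsius * 9 / 5 + 32

def plan(question: str) -> List[str]:
    """Planning: split the task into ordered steps.
    Hard-coded for this toy question; a real agent would generate the steps."""
    return ["lookup", "convert"]

def reflect(answer: float) -> bool:
    """Reflection: sanity-check the result before returning it.
    Nothing can be colder than absolute zero (-459.67 in Fahrenheit)."""
    return answer >= -459.67

def run_agent(question: str) -> float:
    value = 0.0
    for step in plan(question):
        if step == "lookup":
            value = lookup("boiling point of water (celsius)")
        elif step == "convert":
            value = celsius_to_fahrenheit(value)
    if not reflect(value):
        raise ValueError("answer failed the reflection check")
    return value

print(run_agent("What is the boiling point of water in Fahrenheit?"))  # prints 212.0
```

In a real system each of these pieces would likely be driven by an LLM, but the division of labor is the same: plan the steps, call tools to carry them out, then check the result.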

What Are the Key Ideas and Approaches?

The paper explains how RAG grew over time:

- Naive RAG: Simple keyword look-up. Fast, but often misses the real meaning.

- Advanced RAG: Uses smarter matching (semantic search) so it “gets” the meaning better and can connect related ideas.

- Modular RAG: Breaks the system into parts you can mix and match—like combining web search, databases, and calculators—so it’s flexible.

- Graph RAG: Builds and uses a “map” of connections between facts (like a web of people, places, and events) to support reasoning across relationships.

- Agentic RAG: Adds agents that plan, reflect, use tools, and collaborate, so the system can adapt, fix mistakes, and handle multi-step tasks.
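As a rough illustration of the first step in this list, the sketch below shows what "simple keyword look-up" can mean in practice and why it can miss the real meaning. It is a toy under stated assumptions: plain word overlap stands in for real keyword scoring, the three documents are made up, and `keyword_retrieve` and `build_prompt` are hypothetical helper names.

```python
import re
from typing import List, Set

# Made-up documents standing in for a real knowledge base.
DOCS = [
    "RAG lets a language model look up documents before answering.",
    "Automobile maintenance includes changing the oil regularly.",
    "Graph databases store facts as nodes and edges.",
]

def tokenize(text: str) -> Set[str]:
    """Lowercase the text and keep only alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))

def keyword_retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Naive retrieval: rank documents by how many query words they share,
    dropping documents that share none."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in docs]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]

def build_prompt(query: str, docs: List[str]) -> str:
    """Augmentation: paste the retrieved text in front of the question."""
    context = "\n".join(docs) if docs else "(no documents found)"
    return f"Context:\n{context}\n\nQuestion: {query}"

print(keyword_retrieve("graph edges", DOCS))  # finds the graph document
print(keyword_retrieve("car repair", DOCS))   # finds nothing: no shared words
print(build_prompt("What do graph databases store?",
                   keyword_retrieve("graph databases", DOCS)))
```

The "car repair" query fails even though the automobile document is clearly related, because no words match exactly. That gap is what semantic search in Advanced RAG is meant to close.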

The authors also organize Agentic RAG into three main architectures:

- Single-agent systems: One “conductor” agent routes the question to the right tools and gathers an answer. Simple and efficient for smaller setups.

- Multi-agent systems: Several specialized agents work in parallel—one might query databases, another searches the web, another checks recommendations—then combine their results. Good for complex questions.

- Hierarchical systems: A “boss” agent delegates to mid-level and lower-level agents and then synthesizes their results. Useful for strategic, multi-stage problems.
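The difference between the first two architectures can be sketched in a few lines of Python. This is only an illustration under assumptions: the two tools return canned strings, the routing rule is a simple keyword check where a real agent would usually ask an LLM, and all names (`route`, `single_agent`, `multi_agent`) are hypothetical. A hierarchical system would add a top-level agent that decides which of these groups to call and then merges their outputs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical tools. Real ones would call a search API or a database.
def search_web(query: str) -> str:
    return f"[web] results for: {query}"

def query_database(query: str) -> str:
    return f"[database] rows matching: {query}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "web": search_web,
    "database": query_database,
}

def route(query: str) -> str:
    """Single-agent routing: pick one tool with simple keyword rules."""
    lowered = query.lower()
    if any(word in lowered for word in ("order", "account", "invoice")):
        return "database"
    return "web"

def single_agent(query: str) -> str:
    """One 'conductor' sends the query to exactly one tool."""
    return TOOLS[route(query)](query)

def multi_agent(query: str) -> List[str]:
    """Multi-agent fan-out: every specialist works on the query in parallel.
    Results come back in the same order as the agents."""
    agents = list(TOOLS.values())
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda agent: agent(query), agents))

print(single_agent("Where is my order?"))
print(multi_agent("agentic RAG"))
```

The single-agent version is cheaper because only one tool runs, while the multi-agent version gathers more evidence at the cost of extra work that then has to be combined.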

They also describe “Corrective RAG,” where agents check if the retrieved documents are actually relevant and, if not, refine the query, pull in new sources, and try again—like having a built-in quality control loop.
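The quality control loop described here might look something like the following sketch. It is an assumption-heavy toy, not the paper's implementation: relevance is measured by plain word overlap, the query is refined with a tiny hand-written `SYNONYMS` table where a real system would likely use an LLM, and pulling in new sources (such as a web search) is left out.

```python
import re
from typing import List, Optional, Set, Tuple

# Made-up documents and synonym table, for illustration only.
DOCS = [
    "Automobile maintenance includes changing the oil regularly.",
    "Graph databases store facts as nodes and edges.",
]
SYNONYMS = {"car": "automobile", "repair": "maintenance"}

def words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))

def relevance(query: str, doc: str) -> float:
    """Fraction of the query's words that appear in the document."""
    query_words = words(query)
    if not query_words:
        return 0.0
    return len(query_words & words(doc)) / len(query_words)

def retrieve(query: str, docs: List[str]) -> Tuple[str, float]:
    """Return the best-scoring document and its relevance score."""
    best = max(docs, key=lambda doc: relevance(query, doc))
    return best, relevance(query, best)

def refine(query: str) -> str:
    """Rewrite the query by swapping in known synonyms."""
    return " ".join(SYNONYMS.get(word, word) for word in query.lower().split())

def corrective_retrieve(query: str, docs: List[str],
                        threshold: float = 0.5, max_tries: int = 3) -> Optional[str]:
    """Retrieve, grade the result, and refine the query if the grade is too low."""
    for _ in range(max_tries):
        doc, score = retrieve(query, docs)
        if score >= threshold:
            return doc
        query = refine(query)
    return None  # give up instead of returning an irrelevant document

print(corrective_retrieve("car repair", DOCS))
print(corrective_retrieve("quantum physics", DOCS))
```

The first query fails its relevance check, gets rewritten to "automobile maintenance", and then succeeds. The second never passes the check, so the loop returns `None` rather than handing back a document that does not fit.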

What Did They Find and Why Does It Matter?

Here are the big takeaways, written simply:

- Traditional LLMs can sound smart but sometimes make things up or use outdated info. RAG improves this by letting the AI fetch fresh, relevant facts.

- Traditional RAG is still too rigid for multi-step, real-world tasks. Agentic RAG adds planning, reflection, tool use, and teamwork so the system can adapt and improve its answers step by step.

- Different RAG styles fit different needs:

  - Naive: Easy but basic.

  - Advanced: Better understanding and accuracy.

  - Modular: Flexible and customizable for different domains.

  - Graph: Great for reasoning over relationships (like in law or healthcare).

  - Agentic: Best for complex, changing tasks that require judgment and iteration.

- Agentic RAG is especially useful in:

  - Healthcare: Summarizing patient records and the latest research to support decisions.

  - Finance: Combining real-time market data, policies, and historical trends.

  - Education: Personalized tutoring that looks up accurate, current content.

- Challenges remain:

  - Coordination is hard: Multiple agents need smart orchestration.

  - Cost and speed: More agents and more retrieval mean higher computing costs and possible delays.

  - Data quality: Good results depend on good sources and well-structured data.

  - Ethics and safety: Systems must avoid bias, respect privacy, cite sources, and be transparent.

What’s the Impact and What Comes Next?

If we build Agentic RAG systems well, we get AI that:

- Is more trustworthy because it checks facts in real time.

- Solves harder problems by planning, reflecting, and using the right tools.

- Works across many fields by mixing and matching specialized parts.

This could lead to better medical assistants, smarter learning tutors, more reliable financial analysis, and customer support that actually understands and adapts. But to get there, developers and researchers need to:

- Improve orchestration so agents collaborate smoothly.

- Reduce costs and delays through smarter retrieval and caching.

- Ensure fairness, privacy, and transparency.

- Create better benchmarks and datasets to measure real-world performance.
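As one small example of the caching idea in the list above, the sketch below remembers the result of a retrieval so that a repeated question does not trigger a second expensive look-up. It assumes queries repeat exactly and uses Python's standard `functools.lru_cache`. The `cached_retrieve` function and the `CALLS` counter are hypothetical and exist only to show the effect.

```python
from functools import lru_cache

# Counts how many times the "expensive" retrieval actually runs.
CALLS = {"count": 0}

@lru_cache(maxsize=128)
def cached_retrieve(query: str) -> str:
    """Stand-in for a slow search or database call."""
    CALLS["count"] += 1
    return f"documents for: {query}"

cached_retrieve("agentic rag")
cached_retrieve("agentic rag")  # served from the cache, no second look-up
print(CALLS["count"])           # prints 1
```

A real system would also need to decide when cached results are too old to trust, since serving stale facts would undo the main benefit of retrieval.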

Bottom line: Agentic RAG is like upgrading from a single smart student to a well-organized, well-equipped team that can look things up, think in steps, check their work, and deliver better answers—especially when the world changes fast.
