Cosmos World Foundation Model Platform for Physical AI

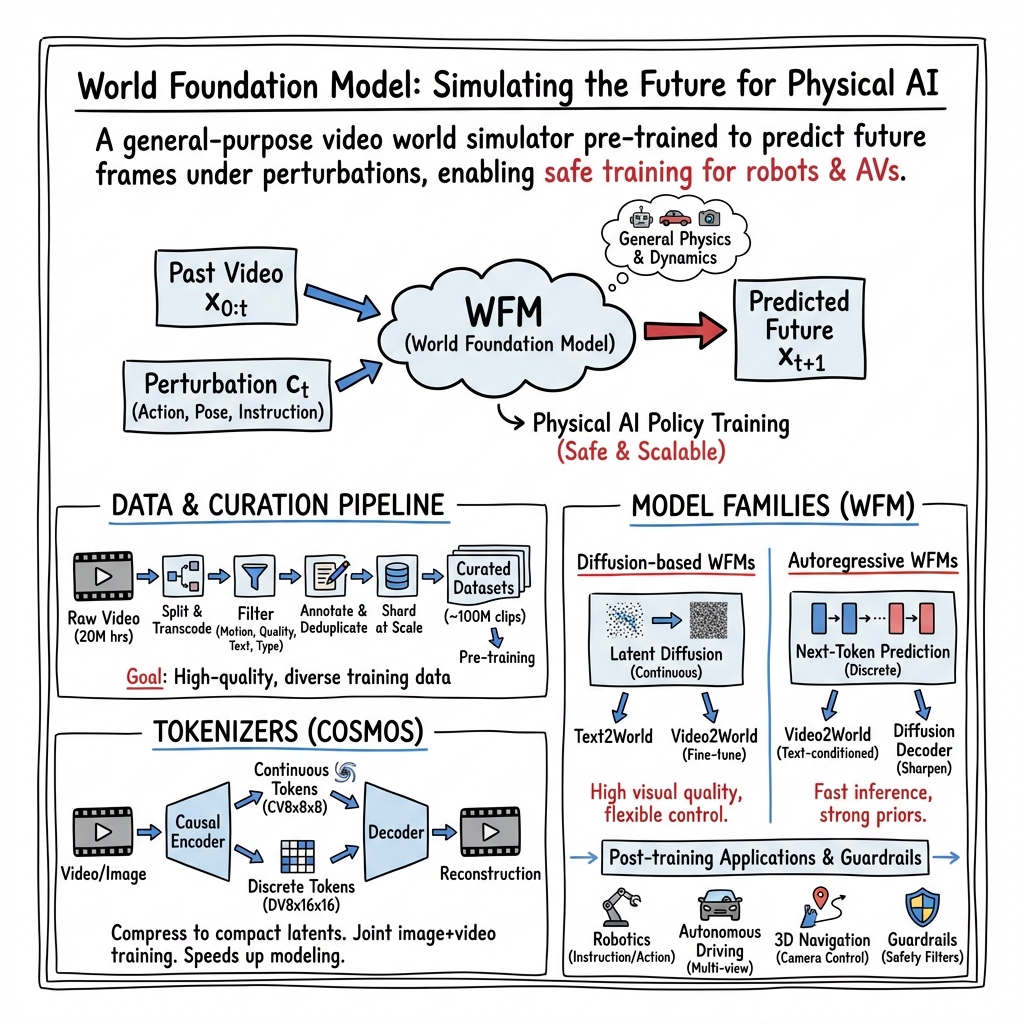

Abstract: Physical AI needs to be trained digitally first. It needs a digital twin of itself, the policy model, and a digital twin of the world, the world model. In this paper, we present the Cosmos World Foundation Model Platform to help developers build customized world models for their Physical AI setups. We position a world foundation model as a general-purpose world model that can be fine-tuned into customized world models for downstream applications. Our platform covers a video curation pipeline, pre-trained world foundation models, examples of post-training of pre-trained world foundation models, and video tokenizers. To help Physical AI builders solve the most critical problems of our society, we make Cosmos open-source and our models open-weight with permissive licenses available via https://github.com/nvidia-cosmos/cosmos-predict1.

Summary

- The paper proposes a novel foundation model platform that pre-trains a digital twin from diverse video data to address data scarcity in Physical AI training.

- It integrates advanced data curation, video tokenization, and both diffusion and autoregressive models to enable high-quality, controllable simulations.

- The work demonstrates improved video generation, 3D consistency, and applicability in camera control, robotic manipulation, and autonomous driving.

The paper introduces the Cosmos World Foundation Model (WFM) Platform for Physical AI, aiming to address the data scarcity challenge in training AI systems that interact with the physical world. The core idea is to pre-train a general-purpose "world model" from large-scale, diverse video data and then fine-tune this model for specific Physical AI setups. The platform includes components for data curation, video tokenization, pre-trained world models (both diffusion-based and autoregressive), examples of post-training for various tasks, and guardrails for safe deployment. The platform and models are released as open source under the NVIDIA Cosmos and NVIDIA Cosmos Tokenizer projects.

Physical AI systems, equipped with sensors and actuators, require training data consisting of interleaved observations and actions. Collecting such data in the real world is difficult and potentially risky. A WFM serves as a digital twin of the world, allowing safe and efficient data generation and policy training in simulation. The paper highlights several potential applications of WFMs for Physical AI builders:

- Policy evaluation: Testing policies in a WFM before real-world deployment.

- Policy initialization: Using a WFM to provide a starting point for policy models.

- Policy training: Training policies via reinforcement learning within a WFM.

- Planning/Model-Predictive Control: Simulating future states under different actions to choose optimal sequences.

- Synthetic data generation: Creating diverse training data, potentially conditioned on metadata like depth or semantic maps for Sim2Real transfer.
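The planning use case above can be illustrated with a minimal sketch of model-predictive control. Everything here is an assumption made for illustration: `toy_world_model` is a hypothetical one-line stand-in for a WFM rollout step, the state is a single integer, and the action set is a small discrete set that can be enumerated exhaustively. A real WFM would predict video frames and the planner would sample action sequences rather than enumerate them.

```python
from itertools import product

ACTIONS = (-1, 0, 1)  # hypothetical discrete action set


def toy_world_model(state, action):
    """Hypothetical stand-in for one WFM rollout step: predict the next state."""
    return state + action


def plan(state, goal, horizon=3, model=toy_world_model):
    """Simulate every action sequence and return the one with the lowest cost."""

    def rollout_cost(actions):
        s, cost = state, 0
        for a in actions:
            s = model(s, a)          # imagined next state
            cost += abs(goal - s)    # distance to the goal at each step
        return cost

    return min(product(ACTIONS, repeat=horizon), key=rollout_cost)


if __name__ == "__main__":
    print(plan(state=0, goal=3))
```

In a receding-horizon loop, only the first action of the returned sequence would be executed before planning again from the newly observed state.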

The Cosmos platform comprises several key components:

1. Data Curation:

A scalable pipeline is developed to curate high-quality video datasets for tokenizer and WFM training. The pipeline involves five steps:

- Splitting: Raw videos are segmented into shorter clips without scene changes using robust shot detection (such as TransNetV2 (Li et al., 2020)) and transcoded into a consistent format (MP4 with h264_nvenc) for efficient processing. Hardware acceleration (NVDEC/NVENC on GPUs such as the L40S) is used heavily, through PyNvVideoCodec, within a Ray-based orchestration layer (Moritz et al., 2017).

- Filtering: Low-quality or irrelevant clips are removed, and valuable clips are tagged. This includes motion filtering (using optical flow), visual quality filtering (perceptual scores via a DOVER-based model (Santos et al., 2023), aesthetic scores), text overlay filtering (using an MLP classifier on video embeddings), and video type filtering (classifying content based on a custom taxonomy using a VLM-labeled dataset).

- Annotation: A large vision-language model (VLM), specifically an internal VILA model (Chen et al., 2024; Hurtado-Gutiérrez et al., 2024) fine-tuned for video captioning, generates detailed text descriptions for each clip. Inference efficiency for VILA is boosted using FP8 quantization with TensorRT-LLM, achieving a 10x speedup.

- Deduplication: Semantic duplicates are removed using InternVideo2 embeddings (Wang et al., 2024) and GPU-accelerated k-means clustering (RAPIDS), based on methods like SemDeDup (Abbas et al., 2023) and DataComp (Wang et al., 2024). About 30% of the data is removed.

- Sharding: Processed clips are packaged into webdatasets, sharded by resolution, aspect ratio, and length, to align with the training curriculum.
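The deduplication step above can be sketched in a few lines. This is a simplified illustration, not the paper's pipeline: it skips the k-means clustering stage and compares every clip against the clips already kept, and the `threshold` value and the two-dimensional embeddings are hypothetical. In a SemDeDup-style setup, the same pairwise comparison would run only within each cluster.

```python
import math


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def deduplicate(embeddings, threshold=0.95):
    """Greedily keep a clip only if it is not too similar to any kept clip.

    Returns the indices of the clips that survive. `threshold` is an
    illustrative value, not one taken from the paper.
    """
    kept = []
    for i, emb in enumerate(embeddings):
        if all(cosine(emb, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept


if __name__ == "__main__":
    clips = [[1.0, 0.0], [0.999, 0.01], [0.0, 1.0]]  # toy embeddings
    print(deduplicate(clips))
```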

2. Tokenizer:

Cosmos Tokenizer is a suite of continuous and discrete visual tokenizers designed for efficiency and high reconstruction quality.

- Architecture: An attention-based encoder-decoder structure with temporal causality is used. It operates in the wavelet space using a 3D Haar wavelet transform (Lepik and Hein, 2014) followed by causal spatio-temporal factorized convolutions and attention layers. Layer Normalization is preferred over Group Normalization.

- Token Types: Continuous tokenizers use a standard Autoencoder (AE) formulation for latent embeddings. Discrete tokenizers employ Finite-Scalar-Quantization (FSQ) (Mentzer et al., 2023) for quantized indices (e.g., 6 FSQ levels for a vocabulary size of 64,000).

- Training: A joint image and video training strategy alternates batches. Training uses L1, Perceptual (VGG-19 features), Optical Flow (Schindler, 2020), and Gram-matrix losses, with adversarial loss in a second fine-tuning stage.

- Evaluation: Evaluated on standard image (MS-COCO (McBride et al., 2014), ImageNet (Deng et al., 2009)) and video (DAVIS (Sanchez et al., 2016)) benchmarks, and a new dataset called TokenBench (curated from BDD100K (Paruchuri et al., 2020), EgoExo-4D (Cheng et al., 2024), BridgeData V2 (Lee et al., 2023), Panda-70M (Kurchan, 2024)). Cosmos Tokenizer significantly outperforms baselines in reconstruction quality (PSNR, SSIM, rFID (Heusel et al., 2017), rFVD (Lee et al., 2019)) and runtime efficiency (2x-12x faster), while having fewer parameters.
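To make the link between FSQ levels and vocabulary size concrete, here is a minimal sketch of scalar quantization into a single token index. The level configuration `(8, 8, 8, 5, 5, 5)` is an assumption chosen because its product is 64,000; the bounding and rounding scheme is a simplified variant for illustration and differs in detail from the FSQ paper, which handles even level counts with an offset.

```python
import math

LEVELS = (8, 8, 8, 5, 5, 5)  # assumed configuration; the product is 64,000


def vocab_size(levels=LEVELS):
    """Number of distinct codes: the product of the per-channel level counts."""
    return math.prod(levels)


def quantize(z, levels=LEVELS):
    """Bound each channel with tanh, then round it to one of its levels."""
    digits = []
    for value, n in zip(z, levels):
        unit = (math.tanh(value) + 1.0) / 2.0   # squashed into (0, 1)
        digits.append(round(unit * (n - 1)))    # integer in 0 .. n-1
    return digits


def to_index(digits, levels=LEVELS):
    """Combine per-channel digits into one token index (mixed-radix number)."""
    index, base = 0, 1
    for d, n in zip(digits, levels):
        index += d * base
        base *= n
    return index


if __name__ == "__main__":
    code = quantize([0.3, -1.2, 2.0, 0.0, -0.4, 1.1])
    print(code, to_index(code), vocab_size())
```

Because the codebook is implicit in the rounding grid, no codebook parameters have to be learned, which is one reason FSQ is attractive for discrete tokenizers.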

3. World Foundation Model Pre-training:

Pre-trained WFMs are built using two scalable paradigms: diffusion and autoregressive models, leveraging Transformer architectures (Vaswani et al., 2017). Training is performed on a cluster of 10,000 NVIDIA H100 GPUs.

- Diffusion-based WFM:

- Formulation: Uses the EDM approach (Islam et al., 2024, Karras et al., 2022) for denoising score matching.

- Architecture: Builds upon DiT (Hang et al., 2023), adapted for video. Features include 3D patchification, hybrid positional embedding (FPS-aware 3D RoPE (Su et al., 2021) plus a learnable APE), cross-attention for text conditioning (using T5-XXL embeddings (Paruchuri et al., 2020)), QK-Normalization (Wortsman et al., 2023), and AdaLN-LoRA (Valevski et al., 2024) for parameter efficiency (reducing an 11B-parameter model to 7B).

- Training: Joint image and video training with domain-specific normalization. Progressive training starts at lower resolution/frame count and scales up. Multi-aspect training handles various aspect ratios using reflection padding. Mixed-precision (BF16/FP32) training with AdamW (You et al., 2019) is used. Text conditioning uses T5-XXL and can leverage classifier-free guidance (Ho et al., 2022). Video2World models are trained by conditioning on previous frames with augmented noise.

- Scaling: Uses FSDP (Suleiman et al., 2023) and Context Parallelism (CP) (Liu et al., 2023; Jacobs et al., 2023), in the P2P variant from NVIDIA TransformerEngine, to handle large memory requirements.

- Enhancer: A Prompt Upsampler (Cosmos-1.0-PromptUpsampler-12B-Text2World), fine-tuned from Mistral-NeMo-12B-Instruct using a long-to-short captioning strategy, converts short user prompts to longer, detailed prompts matching the training distribution. Pixtral-12B (Agrawal et al., 2024) is used for Video2World prompt upsampling.

- Results: 7B and 14B Text2World and Video2World models generate high-quality, dynamic videos. 14B models show finer details and better motion stability.

- Autoregressive-based WFM:

- Formulation: World simulation as next-token prediction using discrete video tokens from Cosmos-1.0-Tokenizer-DV8x16x16.

- Architecture: Transformer decoder (Llama3-style (Grattafiori et al., 2024)). Features include 3D-aware positional embeddings (YaRN-extended 3D RoPE (Peng et al., 2023) plus 3D APE), cross-attention for text conditioning (using T5-XXL), QK-Normalization (Wortsman et al., 2023), and Z-loss (de Brébisson and Vincent, 2016) for training stability. Vocabulary size is 64,000.

- Scaling: Uses Tensor Parallelism (TP) (Shoeybi et al., 2019) and Sequence Parallelism (SP) (Suleiman et al., 2023) to manage memory.

- Training: Multi-stage training starts with video prediction (17 then 34 frames) and adds text conditioning in later stages with joint image/video data. A "cooling-down" phase with high-quality data is used. Models include 4B, 5B (Video2World), 12B, and 13B (Video2World) variants.

- Inference Optimization: Leverages LLM techniques like KV caching, torch.compile, and speculative decoding (Medusa (Chiang, 2024)) for real-time generation (demonstrated at 10 FPS at 320x512 resolution). Medusa adds extra decoding heads for parallel token prediction.

- Enhancer: A Diffusion Decoder (Cosmos-1.0-Diffusion-7B-Decoder-DV8x16x16ToCV8x8x8), fine-tuned from Cosmos-1.0-Diffusion-7B-Text2World, maps discrete tokens to higher-quality continuous tokens to mitigate blurriness from aggressive discrete tokenization.

- Results: Larger models (12B, 13B) generate sharper videos with better motion than smaller models (4B, 5B). The diffusion decoder significantly enhances sharpness. Failure cases like objects unexpectedly appearing are observed, with higher rates for smaller models and single-frame conditioning.

- Evaluation of Pre-trained WFMs:

- 3D Consistency: Evaluated on static scenes from RealEstate10K (Gong et al., 2018). Metrics include geometric consistency (Sampson error (Hartley and Zisserman, 2003), camera pose estimation success rate using SuperPoint (Ergashev, 2018) + LightGlue (Lindenberger et al., 2023) + COLMAP (Stern et al., 2016)) and view synthesis consistency (PSNR, SSIM, LPIPS (Zhang et al., 2018) by fitting 3D Gaussian splatting (Bravyi et al., 2023)). Cosmos WFMs significantly outperform VideoLDM (Klinger et al., 2023) and achieve geometric consistency close to real videos.

- Physics Alignment: Evaluated using synthetic physics simulations (PhysX, Isaac Sim). Scenarios test gravity, collision, inertia, etc. Metrics include pixel-level (PSNR, SSIM), feature-level (DreamSim (Lahiri et al., 2023)), and object-level (Avg. IoU of tracked objects using SAMURAI (Yang et al., 2024)). Cosmos WFMs show intuitive physics understanding but struggle with complex dynamics, highlighting the need for improved data and model design. Diffusion models perform better at pixel-level quality in 9-frame conditioning.
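The Sampson error used as the geometric-consistency metric in the 3D evaluation above measures how far a pair of matched points is from satisfying the epipolar constraint. The sketch below computes the standard squared Sampson distance for one correspondence. The fundamental matrix `F` and the points are hypothetical toy values, and the paper may aggregate or scale the error differently (for example, by taking a square root or averaging over matches).

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(m):
    """Transpose a 3x3 matrix."""
    return [[m[j][i] for j in range(3)] for i in range(3)]


def sampson_error(F, x1, x2):
    """Squared Sampson distance for a match x1 (frame 1) and x2 (frame 2).

    F is a 3x3 fundamental matrix with x2^T F x1 = 0 for perfect matches.
    Points are (x, y) pixel coordinates and are lifted to homogeneous form.
    """
    p1 = [x1[0], x1[1], 1.0]
    p2 = [x2[0], x2[1], 1.0]
    Fp1 = mat_vec(F, p1)
    Ftp2 = mat_vec(transpose(F), p2)
    residual = sum(a * b for a, b in zip(p2, Fp1))  # x2^T F x1
    denom = Fp1[0] ** 2 + Fp1[1] ** 2 + Ftp2[0] ** 2 + Ftp2[1] ** 2
    return residual ** 2 / denom


if __name__ == "__main__":
    # Toy F for a camera translating along the x-axis: matches must share y.
    F = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
    print(sampson_error(F, (0.2, 1.0), (0.7, 1.0)))
    print(sampson_error(F, (0.0, 1.0), (0.0, 3.0)))
```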

4. Post-trained World Foundation Models:

Pre-trained WFMs are fine-tuned for specific Physical AI tasks:

- Camera Control: Cosmos-1.0-Diffusion-7B-Video2World-Sample-CameraCond is fine-tuned on DL3DV-10K (Kayal et al., 2024) by conditioning on camera poses using Plücker embeddings (Arjovsky, 2021). Evaluated on RealEstate10K against CamCo (Xu et al., 2024) using FID/FVD and camera trajectory alignment (rotation/translation error after Procrustes analysis). The Cosmos model significantly outperforms CamCo, generating realistic, 3D-consistent, and controllable videos that generalize to unseen camera trajectories.

- Robotic Manipulation: Fine-tuned for instruction-based video prediction (on Cosmos-1X dataset, egocentric videos with instructions) and action-based next-frame generation (on Bridge (Tevet et al., 2022) dataset, third-person videos with action vectors). Cosmos-1.0-Diffusion-7B/Autoregressive-5B-Video2World are fine-tuned by adding conditioning for instruction (text embeddings via cross-attention) or action (MLP embedder, incorporated via cross-attention or timestep embedding). Evaluated via human evaluation (instruction following, object permanence, verity) against VideoLDM-Instruction and quantitatively (PSNR, SSIM, Latent L2, FVD) against IRASim-Action (Zhu et al., 2024). Cosmos models perform better than baselines on both tasks.

- Autonomous Driving: Fine-tuned on the internal multi-view RDS dataset (3.6M 20-second clips, 6 synchronized camera views + ego-motion). Cosmos-1.0-Diffusion-7B-Text2World is fine-tuned to generate 6 views simultaneously, using view-independent PE, view embeddings, and view-dependent cross-attention. Variants include Text2World-Sample-MultiView, Text2World-Sample-MultiView-TrajectoryCond (conditioned on future 3D trajectories), and Video2World-Sample-MultiView (conditioned on previous frames for extension). Evaluated against VideoLDM-MultiView on generation quality (FID/FVD), multi-view consistency (Temporal/Cross-view Sampson Error using a robust pose estimation pipeline (Liang et al., 2024)), and trajectory consistency (Trajectory Agreement Error using multi-view pose estimation, Trajectory Following Error against ground truth). Cosmos models significantly outperform VideoLDM-MultiView and achieve consistency close to real videos. Trajectory-conditioned models accurately follow given paths. Object detection and tracking evaluation confirms physical consistency.
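The Plücker embeddings used for camera conditioning above encode each pixel as the 3D ray passing through it. The following is a minimal sketch under stated assumptions: a pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`, a camera-to-world rotation `R`, and a camera centre `origin`. The ordering of the six values (moment first, then direction) is a common convention and is an assumption here, not something confirmed by the paper.

```python
import math


def plucker_ray(u, v, fx, fy, cx, cy, R, origin):
    """Return the 6D Plücker coordinates (o x d, d) of the ray through pixel (u, v)."""
    # Back-project the pixel to a direction in camera coordinates.
    d_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    # Rotate into world coordinates and normalise to unit length.
    d = [sum(R[i][k] * d_cam[k] for k in range(3)) for i in range(3)]
    norm = math.sqrt(sum(c * c for c in d))
    d = [c / norm for c in d]
    # Moment vector: cross product of the camera centre and the direction.
    o = origin
    moment = [
        o[1] * d[2] - o[2] * d[1],
        o[2] * d[0] - o[0] * d[2],
        o[0] * d[1] - o[1] * d[0],
    ]
    return moment + d


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    ray = plucker_ray(320, 240, 500.0, 500.0, 320.0, 240.0, identity, (1.0, 0.0, 0.0))
    print(ray)
```

Evaluating this for every pixel of a frame gives a six-channel map that can be fed to the model alongside the video latents, which is the general idea behind this style of camera conditioning.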

5. Guardrails:

A two-stage system ensures safe usage:

- Pre-Guard: Blocks harmful text prompts using Keyword Blocking (blocklist search after lemmatization) and Aegis Guardrail (Ghosh et al., 2024) (LLM-based classifier, fine-tuned Llama-Guard (Inan et al., 2023)).

- Post-Guard: Blocks harmful visual outputs using a Video Content Safety Filter (frame-level classifier trained on VLM-labeled, synthetic, and human-annotated data using SigLIP embeddings (Horn et al., 2023)) and a Face Blur Filter (using RetinaFace (Hebenstreit et al., 2020)). A dedicated red team actively probes the system to improve safety.
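The keyword-blocking part of the Pre-Guard can be sketched as a blocklist lookup on lemmatized tokens. This is an illustrative stand-in only: `LEMMAS` is a hypothetical lookup table used in place of a real lemmatizer, and `BLOCKLIST` contains a placeholder term rather than any entry from the actual system.

```python
import re

# Hypothetical lemma table standing in for a real lemmatizer.
LEMMAS = {"hazards": "hazard", "hazardous": "hazard"}

# Hypothetical placeholder blocklist of lemmatized terms.
BLOCKLIST = {"hazard"}


def lemmatize(token):
    """Map a token to its base form, falling back to the token itself."""
    return LEMMAS.get(token, token)


def is_blocked(prompt, blocklist=BLOCKLIST):
    """Return True if any lemmatized token of the prompt is on the blocklist."""
    tokens = re.findall(r"[a-z]+", prompt.lower())
    return any(lemmatize(token) in blocklist for token in tokens)


if __name__ == "__main__":
    print(is_blocked("A robot arm stacking boxes"))
    print(is_blocked("Show several HAZARDS on the road"))
```

Lemmatizing before the lookup lets one blocklist entry cover inflected forms, while an LLM-based classifier such as the one described above handles prompts that avoid listed words altogether.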

In conclusion, Cosmos WFMs provide a platform with powerful pre-trained models and tools to build physical AI systems. While they demonstrate significant progress in visual quality, 3D consistency, and controllability across diverse domains, challenges remain in achieving perfect physics adherence and comprehensive evaluation. The choice between diffusion and autoregressive models presents trade-offs, with hybrid approaches offering potential future directions. The open-source release aims to democratize access and accelerate research in Physical AI.

Related Papers

- Can Test-Time Scaling Improve World Foundation Model? (2025)

- Cosmos-Drive-Dreams: Scalable Synthetic Driving Data Generation with World Foundation Models (2025)

- OmniWorld: A Multi-Domain and Multi-Modal Dataset for 4D World Modeling (2025)

- World Simulation with Video Foundation Models for Physical AI (2025)

- GigaWorld-0: World Models as Data Engine to Empower Embodied AI (2025)

- Dexterous World Models (2025)

- Walk through Paintings: Egocentric World Models from Internet Priors (2026)

- Cosmos Policy: Fine-Tuning Video Models for Visuomotor Control and Planning (2026)

- Advancing Open-source World Models (2026)

- WorldBench: Disambiguating Physics for Diagnostic Evaluation of World Models (2026)

Authors (78)
