Overview of LLaVA-o1: Empowering Vision-LLMs with Structured Reasoning

The paper "LLaVA-o1: Let Vision Language Models Reason Step-by-Step" introduces LLaVA-o1, a vision-language model (VLM) designed to strengthen reasoning through an autonomous, multistage process. Many existing models rely on chain-of-thought (CoT) prompting. LLaVA-o1 instead works on its own through four sequential stages: summarizing the question, interpreting the relevant visual content, reasoning logically, and synthesizing a conclusion. This structured approach targets the weak systematic reasoning observed in conventional VLMs.

Core Contribution

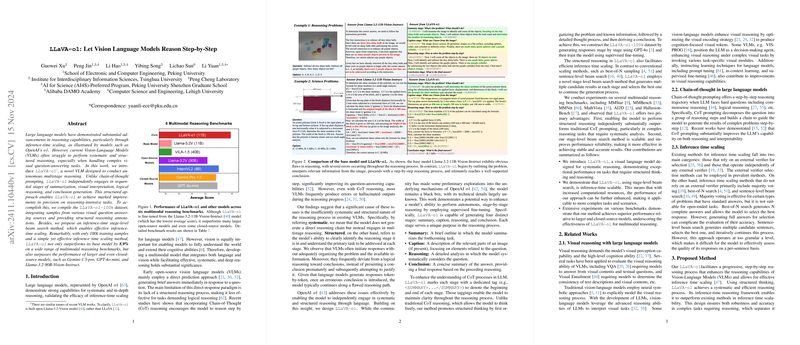

Central to LLaVA-o1's design is its ability to carry out multistage reasoning autonomously. The model is trained on only about 100k samples. These are drawn from diverse visual question-answering datasets and annotated with stage-by-stage reasoning. With this small dataset, it still outperforms a range of open- and closed-source models. LLaVA-o1 outperforms its base model, Llama-3.2-11B-Vision-Instruct, by 8.9% across a range of multimodal reasoning benchmarks. It also surpasses larger or closed-source models such as Llama-3.2-90B-Vision-Instruct and Gemini-1.5-Pro.
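To make "structured annotations" concrete, the sketch below turns one annotated VQA sample into a single tagged training target. It assumes the stage tags `<SUMMARY>`, `<CAPTION>`, `<REASONING>`, and `<CONCLUSION>`. The `StructuredSample` record and the `build_target` helper are hypothetical illustrations, not the paper's data pipeline.

```python
from dataclasses import dataclass

# Assumed stage tags, in the order the model is expected to produce them.
STAGES = ("SUMMARY", "CAPTION", "REASONING", "CONCLUSION")


@dataclass
class StructuredSample:
    """Hypothetical record: one VQA question plus stage-by-stage annotations."""
    image_path: str
    question: str
    summary: str
    caption: str
    reasoning: str
    conclusion: str


def build_target(sample: StructuredSample) -> str:
    """Wrap each annotated stage in its tag, in fixed stage order, one stage per line."""
    parts = [sample.summary, sample.caption, sample.reasoning, sample.conclusion]
    return "\n".join(f"<{tag}>{text.strip()}</{tag}>" for tag, text in zip(STAGES, parts))


sample = StructuredSample(
    image_path="example.png",  # hypothetical file name
    question="How many apples are on the table?",
    summary="I will count the apples visible on the table.",
    caption="A wooden table with three red apples on it.",
    reasoning="Counting each apple individually gives three.",
    conclusion="3",
)
print(build_target(sample))
```

Because the stage order is fixed in one place, every target in such a dataset has the same layout. That shared layout is what lets the model learn to emit the stages in sequence.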

LLaVA-o1 also introduces a stage-level beam search method for inference-time scaling. Best-of-N sampling chooses among complete responses, and sentence-level beam search chooses after every sentence. This method takes a middle path: it generates several candidates for each reasoning stage and keeps the best one before moving on to the next stage. Selecting at stage granularity lets extra inference-time compute translate into better answers, which helps the model handle more complex and nuanced reasoning tasks.
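The stage-level search described above can be sketched as a loop over the four stages. At each stage the loop samples `n` candidates conditioned on the text so far, picks a winner through successive pairwise comparisons, and appends it before generating the next stage. This is a minimal sketch under stated assumptions. `generate` and `prefer` are hypothetical stand-ins for calls to a VLM, and the toy versions below only simulate sampling and judging with random "quality" numbers.

```python
import random
from typing import Callable, List

STAGES = ("SUMMARY", "CAPTION", "REASONING", "CONCLUSION")

# Hypothetical interfaces; in a real system both would call the model.
GenerateFn = Callable[[str, str, random.Random], str]  # (prefix, stage, rng) -> stage text
PreferFn = Callable[[str, str, str], bool]             # (prefix, a, b) -> True if a is at least as good


def select_best(prefix: str, candidates: List[str], prefer: PreferFn) -> str:
    """Knockout selection: keep the winner of successive pairwise comparisons (N-1 calls)."""
    best = candidates[0]
    for challenger in candidates[1:]:
        if not prefer(prefix, best, challenger):
            best = challenger
    return best


def stage_level_beam_search(question: str, generate: GenerateFn, prefer: PreferFn,
                            n: int = 4, seed: int = 0) -> str:
    """Sample n candidates per stage, keep the best, and condition the next stage on it."""
    rng = random.Random(seed)
    prefix = question
    for stage in STAGES:
        candidates = [generate(prefix, stage, rng) for _ in range(n)]
        chosen = select_best(prefix, candidates, prefer)
        prefix += f"\n<{stage}>{chosen}</{stage}>"
    return prefix


def toy_generate(prefix: str, stage: str, rng: random.Random) -> str:
    # Toy sampler: each candidate carries a random quality score.
    return f"{stage.lower()} draft q={rng.randint(0, 100)}"


def toy_prefer(prefix: str, a: str, b: str) -> bool:
    # Toy judge: prefer the candidate with the higher quality score.
    return int(a.split("q=")[1]) >= int(b.split("q=")[1])


print(stage_level_beam_search("Q: How many apples?", toy_generate, toy_prefer, n=4))
```

The design choice to note is where selection happens. Best-of-N would select once over whole responses, so a weak summary or caption could still end up in the final answer. Here selection runs once per stage, so each later stage builds only on the preferred earlier ones.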

Key Findings

Experiments on multiple multimodal reasoning benchmarks, including MMStar, MMBench, and MathVista, show LLaVA-o1 outperforming the models it is compared against. In its structured reasoning process, the output of each stage is marked by a dedicated tag. This process improves on conventional CoT methods in precision and robustness, particularly on tasks that demand logical, systematic analysis.
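Because each stage is delimited by its own tag, downstream code can split a response into its stages, check that they appear in the expected order, and read off the final answer. The sketch below assumes the tag names `SUMMARY`, `CAPTION`, `REASONING`, and `CONCLUSION`. `parse_stages` is a hypothetical helper, not part of any released LLaVA-o1 code.

```python
import re
from typing import Dict

STAGES = ("SUMMARY", "CAPTION", "REASONING", "CONCLUSION")
# Non-greedy match of <TAG>...</TAG>; the backreference \1 requires a matching close tag,
# and DOTALL lets a single stage span several lines.
_STAGE_RE = re.compile(r"<(SUMMARY|CAPTION|REASONING|CONCLUSION)>(.*?)</\1>", re.DOTALL)


def parse_stages(response: str) -> Dict[str, str]:
    """Split a tagged response into {stage: text}; raise if stages are missing or out of order."""
    found = [(m.group(1), m.group(2).strip()) for m in _STAGE_RE.finditer(response)]
    order = [tag for tag, _ in found]
    if order != list(STAGES):
        raise ValueError(f"expected stages {list(STAGES)}, got {order}")
    return dict(found)


resp = (
    "<SUMMARY>Count the apples.</SUMMARY>\n"
    "<CAPTION>Three apples on a table.</CAPTION>\n"
    "<REASONING>One, two, three.</REASONING>\n"
    "<CONCLUSION>3</CONCLUSION>"
)
print(parse_stages(resp)["CONCLUSION"])  # prints 3
```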

Further analysis highlights the importance of the structured tags. Training without them causes a significant drop in performance, which underscores their role in guiding the model along a coherent reasoning path. Comparisons also show that the curated LLaVA-o1-100k dataset improves the model substantially more than training data that lacks explicit reasoning annotations.
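The two comparisons above can be pictured as transformations of a tagged training target. One keeps the reasoning text but removes the stage tags. The other keeps only the final answer, mimicking data without reasoning annotations. The helpers below are hypothetical illustrations that assume the four tag names used earlier. They are not the paper's ablation code.

```python
import re

# Matches any opening or closing stage tag, e.g. <CAPTION> or </CAPTION>.
_TAG_RE = re.compile(r"</?(?:SUMMARY|CAPTION|REASONING|CONCLUSION)>")
# Captures the final-answer stage only.
_CONCLUSION_RE = re.compile(r"<CONCLUSION>(.*?)</CONCLUSION>", re.DOTALL)


def strip_stage_tags(target: str) -> str:
    """Tag-free variant: keep all reasoning text but drop the stage markers."""
    return _TAG_RE.sub("", target).strip()


def answer_only(target: str) -> str:
    """Answer-only variant: keep just the conclusion, as in data without reasoning annotations."""
    m = _CONCLUSION_RE.search(target)
    return m.group(1).strip() if m else target.strip()


target = "<SUMMARY>Count the apples.</SUMMARY>\n<CONCLUSION>3</CONCLUSION>"
print(repr(strip_stage_tags(target)))  # prints 'Count the apples.\n3'
print(answer_only(target))             # prints 3
```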

Implications and Future Directions

LLaVA-o1 points to a promising direction for multimodal reasoning: a stage-based, structured thinking framework. Its method mitigates reasoning failures common in current VLMs, such as logic errors and hallucinations. It also provides a foundation for further extensions, such as adding external verifiers or adaptive learning algorithms.

For future research, the results of LLaVA-o1's stage-level beam search point toward more sophisticated inference-time scaling approaches. Extending the reasoning framework to varied and dynamic problem contexts could also improve the adaptability and generalizability of such models in real-world applications. As demand grows for more accurate and capable VLMs, the principles and methods introduced by LLaVA-o1 could serve as a foundation for later work on AI-driven reasoning and decision-making.