Video-LLaVA: Unified Visual Representation in Vision-Language Models

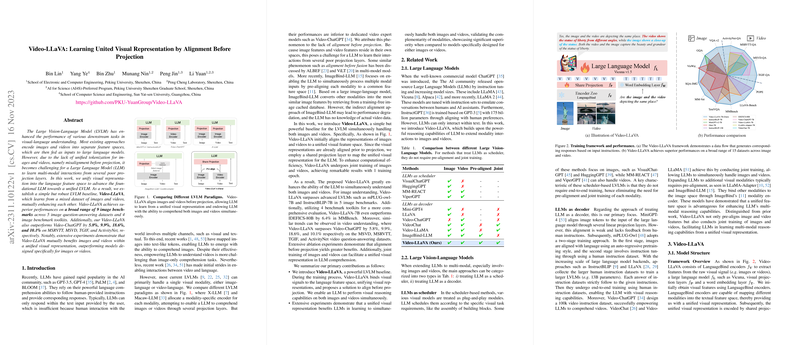

The paper presents Video-LLaVA, a Large Vision-Language Model (LVLM) that aims to improve multi-modal understanding by unifying visual representations into the language feature space. This approach addresses a significant limitation of existing LVLMs: images and videos are encoded separately, so their features are often misaligned before projection, which makes it hard for the LLM to learn multi-modal interactions.

Methodology and Contributions

Video-LLaVA introduces a straightforward yet robust baseline by aligning visual inputs before they are projected. The core innovation lies in using a LanguageBind encoder to pre-align image and video modalities to a unified feature space that corresponds to language inputs. This method facilitates improved learning within the LLM by ensuring a coherent visual input structure, bypassing the necessity for overly complex projection layers.
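The idea of aligning before projecting can be illustrated with a small NumPy sketch. It is not the authors' implementation: the feature sizes, the single linear projection shared by both modalities, and the random arrays standing in for encoder outputs are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
VISUAL_DIM = 32   # width of the pre-aligned visual features
LLM_DIM = 64      # width of the LLM token embeddings
PATCHES = 4       # visual tokens per image or per video frame
FRAMES = 8        # frames sampled from one video

# Assumption: one projection (a single linear layer here) serves BOTH modalities.
W = rng.normal(scale=0.02, size=(VISUAL_DIM, LLM_DIM))
b = np.zeros(LLM_DIM)

def project(visual_tokens):
    """Map visual tokens of shape (n, VISUAL_DIM) to shape (n, LLM_DIM)."""
    return visual_tokens @ W + b

# Random stand-ins for the outputs of pre-aligned image and video encoders.
image_feats = rng.normal(size=(PATCHES, VISUAL_DIM))
video_feats = rng.normal(size=(FRAMES, PATCHES, VISUAL_DIM))

# Both modalities pass through the same projection.
image_tokens = project(image_feats)
video_tokens = project(video_feats.reshape(-1, VISUAL_DIM))

# Stand-in for embedded prompt tokens, concatenated with the visual tokens.
text_tokens = rng.normal(size=(5, LLM_DIM))
llm_input = np.concatenate([image_tokens, video_tokens, text_tokens], axis=0)
print(llm_input.shape)
```

Because the two visual inputs already live in one feature space in this sketch, a single simple projection is enough to place both in the LLM's embedding space.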

Key contributions of Video-LLaVA can be summarized as follows:

- Unified Visual Representation: The model aligns both images and videos in a shared feature space, promoting efficient learning of multi-modal interactions within the LLM.

- Joint Training Paradigm: Unlike previous approaches that treat images and videos separately, Video-LLaVA advocates for a joint training regimen. This allows for mutual reinforcement between images and videos, optimizing the model's capability to understand both modalities simultaneously.

- Performance Benchmarks: The model outperforms competing models, such as Video-ChatGPT, by significant margins across various datasets, demonstrating the efficacy of pre-alignment and unified representation strategies.
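As a rough illustration of the joint training idea, the sketch below builds batches from one shuffled pool of image and video samples. The summary does not describe how batches are composed, so the pooling strategy, the `mixed_batches` helper, and the sample names are hypothetical.

```python
import random

def mixed_batches(image_samples, video_samples, batch_size, seed=0):
    """Yield batches drawn from one shuffled pool of image and video samples."""
    # Tag every sample with its modality so a training step can route it.
    pool = [("image", s) for s in image_samples]
    pool += [("video", s) for s in video_samples]
    rng = random.Random(seed)
    rng.shuffle(pool)
    for start in range(0, len(pool), batch_size):
        yield pool[start:start + batch_size]

# Hypothetical sample identifiers standing in for real training examples.
images = [f"img_{i}" for i in range(6)]
videos = [f"vid_{i}" for i in range(6)]

batches = list(mixed_batches(images, videos, batch_size=4))
for batch in batches:
    print([modality for modality, _ in batch])
```

Drawing from a single pool means the model sees both modalities throughout training rather than in separate phases, which is the property the joint training contribution relies on.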

Experimental Results

Video-LLaVA performs strongly across a range of benchmarks. On nine image benchmarks spanning five image question-answering datasets and multiple toolkits, the model surpasses other prominent LVLMs, including a 6.4% gain over state-of-the-art models on the MMBench toolkit. On video question-answering datasets, it improves over Video-ChatGPT by 5.8% on MSRVTT, 9.9% on MSVD, 18.6% on TGIF, and 10.1% on ActivityNet.

The model is accurate and robust on both image and video understanding tasks, which indicates that it handles diverse multi-modal inputs effectively. The unified representation significantly helps the LLM reduce object hallucination errors and improves its comprehension of abstract concepts and temporal relationships in videos.

Implications and Future Directions

The approach outlined in this paper has practical implications for developing LVLMs capable of handling complex visual-language tasks that require understanding across various media types. The unified representation schema can enhance models' interpretative abilities in real-world scenarios where video and image data are prevalent.

Theoretically, Video-LLaVA's alignment before projection suggests a promising direction for future research in multi-modal AI systems, potentially extending to other modalities such as audio or depth images. It also raises compelling questions about the integration of temporal embeddings to bolster the model's aptitude for time-sensitive data.

Moving forward, applying unified visual representation frameworks in broader contexts, such as augmented and virtual reality, could provide further insights into natural human-machine interaction. Additionally, refining joint training techniques to better exploit cross-modal complementarity may further improve LVLM performance.

Overall, Video-LLaVA stands as an important step toward more integrated and versatile vision-language modeling, offering a foundation for future exploration and development in AI systems capable of unified multi-modal reasoning.