SlimLM: An Efficient Small LLM for On-Device Document Assistance

The rapid advancement of large language models (LLMs) in recent years has diverged into two distinct pathways: large models aimed at feats akin to artificial general intelligence, and smaller, more resource-efficient models intended for deployment on mobile and edge devices. While large models have attracted most of the attention due to their impressive capabilities, the real-world utility and performance of small language models (SLMs) on mobile devices have not been extensively investigated. To address this gap, the paper "SlimLM: An Efficient Small LLM for On-Device Document Assistance" presents an empirical study of SLMs and develops a family of models specifically optimized for document assistance tasks on high-end smartphones.

Methodology and Contributions

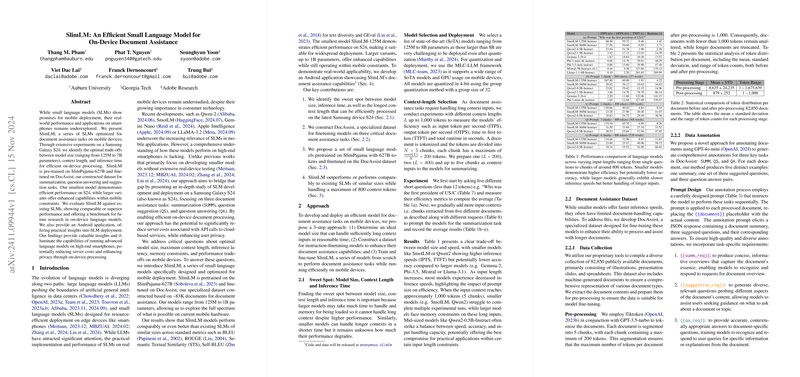

The work introduces SlimLM, a series of small language models tailored for mobile applications, particularly document assistance tasks such as summarization, question suggestion, and question answering. Central to the approach is identifying the optimal trade-offs between model size, context length, and inference time for efficient on-device processing. The authors establish these trade-offs through comprehensive experiments on a Samsung Galaxy S24, with the aim of providing insights that transfer to comparable mobile hardware.
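A back-of-envelope memory estimate helps make the size/context trade-off concrete: weight memory scales with parameter count and numeric precision, while the attention key/value (KV) cache grows linearly with context length. The sketch below assumes a generic decoder-only transformer. `ModelConfig` and the layer and head counts are hypothetical placeholders, not SlimLM's published architecture. The estimate also ignores activations, runtime overhead, and latency, which the paper measures directly on the device.

```python
from dataclasses import dataclass


@dataclass
class ModelConfig:
    """Hypothetical decoder-only transformer config (NOT SlimLM's actual architecture)."""
    n_params: int    # total parameter count
    n_layers: int    # number of transformer blocks
    n_kv_heads: int  # key/value heads (fewer than query heads under grouped-query attention)
    head_dim: int    # dimension of each attention head


def weight_bytes(cfg: ModelConfig, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights (e.g. 2.0 for fp16, 0.5 for 4-bit)."""
    return cfg.n_params * bytes_per_param


def kv_cache_bytes(cfg: ModelConfig, context_len: int, bytes_per_value: float = 2.0) -> float:
    """Keys and values (the leading 2) cached for every layer, KV head, and context token."""
    return 2 * cfg.n_layers * cfg.n_kv_heads * cfg.head_dim * context_len * bytes_per_value


def gib(n_bytes: float) -> float:
    """Convert bytes to GiB."""
    return n_bytes / 2**30


if __name__ == "__main__":
    # Placeholder configs chosen only to illustrate the scaling behaviour.
    small = ModelConfig(n_params=125_000_000, n_layers=12, n_kv_heads=12, head_dim=64)
    large = ModelConfig(n_params=1_000_000_000, n_layers=24, n_kv_heads=16, head_dim=64)
    for name, cfg in [("125M", small), ("1B", large)]:
        for ctx in (512, 800, 2048):
            total = weight_bytes(cfg, 2.0) + kv_cache_bytes(cfg, ctx)
            print(f"{name} fp16, ctx={ctx}: {gib(total):.2f} GiB")
```

At these sizes the weights dominate, which is why parameter count and quantization matter most for fitting a model on the phone; context length mainly shows up as the KV cache grows and as prompt-processing time increases.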

The models are fine-tuned on DocAssist, a new dataset constructed specifically for this research by annotating 83K documents for the target tasks. The SlimLM models, ranging from 125 million to 1 billion parameters, are each pre-trained on SlimPajama, a 627-billion-token corpus, and then fine-tuned on DocAssist.
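To illustrate how one annotated document can supply fine-tuning examples for several tasks at once, here is a minimal sketch. The record layout (`AnnotatedDoc`), the prompt templates, and the 600-word default budget are all hypothetical and are not DocAssist's actual schema or format. Word-level truncation is also a crude stand-in for real tokenizer-based truncation.

```python
from dataclasses import dataclass, field


@dataclass
class AnnotatedDoc:
    """Hypothetical annotation record; field names are illustrative, not DocAssist's schema."""
    text: str
    summary: str
    suggested_questions: list[str] = field(default_factory=list)
    qa_pairs: list[tuple[str, str]] = field(default_factory=list)


def truncate(text: str, max_words: int) -> str:
    """Crude stand-in for tokenizer-based truncation: keep the first max_words words."""
    return " ".join(text.split()[:max_words])


def to_examples(doc: AnnotatedDoc, max_words: int = 600) -> list[dict[str, str]]:
    """Turn one annotated document into prompt/target pairs for three tasks."""
    context = truncate(doc.text, max_words)
    examples = [{
        "task": "summarization",
        "prompt": f"Document:\n{context}\n\nSummarize the document.",
        "target": doc.summary,
    }]
    if doc.suggested_questions:
        examples.append({
            "task": "question_suggestion",
            "prompt": f"Document:\n{context}\n\nSuggest questions a reader might ask.",
            "target": "\n".join(doc.suggested_questions),
        })
    # One QA example per annotated question/answer pair.
    for question, answer in doc.qa_pairs:
        examples.append({
            "task": "question_answering",
            "prompt": f"Document:\n{context}\n\nQuestion: {question}",
            "target": answer,
        })
    return examples
```

Sharing one truncated context across all tasks keeps every prompt within the same length budget, which matters when the deployed model can only process a limited number of context tokens efficiently.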

Benchmark and Performance

Benchmarked against existing SLMs such as SmolLM and Qwen, SlimLM shows comparable or superior performance on standard evaluation metrics, including BLEU, ROUGE, and semantic textual similarity (STS). Notably, SlimLM performs well with contexts of up to 800 tokens, a length that remains computationally viable for on-device inference. The smallest SlimLM variant performs robustly enough for practical deployment on the S24, while the larger variants offer enhanced capabilities that still fit within the constraints of mobile hardware.
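As a rough illustration of what one of these metrics measures, the sketch below computes a ROUGE-L F1 score from the longest common subsequence (LCS) of candidate and reference tokens. It covers only ROUGE-L, not BLEU or STS. It uses simplified lowercase whitespace tokenization with no stemming, so its scores will not exactly match official scorers or the numbers reported in the paper.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence, via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            if x == y:
                curr[j] = prev[j - 1] + 1            # extend the match
            else:
                curr[j] = max(prev[j], curr[j - 1])  # skip a token from a or b
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F1: harmonic mean of LCS-based precision and recall."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(rouge_l_f1("the cat sat on the mat", "the cat is on the mat"))
```

Because the LCS rewards in-order overlap without requiring contiguous matches, ROUGE-L is a common choice for summarization outputs, where a model may paraphrase but should preserve the order of key content.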

Practical Implications and Outlook

One salient implication of this research is that on-device LLM processing could substantially reduce the server costs associated with cloud-based API calls, while also improving user privacy by keeping document processing on the device rather than in the cloud. This could be particularly valuable for commercial applications in mobile ecosystems, where SlimLM could be integrated to provide secure and capable document assistance features.

Speculating on the Future

Looking forward, SlimLM sets a benchmark for future research on on-device LLMs and encourages further work on optimizing model architectures for efficient mobile deployment. As edge computing gains ground, embedding capable LLMs on devices could transform mobile applications, enabling more responsive interactions without network dependency and potentially accelerating the wider adoption of AI-driven features in consumer technology.

In summary, this paper contributes significantly to bridging the deployment gap in SLM research on mobile platforms. While the findings do not establish SlimLM as overwhelmingly superior, they furnish critical insights into balancing model efficiency with practical deployment concerns, serving as a springboard for further innovations in the field of on-device language processing.