- The paper introduces SmartInv, a multimodal framework that infers invariants to detect both functional and implementation bugs in smart contracts.

- It employs a novel Tier of Thought prompting strategy that decomposes invariant inference into stages of context identification, candidate generation, and vulnerability ranking.

- Evaluations on 89,621 contracts demonstrate a 4× bug detection improvement and a 150× speedup over traditional analysis tools.

SmartInv: Multimodal Learning for Smart Contract Invariant Inference

Introduction and Motivation

Smart contracts, as immutable programs deployed on blockchains, are highly susceptible to vulnerabilities that can result in significant financial losses. While traditional static and dynamic analysis tools have made progress in detecting implementation bugs (e.g., integer overflows, reentrancy), they are fundamentally limited in their ability to identify "machine un-auditable" functional bugs. These functional bugs arise from complex, domain-specific transactional contexts and require reasoning across multiple modalities, such as source code and natural language documentation. The SmartInv framework addresses this gap by leveraging foundation models and a novel prompting strategy to infer invariants that capture the intended behavior of smart contracts, enabling the detection of both implementation and functional bugs at scale.

The core challenge addressed by SmartInv is the automated inference of invariants that specify the expected behavior of smart contracts, using information from both code and natural language. Formally, given a pre-trained foundation model M_θ and a tokenized smart contract S, the goal is to generate pairs (c_i, v_i), where c_i is a critical program point and v_i is an associated invariant. These invariants are then used to detect vulnerabilities by checking for their violation during symbolic or bounded model checking.

SmartInv introduces three primary types of invariants:

- Assertions: Boolean expressions over program variables, possibly involving special constructs such as `Old(expr)` (pre-state value) and `SumMapping(mappingVar)` (aggregate over mappings).

- Modifiers: Function-level invariants, e.g., `onlyOwner`, specifying access control or other global properties.

- Global Invariants: Properties that span multiple functions or contracts, enabling reasoning about cross-contract or cross-function behaviors.
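To make the (c_i, v_i) pairing concrete, here is a minimal Python sketch of how the three invariant types might be represented and checked against a pre-state and a post-state. Everything in it is an assumption made for illustration: the `Invariant` class, the `sum_mapping` helper, the program-point labels, and the toy token state are hypothetical and are not SmartInv's internal data model. `Old(expr)` is modeled simply by reading from the pre-state.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Callable, Dict, List

State = Dict[str, Any]  # hypothetical snapshot of contract storage plus call context


class InvariantKind(Enum):
    ASSERTION = "assertion"
    MODIFIER = "modifier"
    GLOBAL = "global"


def sum_mapping(mapping: Dict[str, int]) -> int:
    """Stand-in for SumMapping(mappingVar): sum over all values of a mapping."""
    return sum(mapping.values())


@dataclass(frozen=True)
class Invariant:
    program_point: str                      # c_i: where the invariant is placed
    kind: InvariantKind
    text: str                               # v_i: the invariant as it might read in Solidity
    holds: Callable[[State, State], bool]   # predicate over (pre-state, post-state)


# Illustrative invariants for a toy token contract (assumed, not from the paper).
INVARIANTS: List[Invariant] = [
    Invariant(
        "transfer:exit", InvariantKind.ASSERTION,
        "SumMapping(balances) == Old(SumMapping(balances))",
        lambda old, new: sum_mapping(new["balances"]) == sum_mapping(old["balances"]),
    ),
    Invariant(
        "mint:entry", InvariantKind.MODIFIER,
        "onlyOwner",
        lambda old, new: new["sender"] == new["owner"],
    ),
    Invariant(
        "contract", InvariantKind.GLOBAL,
        "SumMapping(balances) == totalSupply",
        lambda old, new: sum_mapping(new["balances"]) == new["totalSupply"],
    ),
]


def violated(invariants: List[Invariant], old: State, new: State) -> List[str]:
    """Return the text of every invariant that fails on this state transition."""
    return [inv.text for inv in invariants if not inv.holds(old, new)]


pre = {"balances": {"alice": 10, "bob": 5}, "totalSupply": 15,
       "owner": "alice", "sender": "alice"}
# A correct transfer of 3 tokens from alice to bob.
good_post = {**pre, "balances": {"alice": 7, "bob": 8}}
# A buggy transfer that credits bob without debiting alice.
buggy_post = {**pre, "balances": {"alice": 10, "bob": 8}}
```

Calling `violated(INVARIANTS, pre, buggy_post)` reports the assertion and the global invariant, while the correct transfer violates nothing. In the actual system the check is done by a verifier rather than by evaluating lambdas on concrete states.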

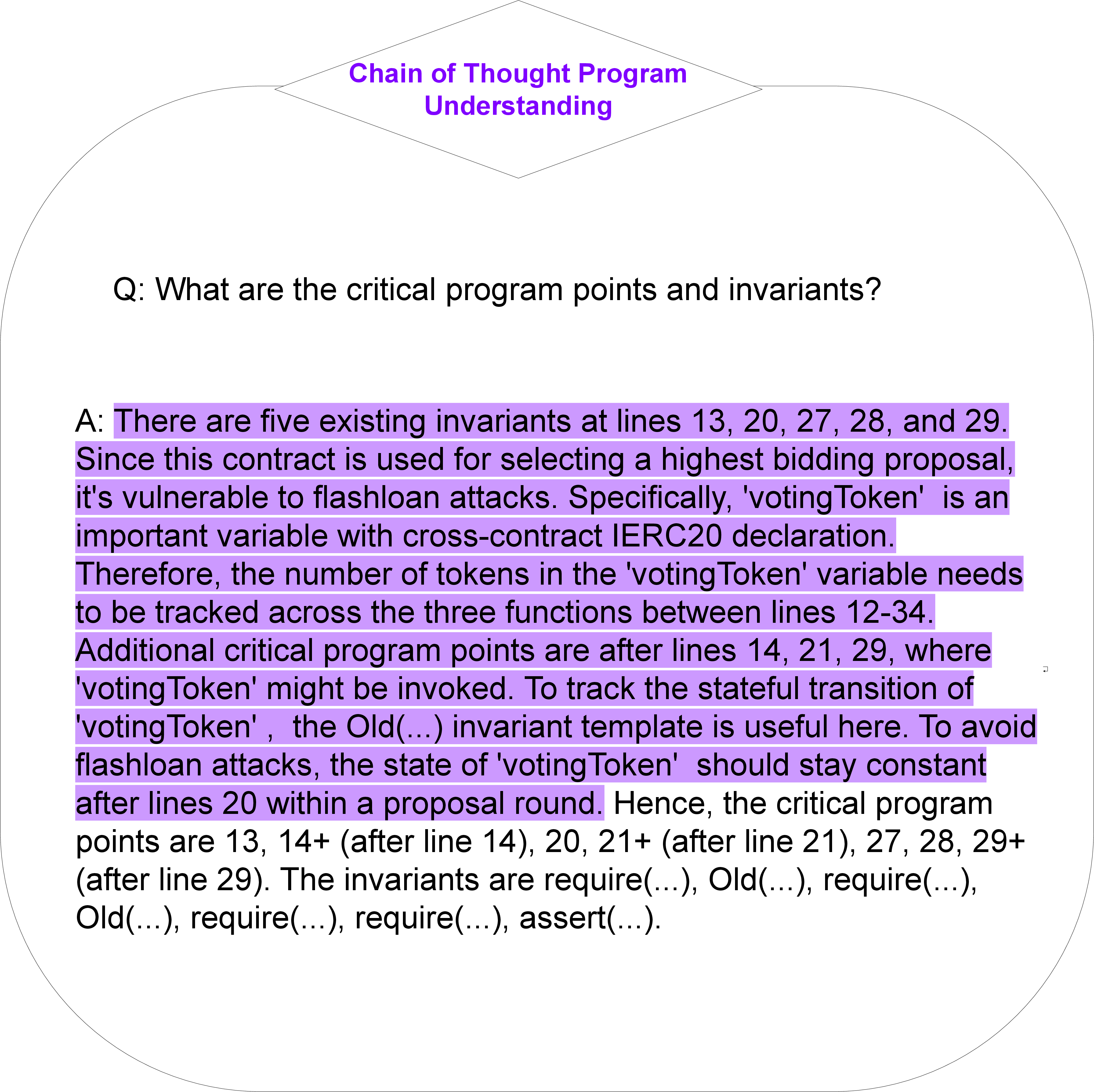

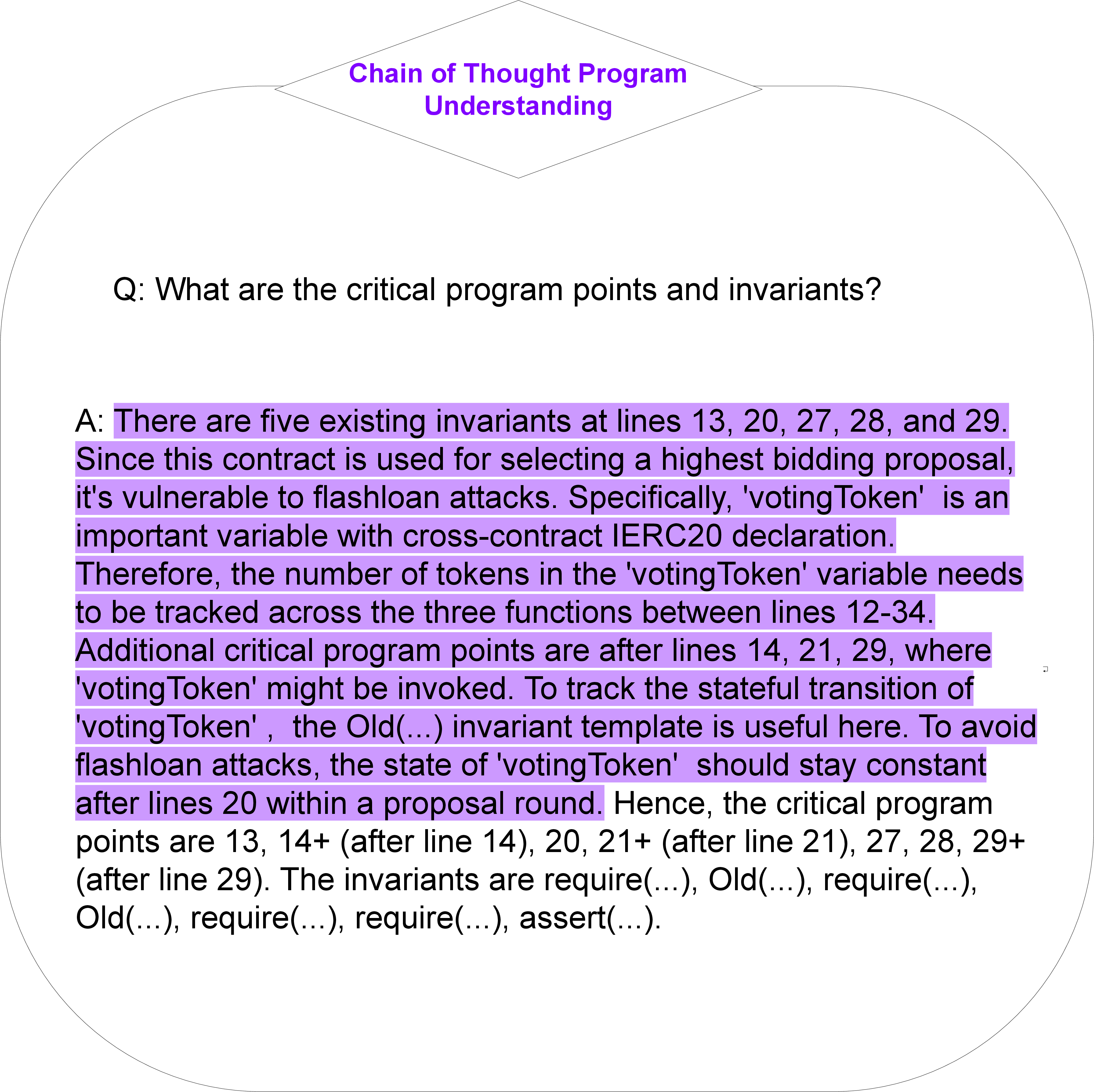

Tier of Thought (ToT): Multimodal Prompting and Reasoning

A key innovation in SmartInv is the Tier of Thought (ToT) prompting strategy, which structures the invariant inference process into a sequence of increasingly complex reasoning steps. This approach is inspired by the observation that human auditors reason about smart contracts in stages: identifying transactional context, locating critical program points, generating candidate invariants, ranking them by bug-preventive potential, and finally mapping them to specific vulnerabilities.

The ToT process is implemented as a multi-tiered prompt sequence:

- Tier 1: Identify transactional context and critical program points using both code and natural language cues.

- Tier 2: Generate candidate invariants at the identified program points, leveraging multimodal information.

- Tier 3: Rank the invariants by their likelihood of preventing bugs and predict the types of vulnerabilities present.

This staged reasoning is critical for effective multimodal learning, as it allows the model to decompose complex tasks and utilize both code structure and natural language documentation.

Figure 1: Tier of Thought prompting enables stepwise reasoning from code and comments to invariants and vulnerabilities.

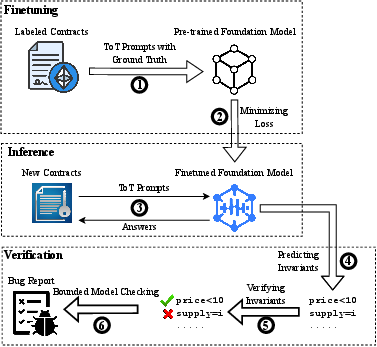

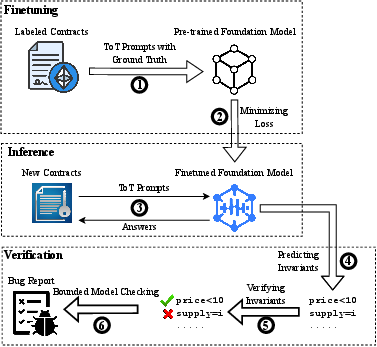
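The staged structure of the three tiers can be sketched as a small prompt pipeline in which each tier's answer is appended to the context of the next. This is only a sketch under stated assumptions: the prompt wording in `TIER_PROMPTS`, the `tier_of_thought` function, and the `Model` callable type are hypothetical, and the recording model below is a stub standing in for a finetuned foundation model.

```python
from typing import Callable, List, Tuple

Model = Callable[[str], str]  # hypothetical interface: prompt in, completion out

# Paraphrased tier instructions; the real prompts are not given in this summary.
TIER_PROMPTS = [
    "Tier 1: Identify the transactional context and critical program points.",
    "Tier 2: Generate candidate invariants at the identified program points.",
    "Tier 3: Rank the invariants by bug-preventive likelihood and predict vulnerability types.",
]


def tier_of_thought(model: Model, contract_source: str) -> List[str]:
    """Run the tiers in order, feeding each tier's answer into the next prompt."""
    transcript = f"Contract (code and comments):\n{contract_source}"
    answers: List[str] = []
    for instruction in TIER_PROMPTS:
        prompt = f"{transcript}\n\n{instruction}"
        answer = model(prompt)
        answers.append(answer)
        # Later tiers see the full history of earlier prompts and answers.
        transcript = f"{prompt}\n{answer}"
    return answers


def make_recording_model() -> Tuple[Model, List[str]]:
    """Stub model that records every prompt it receives and returns a numbered tag."""
    seen: List[str] = []

    def model(prompt: str) -> str:
        seen.append(prompt)
        return f"<answer {len(seen)}>"

    return model, seen
```

With the stub, `tier_of_thought(model, source)` makes exactly three calls, and the prompt for Tier 3 contains the answers from Tiers 1 and 2, which is the property the staged decomposition relies on.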

SmartInv Workflow and System Architecture

The SmartInv workflow consists of the following stages:

- Finetuning: The foundation model is finetuned on a curated dataset of annotated smart contracts, with ToT prompts and ground truth labels for transactional context, critical program points, invariants, and vulnerabilities.

- Inference: For a new contract, the finetuned model is prompted using the ToT strategy to generate ranked invariants.

- Verification: The generated invariants are verified using a combination of inductive proof (e.g., via Boogie) and bounded model checking. Invariants that cannot be proven or falsified are flagged for manual inspection.

- Reporting: Verified invariants and detected vulnerabilities are reported, enabling both automated and human-in-the-loop auditing.

Figure 2: SmartInv's Workflow, integrating multimodal finetuning, staged inference, and verification.
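The verification stage's three possible outcomes (proven, falsified, or flagged for manual inspection) can be illustrated with a toy triage routine. This is a sketch under simplifying assumptions, not the Boogie or VeriSol backend: the inductive check enumerates a small finite universe of states instead of discharging a proof obligation, and the transition system (a counter that increases by 2 up to 10) and all function names are hypothetical.

```python
from enum import Enum
from typing import Callable, Iterable, List, Optional

State = int
Step = Callable[[State], List[State]]
Predicate = Callable[[State], bool]


class Verdict(Enum):
    PROVEN = "proven"
    FALSIFIED = "falsified"
    NEEDS_MANUAL_REVIEW = "needs manual review"


def is_inductive(init: State, step: Step, inv: Predicate,
                 universe: Iterable[State]) -> bool:
    """Toy inductive check: inv holds initially and is preserved by every step
    taken from any state (reachable or not) that satisfies inv."""
    return inv(init) and all(inv(t) for s in universe if inv(s) for t in step(s))


def bounded_check(init: State, step: Step, inv: Predicate,
                  depth: int) -> Optional[State]:
    """Explore states reachable within `depth` steps; return a violating state or None."""
    frontier, seen = {init}, {init}
    for _ in range(depth + 1):
        for s in frontier:
            if not inv(s):
                return s
        frontier = {t for s in frontier for t in step(s)} - seen
        seen |= frontier
    return None


def triage(init: State, step: Step, inv: Predicate,
           universe: Iterable[State], depth: int) -> Verdict:
    """Try the inductive proof first, then bounded checking, else defer to a human."""
    if is_inductive(init, step, inv, universe):
        return Verdict.PROVEN
    if bounded_check(init, step, inv, depth) is not None:
        return Verdict.FALSIFIED
    return Verdict.NEEDS_MANUAL_REVIEW


def step(s: State) -> List[State]:
    """Toy transition system: a counter that increases by 2 while staying <= 10."""
    return [s + 2] if s + 2 <= 10 else []


UNIVERSE = range(0, 11)
```

On this toy system, `s % 2 == 0` is inductive and is proven, `s != 6` is falsified by the reachable state 6, and `s != 7` is true on every reachable state but not inductive (the unreachable state 5 steps to 7), so with no counterexample within the bound it ends up flagged for manual inspection.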

Implementation and Evaluation

SmartInv is implemented in Python, with the verification backend built atop VeriSol. The system is evaluated on a large-scale dataset of 89,621 real-world Solidity contracts, with additional curated datasets for training and validation. The evaluation covers four key research questions:

- RQ1: Bug Detection Effectiveness

SmartInv detects 4× more critical bugs and generates 3.5× more bug-critical invariants than state-of-the-art tools, with a 10.39% false positive rate, which is substantially lower than that of other prompting-based or symbolic tools. Notably, SmartInv uncovers 119 zero-day vulnerabilities, five of which are confirmed as high severity.

- RQ2: Invariant Generation Quality

SmartInv produces fewer but more semantically meaningful invariants per contract than dynamic invariant detectors, and fewer false positives (0.32 per contract vs. 5.41 for InvCon).

- RQ3: Ablation and Model Selection

The LLaMA-7B model, finetuned with ToT and multimodal data, achieves the highest accuracy (0.89) and F1 score (0.82). Removing natural language cues or ToT prompting significantly degrades performance, confirming the necessity of both modalities and staged reasoning.

- RQ4: Runtime Performance

SmartInv achieves a 150× speedup over symbolic and static analysis tools, with average per-contract analysis times of 28.6 seconds, enabling practical large-scale deployment.

Case Studies: Zero-Day Vulnerabilities

SmartInv's practical impact is demonstrated through the discovery of real-world zero-day bugs:

- Cross-Bridge Default Value Bug: SmartInv infers an invariant `assert(_msgHash != 0)` to prevent acceptance of default-initialized message hashes, which could otherwise allow unauthorized cross-chain operations.

- Gas Inefficiency in Deposit Queues: By generating `require(depositQueue.size() == 1)`, SmartInv identifies a denial-of-service vector where excessive queue length can lock user funds due to gas exhaustion.

These cases illustrate SmartInv's ability to reason about subtle, context-dependent vulnerabilities that elude pattern-based or purely code-centric tools.
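The default-value bug class can be illustrated with a small Python model. This is a hypothetical toy and not the audited contract: the `ToyBridge` class, its `confirmed_at` mapping, and the assumed initialization mistake are inventions for illustration. It relies only on the fact that a Solidity mapping reads as zero for keys that were never written, and it models the inferred `assert(_msgHash != 0)` as a guard that rejects the call.

```python
from typing import Dict, List

ZERO_HASH = 0  # the default value of an uninitialized hash


class ToyBridge:
    """Hypothetical bridge model; only the shape of the bug is meant to be realistic."""

    def __init__(self, enforce_invariant: bool) -> None:
        self.enforce_invariant = enforce_invariant
        # Models a mapping(bytes32 => uint256): missing keys read as 0.
        self.confirmed_at: Dict[int, int] = {}
        self.processed: List[int] = []
        # Assumed initialization mistake: the default-valued hash is marked confirmed.
        self.confirmed_at[ZERO_HASH] = 1

    def confirm(self, msg_hash: int) -> None:
        """Legitimate path: a message hash is confirmed by the bridge."""
        self.confirmed_at[msg_hash] = 1

    def process(self, msg_hash: int) -> bool:
        """Return True if the message is accepted and executed."""
        if self.enforce_invariant and msg_hash == ZERO_HASH:
            return False  # mirrors assert(_msgHash != 0); Solidity would revert here
        if self.confirmed_at.get(msg_hash, 0) == 0:  # Solidity-style default read
            return False
        self.processed.append(msg_hash)
        return True


unguarded = ToyBridge(enforce_invariant=False)
guarded = ToyBridge(enforce_invariant=True)
```

Without the guard, `unguarded.process(ZERO_HASH)` is accepted even though nobody confirmed that message. With the guard, the zero hash is rejected while properly confirmed messages still go through.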

Limitations and Future Directions

While SmartInv demonstrates strong empirical performance, several limitations remain:

- Token Length Constraints: Even with finetuning, foundation models are limited in the size of contracts they can process. Summarization of imported modules is used as a workaround, but further architectural advances are needed for very large contracts.

- Hallucination and Verification: Foundation models are prone to hallucination; thus, the verification phase is essential to filter out spurious invariants. However, some invariants may remain unverifiable due to limitations in current formal verification tools.

- Transaction History: Incorporating transaction history as an additional modality can further improve precision, especially for deployed contracts, but is not always available pre-deployment.

Future work may explore more scalable model architectures, improved integration of dynamic execution traces, and broader support for cross-chain and cross-language contracts.

Implications and Theoretical Significance

SmartInv demonstrates that multimodal learning, when combined with staged prompting and formal verification, can substantially advance the state of the art in smart contract analysis. The approach generalizes beyond hand-crafted patterns, enabling detection of previously unrecognized classes of functional bugs. The ToT strategy provides a template for structured reasoning in other domains where code and natural language interact, such as API misuse detection or regulatory compliance.

The strong numerical results, a 4× improvement in bug detection and a 150× speedup in runtime, suggest that foundation models, when properly finetuned and guided, can serve as effective assistants for program analysis tasks that require deep semantic understanding.

Conclusion

SmartInv represents a significant advance in automated smart contract analysis, uniting multimodal foundation models, staged reasoning via Tier of Thought prompting, and formal verification. The system achieves superior performance in both invariant inference and bug detection, particularly for functional bugs that have historically eluded automated tools. The methodology and empirical results have broad implications for the application of LLMs in program analysis, formal methods, and software security.