A Survey of Small Language Models

This essay provides an overview of the paper "A Survey of Small Language Models," discussing the growing relevance of Small Language Models (SLMs), which perform language tasks efficiently with modest computational resources. Because large language models (LLMs) such as GPT-3 and LLaMA demand substantial compute, research focus has shifted toward optimizing SLMs for on-device and resource-constrained environments.

Key Contributions

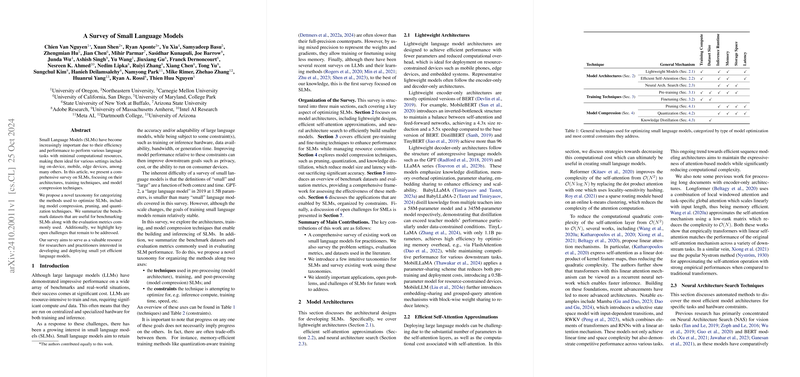

The paper presents a comprehensive survey focusing on three main aspects of SLM development: architectures, training techniques, and model compression methods. Moreover, it proposes a novel taxonomy for categorizing optimization methods for SLMs, providing a structured approach to understanding advances in the field.

Model Architectures

The survey discusses architectural strategies for building SLMs, emphasizing lightweight designs, efficient self-attention mechanisms, and neural architecture search. In particular, techniques such as low-rank factorization and neural architecture pruning are highlighted for reducing computational overhead while largely maintaining performance. The paper also notes the role of multi-modal models that build on these lightweight architectures, exemplified by recent works such as Gemma and Chameleon.
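As a rough illustration of why low-rank factorization reduces overhead, the sketch below counts parameters when a dense m x n weight matrix is replaced by two factors of rank r. The function names and the layer sizes are hypothetical, chosen for illustration rather than taken from the survey.

```python
def dense_params(m: int, n: int) -> int:
    """Parameters in a dense m x n weight matrix W."""
    return m * n


def low_rank_params(m: int, n: int, r: int) -> int:
    """Parameters when W is approximated by A (m x r) times B (r x n)."""
    return m * r + r * n


def compression_ratio(m: int, n: int, r: int) -> float:
    """How many times fewer parameters the factorized layer stores."""
    return dense_params(m, n) / low_rank_params(m, n, r)


if __name__ == "__main__":
    # Hypothetical 4096 x 4096 projection factorized at rank 64.
    m, n, r = 4096, 4096, 64
    print(dense_params(m, n))          # 16777216
    print(low_rank_params(m, n, r))    # 524288
    print(compression_ratio(m, n, r))  # 32.0
```

The factorized form only saves parameters when r is smaller than m * n / (m + n), and whether accuracy holds at a given rank depends on the model and task.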

Training Techniques

Training efficiency is crucial for SLMs, and the paper reviews efficient pre-training and fine-tuning strategies. Mixed precision training emerges as a vital method for handling resource constraints, with recent advancements in hardware support for FP8 precision significantly enhancing computational efficiency. The survey also emphasizes Parameter-Efficient Fine-Tuning (PEFT) and data augmentation techniques as effective methods to adapt SLMs to specific tasks while maintaining efficiency.
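One practical issue in mixed precision training is that small gradients can underflow to zero in a 16-bit format. The sketch below illustrates this with FP16 (not FP8) using only the standard library, together with loss scaling, a common companion technique that is an assumption here and is not described in this essay. The helper names and the scale of 1024 are hypothetical.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def fp16_grad_with_scaling(grad: float, loss_scale: float) -> float:
    """Store a scaled gradient in FP16, then unscale it in full precision."""
    scaled = to_fp16(grad * loss_scale)  # value an FP16 backward pass would hold
    return scaled / loss_scale           # unscale before the optimizer step


if __name__ == "__main__":
    tiny_grad = 1e-8  # hypothetical gradient below FP16's smallest subnormal
    print(to_fp16(tiny_grad))                       # 0.0, the gradient underflows
    print(fp16_grad_with_scaling(tiny_grad, 1024))  # close to 1e-8
```

Real frameworks typically keep a full-precision master copy of the weights and adjust the scale dynamically; this sketch shows only the numerical effect.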

Model Compression

Model compression is a key strategy in deriving SLMs from LLMs. The survey categorizes compression methods into pruning, quantization, and knowledge distillation. Weight pruning, both structured and unstructured, is highlighted for its potential to reduce both storage and computational requirements without substantial performance loss. The paper also details quantization techniques like SmoothQuant, which address challenges in activation quantization, and knowledge distillation strategies that effectively transfer capabilities from larger models.
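Two of these ideas can be sketched in a few lines of plain Python: unstructured magnitude pruning and symmetric per-tensor int8 quantization. This is a minimal illustration under simplifying assumptions, not SmoothQuant or any specific method from the survey; the function names and the sample weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (unstructured pruning)."""
    k = int(len(weights) * sparsity)  # number of weights to drop
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate floating-point weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.5, -0.02, 0.3, 0.01, -1.27, 0.08]  # hypothetical weights
    pruned = magnitude_prune(w, sparsity=0.5)
    q, scale = quantize_int8(pruned)
    print(pruned)  # [0.5, 0.0, 0.3, 0.0, -1.27, 0.0]
    print(q)       # [50, 0, 30, 0, -127, 0]
    print(dequantize(q, scale))
```

Unstructured sparsity like this reduces storage only with a sparse format and speeds up inference only with hardware or kernels that exploit it, which is one reason structured pruning is treated separately.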

Evaluation and Applications

The paper outlines the datasets and metrics used to evaluate SLMs, structured around constraints such as inference runtime, memory, and energy efficiency. Additionally, it identifies real-world applications of SLMs, from real-time interaction to edge computing, illustrating their practical relevance in various contexts.
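A minimal sketch of how two of these constraints, runtime and memory, might be measured with the Python standard library is shown below. The `measure` helper and the `fake_generate` stand-in are hypothetical; a real evaluation would call an actual model, and energy measurement needs hardware-specific counters that are not covered here.

```python
import statistics
import time
import tracemalloc


def measure(fn, *args, repeats: int = 5):
    """Return (median latency in seconds, peak Python-heap bytes) for fn(*args)."""
    latencies = []
    peak_bytes = 0
    for _ in range(repeats):
        tracemalloc.start()
        start = time.perf_counter()
        fn(*args)
        latencies.append(time.perf_counter() - start)
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        peak_bytes = max(peak_bytes, peak)
    return statistics.median(latencies), peak_bytes


def fake_generate(num_tokens: int):
    """Hypothetical stand-in for a model's generate call."""
    return [i * i for i in range(num_tokens)]


if __name__ == "__main__":
    latency, peak = measure(fake_generate, 10_000)
    print(f"median latency: {latency * 1e3:.3f} ms, peak memory: {peak} bytes")
```

Note that `tracemalloc` tracks only allocations made through Python's allocator and adds timing overhead, so latency and memory are better measured in separate runs when precision matters.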

Open Problems and Future Directions

The paper underscores open challenges, such as addressing hallucinations and biases in language models and improving energy efficiency during inference. Privacy concerns are also highlighted, given the sensitive nature of data handled by SLMs. Addressing these issues presents significant opportunities for future research, particularly in improving deployment on consumer devices while maintaining robust performance.

Conclusion

Overall, the paper serves as a valuable resource for researchers, offering a structured overview of the current landscape of SLMs and identifying areas for future exploration. The methodologies discussed support the broader goal of achieving efficient, scalable language models applicable across diverse technological environments.