Overview of Model Compression for LLMs

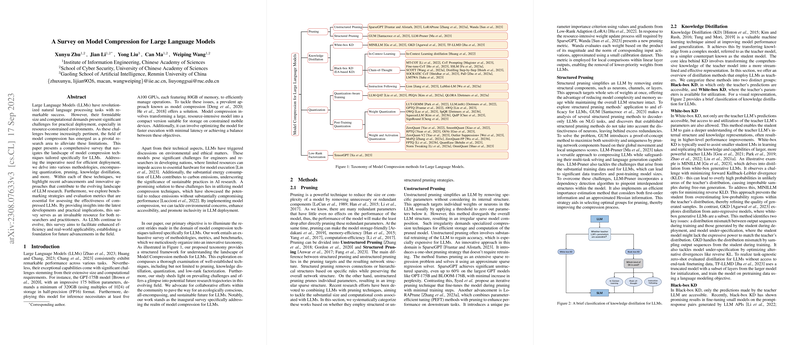

This paper surveys methods devised specifically for compressing LLMs. It examines quantization, pruning, knowledge distillation, and low-rank factorization, all of which aim to reduce the heavy computational and storage requirements of LLMs. The survey also introduces a taxonomy that organizes these methods and highlights recent successes and emerging approaches in LLM compression.

Model Compression Techniques Discussed in the Paper

- Pruning: The paper covers both unstructured and structured pruning. Unstructured pruning removes individual weights and can substantially reduce model size with little loss in performance, as demonstrated by methods such as SparseGPT and Wanda. Structured pruning removes entire structural components, such as neurons or channels; it shows promise, but the paper notes that more work is needed to adapt these methods to the characteristics of LLMs.

- Knowledge Distillation (KD): The paper classifies KD into white-box and black-box categories. White-box approaches make use of the teacher model's parameters, while black-box approaches rely only on the teacher's outputs and are discussed in connection with transferring emergent abilities such as in-context learning and chain-of-thought reasoning.

- Quantization: The paper divides this technique into Quantization-Aware Training (QAT) and Post-Training Quantization (PTQ). Both reduce the numerical precision of model weights and activations. The paper discusses them at length, citing significant progress in 8-bit and lower-bit quantization in methods such as QLoRA and GPTQ.

- Low-Rank Factorization: Although less widely used, low-rank factorization is examined as a way to compress models without a considerable drop in performance. TensorGPT exemplifies this approach, with promising results on embedding layers.
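
The four technique families above can be illustrated with a small, dependency-free Python sketch. It is not taken from the paper and does not reproduce SparseGPT, Wanda, GPTQ, QLoRA, or TensorGPT. It assumes the simplest textbook variant of each idea: plain magnitude pruning, symmetric round-to-nearest 8-bit quantization, a temperature-softened KL distillation loss computed from teacher logits, and a parameter count for a rank-r factorization. All function names are hypothetical.

```python
import math


def magnitude_prune(weights, sparsity):
    """Unstructured pruning: zero out the smallest-magnitude weights.

    `weights` is a list of rows; `sparsity` is the fraction to remove.
    Ties at the threshold are all pruned, so slightly more than the
    requested fraction may be zeroed when magnitudes repeat.
    """
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(len(flat) * sparsity)  # number of weights to remove
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]  # largest magnitude that still gets pruned
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def quantize_int8(values):
    """Symmetric post-training quantization to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one scale per tensor
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def low_rank_param_count(rows, cols, rank):
    """Parameters of a dense (rows x cols) matrix versus its factorization
    into (rows x rank) and (rank x cols) matrices."""
    return rows * cols, rank * (rows + cols)
```

As a usage example under these assumptions, `low_rank_param_count(4096, 4096, 64)` compares 16,777,216 dense parameters with 524,288 factorized ones, and the reconstruction error of `dequantize(*quantize_int8(values))` stays within half a quantization step per value. The methods named in the paper are considerably more sophisticated than these baselines.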

Evaluation and Benchmarking

The effectiveness of a compressed LLM is assessed with metrics such as model size, number of parameters, inference time, and floating-point operations (FLOPs). Benchmarking relies on widely used NLP datasets such as GLUE and LAMBADA, along with strategy-specific datasets such as BIG-Bench and unseen-instruction datasets, which allow performance to be compared against the uncompressed model.
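
The size and latency metrics reduce to simple arithmetic, sketched below in Python. The helper names are hypothetical, and the 7-billion-parameter model and latency figures in the usage note are illustrative assumptions rather than results from the paper. The size estimate also ignores quantization metadata such as scales and zero points.

```python
def model_size_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def compression_ratio(original_size, compressed_size):
    """How many times smaller the compressed model is."""
    return original_size / compressed_size


def speedup(baseline_latency, compressed_latency):
    """Inference speedup of the compressed model over the baseline."""
    return baseline_latency / compressed_latency
```

For a hypothetical 7-billion-parameter model, `model_size_gb(7_000_000_000, 16)` gives 14.0 GB at 16-bit precision and `model_size_gb(7_000_000_000, 4)` gives 3.5 GB at 4-bit precision, a compression ratio of 4.0. Such ratios only become meaningful when reported alongside the accuracy change on the benchmarks listed above.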

Impact and Implications

Model compression promises to make LLMs more accessible and practical to deploy in resource-constrained environments. The survey points researchers to open problems and future opportunities, emphasizing the need for better performance-size trade-offs and more dynamically adaptive model architectures, potentially guided by methods such as Neural Architecture Search (NAS).

The survey also notes an emerging need for explainable model compression, which is important for understanding and validating how compression affects an LLM's performance and for ensuring reliability in practical applications.

Concluding Remarks

The document is a useful reference for researchers and practitioners working on LLM compression. By charting current methods and pointing to future research directions, it lays a foundation for improving LLM efficiency while preserving natural language processing capabilities. The survey encourages continued work on the inherent challenges of LLMs, in support of eco-friendly and inclusive AI development and deployment.