Analyzing Imperfect Retrieval Augmentation and Knowledge Conflicts for LLMs

The paper "Overcoming Imperfect Retrieval Augmentation and Knowledge Conflicts for LLMs" examines the challenges of retrieval-augmented generation (RAG) for large language models (LLMs). It focuses on imperfect retrieval, which can place irrelevant or misleading information in the model's context and thereby make its responses less reliable.

Key Findings and Methodology

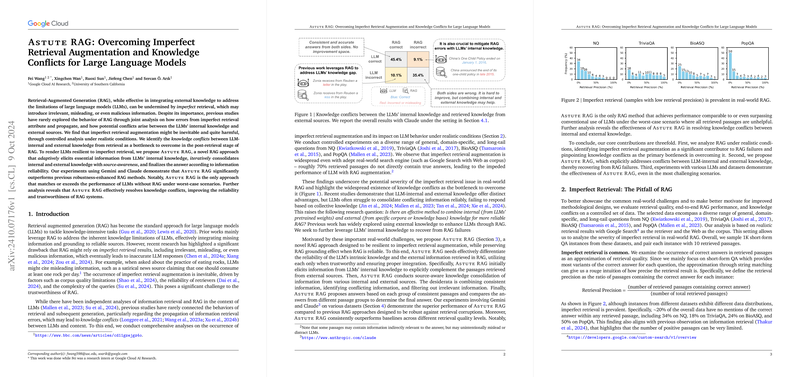

The paper identifies imperfect retrieval augmentation as a significant obstacle for RAG systems. Through controlled experiments on NQ, TriviaQA, BioASQ, and PopQA, it shows that a substantial portion of retrieved passages (up to 70%) do not contain the correct answer. This underscores the need for methods that remain reliable when retrieval is imperfect.
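A figure like "passages without the correct answer" depends on how "contains the answer" is decided. A common convention in open-domain QA, assumed here rather than taken from the paper, is normalized string matching: a passage counts as helpful if any gold answer appears in it after lowercasing and stripping punctuation and articles. The minimal sketch below computes the share of retrieved passages that fail this check; the function names and the `{"answers": ..., "passages": ...}` layout are hypothetical.

```python
import re
import string

# Table that deletes all ASCII punctuation characters.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def normalize(text: str) -> str:
    """SQuAD-style normalization: lowercase, drop punctuation and articles, collapse spaces."""
    text = text.lower().translate(_PUNCT_TABLE)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def passage_has_answer(passage: str, answers: list[str]) -> bool:
    """True if any normalized gold answer occurs in the passage on token boundaries."""
    padded = f" {normalize(passage)} "
    for ans in answers:
        norm_ans = normalize(ans)
        # Padding with spaces prevents "art" from matching inside "party".
        if norm_ans and f" {norm_ans} " in padded:
            return True
    return False


def unhelpful_ratio(examples: list[dict]) -> float:
    """Fraction of all retrieved passages, pooled over questions, that contain no gold answer."""
    total = 0
    misses = 0
    for ex in examples:
        for passage in ex["passages"]:
            total += 1
            if not passage_has_answer(passage, ex["answers"]):
                misses += 1
    return misses / total if total else 0.0
```

String matching is only a proxy: a passage may state the answer in a paraphrased form, so a stricter or model-based judge could report a different ratio.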

In response, the authors introduce a RAG framework that improves reliability by drawing on both the LLM's internal (parametric) knowledge and the retrieved external passages. It first uses adaptive generation to elicit relevant information from the model's own knowledge, then applies source-aware consolidation to combine this with the external data. During consolidation, conflicts between internal and external information are resolved: consistent information is merged and misleading content is discarded.
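The data flow of elicitation, source-aware consolidation, and final answer selection can be made concrete with a toy sketch. In the paper these stages are carried out by prompting the LLM; here the elicitation step is a hypothetical `elicit` callable, each passage is assumed to be already labeled with the candidate answer it supports, and consolidation plus finalization are replaced by a simple cross-source voting rule. The `Passage` class, the `"internal"`/`"external"` labels, and the scoring rule are illustrative assumptions, not the authors' procedure.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Passage:
    text: str
    source: str            # "internal" (LLM-generated) or "external" (retrieved)
    answer: Optional[str]  # candidate answer the passage supports; None if it supports none


# Hypothetical signature: given a question, return passages the LLM writes from its own knowledge.
ElicitFn = Callable[[str], list[Passage]]


def consolidate(passages: list[Passage]) -> dict[str, dict[str, int]]:
    """Group passages by the answer they support, counting support per source."""
    support: dict[str, dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for p in passages:
        if p.answer is not None:
            support[p.answer][p.source] += 1
    return support


def finalize(support: dict[str, dict[str, int]]) -> Optional[str]:
    """Toy reliability rule: rank answers by number of agreeing sources, then by passage count."""
    if not support:
        return None

    def score(answer: str) -> tuple[int, int]:
        counts = support[answer]
        return (len(counts), sum(counts.values()))

    return max(support, key=score)


def answer_question(question: str, retrieved: list[Passage], elicit: ElicitFn) -> Optional[str]:
    internal = elicit(question)                  # stage 1: elicit internal knowledge
    support = consolidate(internal + retrieved)  # stage 2: source-aware consolidation
    return finalize(support)                     # stage 3: pick the most reliable answer
```

With two retrieved passages backing a wrong answer and one backing the right one, an internally generated passage that agrees with the latter lets it win, because this rule ranks answers confirmed by both sources above answers backed by only one. A real system would need the LLM itself to judge consistency, since passages rarely come pre-labeled with answers.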

Experimental Results

The method was evaluated with Gemini and Claude. According to the paper, it is more robust than previous RAG methods, outperforming them both in typical scenarios and in worst-case scenarios where all retrieved passages are unhelpful. Its ability to maintain accuracy even when retrieval supplies no useful evidence highlights how effectively the framework handles knowledge conflicts.
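A worst-case evaluation of this kind can be sketched as a filter plus an accuracy comparison: keep only the questions for which no retrieved passage contains a gold answer, then score each system on that subset. A natural reference point, assumed here, is the same LLM answering without retrieval. The `Example` fields, the per-passage `passage_has_answer` flags (assumed precomputed, for instance by string matching), and the case- and whitespace-insensitive exact match are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Example:
    question: str
    answers: list[str]
    passage_has_answer: list[bool]  # one flag per retrieved passage, assumed precomputed


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


def exact_match(prediction: str, answers: list[str]) -> bool:
    return normalize(prediction) in {normalize(a) for a in answers}


def worst_case(examples: list[Example]) -> list[Example]:
    """Keep only questions where no retrieved passage contains a gold answer.

    Questions with no retrieved passages at all are also kept.
    """
    return [ex for ex in examples if not any(ex.passage_has_answer)]


def accuracy(examples: list[Example], predictions: dict[str, str]) -> float:
    """Exact-match accuracy; a missing prediction counts as wrong."""
    if not examples:
        return 0.0
    hits = sum(exact_match(predictions.get(ex.question, ""), ex.answers) for ex in examples)
    return hits / len(examples)
```

Computing `accuracy(worst_case(data), rag_predictions)` and `accuracy(worst_case(data), no_rag_predictions)` side by side shows whether a RAG method holds up when retrieval offers nothing useful.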

Implications and Future Directions

The findings matter for both research and practice. By demonstrating that LLMs can be made more resilient to retrieval errors, the paper points to ways of making AI systems more trustworthy in sensitive domains where data reliability is paramount.

The paper opens further work on adaptive knowledge integration, in particular on better ways to elicit an LLM's internal knowledge and to synthesize it with external information. Future work could extend the method to multimodal settings or apply it to less capable LLMs to test how broadly it applies.

Overall, the research provides a comprehensive framework for addressing the complexities of imperfect retrieval in LLMs, marking a step towards more reliable and trustworthy AI systems.