ReIFE: Re-evaluating Instruction-Following Evaluation

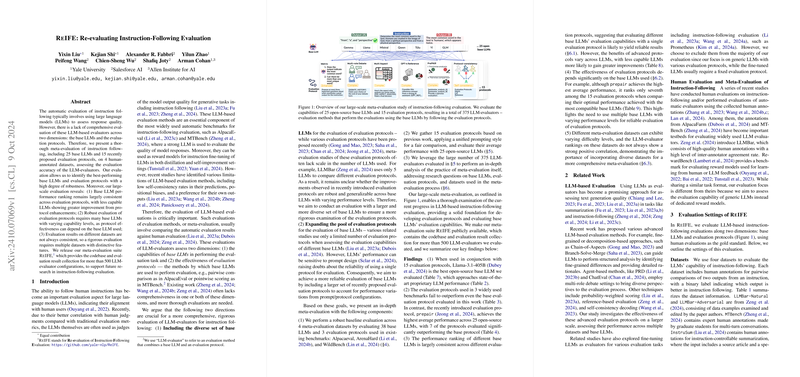

The paper "ReIFE: Re-evaluating Instruction-Following Evaluation" presents a comprehensive study of instruction-following evaluation for large language models (LLMs), in which an LLM serves as the evaluator. The work argues that a thorough meta-evaluation has to cover two dimensions: the base LLM used as the evaluator and the evaluation protocol applied to it. The paper assesses 25 base LLMs and 15 evaluation protocols on four human-annotated datasets, with the goal of identifying the best-performing configurations with a high degree of robustness.
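The setup can be pictured as a grid of configurations. The Python sketch below shows one way such a meta-evaluation could be structured, under simplifying assumptions that are not taken from the paper. Each dataset is a list of pairwise instances carrying a human label for the preferred output. An evaluator configuration is a (base LLM, protocol) pair. The metric is agreement with the human labels. All names here (`Instance`, `Judge`, `meta_evaluate`, the toy judges) are hypothetical stand-ins, not the ReIFE codebase's API.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List, Tuple


@dataclass
class Instance:
    instruction: str
    output_a: str
    output_b: str
    human_label: str  # "A" or "B": the output human annotators preferred


# Hypothetical judge type: (base LLM name, instance) -> predicted label "A" or "B".
# A real protocol would prompt the named base LLM; the toy judges below ignore it.
Judge = Callable[[str, Instance], str]


def accuracy(judge: Judge, base_llm: str, dataset: List[Instance]) -> float:
    """Fraction of instances on which the evaluator agrees with the human label."""
    correct = sum(judge(base_llm, ex) == ex.human_label for ex in dataset)
    return correct / len(dataset)


def meta_evaluate(
    base_llms: List[str],
    protocols: Dict[str, Judge],
    datasets: Dict[str, List[Instance]],
) -> Dict[Tuple[str, str, str], float]:
    """Score every (base LLM, protocol) configuration on every dataset."""
    results = {}
    for llm, (proto_name, judge), (ds_name, data) in product(
        base_llms, protocols.items(), datasets.items()
    ):
        results[(llm, proto_name, ds_name)] = accuracy(judge, llm, data)
    return results


# Toy usage: two trivial "protocols" on a two-instance dataset.
toy_data = [
    Instance("What is 2 + 2?", "4", "5", "A"),
    Instance("Name the capital of France.", "Lyon", "Paris", "B"),
]
toy_protocols: Dict[str, Judge] = {
    "always_a": lambda llm, ex: "A",
    "prefer_longer": lambda llm, ex: "A" if len(ex.output_a) >= len(ex.output_b) else "B",
}
results = meta_evaluate(["toy-llm"], toy_protocols, {"toy": toy_data})
```

With this toy data, the `prefer_longer` configuration scores 1.0 and `always_a` scores 0.5. A real meta-evaluation would replace the lambdas with prompted LLM calls.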

Key Findings and Contributions

- Base LLM Consistency: The researchers found that the performance rankings of base LLMs remain largely consistent across different evaluation protocols. This suggests that a single protocol may be sufficient for comparing base LLMs as evaluators.

- Need for Diverse Base LLMs: Conversely, a robust comparison of evaluation protocols requires multiple base LLMs with varying capability levels, because a protocol's effectiveness can depend on the base LLM it is paired with.

- Dataset Variability: Results are not always consistent across datasets, so a rigorous evaluation requires testing on multiple datasets with distinct characteristics.

- Release of the ReIFE Suite: The authors release ReIFE, a meta-evaluation suite containing the codebase and evaluation results for over 500 configurations, to support future research in this area.
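The first finding concerns ranking consistency, which can be quantified with a rank correlation. This summary does not say which statistic the paper uses. Kendall's tau is shown below only as one common, illustrative choice. The sketch computes tau-a (ties counted as neither concordant nor discordant) over base LLMs scored under two protocols. The model names and accuracy values are invented.

```python
from itertools import combinations
from typing import Dict


def kendall_tau(scores_x: Dict[str, float], scores_y: Dict[str, float]) -> float:
    """Kendall's tau-a between two score dicts over the same base LLMs."""
    if set(scores_x) != set(scores_y):
        raise ValueError("both protocols must score the same base LLMs")
    keys = sorted(scores_x)
    concordant = discordant = 0
    for a, b in combinations(keys, 2):
        # A pair is concordant if both protocols order the two LLMs the same way.
        product_of_diffs = (scores_x[a] - scores_x[b]) * (scores_y[a] - scores_y[b])
        if product_of_diffs > 0:
            concordant += 1
        elif product_of_diffs < 0:
            discordant += 1
    n_pairs = len(keys) * (len(keys) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical accuracies of four base LLMs under two protocols.
protocol_1 = {"llm-a": 0.80, "llm-b": 0.72, "llm-c": 0.65, "llm-d": 0.60}
protocol_2 = {"llm-a": 0.83, "llm-b": 0.70, "llm-c": 0.71, "llm-d": 0.62}

tau = kendall_tau(protocol_1, protocol_2)
```

Here only the (llm-b, llm-c) pair swaps order, so tau is 4/6, about 0.67. A value near 1 means the two protocols rank the base LLMs almost identically.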

Evaluated Components

- Open-Source vs. Proprietary Models: Among the 38 models evaluated, Llama-3.1-405B stood out as the best open-source model, approaching the performance of proprietary models such as GPT-4.

- Protocol Evaluation: The paper critiques the evaluation protocols used in popular benchmarks such as AlpacaEval, ArenaHard, and WildBench, finding them less effective than the base protocol at instance-level evaluation.

- In-Depth Analysis: The paper provides an analysis framework that separates the contributions of the base LLM and the evaluation protocol to an evaluator's performance, helping identify where improvements are possible.
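Two kinds of claim rest on the same comparison: one protocol being "less effective than the base protocol", and a protocol's effectiveness depending on the base LLM. Both come down to comparing a protocol's accuracy with the base protocol's, one base LLM at a time. The Python sketch below makes that comparison using hypothetical numbers. The protocol name `protocol_x` and all values are invented, and the paper's actual analysis may aggregate results differently.

```python
from statistics import mean
from typing import Dict

# Hypothetical accuracies: base LLM -> protocol -> agreement with human labels.
acc_table: Dict[str, Dict[str, float]] = {
    "strong-llm": {"base": 0.82, "protocol_x": 0.80},
    "mid-llm": {"base": 0.70, "protocol_x": 0.73},
    "weak-llm": {"base": 0.55, "protocol_x": 0.62},
}


def gains_over_base(
    acc: Dict[str, Dict[str, float]], protocol: str, baseline: str = "base"
) -> Dict[str, float]:
    """Per-base-LLM change in accuracy when switching from `baseline` to `protocol`."""
    return {
        llm: round(scores[protocol] - scores[baseline], 4)
        for llm, scores in acc.items()
    }


gains = gains_over_base(acc_table, "protocol_x")
# If the gain changes sign across base LLMs, the protocol's effectiveness is
# base-LLM dependent: testing it with only one base LLM could mislead.
sign_flips = min(gains.values()) < 0 < max(gains.values())
avg_gain = mean(gains.values())
```

In this toy table, `protocol_x` hurts the strongest base LLM (-0.02) but helps the other two. An average gain alone would hide that reversal.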

Implications and Future Directions

These results have both methodological and practical implications. Identifying robust LLM-evaluators and effective evaluation protocols can inform how models are trained and validated. Understanding how dataset difficulty and protocol effectiveness vary can also guide future research toward more generalizable and reliable evaluation.

Future work could integrate fine-tuned LLMs and reward models into this evaluation framework. A deeper examination involving multiple prompt variants and qualitative human evaluation could also offer further insight into LLMs' evaluation capabilities.

In summary, this paper sheds light on the complexities of instruction-following evaluation in LLMs, providing valuable resources and findings that advance the field of AI model development and assessment. The ReIFE suite offers a robust foundation for continued exploration and enhancement of LLMs' evaluative capabilities.