Overview of LLaVA-Critic: Evaluating Multimodal Models

The paper introduces LLaVA-Critic, an open-source large multimodal model (LMM) designed as a generalist evaluator of model performance across a wide range of multimodal tasks. The authors present it as the first open-source LMM built for this purpose. Despite the growing sophistication of LMMs, the field had lacked an open, general-purpose evaluator. LLaVA-Critic serves two roles. As a judge, it provides evaluation scores comparable to those of proprietary models such as GPT-4V. In preference learning, it generates reward signals used to align other LMMs.

Key Contributions

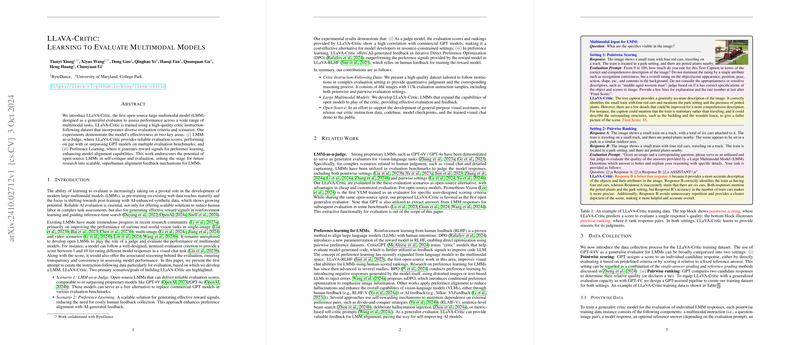

- Critic Instruction-Following Dataset: The work presents a 113k-sample dataset curated to train the model to follow evaluation instructions. It covers diverse evaluation scenarios in both pointwise settings (scoring a single response) and pairwise settings (comparing two responses). Each sample pairs an image and a question with one or more model responses, along with the target scores or preferences and written justifications.

- Model Development: LLaVA-Critic is fine-tuned from strong pretrained checkpoints in the LLaVA-OneVision family. Compared with these baselines, its judgements align more closely with GPT-4o, and it consistently outperforms them across multiple evaluation benchmarks.

- Open-Source Accessibility: All datasets, codebases, and trained model checkpoints are released to facilitate further research and development of generalized visual assistants.
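The following minimal sketch makes the two sample types concrete. It shows how pointwise and pairwise critic examples might be represented and turned into training targets. The class names, field names, the "A"/"B"/"Tie" labels, and the `Score:`/`Preferred:`/`Reason:` output layout are all hypothetical illustrations, not the released dataset's actual schema.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class PointwiseSample:
    """Hypothetical schema: one response judged on its own."""
    image_path: str
    question: str
    response: str
    score: float          # numeric rating; the scale is an assumption here
    justification: str


@dataclass
class PairwiseSample:
    """Hypothetical schema: two responses compared head to head."""
    image_path: str
    question: str
    response_a: str
    response_b: str
    preference: str       # assumed labels: "A", "B", or "Tie"
    justification: str


def to_target_text(sample: Union[PointwiseSample, PairwiseSample]) -> str:
    """Render the critic's target output: a verdict line, then the justification.

    The "Score:/Preferred:/Reason:" layout is an illustrative format only.
    """
    if isinstance(sample, PointwiseSample):
        verdict = f"Score: {sample.score:g}"
    elif isinstance(sample, PairwiseSample):
        if sample.preference not in ("A", "B", "Tie"):
            raise ValueError(f"unexpected preference label: {sample.preference!r}")
        verdict = f"Preferred: {sample.preference}"
    else:
        raise TypeError(f"unsupported sample type: {type(sample).__name__}")
    return f"{verdict}\nReason: {sample.justification}"
```

Placing the verdict on the first line, ahead of the justification, is one simple design choice. It makes the score or preference easy to parse back out of the critic's generated text at evaluation time.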

Experimental Insights

In-Domain Pointwise Scoring

Across seven multimodal benchmarks, LLaVA-Critic's scores align closely with those of GPT-4o. This holds at the instance level (scores for individual responses) and at the model level (the resulting ranking of models). The authors attribute this accuracy and consistency to the high-quality critic training data. LLaVA-Critic also improves substantially over both the LLaVA-NeXT and LLaVA-OneVision baselines.
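Instance-level and model-level agreement can be quantified with standard correlation statistics. The sketch below assumes two such measures: Pearson correlation between per-response scores for the instance level, and Kendall's tau between per-model average scores for the model level. The function names are illustrative, and the paper's exact metrics and aggregation may differ.

```python
import math
from itertools import combinations


def pearson_r(xs, ys):
    """Instance-level agreement: Pearson correlation between two score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (sx * sy)


def kendall_tau(xs, ys):
    """Model-level agreement: Kendall's tau-a between two sets of per-model scores.

    Each pair of models counts as concordant if both judges order it the same way,
    discordant if they disagree, and as neither if either judge ties it.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        s = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if s > 0:
            concordant += 1
        elif s < 0:
            discordant += 1
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs
```

For model-level ranking, one would first average each model's scores under each judge (the critic and GPT-4o). The two resulting lists of per-model means would then be passed to `kendall_tau`.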

In-Domain Pairwise Ranking

On human-annotated pairwise comparisons from WildVision Arena, LLaVA-Critic's preferences closely match those of human annotators, particularly for its 72B variant. This suggests that it can reliably rank model responses in complex, real-world scenarios.
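Agreement with human preferences in the pairwise setting is naturally measured as accuracy. The following sketch assumes verdicts labelled `"A"`, `"B"`, or `"Tie"` and reports accuracy with and without pairs that humans marked as ties. The label format and the function name are assumptions for illustration.

```python
def pairwise_accuracy(critic_verdicts, human_verdicts, include_ties=True):
    """Fraction of pairs where the critic's verdict matches the human label.

    Verdicts are assumed to be "A", "B", or "Tie". With include_ties=False,
    pairs that humans labelled "Tie" are dropped before scoring.
    """
    if len(critic_verdicts) != len(human_verdicts):
        raise ValueError("verdict lists must have the same length")
    kept = [
        (c, h)
        for c, h in zip(critic_verdicts, human_verdicts)
        if include_ties or h != "Tie"
    ]
    if not kept:
        return float("nan")  # nothing left to score
    return sum(c == h for c, h in kept) / len(kept)
```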

Out-of-Domain Evaluation

On the out-of-domain MLLM-as-a-Judge benchmark, LLaVA-Critic performs comparably to commercial models such as GPT-4V, indicating that it generalizes to unseen tasks and prompts. Its performance also improves markedly with both more training data and larger model size.

Application in Preference Learning

LLaVA-Critic also supports preference learning by generating the reward signals used to build preference data in iterative Direct Preference Optimization (DPO). This noticeably improves the base model's visual chat capabilities. It positions LLaVA-Critic as an open, non-proprietary alternative for aligning LMMs with human-like preferences.
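The data-collection step of iterative DPO can be sketched under explicit assumptions. The critic is wrapped as a hypothetical `critic_score(prompt, response)` function that returns a scalar, and `sample_fn` is a hypothetical policy sampler. Each preference pair is simply the highest-scored versus the lowest-scored candidate for a prompt, and the paper's actual pair-construction procedure may differ. The loss is the standard DPO objective, -log sigmoid(beta × margin). Here the margin measures how much more the policy prefers the chosen response over the rejected one, relative to a frozen reference model. It is computed from summed sequence log-probabilities.

```python
import math
from typing import Callable, List, Optional, Tuple


def build_preference_pair(
    responses: List[str], score: Callable[[str], float]
) -> Optional[Tuple[str, str]]:
    """Return (chosen, rejected) as the highest- and lowest-scored candidates."""
    if len(responses) < 2:
        return None
    scored = sorted((score(r), r) for r in responses)
    (lo, rejected), (hi, chosen) = scored[0], scored[-1]
    if hi == lo:
        return None  # the critic sees no difference; skip this prompt
    return chosen, rejected


def collect_pairs(prompts, sample_fn, critic_score, k=4):
    """One data-collection round: sample k responses per prompt, keep informative pairs.

    sample_fn(prompt) -> str and critic_score(prompt, response) -> float are
    hypothetical stand-ins for the policy model and the critic.
    """
    pairs = []
    for prompt in prompts:
        candidates = [sample_fn(prompt) for _ in range(k)]
        pair = build_preference_pair(candidates, lambda r: critic_score(prompt, r))
        if pair is not None:
            pairs.append((prompt, *pair))
    return pairs


def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss for one pair: -log(sigmoid(beta * margin))."""
    margin = (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    z = -beta * margin
    # -log(sigmoid(x)) == softplus(-x); this softplus form avoids overflow in exp
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))
```

In an iterative setup, each round would train the policy on the collected pairs with this loss. The next round would then resample candidates from the updated policy and score them again with the critic.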

Implications and Future Directions

The research points to a shift toward using open-source models for evaluation, reducing reliance on proprietary systems. It also highlights the potential of open LMMs for self-critique, laying the groundwork for future research into scalable, superhuman alignment feedback mechanisms. Looking ahead, this opens avenues for refining LMMs with greater interpretability and transparency, bringing their judgements closer to those of human evaluators.

In sum, this work is a significant step forward in multimodal model evaluation, combining open-source accessibility, a comprehensive critic dataset, and strong open models. It offers both a practical tool for evaluation and reward generation and a foundation for future work on AI alignment and multimodal model assessment.