- The paper introduces ACE, an LLM-based system that simulates negotiation scenarios and offers personalized feedback based on annotated MBA transcripts.

- The system leverages GPT-4 with dynamic prompting to generate realistic bargaining interactions and prevent premature concessions.

- User experiments with 374 participants demonstrated significant improvements in negotiation outcomes through detailed, turn-based error feedback.

ACE: A LLM-based Negotiation Coaching System

"ACE: A LLM-based Negotiation Coaching System" (2410.01555) presents a framework that uses large language models (LLMs) to support negotiation training. The authors develop ACE, an LLM-powered coach that simulates bargaining scenarios and delivers targeted feedback intended to improve negotiation skills. The paper describes ACE's methodology and evaluates its effect on users' negotiation skills.

Introduction to ACE

The ACE system is designed to democratize high-quality negotiation training by providing a virtual environment where users can practice negotiation. By analyzing real-world negotiation transcripts from MBA students and consulting subject matter experts, the authors create an annotation scheme that identifies common negotiation errors. These insights are used to develop ACE, which aims to fill the gap in access to negotiation training for underrepresented groups.

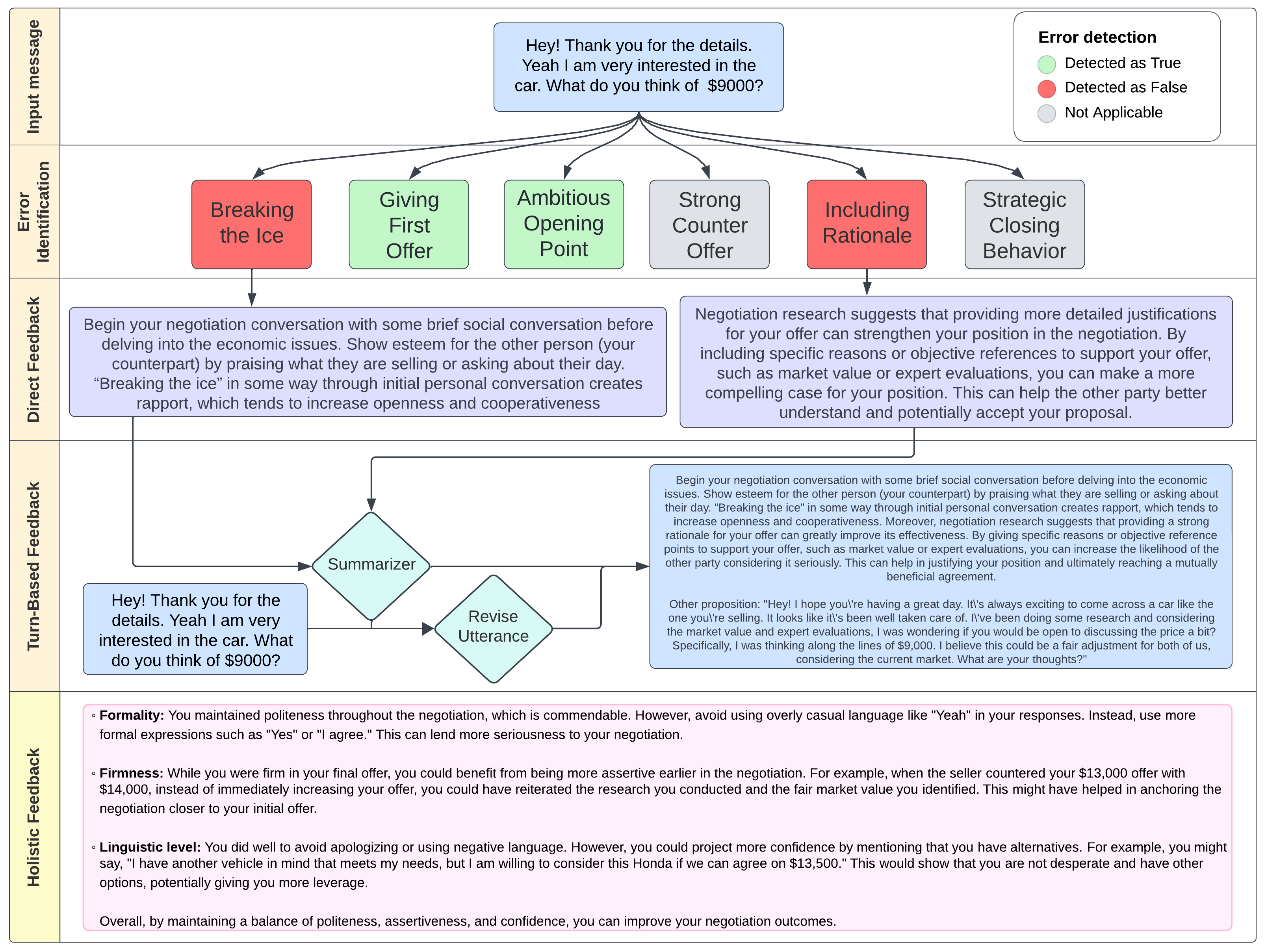

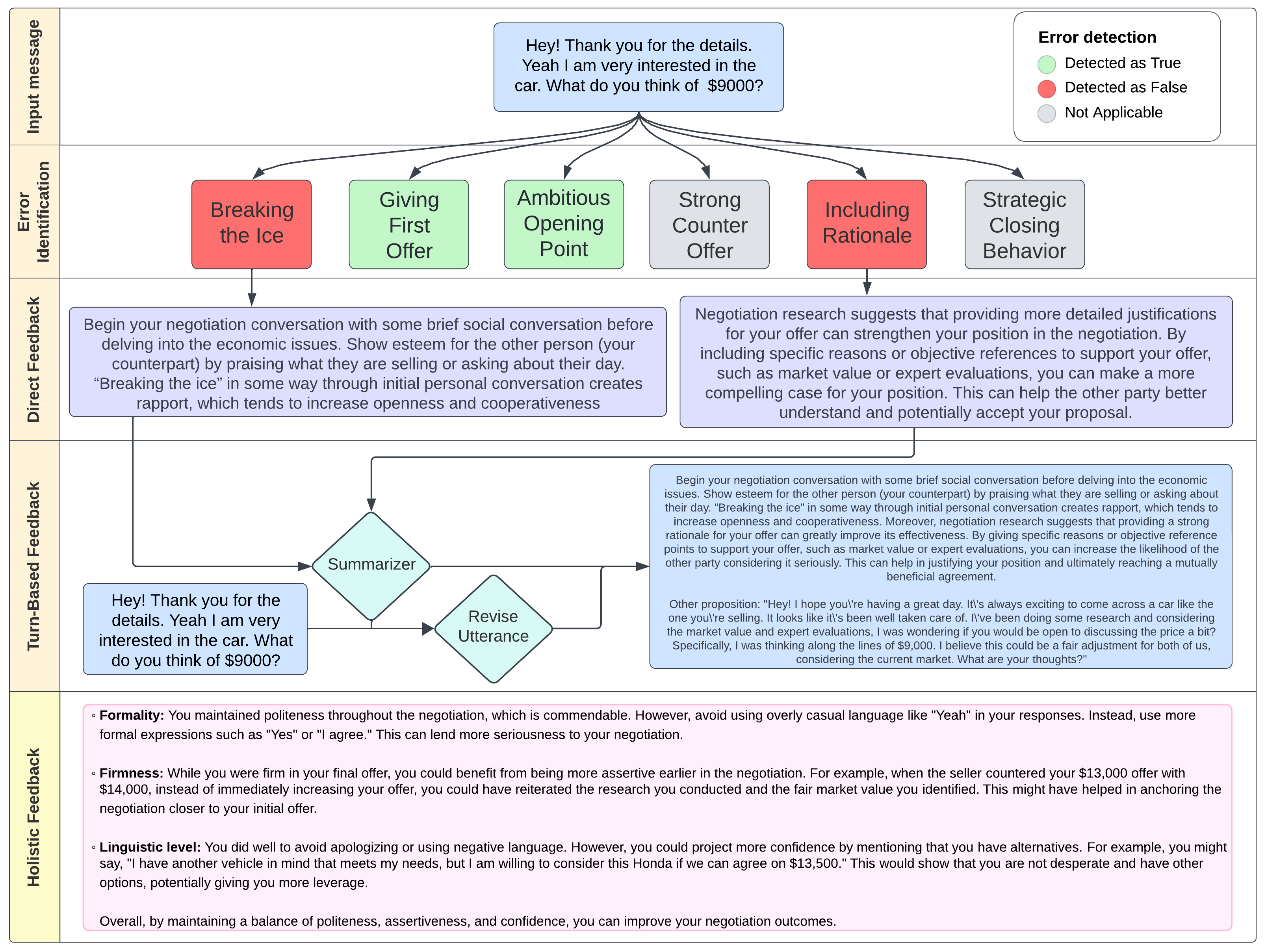

Figure 1: Diagram illustrating the turn-based feedback flow for ACE as well as an example of holistic feedback.

Dataset and Annotation

The dataset used to develop and evaluate ACE is derived from transcripts of MBA students negotiating in realistic scenarios that reflect actual business negotiations. Errors in these transcripts were annotated with a newly developed scheme that covers both preparation and in-negotiation execution. The scheme classifies errors into categories such as poorly chosen strategic prices and missing rationales for offers.
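As a rough illustration of what an annotated transcript of this kind could look like in code, here is a minimal sketch. All class and field names are hypothetical, the dialogue is invented, and only the two error categories named above are included; the paper's actual scheme is not reproduced here.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    PREPARATION = "preparation"
    IN_NEGOTIATION = "in_negotiation"


class ErrorType(Enum):
    # Assumption: only the two categories mentioned in the text are listed.
    STRATEGIC_PRICE = "strategic_price"
    MISSING_RATIONALE = "missing_rationale"


@dataclass
class ErrorAnnotation:
    stage: Stage
    error_type: ErrorType
    turn_index: Optional[int] = None  # None for preparation-stage errors
    note: str = ""


@dataclass
class AnnotatedTranscript:
    turns: List[str]
    annotations: List[ErrorAnnotation] = field(default_factory=list)

    def errors_at(self, turn_index: int) -> List[ErrorAnnotation]:
        """Return every annotation attached to the given turn."""
        return [a for a in self.annotations if a.turn_index == turn_index]


# Invented example dialogue, not taken from the dataset.
transcript = AnnotatedTranscript(
    turns=["Buyer: I can pay 300.", "Seller: I was hoping for 450."],
    annotations=[
        ErrorAnnotation(Stage.IN_NEGOTIATION, ErrorType.MISSING_RATIONALE,
                        turn_index=0, note="Offer given without a reason."),
    ],
)
```

Keeping the stage and the error type as separate fields mirrors the split between preparation and in-negotiation errors described above.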

System Implementation

Architecture and Pipeline

ACE comprises three key components: a negotiation scenario setup, a dialogue agent powered by GPT-4, and a feedback mechanism. Users engage in simulated negotiations with the dialogue agent. An error detection module then evaluates each user's performance, identifying errors such as failing to give a rationale or failing to set strategic price points, and the system provides feedback on them.
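The flow between these components can be sketched as a simple loop. This is an assumption-laden illustration, not the authors' implementation: `run_session`, `scripted_agent`, and `keyword_detector` are hypothetical names, the agent is a fixed-reply stub standing in for GPT-4, and the detector is a toy keyword rule rather than ACE's error detection module.

```python
from typing import Callable, List, Tuple

Agent = Callable[[List[str]], str]     # conversation history -> agent reply
Detector = Callable[[str], List[str]]  # user turn -> list of error labels


def run_session(user_turns: List[str], agent: Agent,
                detect_errors: Detector) -> Tuple[List[str], List[Tuple[int, List[str]]]]:
    """Alternate user and agent turns, logging detected errors per user turn."""
    history: List[str] = []
    feedback_log: List[Tuple[int, List[str]]] = []
    for i, user_turn in enumerate(user_turns):
        history.append(f"User: {user_turn}")
        errors = detect_errors(user_turn)
        if errors:
            feedback_log.append((i, errors))
        history.append(f"Agent: {agent(history)}")
    return history, feedback_log


def scripted_agent(history: List[str]) -> str:
    # Stub: a real system would call an LLM with the history here.
    return "I need a better offer than that."


def keyword_detector(turn: str) -> List[str]:
    # Toy rule: a turn that names a number but gives no "because" is flagged.
    has_price = any(ch.isdigit() for ch in turn)
    has_reason = "because" in turn.lower()
    return ["missing_rationale"] if has_price and not has_reason else []


history, feedback_log = run_session(
    ["I'll pay 300.", "I can do 350 because similar units list at 340."],
    scripted_agent,
    keyword_detector,
)
```

Passing the agent and detector in as callables keeps the loop independent of any particular model or detection method.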

Negotiation Agent

The negotiation agent uses GPT-4 with custom prompts to engage users in realistic bargaining dialogues. The prompts are adjusted dynamically as the conversation progresses, which keeps the agent from conceding too easily and makes it a more robust practice partner.
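The text does not spell out how the prompts are adjusted, so the following is only one plausible sketch of the idea. It assumes the agent plays a seller with a private reservation price and that each turn's prompt caps how far the agent may drop from its last offer; `concession_floor`, `build_agent_prompt`, and the `max_step_fraction` parameter are all hypothetical.

```python
def concession_floor(last_offer: float, reservation_price: float,
                     max_step_fraction: float = 0.25) -> float:
    """Lowest price the seller agent may offer on its next turn.

    Assumption: the agent may give up at most a fixed fraction of the gap
    between its last offer and its reservation price in a single turn.
    """
    remaining = max(last_offer - reservation_price, 0.0)
    return last_offer - max_step_fraction * remaining


def build_agent_prompt(base_prompt: str, last_offer: float,
                       reservation_price: float) -> str:
    """Append a per-turn guard to the base prompt before calling the model."""
    floor = concession_floor(last_offer, reservation_price)
    guard = (
        f"Your last offer was {last_offer:.0f}. "
        f"Do not go below {floor:.0f} on this turn, "
        "and give a reason for any concession you make."
    )
    return f"{base_prompt}\n\n{guard}"


prompt = build_agent_prompt("You are the seller in this negotiation.", 1000, 800)
```

Because the guard is recomputed from the agent's latest offer on every turn, the permitted concession shrinks as the agent approaches its reservation price.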

Evaluation and Results

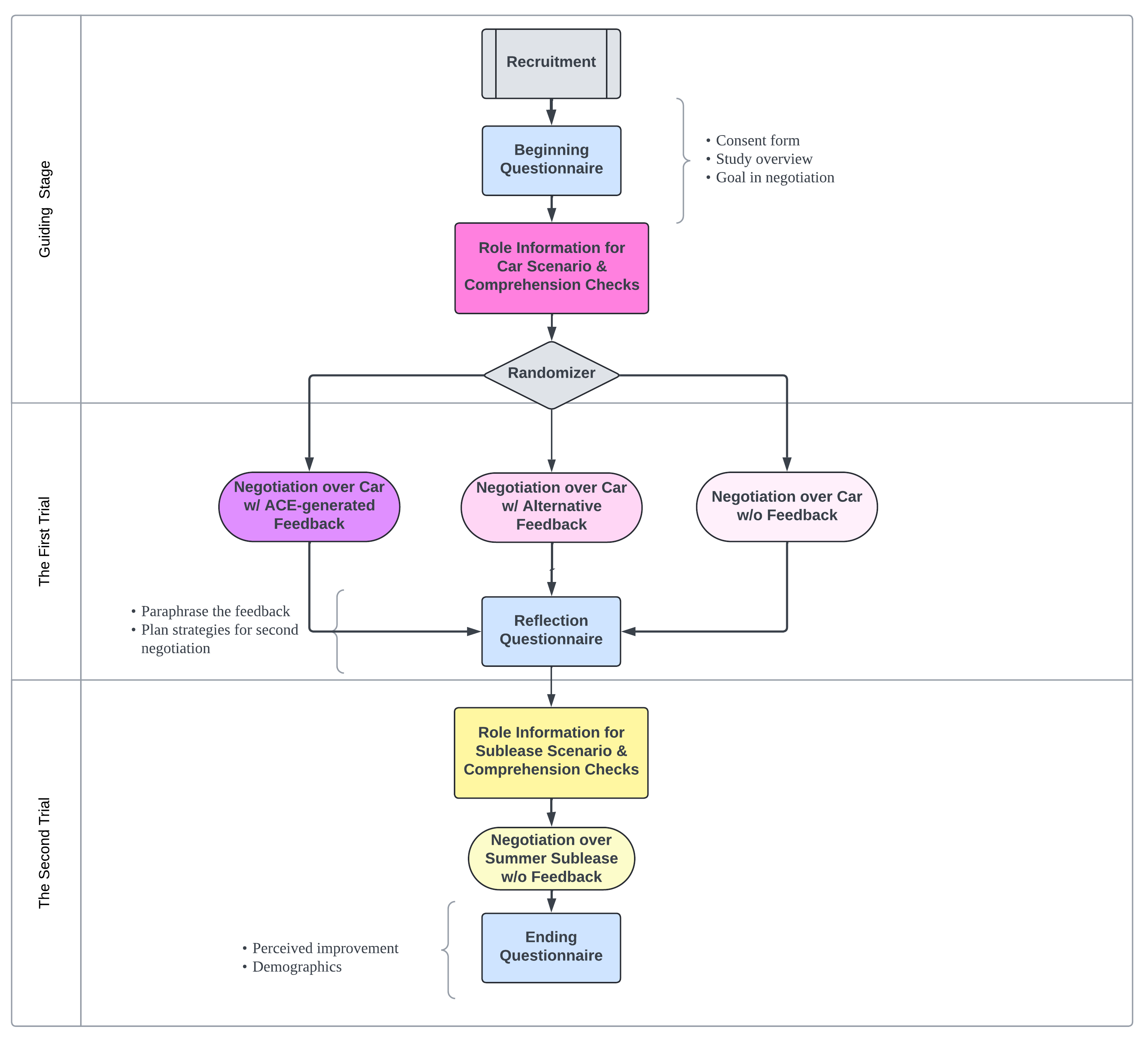

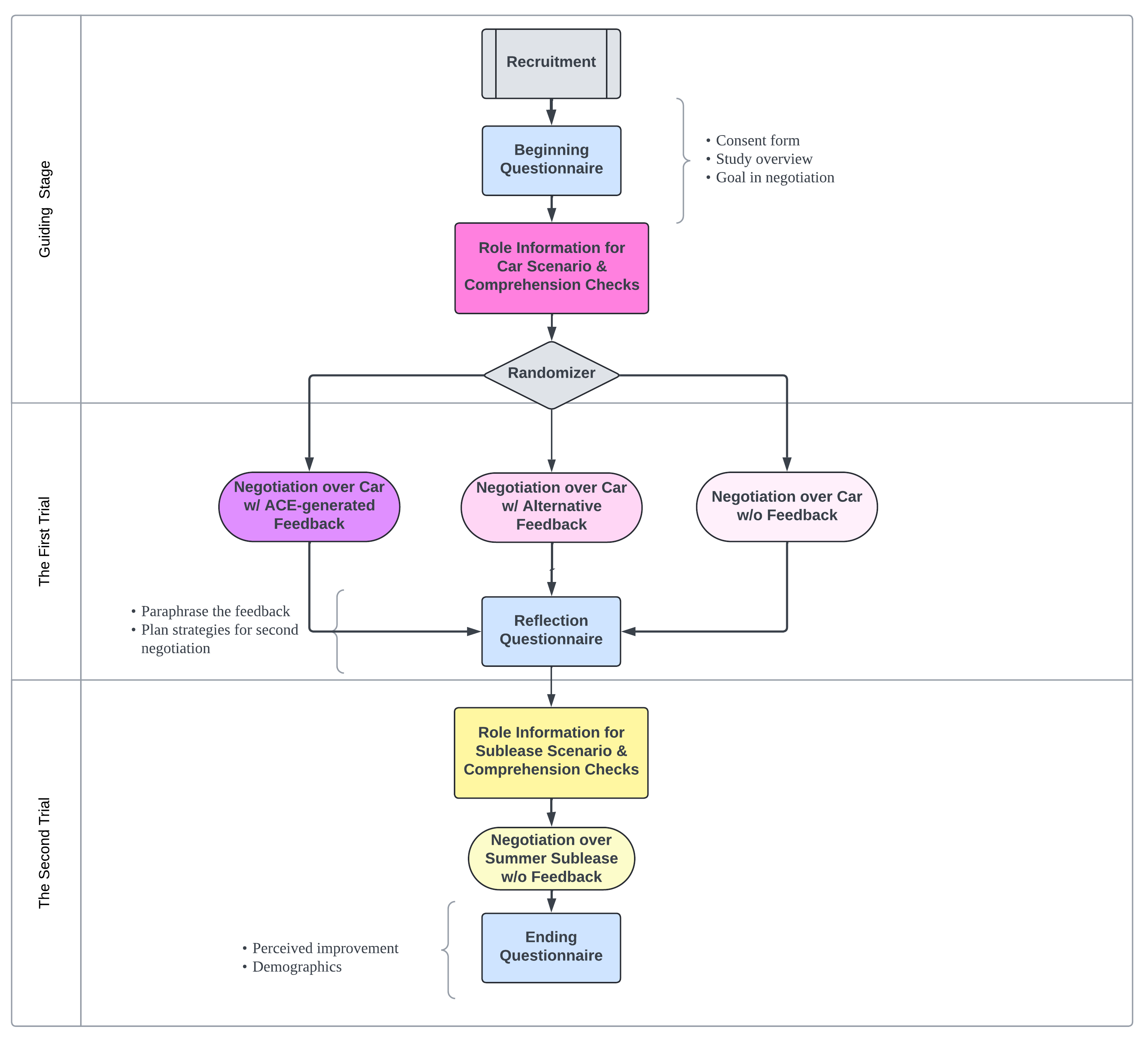

Figure 2: Experiment diagram, illustrating the setup for user trials.

ACE was evaluated in user experiments with 374 participants across multiple negotiation trials. Participants who received ACE feedback showed significant improvements in negotiation outcomes compared with those who did not receive detailed feedback.
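The comparison behind this evaluation, improvement between trials for a feedback group versus a group without detailed feedback, can be sketched as below. The numbers are toy values invented for illustration and are not the paper's data; the scores are assumed to be outcome measures where higher is better, and `mean_improvement` is a hypothetical helper.

```python
from statistics import mean
from typing import Sequence


def mean_improvement(first: Sequence[float], second: Sequence[float]) -> float:
    """Average per-participant change from the first trial to the second."""
    if len(first) != len(second):
        raise ValueError("each participant needs a score in both trials")
    return mean([after - before for before, after in zip(first, second)])


# Toy scores, NOT results from the paper.
feedback_first, feedback_second = [100, 110, 90], [120, 125, 105]
control_first, control_second = [100, 105, 95], [102, 108, 96]

gain_feedback = mean_improvement(feedback_first, feedback_second)
gain_control = mean_improvement(control_first, control_second)
print(f"feedback gain: {gain_feedback:.1f}, control gain: {gain_control:.1f}")
```

A real analysis would also apply a significance test to the difference between the two gains; this summary does not say which test the authors used.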

Feedback Mechanism

Feedback in ACE is twofold: turn-based feedback on specific errors and holistic feedback on the user's overall negotiation approach. The system generates both through prompt-based methods, offering a personalized coaching experience. In the experiments, ACE's feedback substantially improved users' negotiation performance, as evidenced by better final agreement prices.
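The two levels of feedback can be illustrated with a small sketch. ACE generates its feedback with an LLM; the version below substitutes fixed templates so that it runs on its own, and the `ADVICE` table, function names, and error labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical advice templates standing in for LLM-generated feedback.
ADVICE: Dict[str, str] = {
    "missing_rationale": "Back your offer with a reason the other side can accept.",
    "strategic_price": "Choose a price that leaves room to concede later.",
}


def turn_feedback(turn_index: int, errors: List[str]) -> List[str]:
    """One message per error detected in a single user turn."""
    return [f"Turn {turn_index + 1}: {ADVICE.get(e, e)}" for e in errors]


def holistic_feedback(error_log: List[Tuple[int, List[str]]]) -> str:
    """Summarize the whole session by its most frequent error."""
    counts = Counter(e for _, errors in error_log for e in errors)
    if not counts:
        return "No annotated errors were detected in this session."
    label, n = counts.most_common(1)[0]
    return f"Most frequent issue: {label} ({n} occurrence(s))."


error_log = [(0, ["missing_rationale"]),
             (2, ["missing_rationale", "strategic_price"])]
per_turn = [msg for i, errs in error_log for msg in turn_feedback(i, errs)]
summary = holistic_feedback(error_log)
```

Turn-level messages point at a specific moment in the dialogue, while the session summary aggregates over all turns, which matches the twofold split described above.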

Conclusion

The ACE negotiation coaching system exemplifies how LLMs can be deployed for educational purposes beyond traditional academic subjects. The system broadens access to negotiation training, particularly for those who need stronger negotiation skills for professional advancement. Although the results are promising, future work should incorporate broader cultural understanding and adaptive feedback that aligns with diverse negotiation practices globally.

Note: Further exploration and rigorous experimentation in cross-cultural settings would enhance ACE's applicability worldwide. Integrating continual learning models that adapt to user interactions over time could improve personalization and coaching effectiveness.