- The paper introduces a novel architecture that integrates 2D CNNs with bidirectional GRUs to capture spatial and temporal features for violence detection in video data.

- It reports 98% accuracy on the Hockey dataset, 95.5% on Violent Flow, and 90.25% on Real Life Violence Situations, indicating robust performance across benchmarks.

- The study emphasizes computational efficiency by using simpler 2D CNNs and GRUs instead of resource-intensive 3D CNNs, paving the way for real-time applications.

2D Bidirectional Gated Recurrent Unit Convolutional Neural Networks for End-to-End Violence Detection in Videos

Introduction

This paper investigates an end-to-end solution for detecting violence in videos using deep learning architectures suited to spatiotemporal data. The method combines two widely used architectures: 2D Convolutional Neural Networks (CNNs) for spatial feature extraction and Bidirectional Gated Recurrent Units (BiGRUs) for capturing temporal dynamics. Unlike traditional approaches that rely heavily on handcrafted features or computationally intensive 3D convolutional neural networks, this work presents a streamlined alternative that balances accuracy and computational efficiency.

Methodology

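The proposed architecture combines the strengths of 2D CNNs and GRUs within a single, jointly trained framework: the CNN extracts spatial features from each frame, and the GRUs model how those features evolve over time.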

Convolutional Neural Networks for Spatial Features

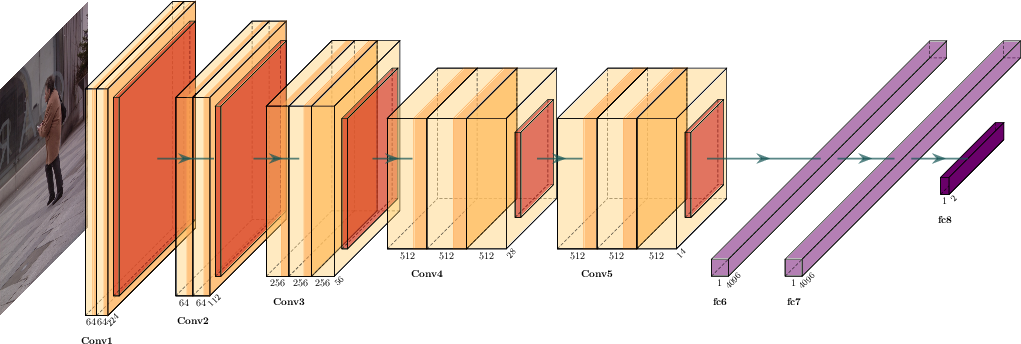

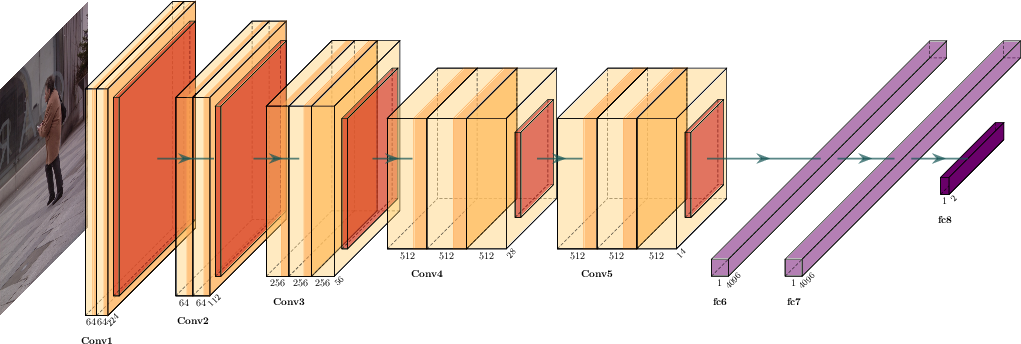

The CNN component is based on the VGG16 architecture, chosen for its ability to capture spatial hierarchies in images. The network was pre-trained on the INRIA Person Dataset so that its features are tuned to detecting people. After pre-training, the fully connected layers (FC6, FC7, FC8) were removed so that the convolutional feature maps could be passed directly to the GRUs.

Figure 1: VGG16 used to capture spatial features.
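To make the effect of removing the fully connected layers concrete, the following minimal sketch traces the spatial size of a frame through the standard VGG16 convolutional stack. The 224x224 input resolution is an assumption (the text above does not state the input size), and `vgg16_feature_shape` is a hypothetical helper written for illustration only.

```python
# Standard VGG16 convolutional configuration: integers are the output
# channels of a 3x3 convolution (padding 1, stride 1); "M" is a 2x2 max
# pool with stride 2.
VGG16_CONV_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                  512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_feature_shape(height, width, channels=3):
    """Return (channels, height, width) after the VGG16 convolutional stack."""
    for layer in VGG16_CONV_CFG:
        if layer == "M":
            # 2x2 max pooling with stride 2 halves each spatial dimension.
            height, width = height // 2, width // 2
        else:
            # A 3x3 convolution with padding 1 keeps the spatial size and
            # only changes the channel count.
            channels = layer
    return channels, height, width


# Assumed input resolution (not stated in the text above).
c, h, w = vgg16_feature_shape(224, 224)
flattened_size = c * h * w
print((c, h, w), flattened_size)  # (512, 7, 7) 25088
```

Under this assumption, each frame yields a 512x7x7 feature map, or a 25088-dimensional vector once flattened.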

Bidirectional Gated Recurrent Units for Temporal Features

To model the temporal dependencies between video frames, a BiGRU follows the CNN. Like LSTMs, GRUs use gating to mitigate the vanishing gradient problem that affects plain recurrent networks, but they require fewer computational resources than LSTMs because their gating mechanism is simpler. The bidirectional design lets the model incorporate information from both past and future frames, which improves predictive accuracy.

Figure 2: BiGRU used to capture temporal features.
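The bidirectional idea can be sketched in a few lines of plain Python. This is an illustrative toy with small, randomly initialised (untrained) weights, not the paper's implementation; the names `GRUCell`, `run_gru`, and `bigru` and all sizes are assumptions made for the example. One GRU reads the frame features in order, a second reads them in reverse, and the two hidden states for each frame are concatenated.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class GRUCell:
    """A single GRU cell with random, untrained weights (illustration only)."""

    def __init__(self, input_size, hidden_size, rng):
        def mat(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                    for _ in range(rows)]

        self.hidden_size = hidden_size
        # One (input weights, recurrent weights, bias) triple per gate.
        self.params = {
            gate: (mat(hidden_size, input_size),
                   mat(hidden_size, hidden_size),
                   [0.0] * hidden_size)
            for gate in ("update", "reset", "candidate")
        }

    def _affine(self, gate, x, h):
        w, u, b = self.params[gate]
        return [a + c + d for a, c, d in zip(matvec(w, x), matvec(u, h), b)]

    def step(self, x, h):
        z = [sigmoid(v) for v in self._affine("update", x, h)]
        r = [sigmoid(v) for v in self._affine("reset", x, h)]
        # The reset gate scales the previous state before the candidate is formed.
        gated_h = [ri * hi for ri, hi in zip(r, h)]
        cand = [math.tanh(v) for v in self._affine("candidate", x, gated_h)]
        # Blend old state and candidate; some references swap z and (1 - z).
        return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]


def run_gru(cell, sequence):
    """Run a GRU over a sequence and return the hidden state at every step."""
    h = [0.0] * cell.hidden_size
    states = []
    for x in sequence:
        h = cell.step(x, h)
        states.append(h)
    return states


def bigru(forward_cell, backward_cell, sequence):
    """Concatenate forward and backward hidden states for each time step."""
    fwd = run_gru(forward_cell, sequence)
    # Process the reversed sequence, then flip back to align with the frames.
    bwd = run_gru(backward_cell, sequence[::-1])[::-1]
    return [f + b for f, b in zip(fwd, bwd)]


rng = random.Random(0)
forward_cell = GRUCell(input_size=4, hidden_size=3, rng=rng)
backward_cell = GRUCell(input_size=4, hidden_size=3, rng=rng)
# Five hypothetical per-frame feature vectors of dimension 4.
frames = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(5)]
outputs = bigru(forward_cell, backward_cell, frames)
print(len(outputs), len(outputs[0]))  # 5 6
```

Because of the backward pass, the output for the first frame already depends on the last frame, which is what gives the model access to "future" context.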

Network Architecture

The CNN and BiGRU are integrated into a single architecture: the CNN output for each frame is flattened into a feature vector, the resulting sequence of vectors is fed into the BiGRU, and the BiGRU's temporal outputs are used to classify the video.

Figure 3: 2D BiGRU-CNN architecture.
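As a rough illustration of how data flows through this pipeline, the sketch below traces tensor shapes from per-frame feature maps to BiGRU outputs. The feature-map shape (512, 7, 7) assumes a 224x224 input to VGG16, and the hidden size of 128 is purely hypothetical; neither value is given in the text above. The trace stops before the classification step because its exact form is not described here.

```python
def trace_shapes(num_frames, feature_shape, hidden_size):
    """List the (stage, shape) pairs for one clip passing through the pipeline."""
    c, h, w = feature_shape
    flat = c * h * w
    return [
        ("per-frame CNN feature maps", (num_frames, c, h, w)),
        ("flattened per-frame vectors", (num_frames, flat)),
        # Forward and backward hidden states are concatenated per frame.
        ("BiGRU outputs", (num_frames, 2 * hidden_size)),
    ]


# 41 frames matches the approximate Hockey clip length; the other values
# are assumptions for illustration.
for stage, shape in trace_shapes(41, (512, 7, 7), 128):
    print(f"{stage}: {shape}")
```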

Experimental Evaluation

The empirical performance was validated using three publicly available datasets: Hockey Fight, Violent Flow, and Real Life Violence Situations.

Datasets and Setup

- Hockey Dataset: Consists of balanced fight/non-fight 2-second sequences containing approximately 41 frames each (Figure 4).

- Violent Flow Dataset: Comprises 246 real-world video sequences, characterized by crowd violence and varying clip durations (Figure 5).

- Real Life Violence Situations: A substantially varied dataset with different violence scenarios mimicking real-life sequences (Figure 6).

Figure 4: Frames from Hockey Dataset.

Figure 5: Frames from Violent Flow Dataset.

Figure 6: Frames from Real Life Violence Situations Dataset.
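Because clip lengths differ across these datasets, a recurrent model is often fed a fixed number of frames per clip. The sketch below shows one simple option, evenly spaced sampling. This is an assumption for illustration, not the sampling procedure used in the paper, and `sample_frame_indices` is a hypothetical helper.

```python
def sample_frame_indices(num_frames, num_samples):
    """Pick `num_samples` evenly spaced frame indices from a clip."""
    if num_frames <= 0 or num_samples <= 0:
        raise ValueError("num_frames and num_samples must be positive")
    if num_samples == 1:
        # With a single sample, take the middle frame.
        return [num_frames // 2]
    # Spread indices from the first to the last frame; clips shorter than
    # num_samples end up repeating some frames.
    return [(i * (num_frames - 1)) // (num_samples - 1)
            for i in range(num_samples)]


# A Hockey clip of roughly 41 frames reduced to 5 frames.
print(sample_frame_indices(41, 5))  # [0, 10, 20, 30, 40]
```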

Results

The proposed architecture achieved promising accuracy levels:

- Hockey Dataset: 98%

- Violent Flow Dataset: 95.5%

- Real Life Violence Situations: 90.25%

These results exceeded those of several contemporary techniques that employ more computationally demanding 3D CNNs. Notably, the approach has a significantly smaller computational footprint because it uses 2D CNNs for spatial features and leaves temporal modeling to the BiGRU.
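A back-of-the-envelope comparison shows where part of the saving comes from. The sketch below counts weights and multiply-accumulate operations for a single convolutional layer in its 2D and 3D forms. The layer sizes are hypothetical, biases are ignored, and the 2D figure does not include the cost of the BiGRU, so this is an illustration of the scaling rather than a measurement from the paper.

```python
def conv_weight_count(in_channels, out_channels, kernel_size, dims):
    """Weights in a convolution whose kernel spans `dims` axes (bias ignored)."""
    return in_channels * out_channels * kernel_size ** dims


def conv_macs(frames, height, width, in_channels, out_channels, kernel_size, dims):
    """Multiply-accumulates for a same-padded, stride-1 convolution over a clip.

    A 2D convolution is applied to each frame independently; a 3D convolution
    slides over time as well, so both produce frames * height * width output
    positions per output channel.
    """
    positions = frames * height * width
    return positions * conv_weight_count(in_channels, out_channels, kernel_size, dims)


# Hypothetical layer: 64 -> 128 channels, 3x3 (2D) versus 3x3x3 (3D) kernels.
w2d = conv_weight_count(64, 128, 3, dims=2)
w3d = conv_weight_count(64, 128, 3, dims=3)
print(w2d, w3d)  # 73728 221184

macs_2d = conv_macs(16, 56, 56, 64, 128, 3, dims=2)
macs_3d = conv_macs(16, 56, 56, 64, 128, 3, dims=3)
print(macs_3d // macs_2d)  # 3
```

For a kernel of size k, the 3D layer needs k times the weights and operations of its 2D counterpart at the same resolution.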

Conclusion

The novel combination of a 2D CNN and BiGRU puts forth a compelling solution for violence detection in videos, offering both high accuracy and computational efficiency. The architecture’s generalization capability was demonstrated across multiple datasets with diverse characteristics. The research indicates potential for further enhancements through sophisticated sampling techniques and fusion methodologies involving optical flow data. These avenues may yield improvements in accuracy and open possibilities for real-time violence detection solutions in surveillance systems. Future efforts will aim to adapt lightweight architectures to further optimize performance for deployment in resource-constrained environments.