Flexible Low Rank Adaptation for LLMs

The paper under discussion presents Flexible Low Rank Adaptation, a method for improving the fine-tuning of large language models (LLMs). LLMs generalize well across broad tasks but often underperform on specific downstream tasks because of knowledge boundaries and overfitting. To address these issues, the authors propose a flexible framework, built on hyperparameter optimization, that dynamically selects the most critical layers for fine-tuning, which reduces computational cost and mitigates overfitting.

Introduction

The research is motivated by the limitations of existing fine-tuning methods for LLMs. Methods such as Low-Rank Adaptation (LoRA) reduce training cost by freezing the pre-trained parameters and training only a small set of additional low-rank parameters, but they often perform suboptimally because of overfitting. Prior approaches such as AdaLoRA, DoRA, and various regularization techniques have tried to address this with varying degrees of success. However, these methods either fall short of optimal performance across different tasks or lack flexibility.
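To make the cost argument for LoRA concrete, the following sketch counts trainable parameters for a single weight matrix, first when the matrix is updated directly and then when only a rank-r pair of factors is trained. The matrix size (4096 x 4096) and the rank (8) are illustrative assumptions and are not taken from the paper.

```python
def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    full = d_out * d_in              # updating the d_out x d_in matrix directly
    lora = rank * (d_out + d_in)     # factors of shape d_out x r and r x d_in
    return full, lora


# Hypothetical dimensions, chosen only for illustration.
full, lora = lora_param_counts(d_out=4096, d_in=4096, rank=8)
print(full, lora, f"{100 * lora / full:.2f}%")  # 16777216 65536 0.39%
```

Under these assumed sizes the low-rank factors hold well under 1% of the parameters of the full matrix, which is why the frozen base weights dominate memory while the trainable part stays small.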

Methodology

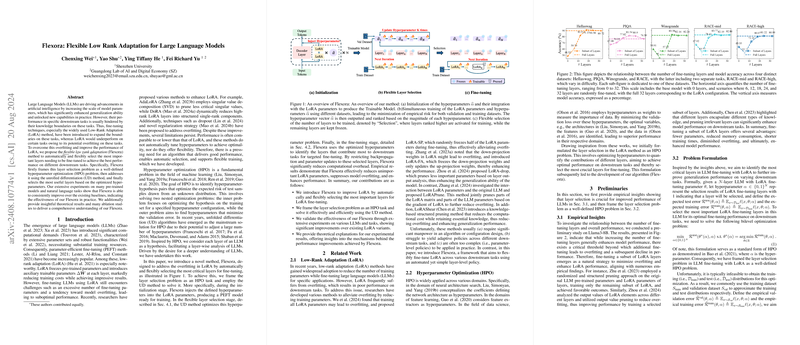

The core contribution of this paper is to formulate layer selection in LLM fine-tuning as a Hyperparameter Optimization (HPO) problem. The proposed method uses Unrolled Differentiation (UD) to solve the HPO problem, identifying the most critical layers and fine-tuning only those. This approach significantly reduces the number of trainable parameters and the computational overhead while improving the model's performance on specific tasks.
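One common way to write such an HPO problem is as a bilevel program. The notation below is an assumption made for illustration and may differ from the paper's: $\alpha$ denotes the layer-selection hyperparameters, $\theta$ the trainable adapter parameters, $\eta$ the inner learning rate, and $K$ the number of unrolled steps.

$$
\min_{\alpha} \; \mathcal{L}_{\text{val}}\big(\theta^{*}(\alpha), \alpha\big)
\quad \text{s.t.} \quad
\theta^{*}(\alpha) = \arg\min_{\theta} \; \mathcal{L}_{\text{train}}(\theta, \alpha)
$$

Unrolled differentiation replaces the exact inner solution $\theta^{*}(\alpha)$ with the result of $K$ gradient steps and differentiates the validation loss through them:

$$
\theta_{k+1} = \theta_{k} - \eta \, \nabla_{\theta} \mathcal{L}_{\text{train}}(\theta_{k}, \alpha), \quad k = 0, \dots, K-1,
\qquad
\alpha \leftarrow \alpha - \eta_{\alpha} \, \frac{d}{d\alpha} \mathcal{L}_{\text{val}}\big(\theta_{K}(\alpha), \alpha\big)
$$

Here $\eta_{\alpha}$ is a hypothetical learning rate for the hyperparameters.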

Empirical Insights

The authors begin by exploring the relationship between the number of fine-tuned layers and overall performance. The results indicate that fine-tuning more layers generally improves performance up to a certain threshold, beyond which additional fine-tuning leads to overfitting and performance degradation. This insight justifies selective fine-tuning, a hallmark of efficiency that balances performance with computational cost.

Optimization and Selection Strategy

The proposed method frames layer selection as a hyperparameter optimization (HPO) task, treating the choice of layers as hyperparameters of the model. During fine-tuning, layers are selected dynamically according to their contribution to performance on validation data. The algorithm uses SGD for the hyperparameter updates, minimizing the empirical validation error through continuous optimization of layer importance.

Specifically, a relaxation mechanism turns binary layer selection into a continuous optimization problem, which is then solved with the UD method. Through iterative training and validation cycles, the proposed method determines the most critical layers for fine-tuning and thereby reduces overfitting.
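The selection loop described above can be sketched on a toy problem. This is a minimal illustration under stated assumptions, not the paper's implementation: each "layer" is a single scalar adapter, the relaxation is a sigmoid gate (an assumed choice), the targets are hypothetical, the inner loop uses plain full-batch gradient steps, and central finite differences stand in for the automatic differentiation that a real UD implementation would use.

```python
import math


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


# Hypothetical toy data: three "layers", one scalar adapter each.
TRAIN_TARGETS = [1.0, 1.0, 1.0]  # every layer can lower the training loss
VAL_TARGETS = [1.0, 1.0, 0.0]    # layer 2 only fits noise; validation wants 0


def loss(adapters, logits, targets):
    """Sum of squared errors between gated adapter outputs and targets."""
    return sum((sigmoid(a) * d - t) ** 2
               for d, a, t in zip(adapters, logits, targets))


def unrolled_val_loss(logits, inner_steps=5, inner_lr=0.1):
    """Take a few gradient steps on the training loss, then score on validation."""
    adapters = [0.0] * len(logits)
    for _ in range(inner_steps):
        # Analytic gradient of the training loss with respect to each adapter.
        grads = [2 * sigmoid(a) * (sigmoid(a) * d - t)
                 for d, a, t in zip(adapters, logits, TRAIN_TARGETS)]
        adapters = [d - inner_lr * g for d, g in zip(adapters, grads)]
    return loss(adapters, logits, VAL_TARGETS)


def hypergradient(logits, eps=1e-4):
    """Derivative of the unrolled validation loss with respect to gate logits.

    Central finite differences replace automatic differentiation here.
    """
    grads = []
    for i in range(len(logits)):
        up, down = list(logits), list(logits)
        up[i] += eps
        down[i] -= eps
        grads.append((unrolled_val_loss(up) - unrolled_val_loss(down)) / (2 * eps))
    return grads


def select_layers(outer_steps=50, outer_lr=1.0, threshold=0.5):
    """Optimize the relaxed gates, then keep layers whose gate exceeds threshold."""
    logits = [0.0, 0.0, 0.0]  # all gates start at 0.5
    for _ in range(outer_steps):
        grads = hypergradient(logits)
        logits = [a - outer_lr * g for a, g in zip(logits, grads)]
    gates = [sigmoid(a) for a in logits]
    selected = [i for i, g in enumerate(gates) if g > threshold]
    return gates, selected


gates, selected = select_layers()
print([round(g, 3) for g in gates], selected)
```

In this toy setting the gates of layers 0 and 1 rise above 0.5 because training them also lowers the validation loss, while the gate of layer 2 falls because fitting it only hurts validation, so `selected` is `[0, 1]`. The 0.5 threshold is an assumed selection rule; other rules, such as keeping the top-k gates, would fit the same loop.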

Experimental Results

The efficacy of the proposed method was validated through extensive experiments on several mainstream LLMs, including Llama3-8B, ChatGLM3-6B, Mistral-7B-v0.1, and Gemma-7B. The experiments cover datasets such as HellaSwag, PIQA, WinoGrande, and RACE, which together evaluate reasoning and comprehension tasks.

Compared with baseline models and other state-of-the-art fine-tuning methods, the proposed method consistently achieved higher accuracy and better computational efficiency. For instance, on Llama3-8B it reached an average accuracy of 86.46%, compared with 83.04% for LoRA (a gain of 3.42 percentage points), while also significantly reducing the number of trainable parameters and the overall training time. Notably, the method generalized well across different tasks and models, underscoring its flexibility and robustness.

Theoretical Insights

The paper provides a theoretical explanation for the superior performance of models fine-tuned on fewer, carefully selected layers. It attributes this to better generalization, which follows from a smaller smoothness constant (β) in the test error bound. Proposition 2 of the paper indicates that models with fewer fine-tuned layers have a smaller β and therefore generalize better, which supports the empirical results in theory.

Conclusion and Future Work

The proposed method shifts LLM fine-tuning toward dynamic selection of critical layers, improving both performance and efficiency. It addresses the core issues of overfitting and excessive computational requirements faced by previous approaches.

Future research directions include studying how individual layers affect task performance, which would refine the understanding of layer importance. In addition, integrating the proposed method with other advanced LoRA variants holds promise for further performance improvements and for more robust and versatile fine-tuning methods.

In conclusion, the research makes a compelling case for flexible and efficient fine-tuning strategies, demonstrating substantial improvements over existing methods and opening avenues for future work on LLMs.