Introduction to Hyperparameter Optimization for Instruction-Tuning in LLMs

Hyperparameter optimization (HPO) is a critical step in improving the performance of large language models (LLMs), particularly under instruction-tuning. This discussion examines an evaluation of different HPO strategies, focusing on Low-Rank Adaptation (LoRA), a popular fine-tuning method that keeps the pre-trained LLM weights frozen and trains only a small set of added low-rank matrices.
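To make the roles of the LoRA rank and scaling factor concrete, here is a minimal NumPy sketch of a single LoRA-adapted linear layer. It follows the standard LoRA formulation (frozen weight plus a scaled low-rank product, with one factor initialized to zero); the layer sizes and variable names are illustrative assumptions, not values taken from the evaluation discussed here.

```python
import numpy as np

def lora_forward(x, W, A, B, alpha):
    """Compute y = x W^T + (alpha / r) * x A^T B^T, where W stays frozen."""
    r = A.shape[0]  # LoRA rank: the inner dimension of the low-rank product
    return x @ W.T + (alpha / r) * (x @ A.T) @ B.T

rng = np.random.default_rng(0)
d_in, d_out, r, alpha = 64, 32, 4, 8    # illustrative sizes (assumed)

W = rng.normal(size=(d_out, d_in))      # pre-trained weight, kept frozen
A = rng.normal(size=(r, d_in)) * 0.01   # trainable, small random init
B = np.zeros((d_out, r))                # trainable, zero init: adapter starts as a no-op

x = rng.normal(size=(5, d_in))          # a batch of 5 input vectors
y = lora_forward(x, W, A, B, alpha)

trainable = A.size + B.size             # parameters updated during fine-tuning
frozen = W.size                         # parameters left untouched
print(f"trainable={trainable}, frozen={frozen}")
```

Because `B` starts at zero, the adapted layer initially reproduces the frozen layer exactly. The rank `r` controls how many parameters are trained, and `alpha / r` controls how strongly the learned update is weighted, which is why both are natural targets for HPO.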

The Methodology of HPO in Instruction-Tuning

Instruction-tuning, a modern approach to fine-tuning LLMs such as GPT-4 or ChatGPT, is particularly sensitive to hyperparameter selection. It trains the model on instruction-output pairs with the aim of aligning model predictions with human intent. The paper identifies the hyperparameters that matter most for LoRA's effectiveness, such as the rank of the decomposition and the scaling factors. To tune these hyperparameters, two blackbox optimization (BBO) techniques were employed: NOMAD, a solver that implements the Mesh Adaptive Direct Search (MADS) algorithm, and TPE (Tree-structured Parzen Estimator), a Bayesian optimization method available in the Neural Network Intelligence (NNI) toolkit.
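The blackbox framing can be sketched in a few lines: a configuration goes in, a scalar loss comes out, and the optimizer sees nothing else. The sketch below is a rough illustration only. The search-space names and ranges are hypothetical, `learning_rate` is an added assumption, the `blackbox` function is a toy formula standing in for an actual fine-tuning run, and plain random search is used in place of NOMAD or TPE.

```python
import math
import random

# Hypothetical search space: names and ranges are illustrative assumptions.
SEARCH_SPACE = {
    "lora_rank": ("int", 1, 64),
    "lora_alpha": ("int", 1, 128),
    "learning_rate": ("log", 1e-6, 1e-3),
}

def sample_config(space, rng):
    """Draw one configuration; 'log' entries are sampled uniformly in log space."""
    config = {}
    for name, (kind, low, high) in space.items():
        if kind == "int":
            config[name] = rng.randint(low, high)
        elif kind == "log":
            config[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
        else:
            raise ValueError(f"unknown parameter kind: {kind}")
    return config

def blackbox(config):
    """Toy stand-in for 'fine-tune with this config and return validation loss'."""
    scale = config["lora_alpha"] / config["lora_rank"]
    return (math.log2(scale) - 1.0) ** 2 + (math.log10(config["learning_rate"]) + 4.0) ** 2

rng = random.Random(0)
budget = 20  # each real evaluation would be a full fine-tuning run
trials = []
for _ in range(budget):
    config = sample_config(SEARCH_SPACE, rng)
    trials.append((blackbox(config), config))

best_loss, best_config = min(trials, key=lambda t: t[0])
print(best_loss, best_config)
```

In a real setup, each call to `blackbox` would cost hours of GPU time, which is why the choice of optimizer and the evaluation budget matter far more than they do in this toy.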

Efficiency via Blackbox Optimization Techniques

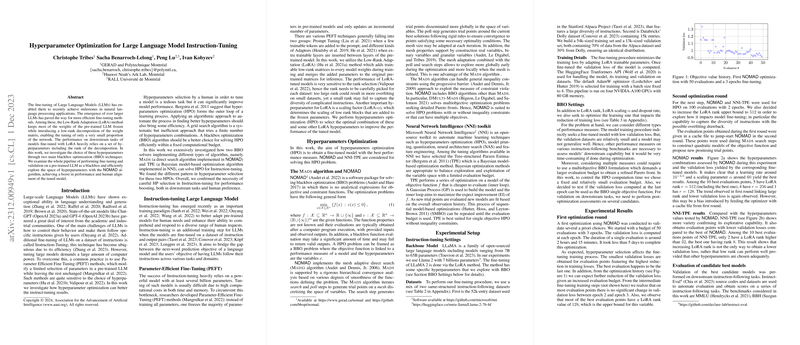

BBO techniques offer a more systematic and efficient exploration of the hyperparameter space than traditional grid search, which spends its evaluations on a fixed lattice regardless of what earlier trials reveal. NOMAD is well suited to the task because it handles general inequality constraints and supports multiobjective optimization problems. TPE, as implemented in NNI, balances exploration and exploitation under a limited evaluation budget. Experiments were conducted on a blend of instruction-following datasets. After extensive BBO runs, NOMAD and NNI-TPE arrived at different hyperparameter patterns.
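The contrast with grid search can be illustrated on a toy problem. The sketch below compares a fixed grid with a simplified compass (coordinate) search, one of the most basic direct search methods. It is not NOMAD's MADS algorithm and not TPE, and the quadratic `toy_loss`, the starting point, and the bounds are all invented for illustration, so the outcome says nothing about real fine-tuning runs.

```python
import itertools

def toy_loss(rank, alpha):
    """Toy stand-in for a validation loss (assumed shape, not measured data)."""
    return ((rank - 24) / 8) ** 2 + ((alpha - 48) / 16) ** 2

def grid_search(ranks, alphas):
    """Evaluate every point of a fixed grid, ignoring earlier results."""
    evals = [(toy_loss(r, a), (r, a)) for r, a in itertools.product(ranks, alphas)]
    return min(evals), len(evals)

def compass_search(start, step, bounds, budget):
    """Poll +/- step along each coordinate; move on improvement, else halve the step."""
    best, best_loss, used = start, toy_loss(*start), 1
    while used < budget and step >= 1:
        improved = False
        for dim in range(len(best)):
            for sign in (+1, -1):
                if used >= budget:
                    break
                cand = list(best)
                cand[dim] += sign * step
                low, high = bounds[dim]
                if not low <= cand[dim] <= high:
                    continue  # skip points outside the bound constraints
                loss = toy_loss(*cand)
                used += 1
                if loss < best_loss:
                    best, best_loss, improved = tuple(cand), loss, True
        if not improved:
            step //= 2  # refine the step once no neighbour improves
    return (best_loss, best), used

(grid_loss, grid_point), grid_evals = grid_search([8, 16, 32, 64], [16, 32, 64, 128])
(cs_loss, cs_point), cs_evals = compass_search(
    start=(8, 16), step=16, bounds=((1, 64), (1, 128)), budget=grid_evals
)
print(f"grid:    loss={grid_loss:.2f} at {grid_point} using {grid_evals} evaluations")
print(f"compass: loss={cs_loss:.2f} at {cs_point} using {cs_evals} evaluations")
```

The point of the comparison is only that an adaptive method chooses each new trial using the results of earlier ones, whereas the grid is fixed in advance. Production solvers add many refinements on top of this idea.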

Experimental Insights and Outcome

The empirical results show a clear benefit of hyperparameter optimization: models fine-tuned with optimized hyperparameters improve substantially on downstream tasks and on human preference alignment. However, a lower validation loss during tuning does not always translate into better downstream performance. In the human preference evaluation, models trained with the best hyperparameters found by NOMAD were clearly preferred over models trained with the default hyperparameters. This underscores the importance of a robust approach to HPO, especially when the goal is to align LLM outputs with human intent.

In conclusion, both NOMAD and NNI-TPE prove to be valuable HPO tools for improving the effectiveness and alignment of LLMs through instruction-tuning. Their benefits extend to diverse instruction-following benchmarks, yielding fine-tuned models that handle complex instructions without updating most of the model's parameters. LLM tuning calls for a detailed, methodical approach to HPO, and further research may refine these processes to achieve even better performance.