Overview of "W-RAG: Weakly Supervised Dense Retrieval in RAG for Open-domain Question Answering"

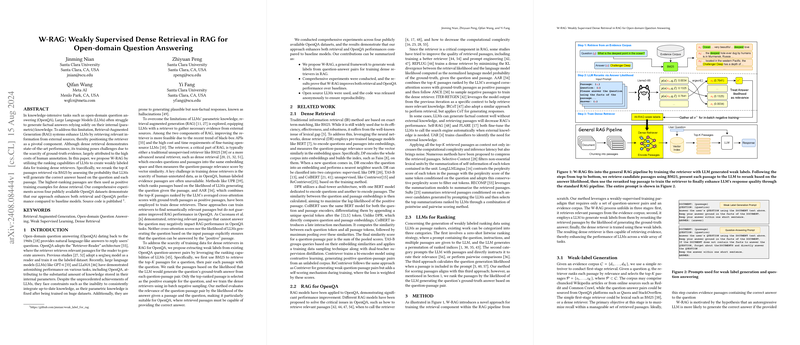

The paper "W-RAG: Weakly Supervised Dense Retrieval in RAG for Open-domain Question Answering" addresses how to train the retriever in a Retrieval-Augmented Generation (RAG) pipeline for Open-domain Question Answering (OpenQA) when passage-level relevance labels are unavailable. The authors propose the W-RAG framework, which derives weak supervision from the ranking capabilities of LLMs and uses it to generate training data for dense retrieval models.

Core Contributions

The main contributions of the paper can be summarized into three key points:

- W-RAG Framework: The authors introduce a novel approach for extracting weak labels from question-answer pairs using the ranking capabilities of LLMs. Candidate passages are reranked by the likelihood that an LLM generates the correct answer when conditioned on each passage, and the top-ranked passages serve as positive training examples for dense retrieval.

- Comprehensive Evaluation: The paper provides a thorough evaluation of the W-RAG framework across four well-known OpenQA datasets: MSMARCO QnA, NQ, SQuAD, and WebQ. The retrievers trained using W-RAG data demonstrated improved retrieval and OpenQA performance compared to baseline models.

- Open Source Code: The authors have released the source code to ensure reproducibility of the proposed framework, allowing other researchers to validate and expand upon their work.

Detailed Analysis

Dense Retrieval via Weak Supervision

Dense retrieval, typically performed with neural retrievers such as DPR and ColBERT, encodes questions and passages into a shared embedding space and scores relevance by vector similarity. However, producing ground-truth labels to train these models is resource-intensive because it requires human annotation. W-RAG aims to mitigate this by creating training data from existing OpenQA question-answer pairs, without explicit human-labeled passage relevance.
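The two scoring styles named above can be sketched in a few lines of Python. This is a minimal illustration that assumes hand-written toy vectors in place of real encoder outputs: `dpr_score` compares one question vector with one passage vector by inner product, while `colbert_score` applies ColBERT-style late interaction (MaxSim), matching each question token vector to its most similar passage token vector and summing the maxima. All function names and data here are hypothetical, and real ColBERT typically normalizes its token embeddings, which this sketch omits.

```python
Vector = list[float]

def dot(u: Vector, v: Vector) -> float:
    """Inner product, used here as the similarity between two embeddings."""
    return sum(a * b for a, b in zip(u, v))

def dpr_score(q_emb: Vector, p_emb: Vector) -> float:
    """DPR-style bi-encoder: a single vector per question and per passage."""
    return dot(q_emb, p_emb)

def colbert_score(q_tokens: list[Vector], p_tokens: list[Vector]) -> float:
    """ColBERT-style late interaction (MaxSim): each question token keeps
    its best-matching passage token, and the maxima are summed."""
    return sum(max(dot(q, p) for p in p_tokens) for q in q_tokens)

def rank(scores: dict[str, float]) -> list[str]:
    """Passage ids sorted by descending relevance score."""
    return sorted(scores, key=scores.get, reverse=True)

# Toy single-vector embeddings (made up for illustration).
q = [0.9, 0.1, 0.0]
passages = {"p1": [0.8, 0.2, 0.0], "p2": [0.1, 0.9, 0.3]}
dense_ranking = rank({pid: dpr_score(q, emb) for pid, emb in passages.items()})
print(dense_ranking)  # ['p1', 'p2']

# Toy per-token embeddings for late interaction (also made up).
q_tokens = [[1.0, 0.0], [0.0, 1.0]]
passage_tokens = {"pa": [[1.0, 0.0], [0.5, 0.5]], "pb": [[0.0, 1.0]]}
late = {pid: colbert_score(q_tokens, toks) for pid, toks in passage_tokens.items()}
print(late)  # {'pa': 1.5, 'pb': 1.0}
```

Late interaction stores one vector per passage token, which costs more space than a single vector per passage but lets each question term find its own match.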

Methodological Rationale

In W-RAG, BM25 first retrieves candidate passages for each question. An LLM then reranks the top-K candidates according to the likelihood that it generates the question's ground-truth answer when given each passage. These likelihoods act as probability-based relevance scores, yielding a weakly supervised dataset that is then used to train dense retrieval models such as DPR and ColBERT.
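The labeling pipeline described above can be sketched as follows, under heavy assumptions. BM25 is implemented directly with the common defaults k1=1.2 and b=0.75, chosen here for illustration. The LLM is replaced by `toy_answer_logprobs`, a hypothetical stand-in that simply rewards answer tokens appearing in the passage; a real system would feed the question and passage to an LLM and read off the log-probabilities of the ground-truth answer tokens. Scoring by the mean per-token log-probability is one length-normalized choice assumed here, and treating the single top reranked passage as the positive is likewise an assumption of this sketch; the paper's exact scoring and selection of positives may differ.

```python
import math
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase whitespace tokenization (a simplification)."""
    return text.lower().split()

def bm25_top_k(query: str, corpus: dict[str, str], k: int,
               k1: float = 1.2, b: float = 0.75) -> list[str]:
    """First stage: Okapi BM25 over a small in-memory corpus.
    Returns the ids of the k highest-scoring passages."""
    docs = {pid: tokenize(text) for pid, text in corpus.items()}
    n_docs = len(docs)
    avgdl = sum(len(toks) for toks in docs.values()) / n_docs
    df = Counter(term for toks in docs.values() for term in set(toks))
    query_terms = set(tokenize(query))
    scores = {}
    for pid, toks in docs.items():
        tf = Counter(toks)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            denom = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores[pid] = score
    return sorted(scores, key=scores.get, reverse=True)[:k]

def toy_answer_logprobs(question: str, passage: str, answer: str) -> list[float]:
    """HYPOTHETICAL LLM stand-in: one log-probability per answer token.
    A real implementation would prompt an LLM with `question` and `passage`
    and read the log-probs it assigns to the ground-truth `answer` tokens.
    This toy ignores the question and only rewards answer tokens that
    occur in the passage."""
    passage_terms = set(tokenize(passage))
    return [math.log(0.9 if tok in passage_terms else 0.1) for tok in tokenize(answer)]

def answer_likelihood(question: str, passage: str, answer: str) -> float:
    """Relevance score: mean per-token answer log-probability (assumed normalization)."""
    logprobs = toy_answer_logprobs(question, passage, answer)
    return sum(logprobs) / len(logprobs)

def weak_label(question: str, answer: str, corpus: dict[str, str], k: int):
    """Rerank BM25's top-k by answer likelihood; return (positive, ranking)."""
    candidates = bm25_top_k(question, corpus, k)
    reranked = sorted(candidates,
                      key=lambda pid: answer_likelihood(question, corpus[pid], answer),
                      reverse=True)
    return reranked[0], reranked

corpus = {
    "p1": "the eiffel tower eiffel tower photos and eiffel tower facts",
    "p2": "the tower stands in paris",
    "p3": "bananas are yellow",
}
question, answer = "where is the eiffel tower", "paris"
print(bm25_top_k(question, corpus, k=3))  # ['p1', 'p2', 'p3']
positive, ranking = weak_label(question, answer, corpus, k=3)
print(positive, ranking)                  # p2 ['p2', 'p1', 'p3']
```

In this toy case BM25 prefers `p1` because it repeats the query terms, while the likelihood reranker promotes `p2`, the only passage containing the answer "paris". That disagreement is the kind of signal the weak labels are meant to capture.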

Experimental Validation

The experiments conducted reveal some noteworthy numerical results:

- Enhanced Performance: Dense retrievers trained with weak supervision outperformed unsupervised baselines such as BM25, Contriever, and ReContriever on retrieval tasks. For example, on the MSMARCO QnA dataset, Recall@1 rose from 0.1647 with BM25 to 0.2023 with W-RAG-trained models, an absolute gain of about 0.038 (roughly 23% relative).

- OpenQA Metrics: When these retrievers were integrated into the OpenQA pipeline, the generated answers improved on F1, ROUGE-L, and BLEU, indicating that the passages retrieved by W-RAG-trained models help the LLM produce better answers.
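To make the metrics above concrete, the sketch below computes Recall@k for retrieval, plus token-level F1 and ROUGE-L for generated answers. It is a simplified illustration, not the paper's evaluation code, and all data is made up. Recall@k assumes the standard definition (the share of a question's relevant passages that appear in its top k, averaged over questions), which reduces to a hit rate when each question has one relevant passage. F1 uses the widely used SQuAD-style normalization (lowercasing, removing punctuation and the articles a/an/the). ROUGE-L is taken as the longest-common-subsequence F-measure with equal weight on precision and recall, and reusing the SQuAD normalization for it is an assumption made for brevity. BLEU is omitted.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)

# --- Retrieval metric (assumes at least one relevant passage per question) ---
def recall_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Share of a question's relevant passages found in its top-k results."""
    return len(set(ranked[:k]) & relevant) / len(relevant)

def mean_recall_at_k(runs: dict[str, list[str]], qrels: dict[str, set[str]], k: int) -> float:
    """Average Recall@k over all labeled questions."""
    return sum(recall_at_k(runs[q], qrels[q], k) for q in qrels) / len(qrels)

# --- Answer-quality metrics ---
def normalize(text: str) -> list[str]:
    """SQuAD-style: lowercase, drop punctuation and articles, split on whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()

def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (bag of words, order ignored)."""
    pred, ref = normalize(prediction), normalize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

def lcs_length(a: list[str], b: list[str]) -> int:
    """Longest common subsequence length via dynamic programming over prefixes."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]

def rouge_l_f1(prediction: str, reference: str) -> float:
    """ROUGE-L F-measure (beta = 1): only in-order matches count."""
    pred, ref = normalize(prediction), normalize(reference)
    lcs = lcs_length(pred, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(pred), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)

runs = {"q1": ["p3", "p1", "p7"], "q2": ["p2", "p9", "p4"]}  # made-up rankings
qrels = {"q1": {"p1"}, "q2": {"p2"}}                          # made-up labels
r1 = mean_recall_at_k(runs, qrels, k=1)  # q1 misses at rank 1, q2 hits
r2 = mean_recall_at_k(runs, qrels, k=2)
f1 = token_f1("France Paris", "Paris France")
rl = rouge_l_f1("France Paris", "Paris France")
print(r1, r2, f1, rl)  # 0.5 1.0 1.0 0.5
```

The answer pair shows how the two generation metrics differ: F1 ignores word order, so the reversed answer still scores 1.0, while ROUGE-L credits only the longest in-order match.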

Implications and Future Work

The implications of the research are twofold, impacting both practical and theoretical dimensions:

- Practical Impact: The retrieval improvements facilitate more accurate answer generation in OpenQA systems, making them more reliable for real-world applications where factual accuracy is crucial.

- Theoretical Implications: The paper underscores the potential of weakly supervised methodologies to approximate the performance of models trained on expensive, manually annotated datasets. It also opens up inquiries into the optimal characteristics of passages that most effectively enhance LLMs' answer generation performance.

Future Directions

Future work may examine more closely which types of passages contribute most to the accuracy of LLM-generated answers. As the authors observe, passages with substantial semantic overlap with the answer do not always yield the best performance in practical RAG settings. Further research could also explore ways to compress retrieved passages, reducing computational overhead while preserving the content most useful for OpenQA.

In conclusion, the W-RAG framework offers an effective way to improve dense retrieval in RAG systems when human-labeled passage relevance is unavailable. By deriving weak supervision from LLM answer likelihoods, it achieves competitive performance gains, suggesting that weakly supervised strategies are a promising option for broader use in AI and NLP.