Enhancing LLMs' Awareness of Source Relevance in Retrieval-Augmented Generation Systems

Introduction to REAR

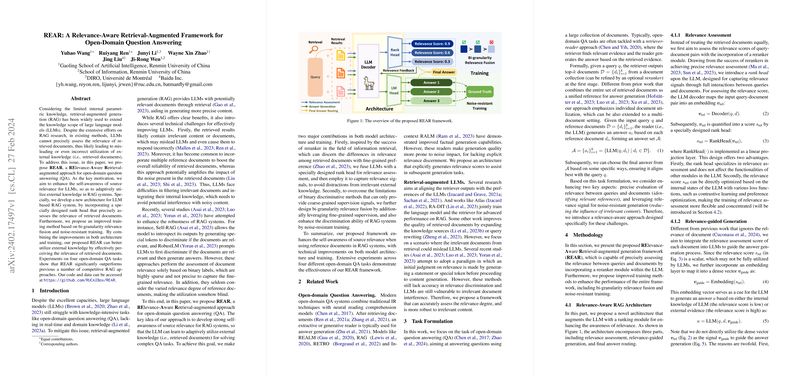

In retrieval-augmented generation (RAG) systems, a persistent challenge is making effective use of external knowledge. LLMs show strong capabilities across many domains. However, knowledge-intensive tasks such as open-domain question answering (QA) expose the limits of their internal knowledge and their limited ability to adapt to new or time-sensitive information. When documents are retrieved to fill this gap, the models often fail to judge accurately whether each document is relevant, so irrelevant or misleading documents can introduce misinformation into the generated answer. To address this, the paper proposes REAR (RElevance-Aware Retrieval-augmented approach for open-domain QA), a framework that improves LLMs' open-domain QA performance by making them explicitly aware of source relevance. It does so through changes to both the model architecture and the training methodology, aimed at assessing relevance more precisely and using retrieved knowledge adaptively according to that assessment.
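This summary does not spell out how relevance assessments drive "adaptive knowledge utilization" at answer time. The sketch below shows one simple, hypothetical policy, not necessarily REAR's: generate one answer per retrieved document, trust the answer from the highest-scoring document only if its relevance clears a threshold, and otherwise fall back to the answer the model produces without retrieval. `Candidate`, `choose_answer`, and the default threshold of 0.5 are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Candidate:
    doc_id: str
    relevance: float  # assumed relevance score in [0, 1] for this document
    answer: str       # answer generated while conditioning on this document


def choose_answer(
    candidates: List[Candidate],
    parametric_answer: str,
    threshold: float = 0.5,  # hypothetical cut-off, not taken from the paper
) -> Tuple[str, Optional[str]]:
    """Hypothetical relevance-gated selection.

    Returns (answer, doc_id). Uses the most relevant document's answer if its
    score reaches the threshold; otherwise returns the no-retrieval answer
    and None for the document id.
    """
    best = max(candidates, key=lambda c: c.relevance, default=None)
    if best is not None and best.relevance >= threshold:
        return best.answer, best.doc_id
    return parametric_answer, None


if __name__ == "__main__":
    cands = [Candidate("d1", 0.2, "Lyon"), Candidate("d2", 0.9, "Paris")]
    print(choose_answer(cands, parametric_answer="Marseille"))  # ('Paris', 'd2')
    print(choose_answer(cands, "Marseille", threshold=0.95))    # ('Marseille', None)
```

The fallback branch is what makes the use of retrieval adaptive in this sketch: a low-relevance document is ignored rather than allowed to override the model's own knowledge.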

Architecture and Methodology

REAR adds a rank head to the LLM: a dedicated component for assessing the relevance of retrieved documents. The rank head captures relevance signals from each retrieved document, giving the model an explicit basis for judging whether the external knowledge is pertinent to the query. The paper pairs this architectural change with a refined training approach built on two strategies: bi-granularity relevance fusion, which helps the model pick up fine-grained relevance cues, and noise-resistant training, which makes it more robust to the noise present in retrieved documents. Together, the architectural and training changes aim to make the answers LLMs generate for a query more accurate and reliable.
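To make the rank head and bi-granularity training more concrete, here is a minimal pure-Python sketch under stated assumptions that this summary does not confirm: the rank head is taken to be a linear projection from one LLM hidden-state vector to a single relevance logit; the coarse granularity is a binary relevant/irrelevant label trained with binary cross-entropy; and the fine granularity is a graded relevance label trained with a pairwise logistic ranking loss. `RankHead`, `bi_granularity_loss`, and the weight `alpha` are hypothetical names, and the paper's actual formulation may differ.

```python
import math
from typing import List, Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


class RankHead:
    """Hypothetical linear rank head: hidden-state vector -> one relevance logit."""

    def __init__(self, weights: Sequence[float], bias: float = 0.0):
        self.weights = list(weights)
        self.bias = bias

    def score(self, hidden: Sequence[float]) -> float:
        if len(hidden) != len(self.weights):
            raise ValueError("hidden size does not match rank-head weights")
        return sum(w * h for w, h in zip(self.weights, hidden)) + self.bias


def coarse_loss(logit: float, label: int) -> float:
    """Binary cross-entropy with logits for relevant (1) / irrelevant (0)."""
    return softplus(logit) - label * logit


def pairwise_loss(logit_better: float, logit_worse: float) -> float:
    """Logistic ranking loss: small when the more relevant doc scores higher."""
    return softplus(logit_worse - logit_better)


def bi_granularity_loss(
    logits: List[float],
    binary_labels: List[int],
    graded_labels: List[float],
    alpha: float = 1.0,  # assumed weight balancing the two granularities
) -> float:
    """Mean coarse BCE over documents plus alpha * mean pairwise ranking loss."""
    if not logits or not (len(logits) == len(binary_labels) == len(graded_labels)):
        raise ValueError("need equal-length, non-empty inputs")
    coarse = sum(coarse_loss(z, y) for z, y in zip(logits, binary_labels)) / len(logits)
    # Every ordered pair (i, j) where document i is graded more relevant than j.
    pairs = [
        (i, j)
        for i in range(len(logits))
        for j in range(len(logits))
        if graded_labels[i] > graded_labels[j]
    ]
    fine = sum(pairwise_loss(logits[i], logits[j]) for i, j in pairs) / len(pairs) if pairs else 0.0
    return coarse + alpha * fine


if __name__ == "__main__":
    head = RankHead(weights=[0.5, -0.25], bias=0.25)
    print([head.score(h) for h in ([2.0, 0.0], [0.0, 2.0])])  # [1.25, -0.25]
    good = bi_granularity_loss([3.0, -3.0], [1, 0], [2.0, 0.0])
    bad = bi_granularity_loss([-3.0, 3.0], [1, 0], [2.0, 0.0])
    print(good < bad)  # True
```

A real implementation would compute these losses over batched tensors with automatic differentiation; the scalar version only shows how a binary signal and a ranking signal can be summed into one training objective.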

Experimental Results

Extensive experiments on four open-domain QA tasks support REAR's effectiveness. The framework consistently outperformed several established RAG models, showing stronger relevance assessment and better use of external knowledge. Notably, REAR remained robust when irrelevant documents interfered with its inputs, a clear improvement over conventional models. These results indicate that the framework uses retrieved documents judiciously. Further analyses examine individual components, clarifying how the rank head, bi-granularity relevance training, and noise-resistant training each contribute to the performance gains.
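One common way to train for, or test, robustness to irrelevant documents is to mix sampled distractors into the retrieved set. The helper below is a hypothetical sketch of that data construction, not the paper's procedure: `inject_irrelevant` and `noise_sweep` are illustrative names, and the distractor pool is assumed to contain no relevant documents. The same noisy inputs could serve as labeled training examples or as evaluation inputs at increasing noise levels.

```python
import random
from typing import Dict, List, Sequence, Tuple


def inject_irrelevant(
    relevant: Sequence[str],
    distractor_pool: Sequence[str],
    num_noise: int,
    seed: int = 0,
) -> List[Tuple[str, int]]:
    """Mix `num_noise` sampled distractors into the relevant documents.

    Returns shuffled (document, label) pairs: 1 = relevant, 0 = injected noise.
    Assumes (hypothetically) that the pool holds no relevant documents.
    """
    if num_noise > len(distractor_pool):
        raise ValueError("distractor pool is smaller than num_noise")
    rng = random.Random(seed)  # seeded so the noisy inputs are reproducible
    noise = rng.sample(list(distractor_pool), num_noise)
    docs = [(d, 1) for d in relevant] + [(d, 0) for d in noise]
    rng.shuffle(docs)  # hide position cues about which documents are relevant
    return docs


def noise_sweep(
    relevant: Sequence[str],
    distractor_pool: Sequence[str],
    levels: Sequence[int],
    seed: int = 0,
) -> Dict[int, List[Tuple[str, int]]]:
    """Build one noisy input per noise level, e.g. to track accuracy as noise grows."""
    return {k: inject_irrelevant(relevant, distractor_pool, k, seed) for k in levels}


if __name__ == "__main__":
    pool = ["doc about rivers", "doc about chess", "doc about tea"]
    for level, docs in noise_sweep(["gold passage"], pool, levels=[0, 1, 3]).items():
        print(level, docs)
```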

Implications and Future Directions

REAR offers a promising path toward the long-standing challenge of using external knowledge effectively in LLMs, particularly in RAG settings. By emphasizing the model's self-awareness of source relevance, it lays a foundation that future work can extend to finer-grained relevance judgments, for example at the passage or sentence level. Applying the framework to a wider range of knowledge-intensive tasks is another open direction, potentially extending beyond open-domain QA to other tasks in the KILT benchmark.

Conclusion

In summary, REAR is a significant step toward improving how LLMs interact with external knowledge sources in RAG systems. Through its rank-head architecture and training approaches, it strengthens the model's judgment of document relevance and makes its use of external knowledge more adept and more resistant to noise. As a result, the framework improves the accuracy and reliability of LLM answers on open-domain QA tasks.