Characterizing the Reasoning Abilities of LLMs via 3-SAT Phase Transitions

The paper "Characterizing the Reasoning Abilities of LLMs via 3-SAT Phase Transitions" evaluates the reasoning capabilities of LLMs using the 3-SAT problem as a benchmark. 3-SAT, a classic NP-complete problem, is a robust testbed because its well-defined and widely studied phase transition separates easy instances from hard ones. The paper aims to determine the limits and robustness of LLMs' reasoning abilities by analyzing their performance across this phase transition.

Introduction

The introductory section outlines the growing interest in LLMs' reasoning capabilities. Emphasizing Leon Bottou's definition of reasoning as “the algebraic manipulation of previously acquired knowledge in order to answer a new question”, the authors motivate the need to scrutinize the purported reasoning abilities of LLMs. They consider both logical and deductive reasoning, evaluating LLMs such as GPT-4, and exploring how different prompting strategies and input forms affect performance.

3-SAT Problems

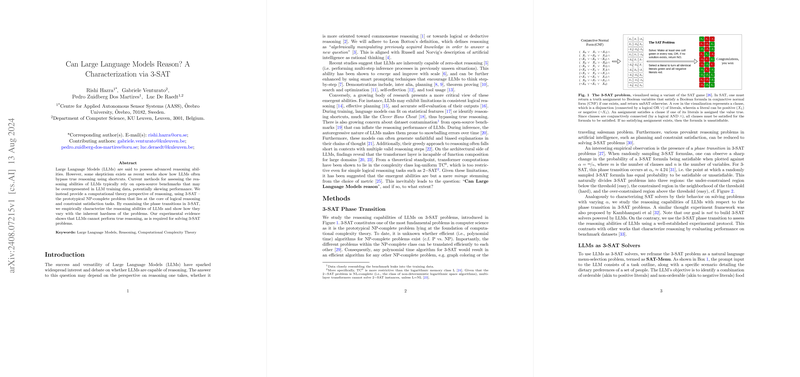

The 3-SAT problem matters in computational complexity because it is NP-complete: every problem in NP can be reduced to 3-SAT in polynomial time, so a polynomial-time algorithm for 3-SAT would yield one for every problem in NP. Random 3-SAT also exhibits a well-known phase transition: the probability that an instance is satisfiable drops sharply as the ratio (α) of clauses to variables crosses a critical value, empirically around α ≈ 4.27. Instances well below or well above this threshold tend to be easy to decide, while those near it are the hardest, which gives a principled way to separate inherently easy from inherently hard instances when assessing the reasoning capabilities of LLMs.
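The dependence of satisfiability on α can be observed on small instances. The following is a minimal sketch, not the paper's experimental setup: the function names, the brute-force check, and the instance sizes are assumptions chosen for illustration. It samples random 3-SAT formulas at several clause-to-variable ratios and estimates the fraction that are satisfiable.

```python
import itertools
import random


def random_3sat(num_vars, alpha, rng):
    """Sample a random 3-SAT formula with round(alpha * num_vars) clauses.

    A clause is a tuple of three non-zero ints: +v means variable v,
    -v means its negation. Each clause uses three distinct variables.
    """
    num_clauses = round(alpha * num_vars)
    formula = []
    for _ in range(num_clauses):
        variables = rng.sample(range(1, num_vars + 1), 3)
        formula.append(tuple(v if rng.random() < 0.5 else -v for v in variables))
    return formula


def is_satisfiable(formula, num_vars):
    """Brute-force check over all 2**num_vars assignments (small inputs only)."""
    for bits in itertools.product([False, True], repeat=num_vars):
        if all(any(bits[abs(lit) - 1] == (lit > 0) for lit in clause)
               for clause in formula):
            return True
    return False


def sat_fraction(num_vars, alpha, trials, seed=0):
    """Estimate P(satisfiable) at a given alpha from `trials` random formulas."""
    rng = random.Random(seed)
    hits = sum(is_satisfiable(random_3sat(num_vars, alpha, rng), num_vars)
               for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    # Illustrative sweep; sizes are kept tiny so brute force stays fast.
    for alpha in (2.0, 3.0, 4.0, 4.3, 5.0, 6.0, 8.0):
        print(f"alpha={alpha:.1f}  P(sat) ~ {sat_fraction(8, alpha, 30):.2f}")
```

With so few variables the transition is smooth rather than sharp; the drop becomes steeper as the number of variables grows, which is beyond what brute force can handle.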

Evaluating the Reasoning Ability of LLMs on 3-SAT

The authors conducted extensive experimentation to evaluate the reasoning capabilities of LLMs on 3-SAT problems, focusing on both decision and search variants. Specifically:

- 3-SAT Decision Problem: Results from the confusion matrix indicate that GPT-4 correctly identifies unsatisfiable scenarios while occasionally misclassifying satisfiable problems, especially in the hard region.

- 3-SAT Search Problem: GPT-4 mirrors the solver-like Easy-Hard-Easy phase transition pattern, performing well in easy regions but with accuracy dropping significantly around the hard phase transition area.

- Size of Solution Space: The performance of GPT-4 improves with a higher satisfiability ratio, suggesting a correlation between the size of the solution space and the model's ability to find a satisfying assignment.
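The three measurements above can be sketched in code. This is an illustrative sketch under stated assumptions, not the authors' evaluation harness: the helper names are hypothetical, and the satisfiability ratio is assumed here to mean the fraction of all 2^n assignments that satisfy the formula.

```python
import itertools


def satisfies(formula, assignment):
    """Search variant: check a proposed assignment (dict: variable -> bool)."""
    return all(any(assignment[abs(lit)] == (lit > 0) for lit in clause)
               for clause in formula)


def count_models(formula, num_vars):
    """Count satisfying assignments by enumeration (small inputs only)."""
    count = 0
    for bits in itertools.product([False, True], repeat=num_vars):
        assignment = {i + 1: value for i, value in enumerate(bits)}
        if satisfies(formula, assignment):
            count += 1
    return count


def satisfiability_ratio(formula, num_vars):
    """Assumed definition: satisfying assignments divided by 2**num_vars."""
    return count_models(formula, num_vars) / 2 ** num_vars


def confusion_matrix(truth, predicted):
    """Decision variant: tally SAT/UNSAT answers (True = satisfiable)."""
    cells = {"TP": 0, "FN": 0, "FP": 0, "TN": 0}
    for actual, guess in zip(truth, predicted):
        if actual:
            cells["TP" if guess else "FN"] += 1
        else:
            cells["FP" if guess else "TN"] += 1
    return cells


if __name__ == "__main__":
    formula = [(1, 2, 3), (-1, -2, -3)]
    print(satisfies(formula, {1: True, 2: False, 3: False}))
    print(satisfiability_ratio(formula, 3))
    print(confusion_matrix([True, True, False, False],
                           [True, False, False, False]))
```

In this framing, a false negative is a satisfiable instance that the model labels unsatisfiable, which is the kind of error described for the hard region.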

Impact of Prompting and Input Type

The paper further examines the impact of input types (SAT-Menu vs. SAT-CNF) and prompting techniques. The form of the input produces no significant change in performance. In-context learning yields some improvement for GPT-4 but has a negligible effect on the other models. Step-by-step instructions help somewhat in the initial easy-to-hard region but reduce performance beyond it.

Enhancing LLMs' Reasoning with Solvers

A critical insight emerges from the "SAT-Translate" approach, in which the LLM only translates the natural language problem into a CNF formula and a SAT solver then solves that formula. This method shows almost perfect performance, underscoring the effectiveness of pairing LLMs with symbolic solvers so that the computationally hard part of the task is handled by the solver rather than the model.
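The division of labor in this approach can be sketched as a two-stage pipeline. The code below is a minimal sketch under loud assumptions: `translate_with_llm` is a hypothetical stub that returns hard-coded clauses instead of calling a model, and the solver is a small textbook DPLL procedure standing in for whichever SAT solver the authors used.

```python
def translate_with_llm(problem_text):
    """Hypothetical stand-in for the LLM translation step.

    A real pipeline would prompt a model and parse its output into clauses;
    here the clauses are hard-coded purely for illustration.
    """
    return [(1, 2, -3), (-1, 3, 2), (-2, -3, 1)]


def simplify(formula, literal):
    """Assume `literal` is true: drop satisfied clauses, remove its negation."""
    result = []
    for clause in formula:
        if literal in clause:
            continue  # clause already satisfied
        result.append(tuple(lit for lit in clause if lit != -literal))
    return result


def dpll(formula, assignment=None):
    """Return a (possibly partial) satisfying assignment, or None if UNSAT."""
    assignment = dict(assignment or {})
    if not formula:
        return assignment  # every clause satisfied
    if any(len(clause) == 0 for clause in formula):
        return None  # an empty clause cannot be satisfied
    # Unit propagation: a single-literal clause forces that literal.
    for clause in formula:
        if len(clause) == 1:
            lit = clause[0]
            assignment[abs(lit)] = lit > 0
            return dpll(simplify(formula, lit), assignment)
    # Branch on the first literal of the first clause, then on its negation.
    lit = formula[0][0]
    for choice in (lit, -lit):
        trial = dict(assignment)
        trial[abs(choice)] = choice > 0
        result = dpll(simplify(formula, choice), trial)
        if result is not None:
            return result
    return None


def solve_problem(problem_text):
    """Translate with the (stubbed) LLM, then hand the formula to the solver."""
    return dpll(translate_with_llm(problem_text))


if __name__ == "__main__":
    print(solve_problem("a natural language description of the instance"))
```

Variables missing from the returned assignment are unconstrained and may take either value. The correctness of the final answer then rests on the translation being faithful, since the solver itself is exact.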

Comparative Performance Among LLMs

The comparative analysis across various state-of-the-art LLMs, including GPT-4, GPT-3.5, Llama, and others, highlights that GPT-4 consistently outperforms its peers. Performance trends reveal that:

- All LLMs show improved performance with a larger solution space, consistent reasoning abilities irrespective of input type, and significant gains when augmented with solvers.

- Unique to GPT-4 are its solver-like phase transition and its improvement with in-context learning; the other models exhibit an Easy-Hard-Hard pattern and gain little from in-context learning.

Implications and Future Directions

The implications of this research are manifold. Practically, the findings suggest employing LLMs in conjunction with symbolic solvers to tackle complex reasoning problems effectively. Theoretically, they reinforce the idea that LLMs alone struggle with inherently hard problems, highlighting a limitation in their reasoning capabilities. Future work might focus on enhancing the intrinsic reasoning abilities of LLMs and on exploring more sophisticated hybrid architectures that integrate symbolic methods.

In conclusion, the paper provides a detailed characterization of LLMs' reasoning abilities, delivering valuable metrics and insights into their performance on NP-complete problems. The integration of solver tools presents a promising direction for enhancing AI's practical reasoning capabilities.