Evaluation of Strategic Reasoning Limitations in LLMs through Game-Theoretical Benchmarks

Introduction to GTBench and its Purpose

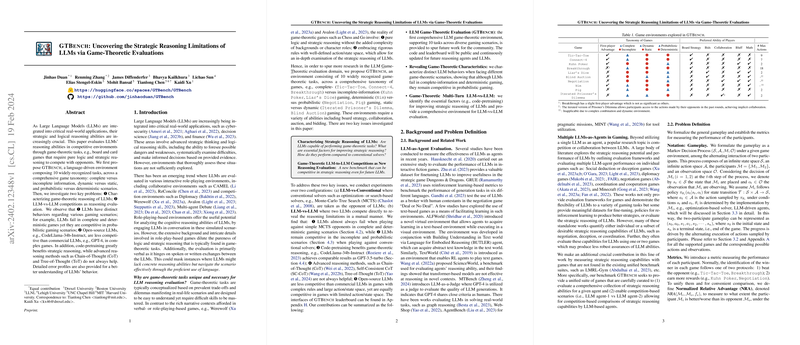

Integrating LLMs into high-stakes real-world applications demands a rigorous assessment of their strategic reasoning capabilities. This paper introduces GTBench, a language-driven benchmark environment that uses game-theoretic tasks to evaluate those capabilities. GTBench comprises 10 game-theoretic tasks that span three axes: complete vs. incomplete information, static vs. dynamic play, and probabilistic vs. deterministic outcomes. With this suite, the paper addresses two questions: how to characterize LLMs' strategic reasoning, and how LLMs perform when they compete directly against one another.
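The three axes above can be read as a small classification scheme. The following is a minimal sketch of that scheme, not GTBench's actual code: the class names, the `select` helper, and the two example entries are assumptions made for illustration. The entries are well-known games whose classification is uncontroversial; this summary does not enumerate the ten tasks, so the list is deliberately left incomplete.

```python
from dataclasses import dataclass
from enum import Enum


class Information(Enum):
    COMPLETE = "complete"
    INCOMPLETE = "incomplete"


class Dynamics(Enum):
    STATIC = "static"
    DYNAMIC = "dynamic"


class Chance(Enum):
    DETERMINISTIC = "deterministic"
    PROBABILISTIC = "probabilistic"


@dataclass(frozen=True)
class GameTask:
    """One benchmark task, tagged along the three taxonomy axes (hypothetical type)."""
    name: str
    information: Information
    dynamics: Dynamics
    chance: Chance


# Illustrative entries only; not the benchmark's task list.
EXAMPLE_TASKS = [
    GameTask("tic_tac_toe", Information.COMPLETE, Dynamics.DYNAMIC, Chance.DETERMINISTIC),
    GameTask("kuhn_poker", Information.INCOMPLETE, Dynamics.DYNAMIC, Chance.PROBABILISTIC),
]


def select(tasks, **criteria):
    """Return the tasks whose attributes match every given criterion."""
    return [
        task for task in tasks
        if all(getattr(task, axis) == value for axis, value in criteria.items())
    ]
```

For example, `select(EXAMPLE_TASKS, chance=Chance.PROBABILISTIC)` returns only the poker entry, which is the kind of slice needed to compare performance along a single axis.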

Key Observations and Findings

Strategic Reasoning in Diverse Game-Theoretic Scenarios

The experiments reveal that LLM performance varies substantially across game scenarios. Notably, LLMs tend to struggle in complete-information, deterministic games, yet they remain competitive in games with incomplete information and probabilistic outcomes. This contrast shows that the models' strategic reasoning is not uniform: its strengths and limitations depend on the type of game being played.
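A per-category comparison of this kind can be sketched as a simple aggregation over match outcomes. The code below is an illustration under stated assumptions, not the paper's metric: the function name, the category labels, and the record format are hypothetical, and the records are made-up placeholders rather than results from the paper.

```python
from collections import defaultdict


def win_rate_by_category(records):
    """Compute the fraction of wins per game category.

    `records` is an iterable of (category, outcome) pairs, where outcome
    is "win", "loss", or "draw" from the evaluated model's point of view.
    Draws and losses both count as non-wins in this simplified sketch.
    """
    wins = defaultdict(int)
    totals = defaultdict(int)
    for category, outcome in records:
        totals[category] += 1
        if outcome == "win":
            wins[category] += 1
    return {category: wins[category] / totals[category] for category in totals}


# Placeholder records for demonstration only; not data from the paper.
records = [
    ("complete-deterministic", "loss"),
    ("complete-deterministic", "draw"),
    ("incomplete-probabilistic", "win"),
    ("incomplete-probabilistic", "loss"),
]
rates = win_rate_by_category(records)
```

Grouping by category before averaging keeps a strong result in one kind of game from masking a weak result in another, which is the distinction this section draws.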

Comparison between Open-Source and Commercial LLMs

The findings suggest a performance gap between open-source and commercial LLMs, particularly in complex game scenarios that require sophisticated strategic planning and execution. Commercial LLMs such as GPT-4 display stronger strategic reasoning than their open-source counterparts. The research also indicates that code-pretraining benefits LLMs' strategic reasoning, which points to a promising direction for future LLM training methodologies.

Impact of Advanced Reasoning Methods

Contrary to expectations, more advanced reasoning approaches such as Chain-of-Thought (CoT) and Tree-of-Thought (ToT) do not universally improve LLM performance in strategic game play. The effectiveness of these methods appears to be context-dependent, suggesting that the incorporation of advanced reasoning paradigms into LLMs requires careful consideration of the specific task and environment.
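To make the comparison concrete, the difference between a direct prompt and a Chain-of-Thought prompt can be as small as the instruction appended to the game state. The sketch below assumes a hypothetical `build_prompt` helper and hypothetical prompt wording; it does not reproduce the prompts used in the paper. Tree-of-Thought is omitted because it requires generating and searching over multiple candidate thoughts rather than changing a single prompt.

```python
def build_prompt(observation: str, legal_moves: list[str], method: str = "direct") -> str:
    """Build a move-selection prompt for one turn (hypothetical helper).

    method="direct" asks for a move immediately; method="cot" asks the
    model to reason step by step before committing to a move.
    """
    base = (
        f"Game state:\n{observation}\n"
        f"Legal moves: {', '.join(legal_moves)}\n"
    )
    if method == "direct":
        return base + "Reply with exactly one legal move."
    if method == "cot":
        return base + (
            "Think step by step about the consequences of each move, "
            "then give exactly one legal move on the final line."
        )
    raise ValueError(f"unknown method: {method}")
```

Because both variants share the same state description and legal-move list, any difference in play can be attributed to the reasoning instruction rather than to the information the model receives.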

Implications and Future Directions

The GTBench environment serves as a valuable tool for the AI research community, facilitating a deeper understanding of LLMs' strategic reasoning abilities and limitations. By providing detailed error profiles and insights into factors influencing performance, this work paves the way for targeted improvements in LLM design and training. Future research may explore the integration of domain-specific knowledge and reasoning strategies to enhance LLMs' strategic competencies further.

Moreover, this work opens up discussions about the applicability of LLMs in real-world scenarios that demand strategic reasoning and decision-making. As LLMs continue to evolve, their potential role in decision support systems, negotiation, and other applications requiring nuanced strategic thinking warrants careful consideration and ongoing evaluation.

Contributions and Impact

This paper contributes to the understanding of strategic reasoning in LLMs. By mapping LLM performance across a variety of game-theoretic tasks, it lays a foundation for future research on strategic reasoning. The GTBench benchmark supports a fine-grained evaluation of LLMs and may encourage the development of models capable of more sophisticated strategic thought. As the field progresses, the insights from this research can inform the design of more capable LLMs for strategic reasoning domains.