Boosting Reward Model with Preference-Conditional Multi-Aspect Synthetic Data Generation

The paper "Boosting Reward Model with Preference-Conditional Multi-Aspect Synthetic Data Generation" introduces RMBoost, a synthetic data generation method designed to improve Reward Models (RMs), which are essential for aligning large language models (LLMs) with human preferences. RM training traditionally relies on preference datasets: pairs of responses to a prompt, each pair labeled with the preferred response. RMBoost generates these pairs by conditioning the second response on the first and on a pre-assigned preference label, aiming to address the shortcomings of previous synthetic data generation techniques.

Methodology

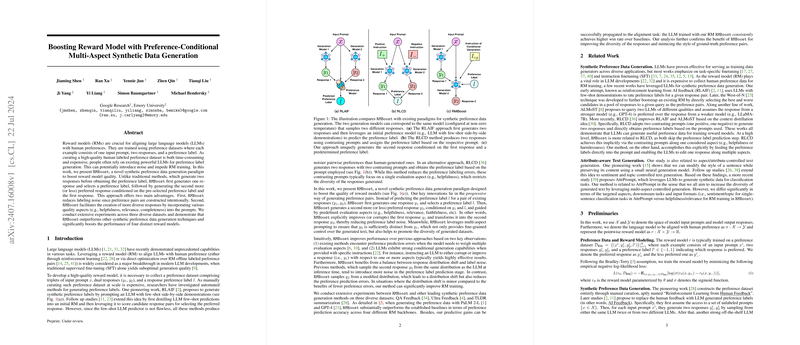

The RMBoost framework proceeds in three steps:

- Initial Response Generation: For each input prompt, RMBoost first generates a response using an LLM.

- Preference Label Assignment: A preference label (indicating more or less preferred) is then pre-assigned.

- Conditional Second Response Generation: RMBoost conditions the generation of a second response on the initial response, the pre-assigned preference label, and predefined multi-aspect evaluation instructions (e.g., helpfulness, relevance).

This approach ensures that the response pairs are not merely sampled LLM outputs but differ in deliberate, diverse ways. Multi-aspect conditioning lets the two responses vary along specific, intended dimensions, which reduces label noise and increases response diversity.
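The three steps above can be sketched in Python as follows. This is a minimal illustration, not the paper's implementation: the `generate` callable stands in for any LLM call, and the `PreferencePair` type, the `build_pair` function, the `ASPECTS` list, and the prompt wording are all hypothetical names and choices made for this example.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical aspect list; the text names helpfulness and relevance as examples.
ASPECTS = ("helpfulness", "relevance")


@dataclass
class PreferencePair:
    prompt: str
    chosen: str    # the preferred response
    rejected: str  # the less preferred response


def build_pair(
    prompt: str,
    generate: Callable[[str], str],  # assumed LLM wrapper: text in, text out
    second_is_better: bool,          # the pre-assigned preference label
    aspects: Sequence[str] = ASPECTS,
) -> PreferencePair:
    # Step 1: generate the initial response from the prompt alone.
    first = generate(prompt)

    # Step 2: the label is fixed before the second response exists.
    direction = "more" if second_is_better else "less"

    # Step 3: condition the second response on the first response,
    # the label, and the multi-aspect instructions (illustrative wording).
    instruction = (
        f"Prompt: {prompt}\n"
        f"Existing response: {first}\n"
        f"Write a new response that is {direction} preferred than the "
        f"existing one with respect to: {', '.join(aspects)}."
    )
    second = generate(instruction)

    if second_is_better:
        return PreferencePair(prompt, chosen=second, rejected=first)
    return PreferencePair(prompt, chosen=first, rejected=second)
```

How the label is chosen is not stated in the text above; a caller could, for instance, pass `random.random() < 0.5` as `second_is_better` so that both directions appear in the dataset.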

Evaluation and Results

The paper evaluates RMBoost against existing synthetic data generation methods such as RLAIF, West-of-N, and RLCD across three diverse datasets:

- QA Feedback: A dataset for long-form question answering.

- UltraFeedback: A dataset for general LLM alignment.

- TLDR Summarization: A dataset for summarization of Reddit posts.

The results indicate that RMBoost produces higher-quality synthetic preference data than these baselines. In the experiments, it improves the performance of several RM backbones (Gemini-Nano-1, Gemini-Nano-2, PaLM 2-XXS, and Gemma 2B). Notably, RMs trained on RMBoost data achieve higher preference prediction accuracy, and the gains hold when the synthetic data is mixed with real, human-labeled data.
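As a rough illustration of the metric, preference prediction accuracy can be read as the fraction of labeled pairs for which the RM scores the preferred response above the other one. The sketch below assumes that reading; the `score` callable and the `preference_accuracy` function are hypothetical names, and the paper's exact evaluation protocol may differ.

```python
from typing import Callable, Iterable, Tuple

# Each item is (prompt, preferred_response, other_response).
Pair = Tuple[str, str, str]


def preference_accuracy(
    pairs: Iterable[Pair],
    score: Callable[[str, str], float],  # assumed RM: (prompt, response) -> scalar
) -> float:
    total = 0
    correct = 0
    for prompt, preferred, other in pairs:
        total += 1
        # The RM is counted correct when it ranks the preferred response higher.
        if score(prompt, preferred) > score(prompt, other):
            correct += 1
    if total == 0:
        raise ValueError("no pairs to evaluate")
    return correct / total
```

Ties are counted as errors here, which is one possible convention among several.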

Implications and Future Work

Practically, RMBoost advances the methodology for creating synthetic preference data, making it a valuable tool for RM training where large-scale human-labeled datasets are impractical. Theoretically, it balances the trade-off between response distribution shift and label noise more effectively than previous methods. The result is a less biased RM and better downstream task performance.

Future developments might focus on optimizing RMBoost for different domains and extending its application to multi-modal data inputs. Enhancing the robustness and scalability of RMBoost will be crucial for broader adoption in diverse LLM applications.

Conclusion

RMBoost represents a significant methodological improvement for synthetic preference data generation and reward model training. By leveraging preference-conditional and multi-aspect synthetic data generation, RMBoost offers a pathway to more reliable and diverse synthetic datasets, enhancing the overall alignment and performance of LLMs. This work opens up new avenues for research in AI alignment and synthetic data generation, reaffirming the importance of carefully designed data creation methodologies in the development of advanced AI systems.