Introduction

Reinforcement Learning from Human Feedback (RLHF) has been central to the success of LLMs, and the quality of the optimized model output depends critically on the accuracy of the underlying reward model. Building a robust reward model in turn depends on obtaining high-quality preference data, a process that can be costly and labor-intensive. To address this bottleneck, the paper introduces a method for generating synthetic preference data to improve reward model training, which directly benefits LLM alignment.

Related Work

The problem is framed around the established understanding that collecting and curating high-quality preference data is crucial for modeling human preferences effectively. Prior strategies such as Best-of-N sampling have proven effective at improving LLM outputs by steering models toward favorable generations. However, the use of such strategies for reward model optimization has not been thoroughly explored. Similarly, self-training methods from the semi-supervised learning paradigm have shown promise in improving performance across various domains in AI, but their potential for reward modeling in LLMs remains untapped.

Approach and Contributions

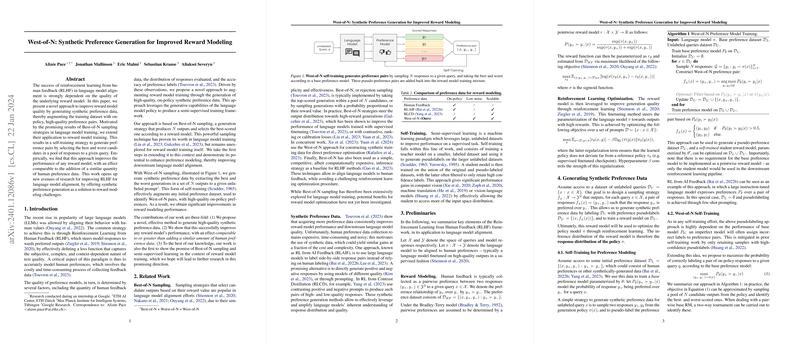

The paper describes a scheme termed West-of-N sampling, in which self-training is used to produce synthetic high-quality preference pairs by identifying the best and worst responses within a set of outputs generated for an input query. The expectation is that this approach yields substantial improvements in reward model performance. The paper supports this with empirical validation suggesting that the gain is comparable to adding an equivalent amount of human preference data. The authors highlight three principal contributions: a new method for creating synthetic preference data, validation that the method improves reward model performance, and the first evidence of the utility of Best-of-N sampling for reward model training.
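The best-and-worst selection step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `west_of_n_pair`, `generate`, `reward`, and `n` are hypothetical, the policy and the base reward model are passed in as plain callables, and any filtering or further processing the paper may apply to the resulting pairs is not shown.

```python
from typing import Callable, Tuple


def west_of_n_pair(
    query: str,
    generate: Callable[[str], str],       # hypothetical: samples one response from the policy
    reward: Callable[[str, str], float],  # hypothetical: base reward model score for (query, response)
    n: int = 8,                           # assumed pool size; not taken from the paper
) -> Tuple[str, str]:
    """Return a synthetic (preferred, rejected) pair for one query."""
    if n < 2:
        raise ValueError("n must be at least 2 to form a preference pair")

    # Sample a pool of N candidate responses for the same query.
    candidates = [generate(query) for _ in range(n)]

    # Score every candidate with the current reward model.
    scores = [reward(query, candidate) for candidate in candidates]

    # The highest-scoring candidate becomes the preferred response,
    # the lowest-scoring candidate becomes the rejected response.
    best_index = max(range(n), key=scores.__getitem__)
    worst_index = min(range(n), key=scores.__getitem__)
    return candidates[best_index], candidates[worst_index]
```

In a self-training loop, pairs produced this way for unlabeled queries would be added to the original preference data before the reward model is retrained.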

Empirical Validation and Avenues for Future Research

Experiments demonstrate the method's effectiveness across multiple datasets, with consistent improvements over existing synthetic data generation approaches such as RLAIF and RLCD. The findings hold across various initial data conditions, which supports the method's broad applicability. The paper also presents an extensive analysis of self-training strategies, which identifies the mechanisms that are pivotal to the approach's success. These analyses point to new research directions, such as extensions of self-training that could lead to further gains in reward model performance.

The paper lays the groundwork for future work on refining RLHF methodologies and emphasizes the important role that synthetic preference generation plays in the continued development of LLM alignment.