This paper introduces LoRA-Guard, a method for implementing content moderation guardrails for LLMs in a parameter-efficient manner, making it suitable for resource-constrained environments such as mobile devices (Elesedy et al., 3 Jul 2024). The core problem it addresses is that traditional model-based guardrails often require a separate, large guard model, which adds prohibitive computational overhead and duplicates knowledge already held by the chat LLM.

LoRA-Guard integrates the guardrail mechanism directly into the chat LLM using Low-Rank Adaptation (LoRA). It maintains a dual-path design:

- Generative Path: Uses the original, unmodified weights of the chat LLM to generate responses. This ensures that the model's primary task performance is not degraded.

- Guarding Path: Activates trained LoRA adapters applied to the chat LLM's weights and uses a separate, lightweight classification head to predict a harmfulness score from the LLM's internal representations. This path leverages the language understanding capabilities already present in the chat model.

Both paths share the tokenizer, the embedding layer, and the main transformer blocks. For guarding, the input passes through the embedding layer, then the transformer blocks with the LoRA adapters activated, and finally the guard classification head. For generation, the LoRA adapters are deactivated and the original language modeling head is used.
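The dual-path design can be illustrated with a toy forward pass. This is a minimal sketch in plain Python, not the paper's implementation: the class `DualPathModel`, the single weight matrix standing in for the transformer blocks, the `tanh` nonlinearity, the sigmoid binary score, and all numeric values are assumptions chosen for illustration.

```python
# Toy dual-path forward pass in pure Python (no ML framework).
# All names, shapes, and values are illustrative assumptions, not from the paper.
import math


def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(A, B):
    """Multiply matrix A by matrix B."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def add(M, N):
    """Element-wise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(r, s)] for r, s in zip(M, N)]


class DualPathModel:
    def __init__(self, embed, W, lm_head, lora_B, lora_A, guard_head):
        self.embed = embed            # shared embedding table: token id -> vector
        self.W = W                    # frozen weight standing in for the transformer blocks
        self.lm_head = lm_head        # original language modeling head
        self.lora_B = lora_B          # low-rank factor, shape (d_out x r)
        self.lora_A = lora_A          # low-rank factor, shape (r x d_in)
        self.guard_head = guard_head  # lightweight classification head (one output row)

    def _features(self, token_id, use_lora):
        x = self.embed[token_id]
        # Adapters are kept unmerged: the low-rank update is added only on the guarding path.
        W = add(self.W, matmul(self.lora_B, self.lora_A)) if use_lora else self.W
        return [math.tanh(h) for h in matvec(W, x)]

    def generate_logits(self, token_id):
        """Generative path: frozen weights plus the language modeling head."""
        return matvec(self.lm_head, self._features(token_id, use_lora=False))

    def harm_score(self, token_id):
        """Guarding path: adapted weights plus the guard head, squashed to (0, 1)."""
        (logit,) = matvec(self.guard_head, self._features(token_id, use_lora=True))
        return 1.0 / (1.0 + math.exp(-logit))


embed = [[1.0, 0.0], [0.0, 1.0]]
W = [[0.5, -0.2], [0.1, 0.3]]
lm_head = [[1.0, 0.0], [0.0, 1.0]]
guard_head = [[2.0, -1.0]]

model = DualPathModel(embed, W, lm_head, [[0.4], [-0.3]], [[1.0, 0.5]], guard_head)
no_adapter = DualPathModel(embed, W, lm_head, [[0.0], [0.0]], [[1.0, 0.5]], guard_head)
```

In this sketch the generation logits are identical whether or not non-zero adapters are attached, because the generative path never reads them, while the harmfulness score does change.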

Implementation Details:

- Training: Only the LoRA matrices (the pair of low-rank factors added to each adapted weight, whose rank is much smaller than the weight's dimensions) and the classification head are trained. The original chat model weights remain frozen, which significantly reduces training time and memory. Training uses standard cross-entropy loss on datasets labeled for harmful content.

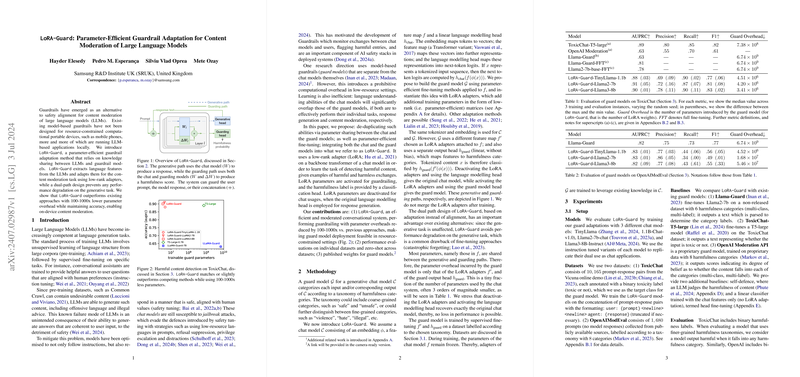

- Parameter Efficiency: The parameter overhead is limited to the LoRA weights and the classification head, which the paper reports to be 100-1000x smaller than deploying a separate guard model like Llama-Guard or fully fine-tuning an LLM for the guard task.

- Inference: The system switches between paths by activating or deactivating the LoRA adapters and selecting the appropriate head (language modeling or guard classification). Because the LoRA weights are not merged into the base weights, this keeps both paths available but might introduce a small latency cost when switching.
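To make the parameter-efficiency argument concrete, the sketch below counts the extra parameters of a LoRA-based guard. It relies on the standard LoRA count: an adapter of rank r on a weight of shape d_out x d_in adds r * (d_out + d_in) parameters. The layer shapes, the rank, and the assumption of a single linear head with bias are hypothetical and are not the configurations used in the paper.

```python
def lora_param_count(d_out, d_in, rank):
    """Parameters added by one LoRA adapter: B is (d_out x rank), A is (rank x d_in)."""
    return rank * (d_out + d_in)


def guard_overhead(layer_shapes, rank, hidden_size, num_labels):
    """Total extra parameters: all adapters plus an assumed linear head with bias."""
    adapters = sum(lora_param_count(o, i, rank) for o, i in layer_shapes)
    head = hidden_size * num_labels + num_labels
    return adapters + head


# Hypothetical setup: four adapted 2048 x 2048 projections, rank 8, one output label.
shapes = [(2048, 2048)] * 4
overhead = guard_overhead(shapes, rank=8, hidden_size=2048, num_labels=1)

# Parameters that would be touched by fully fine-tuning the same four matrices.
full = sum(o * i for o, i in shapes)

print(overhead, full, full // overhead)
```

Even in this small hypothetical case the adapters and head are roughly two orders of magnitude smaller than the matrices they adapt, and the gap widens as the rank shrinks relative to the hidden size.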

Experiments and Results:

- LoRA-Guard was evaluated using TinyLlama-1.1B, Llama2-7b, and Llama3-8B as base models.

- Datasets included ToxicChat (binary toxicity) and OpenAIModEval (8 harmfulness categories).

- Results show that LoRA-Guard matches or exceeds baselines such as Llama-Guard, the OpenAI Moderation API, and fully fine-tuned models on AUPRC, precision, recall, and F1, while drastically reducing the parameter overhead (e.g., ~4.5M parameters for LoRA-Guard on TinyLlama vs. ~6.74B for Llama-Guard).

- Cross-domain tests indicated that models trained on ToxicChat generalized reasonably well to OpenAIModEval, but the reverse showed a significant performance drop, highlighting the sensitivity to dataset characteristics and formats.
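For readers less familiar with the reported metrics, the following sketch computes precision, recall, and F1 for a binary harmful/not-harmful classifier from thresholded scores. The scores, labels, and the 0.5 threshold are made-up illustrative values, not results from the paper, and AUPRC (which sweeps over all thresholds) is not covered here.

```python
def precision_recall_f1(y_true, y_pred):
    """Binary precision, recall, and F1, treating label 1 as 'harmful'."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Made-up harmfulness scores and ground-truth labels for five prompts.
scores = [0.9, 0.8, 0.3, 0.6, 0.1]
labels = [1, 0, 1, 1, 0]
preds = [1 if s >= 0.5 else 0 for s in scores]  # assumed decision threshold of 0.5

precision, recall, f1 = precision_recall_f1(labels, preds)
```

With these values there are two true positives, one false positive, and one false negative, so all three metrics come out to 2/3.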

Practical Implications:

- Enables efficient deployment of content moderation guardrails on devices with limited compute power (e.g., smartphones).

- Avoids catastrophic forgetting or degradation of the LLM's primary generative capabilities, a common issue with full fine-tuning approaches.

- Offers a way to add safety features to existing open-source LLMs with minimal additional resources.

Limitations:

- Requires white-box access to the chat model's weights.

- The harmfulness taxonomy is fixed post-training; adapting to new categories requires retraining the LoRA adapters and head.

- Cross-domain generalization is not guaranteed and may require further tuning or investigation depending on the target domain's data characteristics.

In conclusion, LoRA-Guard presents a practical and effective approach for parameter-efficient content moderation by adapting existing LLMs using LoRA, significantly reducing computational costs while maintaining performance and avoiding negative impacts on the model's generative function.