An Academic Overview of LiveBench: A Contamination-Free LLM Benchmark

The paper under review introduces LiveBench, a benchmark designed to address two weaknesses of traditional evaluation frameworks for large language models (LLMs): test set contamination, where benchmark questions end up in a model's training data, and the biases introduced by crowdsourced or LLM-based judging. Both undermine accurate, fair comparisons between models. LiveBench, as described by White et al., responds by regularly updating its questions with recent information and by scoring answers automatically against objective ground-truth values, which removes the dependence on human or LLM judges and their biases.

Key Characteristics of LiveBench

The authors highlight three defining features of LiveBench:

- Regularly Updated Questions: Questions are drawn from recently released material, such as math competitions, arXiv papers, news articles, and datasets, so the benchmark stays current and is less likely to overlap with models' training data.

- Objective Scoring: Rather than relying on LLM or human judges, LiveBench scores each answer against an established ground-truth value, which makes the evaluation more reliable and reproducible.

- Diverse Task Range: The benchmark spans six broad categories (math, coding, reasoning, language, instruction following, and data analysis), allowing it to evaluate a wide range of LLM capabilities.
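To make judge-free, ground-truth scoring concrete, here is a minimal sketch; it is not LiveBench's actual scoring code. It assumes binary exact-match scoring after a simple, hypothetical normalization step, and it assumes the overall score is an unweighted mean of per-category means. The real benchmark uses task-specific scoring functions, some of which may award partial credit, and the names `normalize`, `score_answer`, and `benchmark_score` are illustrative only.

```python
from collections import defaultdict
from statistics import mean


def normalize(answer: str) -> str:
    # Hypothetical normalization: trim whitespace, lowercase, drop trailing periods.
    return answer.strip().lower().rstrip(".")


def score_answer(model_answer: str, ground_truth: str) -> float:
    # Binary exact match against the stored ground truth (an assumption;
    # real tasks may use task-specific or partial-credit scorers).
    return 1.0 if normalize(model_answer) == normalize(ground_truth) else 0.0


def benchmark_score(results):
    """results: iterable of (category, model_answer, ground_truth) tuples.

    Returns (per-category mean scores, overall score).
    """
    per_category = defaultdict(list)
    for category, model_answer, ground_truth in results:
        per_category[category].append(score_answer(model_answer, ground_truth))
    category_means = {c: mean(scores) for c, scores in per_category.items()}
    # Assumed aggregation rule: unweighted mean over categories, so a category
    # with many questions does not dominate the overall result.
    overall = mean(category_means.values())
    return category_means, overall
```

For example, `benchmark_score([("math", "42", "42"), ("math", "41", "42"), ("coding", "PASS", "pass")])` gives category means of 0.5 for math and 1.0 for coding, and an overall score of 0.75. No judge is consulted at any point: every score follows mechanically from the stored answers.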

Evaluation and Insights

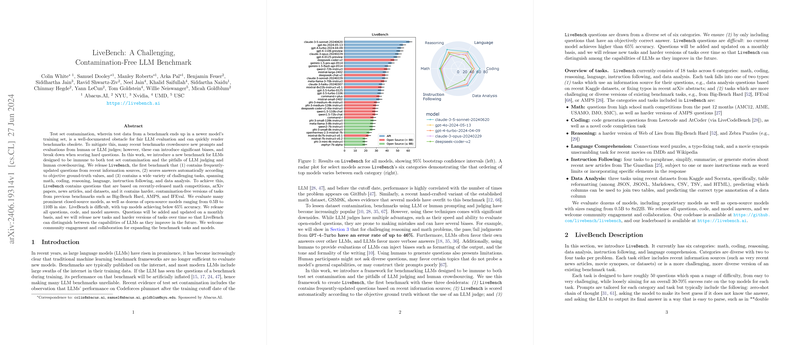

The authors evaluate a wide array of models, including both open- and closed-source models with up to 110B parameters. The results show that LiveBench is difficult: even the best-performing models score below 65% accuracy. The benchmark thus remains challenging across its full range of freshly updated questions while staying resistant to contamination.

A significant finding of the paper is that LLMs are unreliable judges for difficult tasks. The authors report that GPT-4-Turbo's pass/fail judgments have error rates as high as 46% on complex reasoning tasks, which motivates scoring against objective ground-truth answers instead.
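The judge error rate discussed above is, at its core, a disagreement rate between a judge's pass/fail verdicts and the verdicts implied by ground truth. A minimal sketch of that calculation follows; the function name `judge_error_rate` and the toy inputs are hypothetical and are not drawn from the paper's data.

```python
def judge_error_rate(judge_verdicts, ground_truth_correct):
    """Fraction of items where an LLM judge's pass/fail verdict disagrees
    with the verdict implied by comparing the answer to ground truth."""
    if len(judge_verdicts) != len(ground_truth_correct):
        raise ValueError("verdict lists must have the same length")
    if not judge_verdicts:
        raise ValueError("need at least one verdict")
    # Count items where the judge's True/False differs from the ground-truth True/False.
    disagreements = sum(j != g for j, g in zip(judge_verdicts, ground_truth_correct))
    return disagreements / len(judge_verdicts)
```

With toy inputs `judge_error_rate([True, True, False, True], [True, False, False, False])`, the judge wrongly passes two of four answers, giving an error rate of 0.5.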

Implications and Future Prospects

Practical Implications: For practitioners and developers of LLMs, LiveBench offers a contamination-resistant framework with objective scoring for evaluating model improvements. This yields more trustworthy assessments of model capabilities and can guide the development of models better suited to dynamic, real-world tasks.

Theoretical Implications: From a research perspective, LiveBench invites a reconsideration of existing evaluation metrics and methodologies. Such scrutiny could lead to wider adoption of automatically scored, contamination-resistant benchmarks and, in turn, a clearer understanding of what LLMs can and cannot do.

Future Developments: Looking ahead, LiveBench's adaptability is a notable strength. Its design supports continuous evolution, with new questions and tasks added regularly, so that as models improve, the benchmark can stay challenging and continue to differentiate between them.
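One way to see why regular updates matter is to filter a question pool by release date relative to a model's training cutoff. The sketch below is a hypothetical illustration of that principle, not part of LiveBench's published tooling: the `Question` fields and the `uncontaminated_subset` helper are assumptions, and the filter is only as trustworthy as the model's stated cutoff date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Question:
    # Hypothetical record layout for a benchmark question.
    question_id: str
    category: str
    release_date: date  # when the question (or its source material) was published


def uncontaminated_subset(questions, training_cutoff: date):
    # Keep only questions released strictly after the model's training cutoff,
    # so they could not have appeared in its training data (assuming the cutoff is accurate).
    return [q for q in questions if q.release_date > training_cutoff]
```

For instance, with questions released on 2024-01-15 (`q1`), 2024-05-01 (`q2`), and 2024-06-10 (`q3`), a model with a cutoff of 2024-04-30 would be evaluated only on `q2` and `q3`. Adding new questions over time keeps this filtered pool from shrinking to nothing as newer models with later cutoffs appear.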

Conclusion

LiveBench is a concerted effort to address the persistent problems of test data contamination and biased evaluation in LLM benchmarking. By combining regularly updated, diverse question sets with objective, automated scoring, it offers a more reliable basis for comparing models, with value for both applied and theoretical LLM research. Future updates and expansions will likely increase its usefulness and strengthen its role as a key benchmark for LLM assessment.