Analyzing Mixture-of-Experts in LLMs

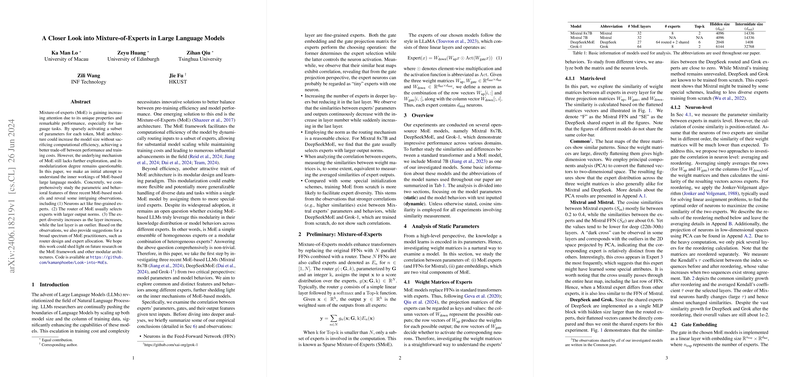

The paper "A Closer Look into Mixture-of-Experts in LLMs" provides an in-depth exploration of the Mixture-of-Experts (MoE) architecture, highlighting its potential for improving computational efficiency and model performance within the field of LLMs. The authors embark on a detailed analysis of MoE models, dissecting both their parametric configurations and operational behaviors. By investigating three prominent MoE-based LLMs—Mixtral 8x7B, DeepSeekMoE, and Grok-1—they scrutinize the underlying mechanics of these architectures through both static parameter examination and dynamic behavior analysis in response to textual inputs.

Key Observations and Insights

The paper presents several noteworthy observations. First, neurons within the feed-forward network (FFN) behave like "fine-grained experts," which suggests that MoE analysis should look below the expert level, at individual neurons. This neuron-level specialization offers a closer view of how MoE models work and how modular they really are. The authors also note that, especially in Mixtral, some experts have distinctive attributes that may reflect distinct learned behavior, inferred from the distinct crosses that appear in the similarity matrices.
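One way to read the "fine-grained experts" observation is that a gated FFN output is exactly a sum of per-neuron terms, each a scalar coefficient times one row of the down projection. The sketch below illustrates that decomposition in plain Python. It assumes a SiLU-gated FFN, and the names `w_gate`, `w_up`, `w_down`, and all helper functions are hypothetical choices made for illustration, not taken from the paper.

```python
import math
import random

def silu(z):
    """SiLU activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))

def vec_mat(x, w):
    """Row vector x (length n) times matrix w (n rows, m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def neuron_coefficients(x, w_gate, w_up):
    """One scalar per hidden neuron: silu(x . gate_i) * (x . up_i)."""
    gate = vec_mat(x, w_gate)
    up = vec_mat(x, w_up)
    return [silu(g) * u for g, u in zip(gate, up)]

def expert_ffn(x, w_gate, w_up, w_down):
    """Assumed gated FFN: (silu(x W_gate) * (x W_up)) W_down."""
    return vec_mat(neuron_coefficients(x, w_gate, w_up), w_down)

def neuron_contributions(x, w_gate, w_up, w_down):
    """Per-neuron output terms; row i is coefficient_i * w_down[i]."""
    coeffs = neuron_coefficients(x, w_gate, w_up)
    return [[c * v for v in row] for c, row in zip(coeffs, w_down)]

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

# Toy sizes and random weights, purely illustrative.
rng = random.Random(0)
d_model, d_hidden = 4, 6
x = [rng.gauss(0.0, 1.0) for _ in range(d_model)]
w_gate = random_matrix(d_model, d_hidden, rng)
w_up = random_matrix(d_model, d_hidden, rng)
w_down = random_matrix(d_hidden, d_model, rng)

full_output = expert_ffn(x, w_gate, w_up, w_down)
per_neuron = neuron_contributions(x, w_gate, w_up, w_down)
summed = [sum(column) for column in zip(*per_neuron)]

# The expert output equals the sum of its neurons' contributions.
assert all(abs(a - b) < 1e-9 for a, b in zip(full_output, summed))
```

Seen this way, the gate activation plays a role for neurons similar to the one the router plays for experts: it decides how much each neuron's output row contributes for a given input.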

Second, the router, which dynamically assigns each input to a subset of experts, tends to select the experts whose outputs have larger norms. This suggests that expert selection is closely tied to the magnitude of expert outputs. Expert diversity also changes with depth: experts in deeper layers tend to be less similar to one another, with the exception of the last layer, where similarity unexpectedly increases.
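Both observations in this paragraph can be probed with simple measurements. The sketch below is a minimal illustration, not the authors' procedure: `norm_routing_overlap` checks how many of the router's top-k experts are also among the k experts with the largest output norm for one token, and `mean_pairwise_cosine` averages the cosine similarity between flattened expert weights in one layer. All function names and the toy inputs are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of router logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(values, k):
    """Indices of the k largest values."""
    return sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]

def l2_norm(vector):
    return math.sqrt(sum(c * c for c in vector))

def norm_routing_overlap(router_logits, expert_outputs, k):
    """Fraction of the router's top-k experts that are also top-k by output norm."""
    chosen = set(top_k(softmax(router_logits), k))
    largest = set(top_k([l2_norm(o) for o in expert_outputs], k))
    return len(chosen & largest) / k

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (l2_norm(a) * l2_norm(b))

def mean_pairwise_cosine(flat_weights):
    """Average cosine similarity over all distinct pairs of experts."""
    n = len(flat_weights)
    sims = [cosine(flat_weights[i], flat_weights[j])
            for i in range(n) for j in range(i + 1, n)]
    return sum(sims) / len(sims)

# Toy token: four experts, each producing a 2-dimensional output.
router_logits = [2.0, 0.1, -1.0, 1.5]
expert_outputs = [[3.0, 4.0], [0.1, 0.2], [0.5, 0.5], [1.0, 2.0]]
overlap = norm_routing_overlap(router_logits, expert_outputs, k=2)
```

Averaging `overlap` over many tokens would give a rough per-layer measure of how closely routing follows output norm, and computing `mean_pairwise_cosine` per layer would trace the similarity trend across depth.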

The routing patterns of the different MoE implementations were investigated using normalized layer-expert arrangements. The findings point toward more refined strategies for expert allocation and routing, and toward tailoring the architecture to task-specific requirements. The similarity in behavioral patterns across the selected models offers a useful vantage point on how MoEs might gain modular capability through expert diversity and specialization.

Practical and Theoretical Implications

The paper's findings have several implications for future MoE LLMs. Practically, the neuron-level insights can inform how roles are assigned to neurons within FFN layers, potentially guiding architectural innovations that support efficient model scaling without a proportional increase in computational demand. The findings also indicate that norm-based routing strategies are compatible with effective expert selection, which gives a concrete basis for refining MoE router designs.

On the theoretical front, the exploration of neuron-level modularization within expert networks hints at broader lessons for how modular and adaptable systems can be built in AI architectures. Given the role these architectures play in handling increasingly diverse and complex datasets, understanding and optimizing how MoE frameworks operate is pertinent to advancing AI's adaptability and efficiency.

Future Research Directions

The paper opens several avenues for future research. Questions about how to optimize expert allocation and selection strategies for task-oriented goals continue to merit exploration. Additionally, the importance of training schemes, as indicated by striking differences between models trained from scratch and those potentially initialized by other means, calls for further study of how MoE training processes can be optimized.

Moreover, how fine-tuning affects expert behavior remains underexplored, warranting more comprehensive analyses after deployment to understand the long-term implications for performance and adaptability. This research connects fundamental insights with practical methodology, steering MoEs toward more robust, efficient, and interpretable implementations in large-scale language modeling.

In conclusion, the exploration of MoE LLMs by Lo et al. offers significant insights into both micro-level and macro-level operations of expert models, contributing valuably to the ongoing advancement of machine learning architectures. The strategic examination of expert behaviors and parametric distributions aligns with broader goals of achieving scalable, flexible, and high-performance models, establishing a comprehensive foundation for continued research in AI modular frameworks.