Overview of Mixture of Experts Architecture

The Mixture of Experts (MoE) architecture is a neural network design in which a set of specialized sub-networks, known as experts, is combined by a learned router that decides how much each expert contributes to a given input. Because typically only a few experts are active for any one input, model capacity can grow while the compute spent per input stays roughly constant. Traditional MoE architectures nonetheless scale poorly in practice: every expert must be held in memory, which makes them less practical for large-scale use.
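To make the routing idea concrete, here is a minimal sketch of a sparsely routed MoE layer in plain Python. It is an illustration under stated assumptions, not the implementation from any particular paper: the linear router, the `moe_forward` helper, and the two toy experts are all hypothetical names chosen for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, router_weights, experts, top_k=1):
    """Route x to the top_k highest-scoring experts and mix their outputs.

    router_weights holds one weight vector per expert (an assumed linear
    router); experts is a list of callables that map a vector to a vector
    of the same length.
    """
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in router_weights]
    probs = softmax(scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the selected experts
    out = [0.0] * len(x)
    for i in chosen:  # only the selected experts are evaluated
        y = experts[i](x)
        for j, y_j in enumerate(y):
            out[j] += (probs[i] / norm) * y_j
    return out, chosen

# Two toy experts standing in for full feed-forward blocks.
experts = [lambda v: [2.0 * a for a in v], lambda v: [-a for a in v]]
router_weights = [[5.0, 0.0], [0.0, 5.0]]

out, chosen = moe_forward([1.0, 0.0], router_weights, experts, top_k=1)
print(out, chosen)  # [2.0, 0.0] [0]
```

With `top_k=1` only one expert runs per input, which is why compute stays flat as experts are added. All experts still have to be stored, though, and that is the memory cost described above.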

Advancements in Parameter-Efficient Fine-Tuning

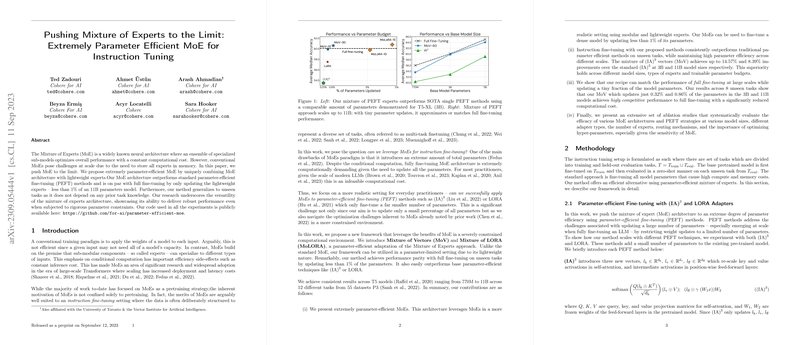

Researchers have developed a framework that makes MoE far more parameter-efficient. It pairs MoE with parameter-efficient fine-tuning (PEFT) methods, which substantially reduce the number of parameters that must be updated during fine-tuning. The two PEFT methods used are (IA)³ (Infused Adapter by Inhibiting and Amplifying Inner Activations) and Low-Rank Adaptation (LoRA). The resulting architecture matches the performance of full model fine-tuning while updating only a small fraction of the model's parameters, less than 1%. This is especially noteworthy because the method does not rely on prior knowledge of tasks, so it generalizes to new, unseen tasks.
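A quick back-of-the-envelope calculation shows why these PEFT methods touch so few parameters. The sketch below counts trainable parameters for a single weight matrix. The layer width (1024) and LoRA rank (4) are illustrative assumptions, not values taken from the research, and the helper names are hypothetical.

```python
def full_params(d_in, d_out):
    """Parameters updated when the whole d_out x d_in weight matrix is fine-tuned."""
    return d_in * d_out

def lora_params(d_in, d_out, rank):
    """LoRA trains two low-rank factors, B (d_out x rank) and A (rank x d_in),
    while the pretrained weight matrix stays frozen."""
    return rank * (d_in + d_out)

def scaling_vector_params(d):
    """An (IA)^3-style adapter trains one scaling vector of length d per rescaled activation."""
    return d

d, rank = 1024, 4  # assumed sizes, for illustration only
dense = full_params(d, d)
lora = lora_params(d, d, rank)
vector = scaling_vector_params(d)

print(dense, lora, vector)   # 1048576 8192 1024
print(f"{lora / dense:.2%}")  # 0.78%
```

Even before any expert mixing is added, the low-rank and vector adapters for this one layer sit well under 1% of the dense parameter count.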

Implementation and Practical Benefits

The proposed approach introduces two variants of MoE: Mixture of Vectors (MoV) and Mixture of LORA (MoLORA). In both, the traditional dense experts are replaced with lightweight adapters: (IA)³ vectors in MoV and LoRA adapters in MoLORA. Unlike dense experts, these lightweight experts require updates to far fewer parameters, which significantly reduces memory usage and computational demands during both training and inference. This efficiency does not come at the cost of performance: MoV and MoLORA outperform standard PEFT methods and perform on par with full model fine-tuning.
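The vector-based variant can be sketched in a few lines. This is a simplified reading of the idea, not the authors' code: it assumes a linear router followed by a softmax, and it assumes that the expert vectors are merged by a weighted average before rescaling the activation. The function name `mov_scale` and all values are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def mov_scale(h, x, router_weights, expert_vectors):
    """Rescale activation h with a router-weighted average of expert vectors.

    x is the router input, router_weights holds one weight vector per expert
    (assumed linear router), and expert_vectors holds one scaling vector per
    expert, each the same length as h.
    """
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in router_weights]
    probs = softmax(scores)
    # Soft merging: combine the experts into a single vector first ...
    merged = [
        sum(p * vec[j] for p, vec in zip(probs, expert_vectors))
        for j in range(len(h))
    ]
    # ... then apply it once as an element-wise rescaling of the activation.
    return [h_j * m_j for h_j, m_j in zip(h, merged)], probs

expert_vectors = [[1.0, 1.0], [3.0, 0.0]]  # the only "expert" parameters
router_weights = [[0.0, 0.0], [0.0, 0.0]]  # zero router gives uniform mixing

scaled, probs = mov_scale([1.0, 4.0], [1.0, 1.0], router_weights, expert_vectors)
print(probs)   # [0.5, 0.5]
print(scaled)  # [2.0, 2.0]
```

In this sketch each expert costs only one vector of parameters, and merging before applying means the activation is rescaled once regardless of how many experts exist. A LoRA-based variant would follow the same pattern with low-rank matrix pairs in place of the vectors.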

Comprehensive Evaluation

The models were evaluated extensively on 12 tasks spanning 55 datasets from the Public Pool of Prompts (P3) collection. The experiments used a range of Transformers from the T5 model family, with sizes up to 11 billion parameters. In summary, this extremely parameter-efficient MoE framework shows considerable improvements over standard PEFT methods, delivers performance competitive with full fine-tuning, and offers a promising option for large-scale model deployment. The research shows that MoE remains effective under tight parameter constraints and contributes to the broader work on efficient model fine-tuning. To encourage further exploration and application, the team has made their code publicly available.