Introduction

Mixture-of-Experts (MoE) models are an important direction for scaling neural network capacity efficiently. Most MoE models introduce sparsity by routing each input through only a subset of the available experts, which are sub-networks that specialize in different parts of the input space. The paper examined here dissects the routers responsible for this dynamic allocation in computer vision and evaluates how well each one supports a robust vision MoE system.

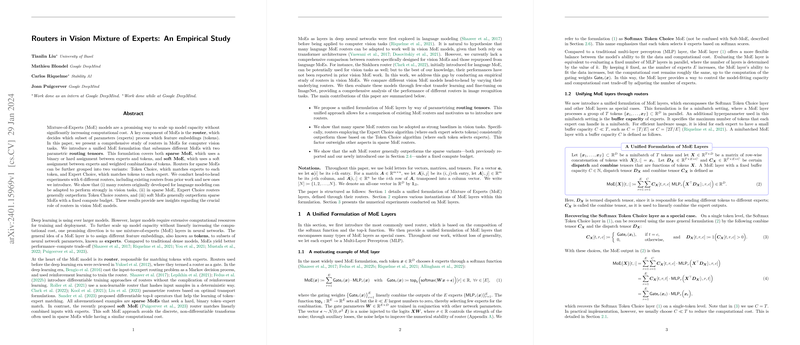

Unified MoE Formulation

The authors present a unified formulation for comparing and implementing different MoE layers, which divides them into two classes: sparse and soft. A sparse MoE makes a binary decision about whether a given expert processes a given input token. A soft MoE instead feeds each expert weighted mixtures of the input tokens, so that tokens are blended across experts rather than assigned to them.
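To make the unified view concrete, here is a minimal NumPy sketch, assuming a dispatch/combine formulation: a dispatch matrix `D` builds each expert's input "slots" as weighted sums of tokens, and a combine matrix `C` maps the slot outputs back to tokens. The function `moe_layer` and its arguments are hypothetical illustrations, not the paper's code. Under this view a sparse MoE has a 0/1 `D`, while a soft MoE has a dense, continuous `D`.

```python
import numpy as np

def moe_layer(X, D, C, experts, slots_per_expert):
    """Generic MoE layer in dispatch/combine form (illustrative sketch).

    X: (n, d) input tokens
    D: (n, e*s) dispatch weights; column j defines the input of slot j
    C: (n, e*s) combine weights; row i mixes slot outputs into token i
    experts: list of e callables, each mapping an (s, d) array to (s, d)
    """
    s = slots_per_expert
    slots_in = D.T @ X  # (e*s, d): each slot is a weighted sum of tokens
    # Expert i only sees its own contiguous block of s slots.
    slots_out = np.concatenate(
        [f(slots_in[i * s:(i + 1) * s]) for i, f in enumerate(experts)], axis=0
    )
    return C @ slots_out  # (n, d): each token mixes the slot outputs
```

With `D = C = np.eye(4)` and two experts of two slots each, tokens 0 and 1 go only to the first expert and tokens 2 and 3 only to the second, which is the sparse case. Replacing `D` with a dense matrix whose columns hold convex weights over tokens gives the soft case.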

Within sparse MoEs, the authors analyze two sub-types: Token Choice and Expert Choice. In Token Choice, each token is matched to one or more experts; Expert Choice inverts this relationship and lets each expert select the tokens it processes. The authors argue that Expert Choice generally performs better because it keeps expert utilization consistent: every expert processes a fixed number of tokens.
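The two sparse routing rules differ only in which axis of the token-to-expert score matrix the top-k selection runs over. The sketch below assumes softmax-normalized scores and returns 0/1 assignment matrices of shape (tokens, experts). The function names are hypothetical, and practical details such as Token Choice capacity buffers, token dropping, and auxiliary load-balancing losses are omitted.

```python
import numpy as np

def softmax(z, axis):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    ez = np.exp(z)
    return ez / ez.sum(axis=axis, keepdims=True)

def token_choice(logits, k):
    """Each token (row) selects its top-k experts. Returns an (n, e) 0/1 matrix."""
    probs = softmax(logits, axis=1)  # distribution over experts per token
    top = np.argsort(-probs, axis=1)[:, :k]  # (n, k) expert indices
    assign = np.zeros_like(probs)
    np.put_along_axis(assign, top, 1.0, axis=1)
    return assign

def expert_choice(logits, capacity):
    """Each expert (column) selects its top-`capacity` tokens. Returns an (n, e) 0/1 matrix."""
    probs = softmax(logits, axis=1)  # same scores, but top-k now runs over tokens
    top = np.argsort(-probs, axis=0)[:capacity, :]  # (capacity, e) token indices
    assign = np.zeros_like(probs)
    np.put_along_axis(assign, top, 1.0, axis=0)
    return assign
```

By construction, `expert_choice` gives every expert exactly `capacity` tokens, which is the balanced utilization discussed above; the price is that a token may be picked by several experts or by none. `token_choice` guarantees each token `k` experts but leaves the per-expert load uneven.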

Parametric Evaluation of Routers

Within this framework, the paper evaluates six routers, some previously used for natural language processing and some introduced by the authors. They comprise Token Choice and Expert Choice routers whose token-to-expert affinities are normalized with either Softmax or Sinkhorn, the new Sparsity-constrained Expert Choice router, and the SoftMoE router.
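Sinkhorn-based routers replace the plain softmax with an iterative normalization that pushes the affinity matrix toward balanced marginals. The following is a minimal sketch, assuming an entropy-regularized formulation in which each of the `n` tokens carries unit mass and each of the `e` experts receives `n / e`. The function name, the temperature `tau`, and the iteration count are illustrative choices, not values taken from the paper.

```python
import numpy as np

def logsumexp(z, axis):
    m = z.max(axis=axis, keepdims=True)
    return (m + np.log(np.exp(z - m).sum(axis=axis, keepdims=True))).squeeze(axis)

def sinkhorn(logits, n_iters=50, tau=1.0):
    """Balance an (n, e) token-to-expert affinity matrix (illustrative sketch).

    Returns P whose columns sum to n/e (equal load per expert) and whose
    rows sum approximately to 1 (one unit of mass per token).
    """
    n, e = logits.shape
    log_p = logits / tau
    log_row = np.zeros(n)               # log of row targets (1 per token)
    log_col = np.full(e, np.log(n / e)) # log of column targets (n/e per expert)
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match their targets, in log space.
        log_p = log_p - logsumexp(log_p, axis=1)[:, None] + log_row[:, None]
        log_p = log_p - logsumexp(log_p, axis=0)[None, :] + log_col[None, :]
    return np.exp(log_p)
```

The balanced matrix can then stand in for the softmax scores before the top-k step of either Token Choice or Expert Choice routing. Working in log space avoids overflow when the logits are large or `tau` is small.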

The authors find that, for sparse MoEs, the routing strategy (Token Choice versus Expert Choice) strongly affects performance, while the way the token-to-expert affinity matrix is parameterized matters much less. Soft MoE, which uses the SoftMoE router, outperforms the sparse variants when all models are given the same compute budget.
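The SoftMoE router can be sketched in a few lines, assuming the standard Soft MoE design: a learnable matrix `phi` scores every token against every slot, the dispatch weights are a softmax over tokens (so each slot is a convex mix of all tokens), and the combine weights are a softmax over slots. The names are hypothetical, and refinements such as normalizing `X` and `phi` are left out. Because each expert processes a fixed number of slots regardless of how many tokens arrive, expert compute is set by the slot count, which makes fixed-budget comparisons with sparse MoEs straightforward.

```python
import numpy as np

def softmax(z, axis):
    z = z - z.max(axis=axis, keepdims=True)
    ez = np.exp(z)
    return ez / ez.sum(axis=axis, keepdims=True)

def soft_moe(X, phi, experts, slots_per_expert):
    """Soft MoE layer (illustrative sketch).

    X: (n, d) tokens; phi: (d, e*s) learnable slot parameters;
    experts: list of e callables, each mapping an (s, d) array to (s, d).
    """
    logits = X @ phi             # (n, e*s) token-to-slot affinities
    D = softmax(logits, axis=0)  # dispatch: each column is a convex mix over tokens
    C = softmax(logits, axis=1)  # combine: each row is a convex mix over slots
    s = slots_per_expert
    slots_in = D.T @ X           # (e*s, d) soft slot inputs
    slots_out = np.concatenate(
        [f(slots_in[i * s:(i + 1) * s]) for i, f in enumerate(experts)], axis=0
    )
    return C @ slots_out         # (n, d) outputs, one per token
```

No token is ever dropped and no discrete top-k is taken, so the whole layer is differentiable with respect to `phi`; this is the sense in which soft MoE blends tokens instead of assigning them.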

Empirical Insights

Extensive experiments support these findings. Routers originally designed for language models perform strongly when adapted to vision, suggesting that these routing mechanisms are not tied to a single modality. Soft MoE also outperforms its sparse counterparts across the benchmarks considered, making it an efficient and effective approach to scaling vision models.

The routers are compared through large-scale pre-training on the JFT-300M dataset, followed by transfer evaluations that include ImageNet few-shot tasks. Expert Choice routers, in which each expert independently selects the tokens it processes, consistently outperform Token Choice routers. Soft MoE models, despite their different operating mechanism, achieve the best results overall, which argues for their continued relevance to the field.

Concluding Thoughts

On solid empirical grounds, the paper concludes that routers developed for language modeling transfer effectively to vision. Among sparse MoE models, those using Expert Choice routing are particularly effective. The success of soft MoE models shows that alternatives to hard, sparse routing can increase model capacity without incurring excessive computational cost.

This study underscores the importance of routers in vision MoE models and lays a foundation for future work. Soft MoEs in particular offer a promising way to advance MoE methods beyond conventional sparse routing.