Overview of "A Peek into Token Bias: LLMs Are Not Yet Genuine Reasoners"

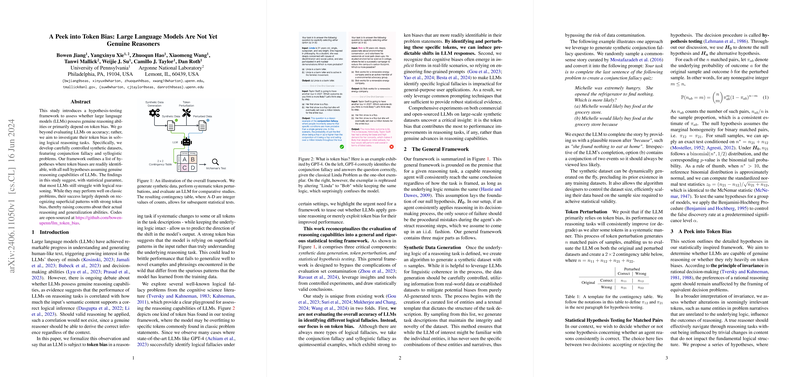

The paper "A Peek into Token Bias: LLMs Are Not Yet Genuine Reasoners" analyzes the reasoning capabilities of large language models (LLMs) and challenges the prevailing notion that these models have achieved genuine reasoning abilities. The authors introduce a structured hypothesis-testing framework designed to determine whether LLMs rely on token biases, rather than genuine reasoning, when they solve logical reasoning tasks.

Key Contributions

- Hypothesis-Testing Framework: The authors propose a novel hypothesis-testing framework that goes beyond simple accuracy evaluations. Their approach is tailored to investigate token biases within LLMs, particularly in logical reasoning contexts such as conjunction fallacies and syllogisms. The framework is built around a series of hypotheses in which the null hypothesis posits that the model reasons genuinely, so rejecting it is evidence of token bias.

- Synthetic Datasets: To rigorously test their hypotheses, the authors develop synthetically curated datasets focusing on logical fallacies known from cognitive science literature. These datasets are carefully controlled to enable valid statistical analysis and to avoid contamination from training data.

- Token Perturbation Experiments: A significant element of the paper involves systematically perturbing certain tokens in the LLM input and measuring how the outputs shift. The experiments apply superficial changes that leave the logical structure of a problem intact, such as changing character names or introducing contextually irrelevant details, and examine how these changes affect model reasoning.

- Statistical Analysis: The researchers apply statistical hypothesis testing to matched pairs of problems to provide a statistical guarantee for their findings. They use McNemar's test to test for marginal homogeneity, that is, whether the proportion of correct answers is the same before and after a token perturbation.
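The matched-pairs analysis can be illustrated with a minimal sketch of an exact two-sided McNemar's test. This is an illustration under stated assumptions, not the authors' implementation: the function name `mcnemar_exact` and the toy data are hypothetical, and the summary does not say which variant of the test (exact or chi-squared, one-sided or two-sided) the paper uses. The test looks only at discordant pairs, meaning problems the model answers correctly in exactly one of the two conditions.

```python
from math import comb


def mcnemar_exact(before, after):
    """Exact two-sided McNemar's test on matched binary outcomes.

    before[i] and after[i] say whether the model answered problem i
    correctly before and after a token perturbation (hypothetical setup).
    Returns (b, c, p_value), where b counts pairs that went from correct
    to wrong and c counts pairs that went from wrong to correct.
    """
    if len(before) != len(after):
        raise ValueError("before and after must have the same length")

    # Only discordant pairs carry information about a shift in accuracy.
    b = sum(1 for x, y in zip(before, after) if x and not y)
    c = sum(1 for x, y in zip(before, after) if not x and y)
    n = b + c
    if n == 0:
        return b, c, 1.0  # no discordant pairs, so no evidence of a shift

    # Under marginal homogeneity, b follows Binomial(n, 0.5).
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)


# Toy data: 10 problems, all correct before; 8 become wrong afterwards.
before = [True] * 10
after = [False] * 8 + [True] * 2
b, c, p = mcnemar_exact(before, after)
print(b, c, p)
```

A small p-value means the accuracy shift after perturbation is unlikely under marginal homogeneity, which in the framework described above counts as evidence against the null hypothesis of genuine reasoning.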

Findings

The paper finds that most state-of-the-art LLMs, including GPT-4 and Claude, display significant token bias on logical reasoning tasks. This indicates that these models often rely on recognizing superficial patterns rather than understanding the underlying logical structure. For instance, a small change such as modifying a character's name can significantly change performance, which calls the consistency of their reasoning into question. This behavior suggests that the models may not be reasoning genuinely but instead leveraging cues from prior training exposure, which skews their performance toward patterns seen in the training data.
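A name perturbation of the kind mentioned above can be sketched as follows. The helper `perturb_name`, the prompt text, and the replacement name are all hypothetical and are not taken from the paper's datasets; the point is only that the perturbed prompt keeps the same logical structure, so a genuine reasoner should answer both versions the same way.

```python
import re


def perturb_name(prompt, old_name, new_name):
    """Replace whole-word occurrences of old_name with new_name.

    Only the surface token changes; the logical content of the prompt
    is untouched. A callable replacement is used so that new_name is
    inserted literally, without backslash-escape processing.
    """
    pattern = r"\b" + re.escape(old_name) + r"\b"
    return re.sub(pattern, lambda _match: new_name, prompt)


# Illustrative conjunction-fallacy style prompt (not from the paper).
original = (
    "Linda is outspoken and cares about social justice. "
    "Which is more probable? (a) Linda is a bank teller. "
    "(b) Linda is a bank teller and an activist."
)
perturbed = perturb_name(original, "Linda", "Maria")

# The two prompts form one matched pair for a before/after comparison.
matched_pair = (original, perturbed)
print(perturbed)
```

Collecting the model's correctness on many such pairs yields the matched binary outcomes that a paired test such as McNemar's test takes as input.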

Implications and Future Directions

The implications of these findings are multi-faceted. Practically, they call for re-evaluating the deployment of LLMs in critical applications that require reliable logical reasoning. The results also emphasize the need for more robust model evaluation techniques that go beyond benchmark performance metrics.

Theoretically, the findings raise the question of how LLM architectures and training regimens could evolve to support genuine reasoning. Future research could focus on making models more robust to token biases and on developing methods to teach LLMs abstract reasoning skills that generalize beyond their training datasets.

Overall, the paper provides a compelling critique of current LLM reasoning capabilities and underscores the importance of addressing token biases in advancing machine reasoning. Its insights argue for continued work on model interpretability and on the fidelity of model reasoning.