Overview of Automatic Instruction Evolving for LLMs

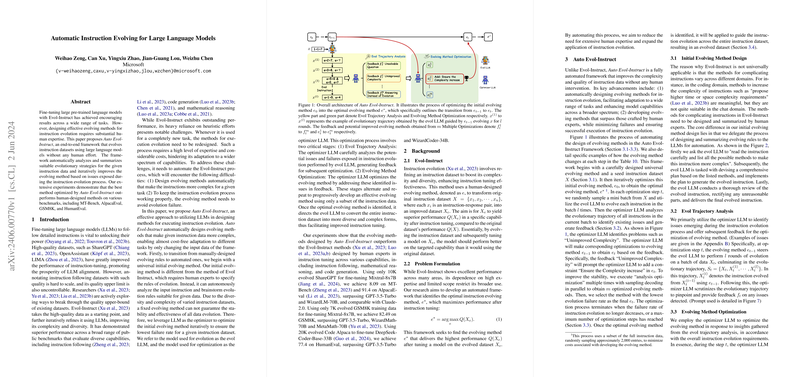

The paper "Automatic Instruction Evolving for LLMs" introduces Auto Evol-Instruct, a framework that automates the evolution of instruction datasets used to fine-tune LLMs. It removes the need for humans to design evolving strategies: LLMs analyze the given instruction data, summarize suitable evolving strategies, and iteratively refine those strategies to increase the complexity and diversity of the instructions.

Core Contributions

Auto Evol-Instruct replaces manually designed evolving methods with methods that LLMs design and refine themselves. The framework's key features are:

- Automation of Evolving Methods: The framework designs evolving methods automatically, so adapting it to a new task is almost cost-free and requires only a change in input data. The initial evolving method has the LLM analyze the input instruction and propose evolution rules suited to it.

- Iterative Optimization: The framework uses an evol LLM to execute instruction evolution and an optimizer LLM to refine the evolving method. At each step, the optimizer LLM analyzes the evolution trajectories for failures and revises the method to lower the failure rate.

- Demonstrated Superiority: Extensive experiments confirm that Auto Evol-Instruct-designed methods outperform human-designed Evol-Instruct methods across diverse benchmarks like MT-Bench, AlpacaEval, GSM8K, and HumanEval.
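The interplay between the evol LLM and the optimizer LLM described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the paper's implementation: the function names (`is_failure`, `failure_rate`, `optimize_method`), the toy failure check, the candidate-selection rule, and both stub "LLMs" are hypothetical stand-ins chosen so the example runs without any model or API.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces: each "LLM" is modeled as a plain function.
EvolLLM = Callable[[str, str], str]  # (evolving_method, instruction) -> evolved instruction
Trajectory = List[Tuple[str, str]]   # (original, evolved) pairs
OptimizerLLM = Callable[[str, Trajectory], str]  # (method, trajectory) -> revised method


def is_failure(original: str, evolved: str) -> bool:
    """Toy failure check (assumption): empty or unchanged output counts as a failure."""
    return not evolved.strip() or evolved.strip() == original.strip()


def failure_rate(method: str, instructions: List[str],
                 evol_llm: EvolLLM) -> Tuple[float, Trajectory]:
    """Evolve every instruction with `method`; return the failure rate and the trajectory."""
    if not instructions:
        raise ValueError("instructions must be non-empty")
    trajectory = [(ins, evol_llm(method, ins)) for ins in instructions]
    failures = sum(is_failure(orig, evolved) for orig, evolved in trajectory)
    return failures / len(instructions), trajectory


def optimize_method(initial_method: str, train_batch: List[str], dev_batch: List[str],
                    evol_llm: EvolLLM, optimizer_llm: OptimizerLLM,
                    steps: int = 3, candidates: int = 2) -> Tuple[str, float]:
    """Iteratively refine the evolving method, keeping the lowest dev-set failure rate."""
    best_method = initial_method
    best_rate, _ = failure_rate(best_method, dev_batch, evol_llm)
    for _ in range(steps):
        # 1. The evol LLM applies the current method; the trajectory records what happened.
        _, trajectory = failure_rate(best_method, train_batch, evol_llm)
        # 2. The optimizer LLM inspects the trajectory and proposes revised methods.
        proposals = [optimizer_llm(best_method, trajectory) for _ in range(candidates)]
        # 3. Keep a proposal only if it lowers the failure rate on held-out data.
        for proposal in proposals:
            rate, _ = failure_rate(proposal, dev_batch, evol_llm)
            if rate < best_rate:
                best_method, best_rate = proposal, rate
    return best_method, best_rate


# Stub models for demonstration only; real use would call actual LLMs here.
def stub_evol_llm(method: str, instruction: str) -> str:
    if "add a constraint" in method:
        return instruction + " Answer in under 50 words."
    return instruction  # unchanged output, which the toy check treats as a failure


def stub_optimizer_llm(method: str, trajectory: Trajectory) -> str:
    if any(is_failure(orig, evolved) for orig, evolved in trajectory):
        return method + " Always add a constraint."
    return method


if __name__ == "__main__":
    method, rate = optimize_method(
        "Make the instruction more complex.",
        train_batch=["Sort a list.", "Explain recursion."],
        dev_batch=["Sum two numbers."],
        evol_llm=stub_evol_llm,
        optimizer_llm=stub_optimizer_llm,
    )
    print(method, rate)
```

Modeling both LLMs as plain callables keeps the loop independent of any provider, which mirrors the summary's point that adapting to a new task should only require changing the input data.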

Experimental Results

The framework was tested on three primary capabilities: instruction following, mathematical reasoning, and code generation, using datasets such as ShareGPT, GSM8K, and Code Alpaca. The experiments revealed:

- For instruction following, models trained on data evolved by Auto Evol-Instruct achieved an MT-Bench score of 8.09 and an AlpacaEval score of 91.37, comparable to advanced models like Claude-2.0.

- For mathematical reasoning, the framework achieved an accuracy of 82.49% on GSM8K, surpassing GPT-3.5-Turbo and leading models such as MetaMath-70B.

- For code generation, the framework achieved a score of 77.4 on HumanEval, comparable to models trained on significantly larger datasets.

Implications

The optimization carried out by Auto Evol-Instruct suggests several practical and theoretical benefits:

- Reduced Human Expertise Requirement: By automating the evolution process, the framework alleviates the dependency on human expertise, opening up possibilities for fine-tuning across broader task spectrums with minimal cost.

- Enhanced Diversity and Complexity: The evolved data is more complex and more diverse, two properties that strongly affect model performance and alignment. This suggests that the framework can raise the quality upper bound of existing datasets.

Speculations for Future Developments

Looking forward, Auto Evol-Instruct points to several possible directions:

- Scaling Across Diverse Domains: With its ability to automatically derive optimal evolving strategies, Auto Evol-Instruct could potentially be applied to numerous domains beyond the tested areas, including truthfulness in AI responses and more sophisticated reasoning tasks.

- Adapting to New LLMs: Because the process is automated, newer LLMs could be substituted as the evol and optimizer models, so the framework's applicability could grow alongside model advancements.

- Broader Dataset Utilization: Future iterations could extend to larger datasets and more varied tasks, enhancing generalization capabilities and robustness.

In summary, Auto Evol-Instruct offers a scalable, efficient way to evolve instruction data for LLM tuning through automation and iterative optimization. In the reported experiments it outperforms the manually designed Evol-Instruct methods, and it does so without requiring human-designed evolving strategies.