Instruction Fusion: Advancements in Prompt Evolution for Code Generation

The paper "Instruction Fusion: Advancing Prompt Evolution through Hybridization" by Weidong Guo et al. introduces a method for improving prompt generation for code large language models (Code LLMs). The approach, called Instruction Fusion (IF), addresses limitations of existing prompt evolution techniques such as Evol-Instruct. It merges two distinct instructions into one, producing more diverse and complex prompts for code generation tasks.

Limitations of Existing Methods

The paper begins by analyzing the limitations of current methods such as Evol-Instruct. Evol-Instruct improves Code LLMs by generating new instructions that add constraints to existing prompts. This does increase the complexity and diversity of the instructions, but it runs into several problems:

- Complexity accumulates with each round of evolution, and once the added constraints become too intricate they can overburden the LLM.

- Newly added constraints may not fit the context of the original instruction, leading to a mismatch in difficulty between the original and evolved instructions.

- Each evolved instruction stays anchored to its initial prompt, so the process cannot introduce genuinely new objectives, which limits diversity.
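The third limitation can be illustrated with a toy model. This is a simplification, not Evol-Instruct's actual procedure: Evol-Instruct asks an LLM to rewrite a prompt, whereas here each round simply appends one constraint from a hypothetical list (`CONSTRAINTS`), and `evolve_by_constraints` is an illustrative name. The point is only to show that every descendant keeps the seed's objective while its length keeps growing.

```python
import random

# Hypothetical constraint pool for illustration only. Real Evol-Instruct asks
# an LLM to rewrite the prompt instead of appending fixed strings.
CONSTRAINTS = [
    "Handle empty input.",
    "Run in O(n log n) time.",
    "Do not use built-in sort functions.",
    "Return results as a dictionary.",
]


def evolve_by_constraints(seed: str, rounds: int, rng: random.Random) -> list[str]:
    """Toy constraint-based evolution: each round appends one unused constraint.

    Returns the whole lineage, starting with the seed itself.
    """
    history = [seed]
    unused = CONSTRAINTS.copy()
    current = seed
    # Stop early if we run out of distinct constraints.
    for _ in range(min(rounds, len(unused))):
        constraint = unused.pop(rng.randrange(len(unused)))
        current = f"{current} {constraint}"
        history.append(current)
    return history


if __name__ == "__main__":
    seed_prompt = "Write a function that sorts a list of integers."
    lineage = evolve_by_constraints(seed_prompt, 3, random.Random(0))
    for step, prompt in enumerate(lineage):
        # Print the round number, prompt length, and prompt text.
        print(step, len(prompt), prompt)
```

Every printed prompt begins with the seed text, which is the kind of anchoring that Instruction Fusion aims to break by combining two unrelated seeds.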

Introduction of Instruction Fusion

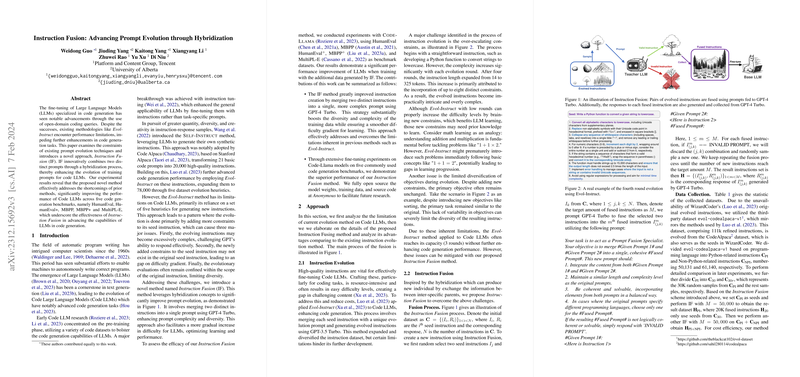

To counter these limitations, the authors propose Instruction Fusion. By combining two distinct prompts, the technique increases the complexity and diversity of the synthesized prompt without the steep rise in difficulty that stacking constraints can cause. GPT-4 Turbo performs the fusion, handling both the instructions and their responses.

In the core step, two seed instructions are selected at random and fed to GPT-4 Turbo, which writes a single hybrid instruction that must remain coherent and operable, that is, solvable as a concrete task. The authors stress that the fusion process is designed to keep the new prompt's length and difficulty balanced relative to the two instructions it combines.
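The fusion step can be sketched in a few lines under stated assumptions. `FUSION_TEMPLATE`, `fuse_random_pair`, and the `max_len_ratio` length filter are hypothetical stand-ins, not the paper's prompt or filtering rules, and `llm` is any callable that sends a prompt to a model such as GPT-4 Turbo and returns its text. Generating the response for the fused instruction would be a separate model call and is omitted here.

```python
import random
from typing import Callable, Optional

# Hypothetical fusion prompt; the paper's actual GPT-4 Turbo prompt is not reproduced here.
FUSION_TEMPLATE = (
    "Combine the two programming tasks below into ONE new, coherent task that a "
    "programmer could actually solve. Keep its length and difficulty close to "
    "those of the originals.\n\n"
    "Task A:\n{a}\n\nTask B:\n{b}\n\nFused task:"
)


def build_fusion_prompt(a: str, b: str) -> str:
    """Fill the hypothetical template with the two seed instructions."""
    return FUSION_TEMPLATE.format(a=a, b=b)


def fuse_random_pair(
    seeds: list[str],
    llm: Callable[[str], str],
    rng: random.Random,
    max_len_ratio: float = 1.5,
) -> Optional[str]:
    """Sample two seeds, ask the LLM to fuse them, and apply a crude length check.

    Returns the fused instruction, or None if it is empty or too long.
    """
    a, b = rng.sample(seeds, 2)  # two seeds drawn from distinct positions
    fused = llm(build_fusion_prompt(a, b)).strip()
    # Hypothetical filter: reject fusions much longer than the longer parent,
    # a rough proxy for keeping length balanced with the source instructions.
    if not fused or len(fused) > max_len_ratio * max(len(a), len(b)):
        return None
    return fused


if __name__ == "__main__":
    def stub_llm(prompt: str) -> str:
        # Stand-in for a real GPT-4 Turbo call.
        return "Sort a list and remove duplicates."

    seed_pool = ["Sort a list of integers.", "Remove duplicates from a list."]
    print(fuse_random_pair(seed_pool, stub_llm, random.Random(0)))
```

A character-count ceiling is used here only because length is easy to measure; this sketch does not attempt to reproduce how the paper balances length and difficulty.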

Experimental Evaluation

The IF technique is evaluated with controlled experiments on benchmarks including HumanEval, MBPP, and MultiPL-E. The results show substantial improvements over models trained on evol-codealpaca-v1, a dataset produced by Evol-Instruct-style evolution, which the authors attribute to the greater complexity and diversity of the fused instructions. Notably, models fine-tuned on IF-generated instruction sets matched or surpassed existing open-source models on several benchmarks, even at smaller parameter scales.

Results and Implications

The quantitative results are consistent: across several benchmarks, models fine-tuned with IF data outperform those fine-tuned only on data from traditional evolution methods. This suggests that IF can substantially improve the training data used to refine Code LLMs. The more complex and diverse instruction sets also point toward stronger multi-language and multi-context code generation.

Future Directions

As LLMs continue to develop and AI is applied more widely to code generation, Instruction Fusion lays groundwork for further work on hybrid prompt methods. Open directions include applying fusion to other domains, reducing the cost of data generation, and exploring additional fusion techniques.

In conclusion, the paper makes a significant contribution to prompt evolution for Code LLMs, showing that fusing instructions can overcome the constraints of traditional evolution methods and yield richer instruction data for training.