Overview of "WizardLM: Empowering LLMs to Follow Complex Instructions"

In the field of NLP, LLMs have proven adept at a variety of tasks, from generating coherent text to answering complex queries. However, their efficacy is often limited by their ability to follow user-specified instructions, particularly when those instructions are complex. The paper "WizardLM: Empowering LLMs to Follow Complex Instructions" addresses this limitation by introducing an innovative approach to generating complex instruction data for LLM training.

Methodology: Evol-Instruct

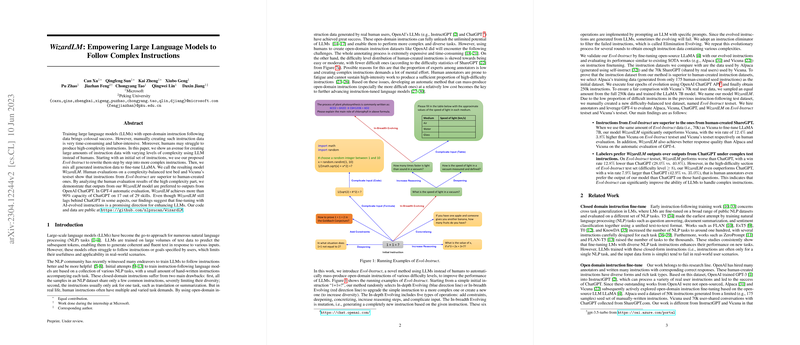

The crux of the paper's proposed method, termed Evol-Instruct, is the automated generation of complex instruction data using existing LLMs rather than human annotation, which is traditionally resource-intensive. The authors employ an iterative process that begins with a basic set of instructions. These instructions are submitted to the Evol-Instruct system, where they are progressively rewritten into more complex directives through a two-pronged approach: in-depth and in-breadth evolving.

- In-depth Evolving: This involves five specific operations designed to increase the complexity:

  - Adding constraints

  - Deepening (increasing the level of inquiry)

  - Concretizing (using more specific terms)

  - Increasing reasoning steps

  - Complicating input (adding complex input formats like code or data tables)

- In-breadth Evolving: This generates new instructions based on the themes of the initial set to diversify the instruction set's topic coverage.
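The two evolving modes can be sketched as a simple loop. This is a minimal illustration, not the authors' implementation: the directive wording, the function names `build_prompt` and `evolve`, the random choice of operation, and the decision to keep every generation in the output pool are all assumptions made for the example. The `llm` argument stands for any callable that maps a prompt string to a rewritten instruction.

```python
import random
from typing import Callable, List

# Operation names follow the list above. The directive wording is illustrative
# only (an assumption), not the prompt text used in the paper.
IN_DEPTH_OPS = {
    "add_constraints": "Add one more constraint or requirement to the instruction.",
    "deepening": "Increase the depth of the inquiry.",
    "concretizing": "Replace general concepts with more specific ones.",
    "increase_reasoning": "Require explicit multi-step reasoning.",
    "complicate_input": "Add a complex input such as code or a data table.",
}
IN_BREADTH_OP = "Write a new instruction on a related but different topic."


def build_prompt(instruction: str, rng: random.Random) -> str:
    """Pick one evolving operation at random and wrap the instruction in it."""
    directives = list(IN_DEPTH_OPS.values()) + [IN_BREADTH_OP]
    directive = rng.choice(directives)
    return f"{directive}\n\nInstruction: {instruction}"


def evolve(
    seeds: List[str],
    llm: Callable[[str], str],
    rounds: int,
    rng: random.Random,
) -> List[str]:
    """Evolve the seed instructions for a number of rounds.

    Each round rewrites the previous generation. All generations are kept in
    the returned pool (an assumption of this sketch).
    """
    pool = list(seeds)
    current = list(seeds)
    for _ in range(rounds):
        current = [llm(build_prompt(instruction, rng)) for instruction in current]
        pool.extend(current)
    return pool
```

In practice `llm` would wrap a call to a hosted or local model. Passing it in as a callable keeps the loop independent of any particular API and makes it easy to test with a stub.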

A filtering mechanism, termed elimination evolving, then screens the evolved instructions and discards failed evolutions, such as instructions that are unreasonable or that copy wording from the evolving prompt itself.
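A filter of this kind might look like the following sketch. The specific checks (empty output, output identical to the original, and leaked prompt phrases) and the marker strings in `LEAKED_MARKERS` are hypothetical stand-ins chosen for illustration; the paper's actual criteria may differ.

```python
from typing import Iterable, List, Sequence, Tuple

# Hypothetical marker phrases suggesting that text from the evolving prompt
# leaked into the rewritten instruction.
LEAKED_MARKERS: Tuple[str, ...] = ("given prompt", "rewritten prompt")


def is_failed_evolution(
    original: str,
    evolved: str,
    markers: Sequence[str] = LEAKED_MARKERS,
) -> bool:
    """Return True if the evolved instruction should be discarded."""
    text = evolved.strip().lower()
    if not text:
        return True  # nothing was generated
    if text == original.strip().lower():
        return True  # the rewrite changed nothing
    # The rewrite copied boilerplate from the evolving prompt.
    return any(marker in text for marker in markers)


def eliminate(pairs: Iterable[Tuple[str, str]]) -> List[str]:
    """Keep only the evolved instructions that pass the filter."""
    return [
        evolved
        for original, evolved in pairs
        if not is_failed_evolution(original, evolved)
    ]
```

A string-based check like this is cheap but crude. A judgment such as "is this instruction reasonable" would likely need a model-based check rather than fixed rules.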

Experimental Validation

The evolved instruction data is used to fine-tune the LLaMA LLM, resulting in a new model termed WizardLM. The efficacy of WizardLM is evaluated on a complexity-balanced test set and on Vicuna's test set, through both human assessment and automatic scoring with GPT-4. The results show that WizardLM outperforms other instruction-tuned models such as Vicuna and Alpaca, particularly on complex instructions. On the high-complexity portion of the test set, human evaluators preferred WizardLM's outputs to those of OpenAI's ChatGPT, although WizardLM still lags behind ChatGPT in some respects.

- Human Evaluations: On the Evol-Instruct and Vicuna test sets, WizardLM displayed superior performance compared to Vicuna and Alpaca, with notable improvement in handling high-difficulty instructions.

- GPT-4 Automatic Evaluation: WizardLM achieved over 90% of ChatGPT’s capacity in 17 out of 29 skills, evidence that automatically generated data can substantially improve instruction-following capabilities.
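One plausible reading of "over 90% of ChatGPT's capacity" is a per-skill ratio of the scores GPT-4 assigns to each model. The sketch below computes such a ratio under that assumption. The function names are hypothetical, and the scores in the usage note are made-up numbers for illustration, not results from the paper.

```python
from typing import Dict, List


def capacity_ratios(
    model_scores: Dict[str, float],
    reference_scores: Dict[str, float],
) -> Dict[str, float]:
    """Per-skill ratio of the model's score to the reference model's score."""
    return {
        skill: model_scores[skill] / reference_scores[skill]
        for skill in reference_scores
    }


def skills_above(ratios: Dict[str, float], threshold: float = 0.9) -> List[str]:
    """Skills where the model exceeds the given fraction of the reference."""
    return sorted(skill for skill, ratio in ratios.items() if ratio > threshold)
```

For example, with invented scores `{"math": 6.0, "writing": 9.5, "code": 7.6}` for the model and `{"math": 8.0, "writing": 9.0, "code": 8.0}` for the reference, `skills_above` reports `code` and `writing` but not `math`, whose ratio is 0.75.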

Implications and Future Work

This research has both practical and theoretical implications. Practically, the ability to generate complex instruction data automatically can significantly reduce the cost and time associated with human annotation, making it more feasible to train LLMs to comprehend and execute more complex tasks.

Theoretically, the findings indicate that AI-generated data can match, if not exceed, the quality and complexity of human-generated data, thus suggesting a potential paradigm shift in how training datasets for LLMs are constructed. The authors suggest that future work could focus on refining the elimination evolving process and exploring the integration of more sophisticated evolving techniques to further enhance the complexity and application range of the instructions.

Conclusion

The paper "WizardLM: Empowering LLMs to Follow Complex Instructions" demonstrates that LLMs can be fine-tuned effectively on automatically generated complex instructions. The method improves the ability of LLMs to follow intricate user directives and offers a scalable alternative to manual data creation for sophisticated language tasks. The Evol-Instruct framework sets a promising direction for future research on instruction following, potentially leading to more capable and adaptable AI systems.