Faithful Logical Reasoning via Symbolic Chain-of-Thought

The paper "Faithful Logical Reasoning via Symbolic Chain-of-Thought" introduces SymbCoT, a framework for improving the logical reasoning of large language models (LLMs). The work is motivated by the limits of existing Chain-of-Thought (CoT) techniques on logical reasoning tasks that depend heavily on symbolic expressions and strict deduction rules.

Core Contributions

SymbCoT Framework

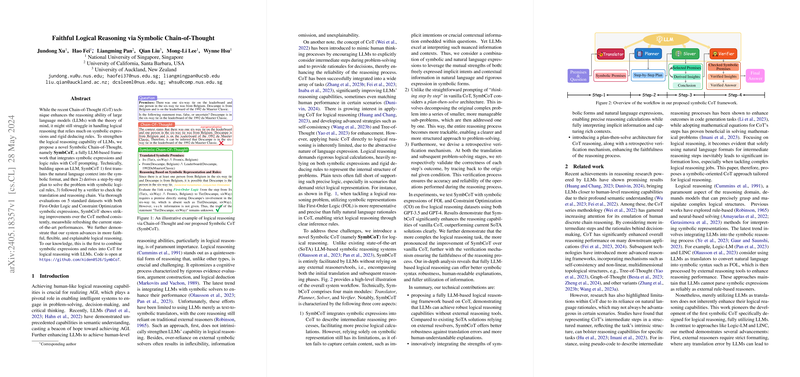

SymbCoT, or Symbolic Chain-of-Thought, integrates symbolic expressions and logic rules with CoT prompting. Unlike traditional CoT, which operates purely in natural language, SymbCoT bridges symbolic and natural language reasoning. The framework comprises four main modules: Translator, Planner, Solver, and Verifier.

- Translator: Converts natural language premises and question statements into a symbolic format.

- Planner: Decomposes the problem into a series of smaller sub-problems and formulates a step-by-step reasoning plan.

- Solver: Applies symbolic logical rules to derive insights and conclusions from the given premises.

- Verifier: Checks the correctness of both the translation and the reasoning process by validating each logical step against the original premises.
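
The data flow between the four modules can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `LLM` callable type, the prompt wording, the `SymbCoTResult` container, and every function name are assumptions introduced here. Each stage is a single prompt to the same model, which mirrors the fully LLM-based design described in this summary.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]


@dataclass
class SymbCoTResult:
    symbolic: str  # premises and question in symbolic form
    plan: str      # step-by-step reasoning plan
    solution: str  # derivation and tentative answer
    verified: str  # final answer after verification


def translate(llm: LLM, premises: str, question: str) -> str:
    # Translator: natural language -> symbolic format.
    return llm(
        "Translate the premises and question into a symbolic format.\n"
        f"Premises: {premises}\nQuestion: {question}"
    )


def make_plan(llm: LLM, symbolic: str) -> str:
    # Planner: decompose into sub-problems and order them.
    return llm(f"Write a step-by-step reasoning plan for:\n{symbolic}")


def solve(llm: LLM, symbolic: str, plan: str) -> str:
    # Solver: follow the plan, applying symbolic inference rules.
    return llm(f"Follow the plan using logical rules.\n{symbolic}\nPlan: {plan}")


def verify(llm: LLM, premises: str, question: str, symbolic: str, solution: str) -> str:
    # Verifier: re-check the translation and each step against the original text.
    return llm(
        "Check the translation and every reasoning step; correct them if needed.\n"
        f"Original premises: {premises}\nOriginal question: {question}\n"
        f"Translation: {symbolic}\nSolution: {solution}"
    )


def symbcot(llm: LLM, premises: str, question: str) -> SymbCoTResult:
    symbolic = translate(llm, premises, question)
    plan = make_plan(llm, symbolic)
    solution = solve(llm, symbolic, plan)
    verified = verify(llm, premises, question, symbolic, solution)
    return SymbCoTResult(symbolic, plan, solution, verified)
```

Passing the original premises to the verifier, rather than only the translation, is what lets it catch translation errors as well as faulty deductions.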

Methodology

SymbCoT's methodology contrasts with vanilla CoT by ensuring more structured and precise logical reasoning. This is achieved by:

- Symbolic Integration: Uses symbolic representations to describe intermediate reasoning steps, thus reducing the ambiguity inherent in natural language and enabling accurate logical inferences.

- Plan-then-Solve Architecture: Breaks the original complex problem into manageable sub-problems, thereby providing a clearer problem-solving approach.

- Retrospective Verification: Validates the accuracy of each reasoning step, enhancing the faithfulness and reliability of the reasoning process.
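
To make these three ideas concrete, the sketch below replaces the LLM with a small deterministic toy. Premises are ground facts plus single-antecedent implications, the solver applies modus ponens repeatedly while recording each step, and a verifier replays the recorded steps against what was known at that point. In SymbCoT itself these stages are carried out by prompting an LLM; the `Rule`, `Step`, `solve`, and `verify` names and the string encoding of facts are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    antecedent: str  # e.g. "Cat(tom)"
    consequent: str  # e.g. "Mammal(tom)"


@dataclass(frozen=True)
class Step:
    used_fact: str
    used_rule: Rule
    derived: str


def solve(facts: set[str], rules: list[Rule], goal: str) -> tuple[bool, list[Step]]:
    """Forward-chain with modus ponens until the goal is derived or nothing new appears."""
    known = set(facts)
    trace: list[Step] = []
    changed = True
    while changed and goal not in known:
        changed = False
        for rule in rules:
            if rule.antecedent in known and rule.consequent not in known:
                known.add(rule.consequent)
                trace.append(Step(rule.antecedent, rule, rule.consequent))
                changed = True
    return goal in known, trace


def verify(facts: set[str], rules: list[Rule], trace: list[Step]) -> bool:
    """Replay the trace: each step must use an already-known fact and a given rule."""
    known = set(facts)
    for step in trace:
        rule = step.used_rule
        if step.used_fact not in known or rule not in rules:
            return False
        if rule.antecedent != step.used_fact or rule.consequent != step.derived:
            return False
        known.add(step.derived)
    return True


facts = {"Cat(tom)"}
rules = [Rule("Mammal(tom)", "Animal(tom)"), Rule("Cat(tom)", "Mammal(tom)")]
proved, trace = solve(facts, rules, "Animal(tom)")
print(proved, [s.derived for s in trace])
print(verify(facts, rules, trace))
```

Because every step names the fact and rule it used, the verifier can reject a trace that reaches the right conclusion from a premise that was never given.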

Experimental Evaluation

The paper evaluates SymbCoT on five standard datasets: PrOntoQA, ProofWriter, FOLIO, LogicalDeduction, and AR-LSAT. Experiments were run with both GPT-3.5 and GPT-4. The results show significant improvements over existing methods, including vanilla CoT and Logic-LM. For instance, SymbCoT achieved an overall average accuracy improvement of 21.56% and 22.08% over Naive and CoT methods, respectively, using GPT-3.5, and similar gains with GPT-4.

Impact and Future Work

Robustness and Faithfulness:

- SymbCoT's fully LLM-based approach improves robustness against translation errors and maintains human-readable explanations.

- The integration of symbolic logic ensures a high level of reasoning faithfulness, significantly reducing the instances of correct answers achieved through flawed logical steps.
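
The distinction between a correct answer and a faithful one can be expressed as a simple metric. The sketch below is an assumption about how such a rate might be computed, not the paper's evaluation code: the `Outcome` record, the `unfaithful_rate` function, and the toy records are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    answer_correct: bool   # final label matches the gold label
    reasoning_valid: bool  # every step in the trace passed a logic check


def unfaithful_rate(outcomes: list[Outcome]) -> float:
    """Share of correct answers whose reasoning trace was judged invalid."""
    correct = [o for o in outcomes if o.answer_correct]
    if not correct:
        return 0.0
    return sum(not o.reasoning_valid for o in correct) / len(correct)


# Invented toy records for illustration, not results from the paper.
toy = [
    Outcome(True, True),
    Outcome(True, False),   # right answer, flawed reasoning
    Outcome(False, False),
    Outcome(True, True),
]
print(unfaithful_rate(toy))
```

Conditioning on correct answers isolates the case the summary highlights: predictions that score as right under accuracy but would not survive a step-by-step check.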

Practical Implications:

- In practical applications, SymbCoT enhances the logical reasoning capabilities of AI systems, making them more reliable for tasks requiring rigorous logical deductions.

- The framework's comprehensive verification mechanism makes it particularly valuable in domains like automated theorem proving, mathematical problem-solving, and complex decision-making systems.

Theoretical Implications:

- The success of SymbCoT underscores the efficacy of integrating symbolic logic with natural language prompts, providing a pathway for developing more advanced AI systems capable of performing human-like logical reasoning.

Future Research Directions:

- Exploring the integration of SymbCoT with external symbolic solvers may enhance its capability to identify correct reasoning paths, thereby combining the strengths of both methodologies.

- Expanding the framework to support a wider variety of symbolic languages and exploring additional datasets will further validate and refine SymbCoT's applicability and robustness.
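
One way the solver integration could look is a cross-check between the LLM's verdict and an independent entailment test. The sketch below uses a brute-force propositional truth-table check as a stand-in for an external solver; a real system would call a dedicated prover instead. The `entails` and `cross_check` functions and the encoding of formulas as Python callables are assumptions for illustration and do not come from the paper.

```python
from itertools import product
from typing import Callable

Assignment = dict[str, bool]
Formula = Callable[[Assignment], bool]


def entails(atoms: list[str], premises: list[Formula], conclusion: Formula) -> bool:
    """True if the conclusion holds in every assignment that satisfies all premises."""
    for values in product([False, True], repeat=len(atoms)):
        env = dict(zip(atoms, values))
        if all(p(env) for p in premises) and not conclusion(env):
            return False  # counterexample found
    return True


def cross_check(
    llm_verdict: bool, atoms: list[str], premises: list[Formula], conclusion: Formula
) -> str:
    """Compare the LLM's claimed verdict with the stand-in solver's verdict."""
    solver_verdict = entails(atoms, premises, conclusion)
    return "agree" if llm_verdict == solver_verdict else "disagree"


atoms = ["rain", "wet"]
premises = [
    lambda e: (not e["rain"]) or e["wet"],  # rain -> wet
    lambda e: e["rain"],                    # rain
]
print(cross_check(True, atoms, premises, lambda e: e["wet"]))
```

A disagreement could then trigger another verification pass, so the solver acts as a check on the LLM rather than a replacement for it.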

Conclusion

This paper advances the logical reasoning capabilities of LLMs by introducing SymbCoT, a framework that combines the strengths of symbolic expressions and CoT prompting. The consistent improvement across multiple datasets and reasoning tasks highlights SymbCoT's potential as a robust, explainable, and faithful reasoning framework. As LLM research continues to evolve, SymbCoT represents a promising direction toward more reliable and human-like logical reasoning in AI systems.