Enhancing LLMs' Reasoning Through Prolog-Based Chain-of-Thought

The paper "Thought-Like-Pro: Enhancing Reasoning of LLMs through Self-Driven Prolog-based Chain-of-Thought" introduces a framework for improving the reasoning capabilities of large language models (LLMs). Its primary innovation is the use of a Prolog logic engine to generate coherent, logically sound reasoning trajectories, which the LLM then learns to imitate. The approach targets a significant weakness of current LLMs: their dependence on task-specific prompting strategies and the lack of a unified framework for consistent reasoning across diverse tasks.

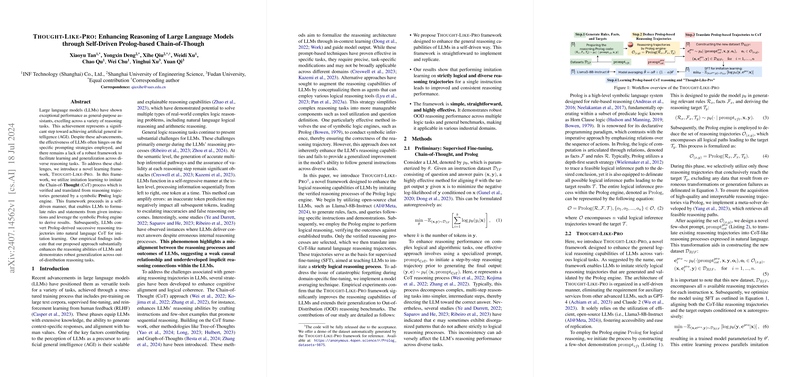

The proposed framework, Thought-Like-Pro, uses imitation learning to capture a Chain-of-Thought (CoT) process grounded in the reasoning paths generated by Prolog. The methodology is self-driven: the LLM constructs its own rules and facts, performs inference with the Prolog engine, and then translates the resulting logic-based reasoning paths into natural-language CoT. The authors' empirical evaluations indicate that this method significantly improves LLMs' reasoning proficiency and generalizes well to out-of-distribution (OOD) reasoning tasks.

In the methods section, the authors describe the successive steps of the Thought-Like-Pro framework. First, an open-source chat LLM such as Llama3-8B-Instruct formulates rules and facts for a given problem. Next, a Prolog engine performs symbolic reasoning over them, and the resulting reasoning paths are verified against known truths. The validity of the reasoning is therefore established before it is translated into natural language for imitation learning. This iterative process helps align the LLM's reasoning steps with logical structures, enhancing performance across a broad spectrum of reasoning challenges.
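The steps above can be sketched roughly as follows. This is a minimal illustration in Python, not the authors' implementation: a small forward-chaining function stands in for the Prolog engine, the facts and rules are written by hand rather than generated by an LLM, and every name (`forward_chain`, `build_cot`, the example predicates) is hypothetical.

```python
from typing import List, Optional, Set, Tuple

Fact = Tuple[str, str]        # (predicate, entity), e.g. ("cat", "tom")
Rule = Tuple[List[str], str]  # (body predicates, head predicate)


def forward_chain(facts: Set[Fact], rules: List[Rule]) -> List[Tuple[Fact, List[Fact]]]:
    """Derive new facts until nothing changes; record each derivation with its premises."""
    known = set(facts)
    trace: List[Tuple[Fact, List[Fact]]] = []
    changed = True
    while changed:
        changed = False
        entities = sorted({entity for _, entity in known})
        for body, head in rules:
            for entity in entities:
                premises = [(pred, entity) for pred in body]
                derived = (head, entity)
                if derived not in known and all(p in known for p in premises):
                    known.add(derived)
                    trace.append((derived, premises))
                    changed = True
    return trace


def build_cot(facts: Set[Fact], rules: List[Rule], expected: Fact) -> Optional[str]:
    """Return a natural-language chain of thought, or None if verification fails."""
    trace = forward_chain(facts, rules)
    derivable = set(facts) | {derived for derived, _ in trace}
    if expected not in derivable:
        return None  # the symbolic result disagrees with the known answer: discard
    steps = []
    for (head, entity), premises in trace:
        because = " and ".join(f"{e} is a {p}" for p, e in premises)
        steps.append(f"Since {because}, {entity} is a {head}.")
    return " ".join(steps)


if __name__ == "__main__":
    # Hand-written stand-ins for LLM-generated facts and rules (assumption).
    facts = {("cat", "tom")}
    rules = [(["cat"], "mammal"), (["mammal"], "vertebrate")]
    print(build_cot(facts, rules, ("vertebrate", "tom")))
```

Only trajectories that pass the verification check would be kept as training targets; in this sketch a failed check simply returns `None`.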

Experimental evaluations conducted on datasets such as GSM8K, ProofWriter, and PrOntoQA reveal a marked improvement in reasoning accuracy when using Thought-Like-Pro. This improvement is attributed to the training on verified logical trajectories, which bolsters the LLMs' capacity to handle both structured logical reasoning tasks and complex algorithmic computations. The paper also explores model averaging techniques to prevent catastrophic forgetting, balancing domain-specific fine-tuning with the retention of general reasoning capabilities.
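One common form of model averaging is linear interpolation between the base model's weights and the fine-tuned model's weights. The sketch below assumes that form; the exact scheme used in the paper is not described here, and `average_weights`, `alpha`, and the toy parameter dictionaries are hypothetical.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flattened values (toy representation)


def average_weights(base: Weights, tuned: Weights, alpha: float) -> Weights:
    """Return alpha * tuned + (1 - alpha) * base, parameter by parameter."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if base.keys() != tuned.keys():
        raise ValueError("models must share the same parameter names")
    merged: Weights = {}
    for name in base:
        if len(base[name]) != len(tuned[name]):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [
            alpha * t + (1.0 - alpha) * b
            for b, t in zip(base[name], tuned[name])
        ]
    return merged


if __name__ == "__main__":
    base = {"w": [0.0, 2.0]}   # stand-in for the general-purpose model
    tuned = {"w": [1.0, 4.0]}  # stand-in for the reasoning-tuned model
    print(average_weights(base, tuned, alpha=0.5))
```

With `alpha` near 1 the merged model stays close to the fine-tuned weights; with `alpha` near 0 it stays close to the base model, which is the trade-off between domain-specific gains and retained general ability.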

The authors acknowledge certain limitations of their work, notably the limited scale of the tasks addressed and possible variability in OOD generalization. However, they assert that the Thought-Like-Pro framework offers a robust foundation for extending logical reasoning capabilities, especially in industrial applications requiring reliable and explainable AI systems.

The paper's results suggest ways to extend the capabilities of LLMs, pointing toward a future in which AI systems can autonomously construct and follow logical reasoning processes with minimal human supervision. The fusion of symbolic logic and data-driven learning demonstrated here may broaden AI's applicability in domains that demand strict logical rigor, such as legal reasoning, complex decision-making, and mathematical problem-solving.

Future developments in AI could further capitalize on this framework to unify various reasoning paradigms, thereby enhancing LLMs' role as general-purpose intelligent agents. This would contribute significantly to the overarching goal of approaching artificial general intelligence (AGI) by fostering more natural and consistent reasoning abilities in machines.