Training Vision-LLMs with Reinforcement Learning – An Introduction

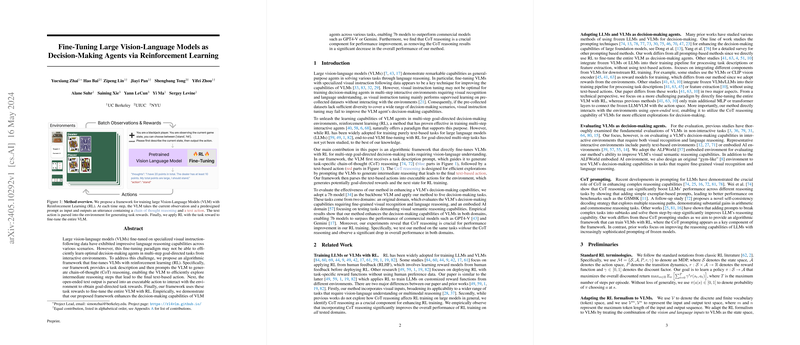

Vision-language models (VLMs) fine-tuned on specialized visual instruction-following data have shown impressive language reasoning abilities. However, this kind of fine-tuning alone struggles to produce good decision-making agents for multi-step, goal-directed tasks in interactive environments. To tackle this, recent research proposes an algorithmic framework that fine-tunes VLMs with Reinforcement Learning (RL).

What's the Approach?

The research introduces a framework in which a VLM, given a task description and the current visual observation, first generates intermediate reasoning steps and then commits to a specific text-based action. This text action is parsed into an executable command that interacts with the environment, and RL uses the rewards returned by those interactions to fine-tune the VLM.
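The parsing step can be sketched in Python. This is a minimal illustration under stated assumptions, not the paper's code: it assumes the model was prompted to reply with a JSON object containing `"thoughts"` and `"action"` fields, and `LEGAL_ACTIONS` and `parse_action` are hypothetical names for a NumberLine-style task with two moves.

```python
import json
import re
from typing import Dict, Optional

# Hypothetical action table for a NumberLine-style task (assumption: the real
# environments define their own legal actions and encodings).
LEGAL_ACTIONS: Dict[str, int] = {"+": 0, "-": 1}


def parse_action(vlm_output: str, legal_actions: Dict[str, int]) -> Optional[int]:
    """Map a free-form VLM response to an environment action index.

    Assumes the response contains a JSON object such as
    {"thoughts": "...", "action": "..."}. Returns None when no legal
    action can be recovered, so the caller decides how to handle it.
    """
    # Take the outermost {...} span in case the model adds text around the JSON.
    match = re.search(r"\{.*\}", vlm_output, flags=re.DOTALL)
    if match is None:
        return None
    try:
        payload = json.loads(match.group(0))
    except json.JSONDecodeError:
        return None
    action_text = str(payload.get("action", "")).strip()
    return legal_actions.get(action_text)


raw = 'Let me think. {"thoughts": "current 3, target 5, so increase", "action": "+"}'
print(parse_action(raw, LEGAL_ACTIONS))  # 0
```

Returning `None` for unparseable or illegal outputs leaves the fallback (for example, substituting a random legal action or assigning a penalty) to the training loop; the sketch does not assume either.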

Key Components

- Chain-of-Thought (CoT) Reasoning: The model writes out intermediate reasoning steps before choosing an action, breaking a complex task into smaller steps that lead to the final decision.

- Open-ended Text Actions: The VLM generates text actions that are parsed into concrete actions for environment interaction.

- Reinforcement Learning: The VLM is fine-tuned using task rewards, improving its decision-making capabilities.
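To make the reinforcement-learning component concrete, here is the clipped surrogate objective of PPO, a widely used policy-gradient method for this kind of fine-tuning, written over plain Python lists. It is a hedged sketch rather than the paper's training code: the function name and the default `clip_eps = 0.2` are illustrative choices. In a VLM setting, each log-probability would come from the model's token probabilities for the generated response, and the advantages would be estimated from the task rewards.

```python
import math
from typing import List


def ppo_clipped_loss(
    old_logps: List[float],
    new_logps: List[float],
    advantages: List[float],
    clip_eps: float = 0.2,
) -> float:
    """Mean PPO clipped surrogate loss (negated, so lower is better).

    old_logps:  log-probs of the sampled actions under the data-collecting policy.
    new_logps:  log-probs of the same actions under the current policy.
    advantages: advantage estimates derived from task rewards.
    """
    losses = []
    for old_lp, new_lp, adv in zip(old_logps, new_logps, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic bound: keep the smaller of the unclipped and clipped terms.
        losses.append(-min(ratio * adv, clipped * adv))
    return sum(losses) / len(losses)


# Identical policies give ratio 1, so the loss is just -mean(advantages).
print(ppo_clipped_loss([-1.0, -2.0], [-1.0, -2.0], [1.0, 3.0]))  # -2.0
```

Taking the minimum of the clipped and unclipped terms keeps a single update from moving the policy too far from the one that collected the data.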

Experimental Results

The framework was tested on two groups of tasks: arithmetic reasoning with visual input (three deterministic tasks plus the stochastic Blackjack) and visual semantic reasoning. Here's a quick look at the tasks and results:

Arithmetic Tasks

- NumberLine: Moving a current number to a target position on a synthetic number line.

- EZPoints: Using numbers from two cards to compute a specified value.

- Points24: A harder version of EZPoints requiring the use of four cards.

- Blackjack: Winning a blackjack game using visual information.
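For the card tasks, the environment has to decide whether the model's formula is valid before granting a reward. The sketch below is one hypothetical way to do that, not the benchmark's implementation: `check_formula` accepts only `+`, `-`, `*`, `/`, parentheses and integer literals, requires each card value to be used exactly once, and assumes card faces have already been converted to integers. The target of 24 in the example is assumed from the Points24 name.

```python
import ast
from collections import Counter
from typing import List

_ALLOWED_BINOPS = (ast.Add, ast.Sub, ast.Mult, ast.Div)


def _evaluate(node: ast.AST, used: List[int]) -> float:
    """Recursively evaluate +, -, *, / over integer literals, recording each literal."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body, used)
    if isinstance(node, ast.BinOp) and isinstance(node.op, _ALLOWED_BINOPS):
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        if isinstance(node.op, ast.Add):
            return left + right
        if isinstance(node.op, ast.Sub):
            return left - right
        if isinstance(node.op, ast.Mult):
            return left * right
        return left / right  # ZeroDivisionError is handled by the caller
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return node.value
    # Anything else (names, calls, **, unary minus, floats) is rejected.
    raise ValueError("unsupported syntax in formula")


def check_formula(formula: str, cards: List[int], target: int) -> bool:
    """True if `formula` uses each card value exactly once and equals `target`."""
    used: List[int] = []
    try:
        value = _evaluate(ast.parse(formula, mode="eval"), used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return False
    return Counter(used) == Counter(cards) and abs(value - target) < 1e-9


print(check_formula("(8 - 2) * (3 + 1)", [8, 2, 3, 1], 24))  # True
print(check_formula("3 * 4", [3, 5], 12))  # False: uses 4 instead of 5
```

Walking the parsed syntax tree instead of calling `eval` means arbitrary model output is never executed.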

Visual Semantic Reasoning

- ALFWorld: An embodied household environment that combines text-based interaction with visual observations, used to test visual semantic understanding.

Performance Highlights

The method substantially improved decision-making. Models fine-tuned this way, even at a modest 7 billion parameters, outperformed commercial models such as GPT-4V and Gemini on most tasks. For instance:

- NumberLine: Achieved a success rate of 89.4% (vs. 65.5% for GPT-4V)

- Blackjack: Achieved a success rate of 40.2% (vs. 25.5% for GPT-4V)
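Expressed as gains over GPT-4V, the two highlighted results work out as follows (absolute gain in percentage points, then the gain relative to GPT-4V's success rate):

```latex
\begin{aligned}
\text{NumberLine:}\quad & 89.4 - 65.5 = 23.9 \text{ points}, & \tfrac{23.9}{65.5} &\approx 36.5\% \\
\text{Blackjack:}\quad  & 40.2 - 25.5 = 14.7 \text{ points}, & \tfrac{14.7}{25.5} &\approx 57.6\%
\end{aligned}
```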

The Role of CoT Reasoning

Ablation experiments showed that CoT reasoning plays a critical role: without it, performance dropped notably. The paper also found that performance peaked at a moderate scaling factor for the CoT tokens, i.e., the weight given to the reasoning tokens relative to the final text-based action when computing the action's log-probability for RL, striking a balance between the two.
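One way to picture the CoT scaling factor: when the RL objective needs the log-probability of a generated response, the reasoning tokens' log-probabilities are multiplied by a factor λ before being added to the action tokens' log-probabilities. The sketch below assumes exactly that weighting; the function name `scaled_action_logprob` and the restriction of λ to [0, 1] are our assumptions, and it is not the paper's code.

```python
from typing import Sequence


def scaled_action_logprob(
    cot_token_logps: Sequence[float],
    action_token_logps: Sequence[float],
    cot_scale: float,
) -> float:
    """Log-probability of a (reasoning, action) response with down-weighted CoT.

    Summing token log-probs gives the sequence log-probability. Multiplying the
    CoT part by cot_scale (lambda, assumed to lie in [0, 1]) keeps a long
    reasoning trace from dominating the few tokens that encode the action.
    """
    if not 0.0 <= cot_scale <= 1.0:
        raise ValueError("cot_scale is expected to lie in [0, 1]")
    return cot_scale * sum(cot_token_logps) + sum(action_token_logps)


cot = [-0.5] * 40        # 40 reasoning tokens
action = [-0.1, -0.2]    # 2 action tokens
print(scaled_action_logprob(cot, action, 0.5))  # about -10.3
```

Setting λ = 0 ignores the reasoning tokens entirely and λ = 1 gives the unweighted sequence log-probability; the moderate values in between correspond to the regime the paragraph above describes.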

Practical and Theoretical Implications

Practically, this research opens avenues for developing more intelligent, autonomous VLM agents capable of handling complex multi-step tasks in dynamic environments. Theoretically, it showcases the potential of integrating CoT reasoning with RL to enhance decision-making processes in VLMs.

Future Directions

The paper suggests two interesting future directions:

- Exploring Different Prompting Techniques: While CoT reasoning is beneficial, examining other prompting techniques could further enhance performance.

- Multi-task Training: Currently, the framework improves performance on individual tasks. Extending this to improve multiple tasks simultaneously could be a valuable future development.

In summary, the proposed framework combines the strengths of VLMs and RL to tackle the challenges of goal-directed multi-step tasks, demonstrating substantial improvements in decision-making capabilities. This blend of intermediate reasoning and reinforcement learning could indeed pave the way for more sophisticated and capable AI systems.