- The paper presents Gaussian Splatting's capability to reconstruct 3D scenes and generate novel views with improved training speed and reduced artifacts compared to traditional methods.

- It details dynamic and deformation-based extensions that capture time-dependent movements, enhancing realism in digital avatars and real-time rendering applications.

- The review highlights challenges such as computational complexity and memory usage while proposing future research directions to optimize performance and broaden applications.

Gaussian Splatting: 3D Reconstruction and Novel View Synthesis

Introduction

The paper "Gaussian Splatting: 3D Reconstruction and Novel View Synthesis, a Review" offers a comprehensive overview of state-of-the-art techniques for 3D reconstruction with Gaussian Splatting. Image-based 3D reconstruction is a challenging task: it requires inferring the 3D shape of an object or scene from a set of input images. The paper focuses on Gaussian Splatting, which has attracted attention because it reconstructs 3D scenes efficiently and renders novel views of them. The review covers recent developments in the field, discussing input types, model structures, output representations, training strategies, open challenges, and future directions.

Fundamentals of Gaussian Splatting

Gaussian Splatting represents a 3D scene as a large set of 3D Gaussians (particles). Each Gaussian is defined by parameters such as position, orientation, size, opacity, and color. To render an image, the Gaussians are projected from the camera viewpoint into 2D image space. They are then sorted by depth so they can be blended in the correct order.
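
The sketch below shows how a Gaussian's orientation and size can be combined into a single 3D covariance matrix. It uses the factorization Sigma = R S S^T R^T from the original 3D Gaussian Splatting formulation, where R comes from a quaternion and S = diag(scale). The class `Gaussian3D` and its field names are hypothetical and chosen for illustration. The sketch also stores plain RGB color, whereas practical systems usually use spherical-harmonic coefficients.

```python
import math
from dataclasses import dataclass


def quat_to_rotmat(q):
    """Convert a quaternion (w, x, y, z) into a 3x3 rotation matrix (list of rows)."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalize so R is a proper rotation
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


@dataclass
class Gaussian3D:
    # Hypothetical container for one splat's parameters.
    position: tuple  # mean (x, y, z)
    rotation: tuple  # orientation as a quaternion (w, x, y, z)
    scale: tuple     # per-axis extent (standard deviations)
    opacity: float   # in [0, 1]
    color: tuple     # plain RGB here; spherical harmonics are common in practice

    def covariance(self):
        """Sigma = R S S^T R^T with S = diag(scale); this keeps Sigma positive semi-definite."""
        R = quat_to_rotmat(self.rotation)
        # M = R S: column j of R multiplied by scale[j]
        M = [[R[i][j] * self.scale[j] for j in range(3)] for i in range(3)]
        # Sigma = M M^T
        return [[sum(M[i][k] * M[j][k] for k in range(3)) for j in range(3)] for i in range(3)]
```

Optimizing the quaternion and scale, rather than the raw covariance entries, guarantees that every Gaussian remains a valid ellipsoid throughout training.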

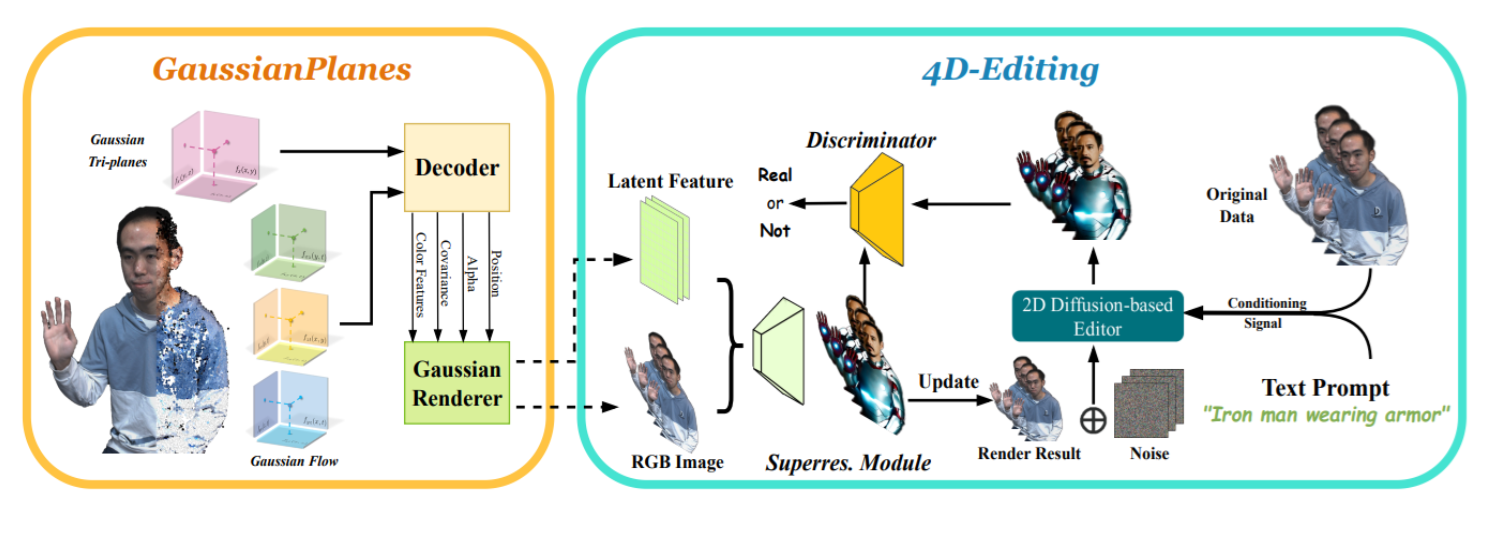

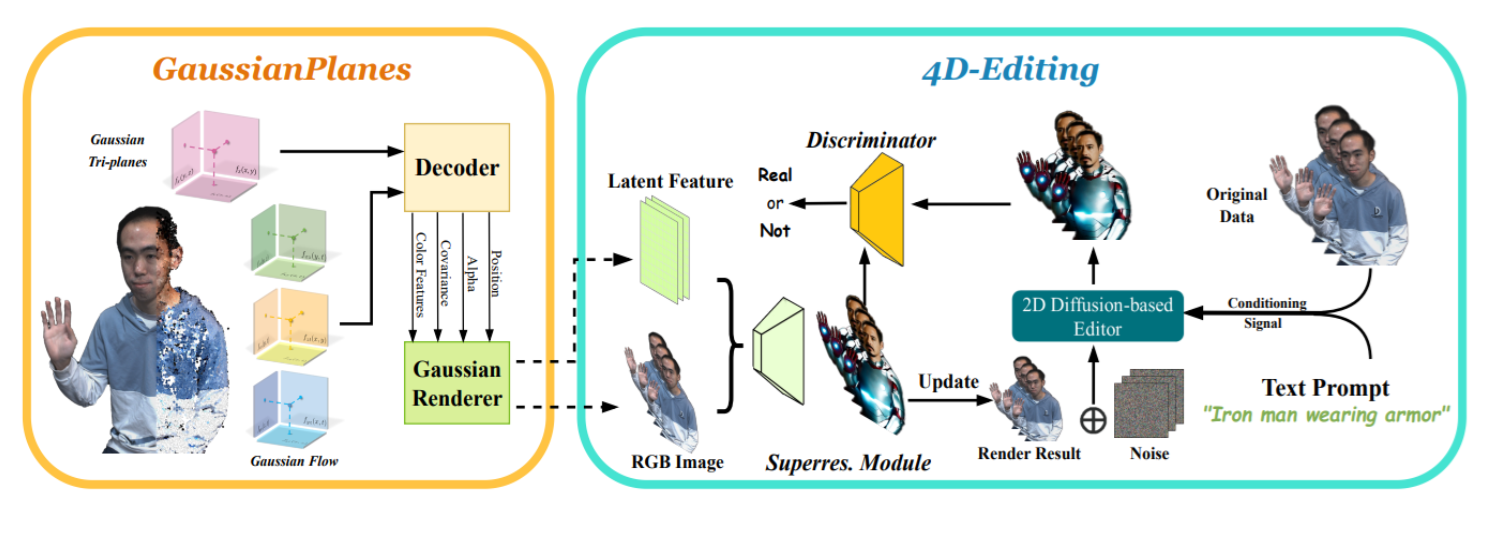

Figure 1: Dynamic and deformation-based methods.

In Gaussian Splatting, each 3D Gaussian is projected into pixel space. Its covariance is transformed using a local linear (affine) approximation of the camera projection. The projected Gaussians are then sorted by depth and alpha-composited, which resolves visibility and keeps rendering efficient. Training uses stochastic gradient descent through a differentiable Gaussian rasterizer, so all Gaussian parameters can be optimized directly against the input images.
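
The following sketch shows the three rendering steps for a single pixel:

1. Project the 3D covariance with the Jacobian J of the perspective projection, giving Sigma' = J Sigma J^T.
2. Evaluate each projected Gaussian's opacity at the pixel.
3. Composite the Gaussians front to back.

It is a simplified illustration under stated assumptions. The Gaussians are assumed to be in camera coordinates already (so the view rotation W is the identity). The 2D means are assumed to be given, and `fx`, `fy` are hypothetical focal lengths. Tiling, alpha clamping and the small 2D dilation that some implementations add are all omitted.

```python
import math


def project_covariance(cov3d, t, fx, fy):
    """2D covariance J Sigma J^T from the local affine approximation of the projection.
    Assumes cov3d and the mean t = (tx, ty, tz) are already in camera coordinates."""
    tx, ty, tz = t
    J = [
        [fx / tz, 0.0, -fx * tx / (tz * tz)],
        [0.0, fy / tz, -fy * ty / (tz * tz)],
    ]
    # JS = J @ cov3d (2x3)
    JS = [[sum(J[i][k] * cov3d[k][j] for k in range(3)) for j in range(3)] for i in range(2)]
    # cov2d = JS @ J^T (2x2)
    return [[sum(JS[i][k] * J[j][k] for k in range(3)) for j in range(2)] for i in range(2)]


def gaussian_alpha(opacity, mean2d, cov2d, pixel):
    """alpha = opacity * exp(-0.5 * d^T cov2d^-1 d), where d = pixel - mean2d."""
    (a, b), (c, d) = cov2d
    det = a * d - b * c
    inv = [[d / det, -b / det], [-c / det, a / det]]
    dx, dy = pixel[0] - mean2d[0], pixel[1] - mean2d[1]
    power = -0.5 * (dx * (inv[0][0] * dx + inv[0][1] * dy) + dy * (inv[1][0] * dx + inv[1][1] * dy))
    return opacity * math.exp(power)


def composite(splats):
    """Front-to-back alpha compositing of (depth, alpha, rgb) tuples for one pixel."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for _depth, alpha, rgb in sorted(splats, key=lambda s: s[0]):  # nearest first
        for ch in range(3):
            color[ch] += transmittance * alpha * rgb[ch]
        transmittance *= 1.0 - alpha
    return color, transmittance
```

Every operation here is differentiable in the Gaussian parameters. That property is what allows gradient-based training through the rasterizer.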

3D Reconstruction Techniques

The paper surveys traditional and recent 3D representations, including point clouds, meshes, voxels, NeRFs, and Gaussian splats. Compared with NeRFs, Gaussian Splatting produces fewer artifacts and trains faster. The study also highlights advances in activation and optimization techniques, particularly those that enable real-time rendering and improve robustness in dynamic scenes.
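
One concrete reading of "activation" here is our assumption and is not taken from the review. The original 3D Gaussian Splatting setup optimizes unconstrained raw values and maps them through activation functions: a sigmoid for opacity, an exponential for scale, and normalization for the rotation quaternion. The sketch shows those mappings. It also shows a plain gradient-descent loop that fits one raw opacity value, with the gradient passing through the sigmoid via the chain rule. The helper names `activate` and `fit_opacity` are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def activate(raw_opacity, raw_log_scale, raw_quat):
    """Map unconstrained parameters to valid Gaussian parameters."""
    opacity = sigmoid(raw_opacity)                     # always in (0, 1)
    scale = tuple(math.exp(s) for s in raw_log_scale)  # always positive
    n = math.sqrt(sum(v * v for v in raw_quat))
    rotation = tuple(v / n for v in raw_quat)          # unit quaternion
    return opacity, scale, rotation


def fit_opacity(target, steps=1000, lr=1.0):
    """Gradient descent on the raw opacity r for the loss (sigmoid(r) - target)^2."""
    r = 0.0
    for _ in range(steps):
        s = sigmoid(r)
        grad = 2.0 * (s - target) * s * (1.0 - s)  # chain rule through the sigmoid
        r -= lr * grad
    return sigmoid(r)
```

Because the optimizer only ever updates the raw values, no gradient step can push an opacity outside (0, 1) or make a scale negative.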

Recent Advancements

Dynamic and deformable Gaussian Splatting extends the representation with time-dependent parameters, capturing motion and changing expressions in a scene. Approaches such as a Fourier approximation for position and a linear approximation for rotation have shown substantial improvements in visual quality and computational efficiency. In addition, combining diffusion models with Gaussian Splatting enables text-driven 3D generation, which produces realistic and diverse 3D assets efficiently.
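
The time-dependent parameterization described above can be sketched as follows. A Gaussian's position is a base position plus a truncated Fourier series in time. Its rotation is a linear function of time, renormalized to a unit quaternion. The exact coefficient layout, the normalized time t in [0, 1], and the function names are illustrative assumptions, not the formulation of any specific paper.

```python
import math


def fourier_position(base, sin_coeffs, cos_coeffs, t):
    """base: (x, y, z); sin_coeffs / cos_coeffs: one (x, y, z) triple per frequency l = 1..L."""
    pos = list(base)
    for l, (a, b) in enumerate(zip(sin_coeffs, cos_coeffs), start=1):
        phase = 2.0 * math.pi * l * t
        for axis in range(3):
            pos[axis] += a[axis] * math.sin(phase) + b[axis] * math.cos(phase)
    return tuple(pos)


def linear_rotation(q0, q1, t):
    """Quaternion q(t) = q0 + q1 * t, renormalized to unit length."""
    q = [a + b * t for a, b in zip(q0, q1)]
    n = math.sqrt(sum(v * v for v in q))
    return tuple(v / n for v in q)
```

A design benefit of this form is that each Gaussian stores a fixed number of coefficients, however many frames the sequence contains.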

Applications in Avatars

The review explores the use of Gaussian Splatting for digital avatars, with an emphasis on articulation and expressiveness. Various approaches use Gaussian splats to capture joint angles, complex expressions, and dynamic clothing, achieving gains in both efficiency and realism.
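
The review does not say how the Gaussians follow joint angles. One widely used option, which we assume here for illustration, is linear blend skinning: each Gaussian center is moved by a weighted sum of per-bone rigid transforms. In the sketch, `lbs_point`, `rot_z` and the skinning weights are hypothetical. Only a single rotation axis is shown, to keep it short.

```python
import math


def rot_z(angle):
    """Rotation about the z axis by a joint angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def lbs_point(x, weights, bone_rotations, bone_translations):
    """x' = sum_j w_j (R_j x + t_j); the weights are assumed to sum to 1."""
    out = [0.0, 0.0, 0.0]
    for w, R, t in zip(weights, bone_rotations, bone_translations):
        for i in range(3):
            out[i] += w * (sum(R[i][k] * x[k] for k in range(3)) + t[i])
    return tuple(out)
```

A full avatar pipeline would also rotate each Gaussian's covariance by the blended rotation, so that the splats reorient along with the limbs.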

Challenges and Future Directions

While Gaussian Splatting produces promising results, it still faces challenges such as computational complexity and memory usage. The paper suggests further research on increasing rendering speed, improving mesh extraction, and extending the method to a broader range of dynamic scenes. Future directions may include integrating Gaussian Splatting with other technologies such as real-time simulation and interactive environments.
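
A back-of-the-envelope estimate shows why memory usage is a concern. The estimate assumes the common uncompressed layout:

- 3 floats for position, 4 for rotation, 3 for scale and 1 for opacity;
- 3 * (degree + 1)^2 spherical-harmonic color coefficients;
- 32-bit floats throughout.

These numbers are assumptions, not figures reported by the review, and the helper names are hypothetical.

```python
def floats_per_gaussian(sh_degree=3):
    """Parameter count of one uncompressed Gaussian (assumed layout)."""
    position, rotation, scale, opacity = 3, 4, 3, 1
    sh = 3 * (sh_degree + 1) ** 2  # RGB spherical-harmonic coefficients
    return position + rotation + scale + opacity + sh


def storage_bytes(num_gaussians, sh_degree=3, bytes_per_float=4):
    """Raw parameter storage, excluding gradients and optimizer state."""
    return num_gaussians * floats_per_gaussian(sh_degree) * bytes_per_float
```

With degree-3 harmonics, each Gaussian needs 59 floats, so `storage_bytes(1_000_000)` gives 236,000,000 bytes (about 236 MB) for one million Gaussians. Training needs several times more, because gradients are also stored and optimizers such as Adam keep two moment estimates per parameter.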

Conclusion

Gaussian Splatting has emerged as a robust method for 3D reconstruction and novel view synthesis, offering improvements in rendering speed, quality, and dynamic capabilities. The method's versatility has opened new possibilities in various fields, including AR/VR, robotics, and computer graphics.

Continued efforts to address current limitations and explore new applications are promising steps toward broader adoption and further progress in 3D scene understanding and synthesis.