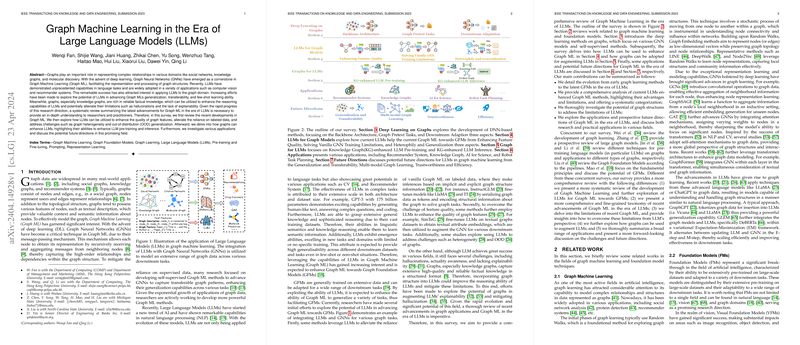

Overview of "Graph Machine Learning in the era of LLMs"

Developments in Graph Machine Learning

Integrating large language models (LLMs) with Graph Machine Learning (Graph ML) has produced methods that draw on the strengths of both fields. The survey first reviews recent developments in Graph ML, beginning with the advances brought by deep learning, particularly Graph Neural Networks (GNNs). Innovations such as Graph Transformers and self-supervised learning frameworks have extended what Graph ML can do, enabling more complex and varied applications.

Enhancements Through LLMs

A large part of the survey discusses how LLMs can improve the quality and functionality of graph models. It groups these enhancements into three areas: improving feature quality, addressing training limitations, and handling heterogeneity and out-of-distribution generalization:

- Feature Quality Improvements: Details how LLMs can enhance node and edge feature representations, which is critical for improving the accuracy and efficiency of graph models.

- Training Limitations: Explores how leveraging LLMs can reduce the dependency on labeled data through advanced generative models and few-shot learning capabilities.

- Heterogeneity and Generalization: Discusses how LLMs can help graph models handle more heterogeneous data and generalize better across different graph distributions.
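To make the feature-quality idea concrete, here is a minimal sketch, not the survey's method. It assumes each node carries a text attribute, turns that text into a vector, and then averages each node's vector with those of its neighbors, as a single GNN-style aggregation step would. The `embed_text` function is a hypothetical stand-in (a hashed bag-of-words) for a real LLM encoder, and the node texts and edges are invented for illustration.

```python
import hashlib
import math


def embed_text(text, dim=8):
    """Hypothetical stand-in for an LLM text encoder.

    Hashes each token into one of `dim` buckets and L2-normalises the counts.
    A real pipeline would call an actual language model here instead.
    """
    vec = [0.0] * dim
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        vec[digest[0] % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def mean_aggregate(features, edges):
    """One round of mean aggregation over an undirected graph.

    Each node's new vector is the average of its own vector and its neighbours'.
    """
    neighbours = {node: [node] for node in features}
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)
    dim = len(next(iter(features.values())))
    return {
        node: [sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)]
        for node, nbrs in neighbours.items()
    }


# Toy text-attributed graph (illustrative data only).
node_text = {
    "p1": "graph neural networks for molecules",
    "p2": "language models for text attributed graphs",
    "p3": "self supervised learning on graphs",
}
edges = [("p1", "p2"), ("p2", "p3")]

features = {node: embed_text(text) for node, text in node_text.items()}
smoothed = mean_aggregate(features, edges)
```

Swapping `embed_text` for a real encoder leaves the aggregation step unchanged, which is why text-derived features can slot into an existing graph model with little friction.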

Graphs Enhancing LLMs

The survey then turns to the converse direction: how graph structures can augment LLMs. This section highlights how incorporating graphs can mitigate some inherent limitations of LLMs, such as their lack of explainability and susceptibility to hallucinations. Graphs, particularly knowledge graphs, provide structured, factual knowledge that can help LLMs generate more accurate and reliable outputs.
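One common way to use a knowledge graph in this role is to retrieve relevant facts and place them in the prompt. The sketch below illustrates that pattern under simplifying assumptions: the graph is a list of (subject, predicate, object) triples, retrieval is plain substring matching on entity names, and the prompt template is invented for illustration. The function names `retrieve_triples` and `build_prompt` are hypothetical, and no actual LLM call is made.

```python
def retrieve_triples(question, triples):
    """Return triples whose subject or object is mentioned in the question.

    Uses case-insensitive substring matching; a real system would use
    entity linking or embedding-based retrieval instead.
    """
    q = question.lower()
    return [t for t in triples if t[0].lower() in q or t[2].lower() in q]


def build_prompt(question, triples):
    """Assemble a prompt that lists the retrieved facts before the question."""
    facts = "\n".join(
        f"- {s} {p} {o}" for s, p, o in retrieve_triples(question, triples)
    )
    return (
        f"Facts:\n{facts}\n\n"
        f"Question: {question}\n"
        "Answer using only the facts above."
    )


# Toy knowledge graph (illustrative triples only).
toy_kg = [
    ("Paris", "capital_of", "France"),
    ("Berlin", "capital_of", "Germany"),
    ("France", "member_of", "European Union"),
]

prompt = build_prompt("What is the capital of France?", toy_kg)
print(prompt)
```

Because the facts come from the graph and not from the model's parameters, each statement in the answer can be traced back to a specific triple, which addresses the explainability concern raised above.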

Diverse Applications

The survey presents a wide range of practical applications that combine LLMs with Graph ML:

- Recommender Systems: Improved through better modeling of complex user-item relationships and richer feature representations.

- Knowledge Graphs: LLMs gain an enhanced ability to interact with and use structured knowledge for tasks such as question answering and information retrieval.

- Scientific Discovery: Applications in drug discovery and materials science, where graph models benefit from the vast knowledge and reasoning capabilities of LLMs.

Future Prospects

Looking ahead, the survey outlines several promising research directions for Graph ML combined with LLMs:

- Generalization and Transferability: Developing methods to enhance the ability of graph models to perform well across diverse graph types and structures.

- Multi-modal Graph Learning: Integrating and processing multiple types of data (e.g., text, images, and structured data) within graph frameworks.

- Trustworthiness: Ensuring the reliability, fairness, and privacy of graph models, particularly when integrated with LLMs.

- Efficiency Improvements: Addressing the computational demands and optimizing the performance of combined LLM and graph model systems.

Conclusion

The survey summarizes the potential of combining LLMs with Graph ML, setting out both current achievements and avenues for future research. As these models become more sophisticated and more tightly integrated, they promise to solve more complex problems across domains with greater efficiency and effectiveness.