A Survey of Graph Meets LLM: Progress and Future Directions

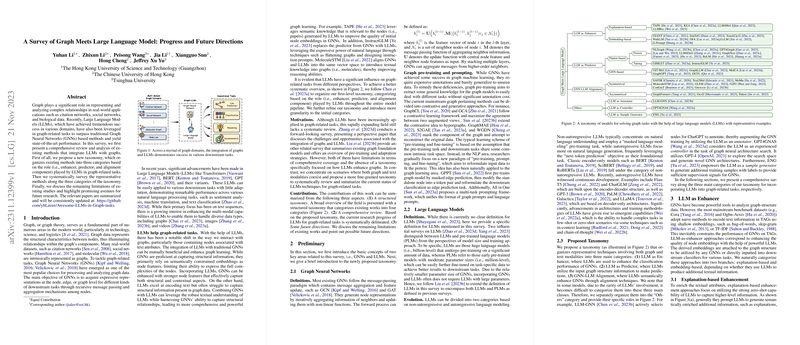

The paper "A Survey of Graph Meets LLM: Progress and Future Directions" gives a structured overview of how large language models (LLMs) are being combined with graph learning. The authors propose a taxonomy that groups existing techniques by the role the LLM plays in graph-related tasks: enhancer, predictor, or alignment component.

Overview of Contributions

The paper makes three central contributions: a taxonomy for classifying approaches that integrate LLMs with graph data, a review of existing methods organized under that taxonomy, and a discussion of future research directions. Together these show how LLMs are currently used to improve performance on graph-related tasks.

LLM as Enhancer

As enhancers, LLMs improve the quality of node embeddings in Text-Attributed Graphs (TAGs), which are then consumed by a graph neural network (GNN). There are two primary approaches: explanation-based and embedding-based. Explanation-based approaches use the LLM to generate enriched explanations or pseudo labels, which supplement the original node text and improve GNN outputs. Embedding-based approaches use the LLM directly to produce node embeddings that feed into the GNN. These methods have improved performance across various domains but face scalability challenges, especially on large-scale datasets.
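The embedding-based pipeline can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `toy_text_encoder` is a hashed bag-of-words stand-in for a real LLM encoder, and `mean_aggregate` is a single parameter-free neighborhood-averaging step rather than a trained GNN. Neither is taken from the survey.

```python
import hashlib

def toy_text_encoder(text, dim=8):
    """Hypothetical stand-in for an LLM encoder: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * dim
    for token in text.lower().split():
        idx = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[idx] += 1.0
    norm = sum(v * v for v in vec) ** 0.5
    return [v / norm for v in vec] if norm > 0 else vec

def mean_aggregate(features, edges):
    """One message-passing step: average each node's features with its neighbors'."""
    n = len(features)
    neighbors = {i: {i} for i in range(n)}  # self-loop keeps the node's own features
    for u, v in edges:  # edges are treated as undirected
        neighbors[u].add(v)
        neighbors[v].add(u)
    dim = len(features[0])
    return [
        [sum(features[j][d] for j in neighbors[i]) / len(neighbors[i]) for d in range(dim)]
        for i in range(n)
    ]

# A tiny text-attributed graph: three nodes with text, connected in a path.
node_texts = [
    "graph neural networks for citation data",
    "language models encode paper abstracts",
    "contrastive learning aligns two modalities",
]
edges = [(0, 1), (1, 2)]

node_features = [toy_text_encoder(t) for t in node_texts]  # text -> initial features
node_embeddings = mean_aggregate(node_features, edges)     # features -> structure-aware embeddings
```

In a real system the encoder call is the expensive part, since it runs once per node; this is where the scalability concern on large graphs comes from.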

LLM as Predictor

As predictors, LLMs produce the final output for the graph task themselves. The graph reaches the LLM in one of two ways: flatten-based strategies convert the graph into a textual description that the LLM can process as an ordinary sequence, while GNN-based strategies use a GNN to capture structural patterns and pass that structural information to the LLM. Although effective, these methods struggle to capture long-range dependencies, and LLMs are inherently inefficient at processing graphs that have been serialized into sequences.
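A flatten-based strategy can be sketched as a function that serializes a graph into a prompt. The prompt wording and the helper name `flatten_graph` are hypothetical choices for illustration; the survey does not prescribe a specific template, and no LLM is called here.

```python
def flatten_graph(node_texts, edges, target):
    """Serialize a small text-attributed graph into a plain-text prompt (illustrative template)."""
    lines = ["Graph description:"]
    for node_id, text in enumerate(node_texts):
        lines.append(f"Node {node_id}: {text}")
    for u, v in edges:
        lines.append(f"Node {u} is connected to node {v}.")
    lines.append(f"Question: what is the category of node {target}?")
    return "\n".join(lines)

prompt = flatten_graph(
    node_texts=["paper on graph neural networks", "paper on language models"],
    edges=[(0, 1)],
    target=0,
)
```

The prompt grows by one line per node and per edge, so even moderately sized neighborhoods can exceed an LLM's context window, which is one concrete form of the inefficiency noted above.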

GNN-LLM Alignment

GNN-LLM alignment aims to bring the embedding spaces of the graph encoder and the text encoder into agreement. Alignment can be symmetric, treating both modalities equally, or asymmetric, where one modality (usually the graph side) is used to improve the other. Symmetric alignment frequently relies on contrastive learning to integrate structural and textual information, while asymmetric alignment typically lets GNNs serve as guides or teachers when training LLMs.
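Symmetric contrastive alignment can be sketched with an InfoNCE-style loss, a common choice for this kind of objective. This is a minimal illustration under stated assumptions, not the formulation of any particular method in the survey: row `i` of `graph_emb` and row `i` of `text_emb` are assumed to describe the same node, all vectors are assumed nonzero, and the temperature value is arbitrary.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    """Scale a nonzero vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def info_nce(queries, keys, temperature):
    """Mean cross-entropy where queries[i] should match keys[i] among all keys."""
    total = 0.0
    for i, q in enumerate(queries):
        logits = [dot(q, k) / temperature for k in keys]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - logits[i]
    return total / len(queries)

def symmetric_contrastive_loss(graph_emb, text_emb, temperature=0.1):
    """Average the graph-to-text and text-to-graph losses so both modalities are treated equally."""
    g = [normalize(v) for v in graph_emb]
    t = [normalize(v) for v in text_emb]
    return 0.5 * (info_nce(g, t, temperature) + info_nce(t, g, temperature))

# Matched pairs give a low loss; swapping the text rows gives a high loss.
aligned = symmetric_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
misaligned = symmetric_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

Averaging the two directions is what makes the objective symmetric; an asymmetric scheme would instead update only one encoder, for example by treating the other encoder's outputs as fixed targets.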

Future Research Directions

The paper outlines several avenues for future exploration:

- Handling Non-Text-Attributed Graphs: There's a need to extend LLM capabilities to graphs lacking textual attributes, exploring foundation models in varied domains.

- Addressing Data Leakage: LLMs may have encountered common benchmark data during pre-training, which can inflate reported results. Mitigating this leakage calls for new, more robust benchmarks.

- Enhancing Transferability: Cross-domain application of LLM-enhanced graph learning models remains underexplored.

- Improving Explainability: LLMs' reasoning capabilities could be leveraged to make graph learning models more transparent, but further advances are needed.

- Boosting Efficiency: It's essential to develop resource-efficient methods while maintaining the efficacy of GNN-LLM integrations.

- Exploring Expressive Abilities and Agent Roles: Further analysis is necessary to understand LLMs' expressiveness in graph contexts, and their potential roles as agents could unlock new capabilities in complex graph tasks.

Conclusion

The survey synthesizes progress at the intersection of LLMs and graph learning, offering a clear taxonomy and highlighting the main research trends. Its discussion of future directions identifies the open challenges and opportunities in this area and provides a starting point for further work on combining LLMs with graph models.