mABC: multi-Agent Blockchain-Inspired Collaboration for root cause analysis in micro-services architecture (2404.12135v3)

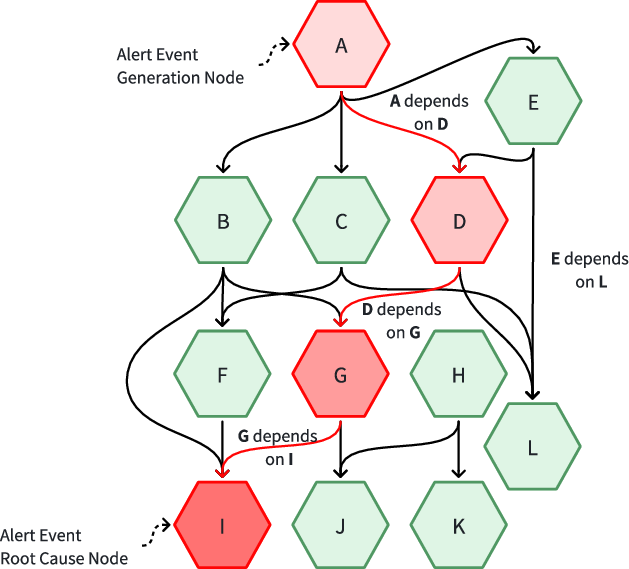

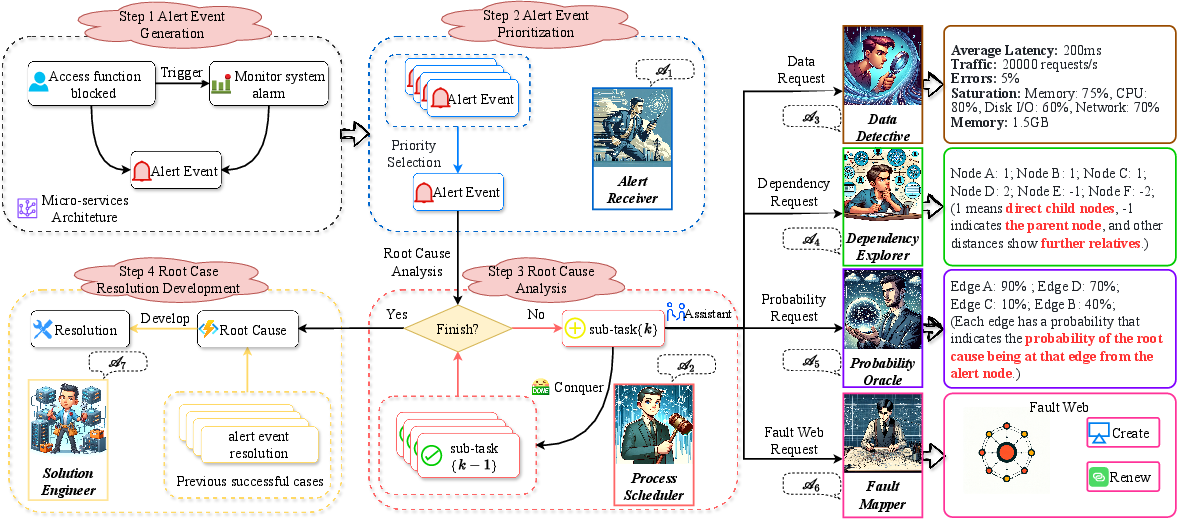

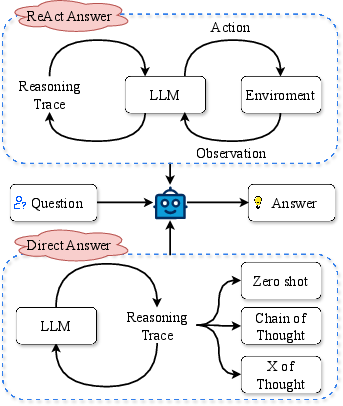

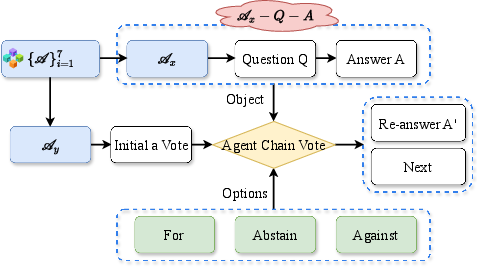

Abstract: Root cause analysis (RCA) in micro-services architecture (MSA) grows harder as system complexity escalates, because fault propagation and circular dependencies among nodes threaten system stability and efficiency. The diversity of faults encountered in RCA calls for multiple agents with diverse expertise. To mitigate the hallucination problem of LLMs, we design a blockchain-inspired voting mechanism that improves the reliability of the analysis through a decentralized decision-making process. To avoid the non-terminating loops caused by the circular dependencies common in MSA, we impose an objective limit on the number of steps and standardize task processing through Agent Workflow. We propose mABC (multi-Agent Blockchain-inspired Collaboration for root cause analysis in micro-services architecture), a pioneering framework in which multiple agents built on powerful LLMs follow Agent Workflow and collaborate through blockchain-inspired voting. Specifically, seven specialized agents derived from Agent Workflow collaborate within a decentralized chain, each contributing insights toward root cause analysis based on its own expertise and the intrinsic software knowledge of LLMs. Experiments on the AIOps challenge dataset and a newly created Train-Ticket dataset demonstrate superior performance in identifying root causes and generating effective resolutions. An ablation study further shows that Agent Workflow, the multi-agent design, and blockchain-inspired voting are all crucial for achieving optimal performance. mABC offers comprehensive, automated root cause analysis and resolution for micro-services architecture and significantly advances the IT operations domain. The code and dataset are available at https://github.com/zwpride/mABC.
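The abstract relies on two control mechanisms: decentralized voting to filter out unreliable (possibly hallucinated) conclusions, and a step limit to escape circular dependencies between services. The minimal Python sketch below illustrates both ideas under stated assumptions. It is not the mABC implementation: the weighted-ballot scheme, the participation and support thresholds, the greedy "follow the anomalous dependency" heuristic, and every name (`Ballot`, `weighted_vote`, `trace_root_cause`, `max_steps`) are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Ballot:
    """One agent's vote on a proposed root cause (hypothetical structure)."""
    agent: str
    weight: float          # assumed per-agent voting weight, e.g. from past reliability
    vote: Optional[bool]   # True = support, False = oppose, None = abstain


def weighted_vote(ballots: List[Ballot],
                  participation_threshold: float = 0.5,
                  support_threshold: float = 0.5) -> bool:
    """Accept a proposal only if enough weight participates and a weighted majority supports it."""
    total = sum(b.weight for b in ballots)
    cast = [b for b in ballots if b.vote is not None]
    cast_weight = sum(b.weight for b in cast)
    # Reject when nobody voted or the quorum (participation) threshold is not met.
    if total == 0 or cast_weight == 0 or cast_weight / total < participation_threshold:
        return False
    support = sum(b.weight for b in cast if b.vote)
    # Strictly greater than the threshold, so an exact tie is rejected.
    return support / cast_weight > support_threshold


def trace_root_cause(calls: Dict[str, List[str]],
                     is_anomalous: Dict[str, bool],
                     start: str,
                     max_steps: int = 10) -> str:
    """Greedily follow anomalous downstream dependencies starting from `start`.

    A visited set and a hard step cap keep the walk from looping forever
    when services depend on each other circularly.
    """
    current, visited = start, {start}
    for _ in range(max_steps):
        nxt = next((s for s in calls.get(current, [])
                    if is_anomalous.get(s, False) and s not in visited), None)
        if nxt is None:
            break  # no unvisited anomalous dependency: treat `current` as the root cause
        visited.add(nxt)
        current = nxt
    return current


if __name__ == "__main__":
    # A small call graph with a cycle: order -> frontend and payment -> order.
    calls = {"frontend": ["order"], "order": ["payment", "frontend"], "payment": ["order"]}
    anomalous = {"frontend": True, "order": True, "payment": True}
    candidate = trace_root_cause(calls, anomalous, "frontend")
    ballots = [Ballot("metric_agent", 2.0, True),
               Ballot("trace_agent", 1.0, False),
               Ballot("log_agent", 1.0, None)]
    print(candidate, weighted_vote(ballots))
```

In this example the walk goes frontend, order, payment and then stops, because payment's only dependency (order) has already been visited, so the cycle cannot trap it. The vote passes because 3 of 4 units of weight participate and 2 of the 3 cast units support the proposal. Requiring both a quorum and a majority is one simple way to stop a single confident but wrong agent from deciding alone; the actual thresholds and weighting in mABC may differ.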
