Map-Relative Pose Regression for Visual Re-Localization (2404.09884v1)

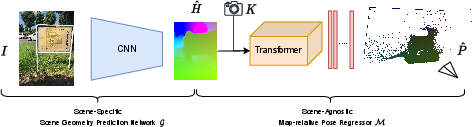

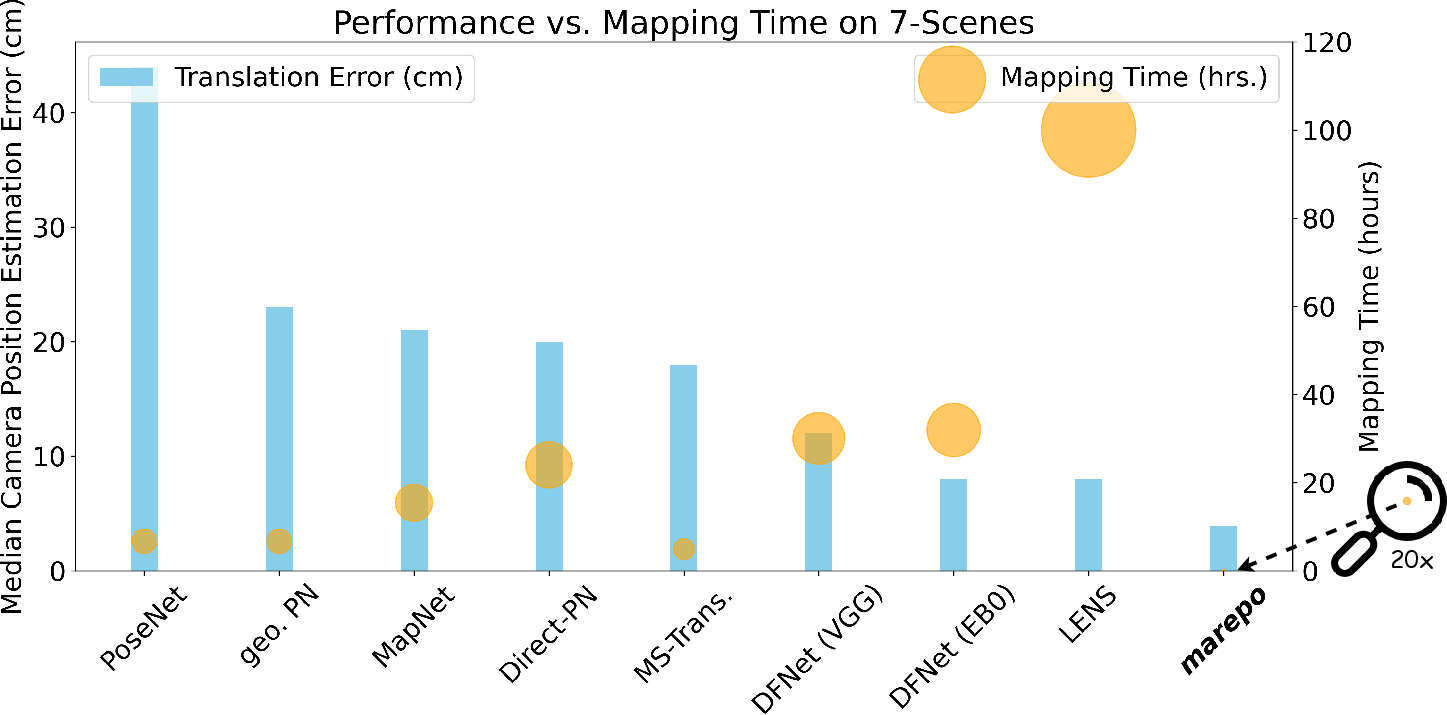

Abstract: Pose regression networks predict the camera pose of a query image relative to a known environment. Within this family of methods, absolute pose regression (APR) has recently shown promising accuracy in the range of a few centimeters in position error. APR networks encode the scene geometry implicitly in their weights. To achieve high accuracy, they require vast amounts of training data that, realistically, can only be created using novel view synthesis in a days-long process. This process has to be repeated for every new scene. We present a new approach to pose regression, map-relative pose regression (marepo), that satisfies the data hunger of the pose regression network in a scene-agnostic fashion. We condition the pose regressor on a scene-specific map representation such that its pose predictions are relative to the scene map. This allows us to train the pose regressor across hundreds of scenes to learn the generic relation between a scene-specific map representation and the camera pose. Our map-relative pose regressor can be applied to new map representations immediately, or after mere minutes of fine-tuning for the highest accuracy. Our approach outperforms previous pose regression methods by far on two public datasets, one indoor and one outdoor. Code is available: https://nianticlabs.github.io/marepo
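
The split described in the abstract, a scene-specific map representation feeding a pose regressor shared across scenes, can be illustrated with a toy data-flow sketch. This is not the authors' implementation: `make_scene_map`, `MapRelativeRegressor`, and `project_to_rotation` are hypothetical names, the "map" is assumed here to output per-pixel 3D scene coordinates, and all weights are random and untrained. The sketch only shows that the same regressor object can be reused with different scene maps.

```python
import numpy as np


def make_scene_map(seed):
    """Hypothetical scene-specific map: image -> per-pixel 3D scene coordinates.

    A fixed random linear projection of pixel colors stands in for a
    network trained on one particular scene (assumption for illustration).
    """
    weights = np.random.default_rng(seed).normal(size=(3, 3))

    def scene_map(image):  # image: (H, W, 3)
        return image @ weights  # (H, W, 3) scene coordinates

    return scene_map


def project_to_rotation(matrix):
    """Map an arbitrary 3x3 matrix to a nearby rotation matrix via SVD."""
    u, _, vt = np.linalg.svd(matrix)
    d = np.sign(np.linalg.det(u @ vt))  # flip one axis if needed so det = +1
    return u @ np.diag([1.0, 1.0, d]) @ vt


class MapRelativeRegressor:
    """Hypothetical scene-agnostic regressor; one set of weights for all scenes."""

    def __init__(self, grid_hw=(4, 4), seed=0):
        h, w = grid_hw
        self.grid_hw = grid_hw
        # Untrained random weights: 9 outputs for rotation, 3 for translation.
        self.weights = np.random.default_rng(seed).normal(size=(h * w * 3, 12)) * 0.1

    def __call__(self, scene_coords):
        h, w = self.grid_hw
        assert scene_coords.shape == (h, w, 3)
        out = scene_coords.reshape(-1) @ self.weights
        pose = np.eye(4)  # 4x4 camera pose expressed in the map's frame
        pose[:3, :3] = project_to_rotation(out[:9].reshape(3, 3))
        pose[:3, 3] = out[9:]
        return pose


# The same regressor is applied to two different scene-specific maps.
image = np.random.default_rng(42).uniform(size=(4, 4, 3))
regressor = MapRelativeRegressor()
pose_a = regressor(make_scene_map(seed=1)(image))
pose_b = regressor(make_scene_map(seed=2)(image))
```

In this toy setup only `make_scene_map` changes per scene, while `regressor` is constructed once and reused; in a real system both components would be trained networks, and the regressor's weights would come from training across many scenes.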
