Ferret-UI: Implementing Multimodal LLMs for Enhanced Mobile UI Understanding

Introduction

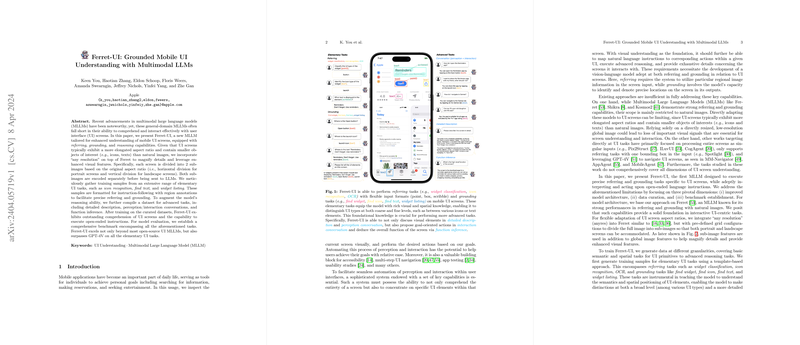

Mobile applications are ubiquitous in daily life, helping with a wide array of tasks from information search to entertainment. The need for more effective interaction with these interfaces has driven the development of systems that interpret and act on UI screens autonomously. This paper introduces Ferret-UI, a Multimodal LLM (MLLM) tailored to understanding mobile UI screens, with referring, grounding, and reasoning capabilities. General-domain MLLMs, while proficient with natural images, often fall short when applied directly to UI understanding because UI screens have distinctive characteristics: elongated aspect ratios and many small, densely packed elements such as icons and text. Ferret-UI addresses these challenges with an architecture designed to magnify UI details and with training datasets curated specifically for understanding and interacting with mobile interfaces.

Model Architecture and Training

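Ferret-UI is built on Ferret, an MLLM known for its strong referring and grounding abilities. To handle the distinct features of UI screens, Ferret-UI adds an "any resolution" approach: each screen is divided into two sub-images according to its original aspect ratio (a horizontal division into top and bottom halves for portrait screens, a vertical division into left and right halves for landscape screens), and both sub-images are encoded separately before being passed to the LLM. Because each half is processed at the image encoder's full input resolution, small UI elements are effectively magnified, so finer visual details are captured and the model can better understand and interact with them.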
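The aspect-ratio-based split can be sketched as a small function that returns crop boxes for the two sub-images. This is a minimal sketch, not Ferret-UI's code: the function name `split_screen`, the `(left, top, right, bottom)` pixel convention, and the choice to treat square screens as portrait are all assumptions made for illustration.

```python
from typing import List, Tuple

# (left, top, right, bottom) in pixels; hypothetical convention for this sketch
Box = Tuple[int, int, int, int]


def split_screen(width: int, height: int) -> List[Box]:
    """Return crop boxes for the two sub-images of a screen.

    Portrait screens (height >= width, an assumed tie-break for squares)
    are divided horizontally into top and bottom halves; landscape screens
    are divided vertically into left and right halves.
    """
    if width <= 0 or height <= 0:
        raise ValueError("screen dimensions must be positive")
    if height >= width:
        mid = height // 2
        return [(0, 0, width, mid), (0, mid, width, height)]
    mid = width // 2
    return [(0, 0, mid, height), (mid, 0, width, height)]


# A portrait phone screenshot is cut into top and bottom halves.
print(split_screen(1170, 2532))  # [(0, 0, 1170, 1266), (0, 1266, 1170, 2532)]
```

The boxes follow the same `(left, upper, right, lower)` order that Pillow's `Image.crop` accepts, so each one could be cropped and then resized to the encoder's input size. Since each half spans only half of the long axis, it is downscaled less along that axis than the full screen would be, which is the magnification effect described above.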

Training Ferret-UI involved curating a diverse dataset. It covers elementary UI tasks such as icon recognition and finding text, and it also targets advanced reasoning through datasets for detailed description, perception and interaction conversations, and function inference. This mix trains the model both to execute elementary UI tasks reliably and to carry out complex reasoning about UI screens.
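To make the elementary tasks concrete, the sketch below turns annotated UI elements into templated question/answer pairs for icon recognition (referring: region in, content out), finding text (grounding: content in, region out), and a screen-wide widget listing. Everything here is hypothetical: the `UIElement` schema, the `kind` labels, the plain-text box format, and the prompt templates are illustrative assumptions, not the paper's data format or wording.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class UIElement:
    kind: str                       # hypothetical labels such as "icon" or "text"
    label: str                      # icon class name or recognized text
    box: Tuple[int, int, int, int]  # (x1, y1, x2, y2) in screen pixels


def fmt_box(box: Tuple[int, int, int, int]) -> str:
    """Serialize a box as plain text so it can appear in a question or answer."""
    return "[" + ", ".join(str(v) for v in box) + "]"


def make_samples(elements: List[UIElement]) -> List[Dict[str, str]]:
    """Build templated QA pairs for three elementary tasks (illustrative templates)."""
    samples: List[Dict[str, str]] = []
    for el in elements:
        if el.kind == "icon":
            # Icon recognition (referring): the region is given, the name is asked for.
            samples.append({"question": f"What is the icon at {fmt_box(el.box)}?",
                            "answer": el.label})
        elif el.kind == "text":
            # Find text (grounding): the content is given, the region is asked for.
            samples.append({"question": f'Where is the text "{el.label}"?',
                            "answer": fmt_box(el.box)})
    # Widget listing: one sample that enumerates every element on the screen.
    listing = "; ".join(f'{el.kind} "{el.label}" {fmt_box(el.box)}' for el in elements)
    samples.append({"question": "List all widgets on the screen.", "answer": listing})
    return samples
```

Serializing coordinates as text keeps every task in the same text-in, text-out form, so referring and grounding differ only in whether the box appears in the question or in the answer.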

Evaluation and Benchmarking

Ferret-UI was evaluated on a comprehensive benchmark of UI understanding tasks. It significantly outperforms existing open-source UI MLLMs and even surpasses GPT-4V on elementary UI tasks. On advanced tasks, where the model must understand and interact with UIs through natural-language instructions, it also performs strongly, highlighting its potential impact on accessibility, app testing, and multi-step navigation.
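The summary does not say how grounding answers are scored. A common convention, assumed here rather than taken from the paper, counts a predicted box as correct when its intersection-over-union (IoU) with the ground-truth box is at least 0.5. The sketch below computes that accuracy; the function names `iou` and `grounding_accuracy` and the 0.5 default are illustrative assumptions.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(preds: Sequence[Box], targets: Sequence[Box],
                       threshold: float = 0.5) -> float:
    """Fraction of predictions whose IoU with the paired target meets the threshold."""
    if len(preds) != len(targets):
        raise ValueError("preds and targets must have the same length")
    if not targets:
        return 0.0
    hits = sum(iou(p, t) >= threshold for p, t in zip(preds, targets))
    return hits / len(targets)


# One exact match and one box shifted by half its width (IoU = 1/3).
print(grounding_accuracy([(0, 0, 10, 10), (0, 0, 10, 10)],
                         [(0, 0, 10, 10), (5, 0, 15, 10)]))  # 0.5
```

A threshold-based score like this tolerates small coordinate errors, which matters for dense UI screens where a predicted box may be off by a few pixels yet still clearly identify the right element.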

Implications and Future Directions

The development of Ferret-UI represents a notable step toward more nuanced and effective interaction with mobile UIs through AI. Its ability to understand and reason about UI elements has significant implications for building more intuitive and accessible digital interfaces. Future research could focus on expanding Ferret-UI's capabilities to encompass more varied UI designs and interaction modes. Additionally, exploring the integration of Ferret-UI with real-world applications offers a promising avenue for enhancing user experience and accessibility across mobile platforms.

Ferret-UI's architecture, tailored dataset, and performance on a diverse set of tasks underscore its potential to transform how AI systems understand and interact with mobile user interfaces. As AI continues to evolve, models like Ferret-UI pave the way for more intelligent and user-friendly applications, further narrowing the gap between humans and the interfaces they use.