Ferret: Refer and Ground Anything Anywhere at Any Granularity

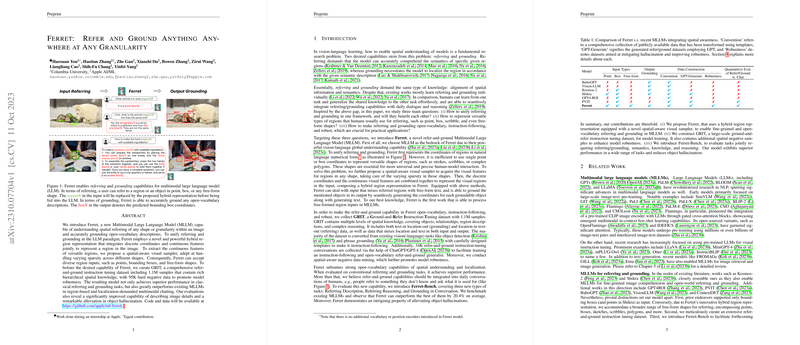

Ferret is a Multimodal LLM (MLLM) that can both refer to and ground regions in an image: it accepts spatial references of any shape or granularity as input and localizes the objects it mentions in its output. Its main contributions center on handling diverse region-based inputs and producing detailed, grounded outputs within a single unified framework.

Key Contributions

1. Hybrid Region Representation

Ferret uses a novel hybrid region representation that combines discrete coordinates with continuous visual features, which lets it represent points, bounding boxes, and free-form shapes alike. The coordinates are quantized into discrete values, while the continuous features are extracted by a Spatial-Aware Visual Sampler. Together they improve the model's handling of irregular shapes and sparsely distributed regions.
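To make the discrete half of this representation concrete, here is a minimal sketch of turning a point, a box, or a free-form mask into quantized coordinate text followed by a slot for the continuous feature. It assumes 1,000 coordinate bins and, for free-form shapes, uses the mask's enclosing box as the coordinates; the `<region_fea>` placeholder and the function names are hypothetical, not Ferret's actual tokens or code.

```python
import numpy as np

NUM_BINS = 1000  # assumption: coordinates are quantized into integer bins 0..999
REGION_TOKEN = "<region_fea>"  # hypothetical placeholder where a continuous feature would go


def quantize(value, size: int, num_bins: int = NUM_BINS) -> int:
    """Map a pixel coordinate in [0, size] to an integer bin in [0, num_bins - 1]."""
    bin_index = int(value * num_bins / size)
    return min(max(bin_index, 0), num_bins - 1)


def region_to_text(region, width: int, height: int) -> str:
    """Render a point, box, or boolean mask as quantized coordinates plus a feature slot."""
    if isinstance(region, np.ndarray):
        # Free-form shape given as a boolean mask: use its enclosing box as coordinates
        # (an assumption of this sketch).
        ys, xs = np.nonzero(region)
        coords = [xs.min(), ys.min(), xs.max(), ys.max()]
    else:
        coords = list(region)  # (x, y) point or (x1, y1, x2, y2) box, in pixels
    # x values are normalized by width, y values by height.
    sizes = [width, height] * (len(coords) // 2)
    quantized = [quantize(c, s) for c, s in zip(coords, sizes)]
    return "[" + ", ".join(str(q) for q in quantized) + "] " + REGION_TOKEN
```

For a 640x480 image, the center point `(320, 240)` becomes `[500, 500] <region_fea>`. In a real model, the placeholder's embedding would be replaced by the feature the visual sampler extracts for that region, which is what makes the representation hybrid.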

2. Spatial-Aware Visual Sampler

This module addresses the challenge of processing diverse region shapes. Borrowing techniques from 3D point cloud learning, it samples points within the region, gathers features from their neighborhoods, and pools them into a dense region feature. This lets Ferret handle input regions of varying density and shape.
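The sample, gather, and pool steps can be sketched in NumPy as below, assuming farthest point sampling and k-nearest-neighbor grouping as used in point cloud networks such as PointNet++. This is a simplified illustration, not Ferret's implementation: it reads features at the nearest pixel instead of interpolating, omits the learned layers that would fuse neighbor features, and runs a single gather-pool stage. `sample_region_feature` and its parameters are hypothetical.

```python
import numpy as np


def farthest_point_sampling(points: np.ndarray, num_samples: int, rng) -> np.ndarray:
    """Greedily pick indices of points that are as far as possible from those already chosen."""
    selected = [int(rng.integers(len(points)))]
    min_dist = np.linalg.norm(points - points[selected[0]], axis=1)
    for _ in range(num_samples - 1):
        next_idx = int(np.argmax(min_dist))
        selected.append(next_idx)
        min_dist = np.minimum(min_dist, np.linalg.norm(points - points[next_idx], axis=1))
    return np.array(selected)


def sample_region_feature(feature_map, mask, num_points=64, num_centers=16, k=8, seed=0):
    """Sketch of sample -> gather -> pool over an (H, W, C) feature map inside a boolean mask."""
    rng = np.random.default_rng(seed)
    ys, xs = np.nonzero(mask)
    # 1. Sample: random pixel locations inside the (possibly irregular or disjoint) region.
    pick = rng.choice(len(xs), size=min(num_points, len(xs)), replace=False)
    points = np.stack([xs[pick], ys[pick]], axis=1).astype(float)
    feats = feature_map[ys[pick], xs[pick]]  # nearest-pixel lookup, shape (n, C)
    # 2. Farthest point sampling keeps the centers spread out, even for sparse regions.
    centers = farthest_point_sampling(points, min(num_centers, len(points)), rng)
    pooled = []
    for c in centers:
        # 3. Gather: the k sampled points nearest to this center (including itself).
        dist = np.linalg.norm(points - points[c], axis=1)
        neighbors = np.argsort(dist)[:k]
        # Attach each neighbor's offset from the center; a learned layer would
        # normally fuse these, but it is omitted in this sketch.
        offsets = points[neighbors] - points[c]
        local = np.concatenate([feats[neighbors], offsets], axis=1)
        # 4. Pool: max over the neighborhood.
        pooled.append(local.max(axis=0))
    # Collapse the per-center features into one region feature of length C + 2.
    return np.max(np.stack(pooled), axis=0)
```

Because the sampler only ever sees point coordinates inside the mask, the same code path handles a small box, a thin stroke, or two disconnected patches without any shape-specific logic.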

3. Comprehensive Dataset: GRIT

Ferret is trained on GRIT, a dataset of 1.1M samples covering object detection, visual grounding, and complex reasoning, which supports robust performance across a range of tasks. GRIT also includes 95K hard negative samples intended to reduce object hallucination and improve robustness.
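The idea behind a hard negative can be illustrated with a small generator that asks the model to locate a category absent from the image, so the correct answer is a refusal rather than a box. The question/answer format, the dictionary schema, and `make_negative_sample` are hypothetical; GRIT's actual negative-mining recipe may differ.

```python
import random


def make_negative_sample(present: set, vocabulary: list, rng: random.Random) -> dict:
    """Build a hypothetical grounding query about a category that is NOT in the image."""
    absent = sorted(set(vocabulary) - present)
    if not absent:
        raise ValueError("every vocabulary category is present in this image")
    category = rng.choice(absent)
    return {
        "question": f"Where is the {category} in the image?",
        # The target teaches the model to say the object is missing instead of
        # hallucinating a location for it.
        "answer": f"There is no {category} in the image.",
    }
```

Training on such samples penalizes the tendency to produce a plausible-looking box for any object named in the prompt.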

4. Superior Performance in Classical Tasks

Ferret achieves state-of-the-art results on referring and grounding tasks within images. In the reported experiments, it outperforms contemporary models on region-based multimodal chatting as well as on standard visual grounding and grounded captioning benchmarks.

Empirical Evaluations

Referring Object Classification

Ferret performs robustly across referring formats (point, box, and free-form shape). On LVIS, for example, it reaches 68.35% accuracy with point referring, 80.46% with box referring, and 70.98% with free-form referring, substantially outperforming existing models such as Shikra and GPT4RoI.
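Accuracy per referring format can be tallied as below. This sketch assumes each test item is posed as a two-way choice about the referred region and that the predicted class name has already been extracted from the model's answer; the prompt template, record layout, and function names are hypothetical.

```python
from collections import defaultdict


def build_query(region_text: str, option_a: str, option_b: str) -> str:
    """Hypothetical two-way classification prompt about a referred region."""
    return f"Is the object {region_text} a {option_a} or a {option_b}?"


def accuracy_by_region_type(records) -> dict:
    """records: iterable of (region_type, predicted_class, true_class).

    Returns the percentage of correct predictions for each region type,
    comparing class names case-insensitively.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for region_type, predicted, truth in records:
        total[region_type] += 1
        correct[region_type] += predicted.strip().lower() == truth.strip().lower()
    return {t: 100.0 * correct[t] / total[t] for t in total}
```

Reporting the three formats separately, as the LVIS numbers above do, shows how much accuracy depends on how precisely the region is specified.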

Grounded Image Captioning

On the Flickr30k Entities dataset, Ferret surpasses its predecessors on both caption evaluation metrics (BLEU@4, METEOR, CIDEr, SPICE) and grounding evaluation metrics, reaching a CIDEr score of 76.1 and a grounding score of 38.03.

Visual Grounding

Ferret excels on standard visual grounding benchmarks, including RefCOCO, RefCOCO+, and RefCOCOg, reaching accuracies as high as 92.41% on some splits and improving markedly over previously leading approaches such as MDETR and Kosmos-2.
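On these referring-expression benchmarks, a predicted box is conventionally counted as correct when its intersection-over-union (IoU) with the ground-truth box is at least 0.5. A minimal sketch of that metric follows, assuming boxes are already in pixel coordinates as (x1, y1, x2, y2); the function names are illustrative.

```python
def box_iou(a, b) -> float:
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(pred_boxes, gt_boxes, threshold: float = 0.5) -> float:
    """Percentage of predictions whose IoU with the ground truth reaches the threshold."""
    hits = sum(box_iou(p, g) >= threshold for p, g in zip(pred_boxes, gt_boxes))
    return 100.0 * hits / len(gt_boxes)
```

A box shifted by half its width, such as `(5, 0, 15, 10)` against `(0, 0, 10, 10)`, has an IoU of 1/3 and therefore counts as a miss.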

Ferret-Bench for Multimodal Chatting

Ferret is also evaluated on the newly proposed Ferret-Bench, which covers tasks that require intricate referring and grounding within conversations. On the Referring Reasoning task, for instance, Ferret substantially outperforms models such as Kosmos-2 and Shikra, and its advantage is most evident on tasks that demand detailed spatial understanding and context-aware reasoning.
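Conversational benchmarks of this kind are commonly scored by having a strong LLM judge rate both the model's answer and a reference answer, then reporting the model's total as a percentage of the reference total. The sketch below assumes Ferret-Bench uses this LLaVA-Bench-style relative scoring; the function name and the example ratings are hypothetical.

```python
def relative_score(model_scores, reference_scores) -> float:
    """Judge ratings of the model's answers as a percentage of the reference answers' ratings."""
    if len(model_scores) != len(reference_scores) or not reference_scores:
        raise ValueError("need matching, non-empty score lists")
    return 100.0 * sum(model_scores) / sum(reference_scores)
```

For example, ratings of 6 and 8 against reference ratings of 8 and 8 give a relative score of 87.5. Normalizing by the reference makes scores comparable across questions of different difficulty.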

Implications and Future Directions

The implications of Ferret's capabilities are multifaceted:

- Practical Applications: Ferret's spatial understanding and grounding abilities could benefit real-world applications such as autonomous navigation, human-computer interaction, and augmented reality.

- Theoretical Impact: Ferret's hybrid representation and spatial-aware sampling offer a new way to integrate continuous and discrete data in MLLMs and may guide future research on multimodal learning.

- Future Research: One promising direction is extending Ferret to output segmentation masks in addition to bounding boxes, which could bridge the gap between coarse localization and fine-grained scene understanding.

Conclusion

Ferret represents a significant advance in multimodal LLMs, achieving state-of-the-art results on spatial understanding and grounding tasks. Its hybrid region representation and spatial-aware visual sampler let it handle diverse and complex visual inputs. With its comprehensive training dataset and strong performance across benchmarks, Ferret addresses limitations of earlier MLLMs in region-level understanding and opens up new possibilities for future research and applications in AI.