Abstract

Google Research introduces ScreenAI, a vision-language model for understanding screen user interfaces (UIs) and infographics. It combines the PaLI architecture with the flexible patching mechanism of pix2struct. On tasks such as question answering, navigation, and summarization, ScreenAI achieves new state-of-the-art or best-in-class results on multiple UI- and infographics-based benchmarks, despite its modest size of 5 billion parameters. It also introduces a textual representation for UIs and uses LLMs to generate large training datasets automatically.

Introduction

ScreenAI is motivated by the observation that infographics and digital UIs share design principles and are both widely used for information exchange and user interaction. Infographics aim to make complex data easy to interpret, and interactive digital environments aim for the same ease in human-computer interaction. Both are pixel-rich visual formats, which makes them hard for models to understand. ScreenAI addresses this challenge with a single unified model that handles both kinds of content.

Methodology

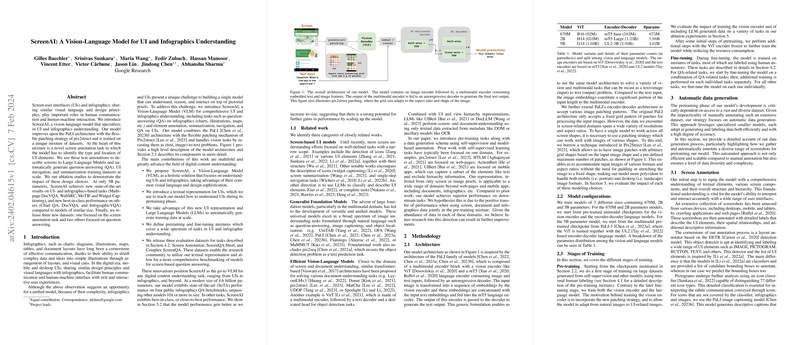

At its core, ScreenAI is a vision-language model (VLM) trained on a mixture of datasets built largely through self-supervision, with minimal human labeling. Central to this mixture is a new screen annotation task, in which the model identifies UI elements and describes them as text. These text annotations are then passed to LLMs, which generate varied training data at scale. The architecture resembles PaLI, with one key change: it adopts pix2struct patching to handle images of varying shapes and aspect ratios. The model's parameters are split between the vision and language components, and training mixes traditional image and text data sources with screen-related tasks.
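The idea behind aspect-ratio-preserving patching can be illustrated with a minimal sketch. It picks a patch grid whose shape follows the image's shape while staying within a fixed patch budget, in the spirit of pix2struct-style patching. The function name `patch_grid` and the default values for `patch_size` and `max_patches` are assumptions chosen for illustration; they are not taken from ScreenAI's implementation.

```python
import math


def patch_grid(height, width, patch_size=16, max_patches=1024):
    """Return (rows, cols) of a patch grid that roughly preserves the
    image's aspect ratio while using at most `max_patches` patches.

    Illustrative sketch only: names and defaults are hypothetical.
    """
    # Scale factor such that the rescaled image yields about max_patches patches.
    scale = math.sqrt(max_patches * (patch_size / height) * (patch_size / width))
    # Number of patches along each axis after rescaling, at least 1 each.
    rows = max(min(math.floor(scale * height / patch_size), max_patches), 1)
    cols = max(min(math.floor(scale * width / patch_size), max_patches), 1)
    return rows, cols


# A tall, phone-like screenshot gets more rows than columns,
# while a square image gets a square grid.
tall_rows, tall_cols = patch_grid(2048, 512)
square_rows, square_cols = patch_grid(512, 512)
```

With a fixed square grid, a tall mobile screenshot and a wide desktop screenshot would both be distorted to the same shape. A grid that adapts to the aspect ratio avoids that distortion, which is the motivation for this kind of patching on screen images.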

Evaluations and Results

ScreenAI's evaluation shows leading results across multiple benchmarks, including Multipage DocVQA, WebSRC, MoTIF, Widget Captioning, and InfographicVQA, where it outperforms or rivals other advanced models. The results cover question answering, comprehension, and navigation tasks, among others. One notable factor is the inclusion of OCR data during fine-tuning: although it requires a longer input length, it appears to improve the model's performance on QA tasks.
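The trade-off between OCR input and input length can be sketched as follows. This is a hypothetical illustration of appending OCR text to a question prompt, not ScreenAI's actual input format: the function `build_qa_input`, the `question:` and `ocr:` prefixes, and the `max_ocr_words` budget are all assumptions.

```python
def build_qa_input(question, ocr_lines, max_ocr_words=None):
    """Build a text input for a QA example, optionally with OCR text appended.

    Hypothetical format for illustration; the prefixes and the word
    budget are assumptions, not part of ScreenAI.
    """
    # Flatten the OCR lines into a single list of whitespace-separated words.
    words = " ".join(ocr_lines).split()
    # Optionally cap the OCR text so the input stays within a length budget.
    if max_ocr_words is not None:
        words = words[:max_ocr_words]
    if not words:
        return f"question: {question}"
    return f"question: {question} ocr: {' '.join(words)}"


question = "What is the total?"
ocr = ["Total: 42", "Tax: 3"]

without_ocr = build_qa_input(question, [])
with_ocr = build_qa_input(question, ocr)
capped = build_qa_input(question, ocr, max_ocr_words=2)
```

The input with OCR is strictly longer than the one without, which is why adding OCR text raises the required input length. A cap such as `max_ocr_words` is one simple way to bound that cost.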

Conclusion

In conclusion, ScreenAI is a notable step forward for VLMs, able to interpret and interact with digital content spanning documents, infographics, and various UI formats. Its approach, from LLM-driven data generation to its training strategy, demonstrates the strength of a unified VLM across a range of screen-based interactions. Google Research also releases three datasets, giving the community a resource for developing models for screen-based question answering and related tasks.