InternVideo2: A Comprehensive Video Foundation Model for Enhanced Multimodal Understanding

Introduction

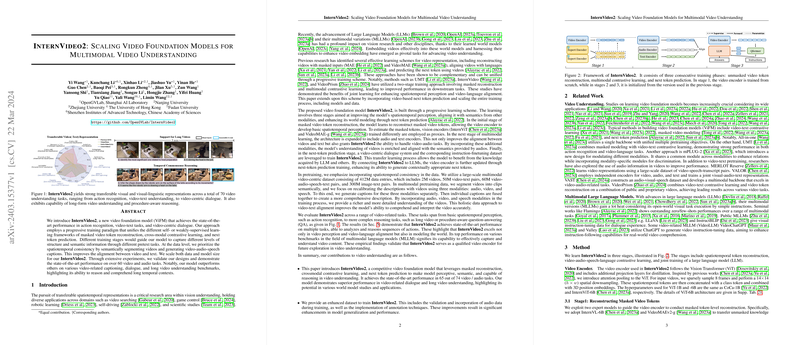

Rapid advances in video understanding have enabled models that comprehend complex video content along multiple dimensions. The paper introduces InternVideo2, a state-of-the-art Video Foundation Model (ViFM) designed for a wide range of video understanding tasks. The model uses a progressive training framework that unifies masked video token reconstruction, cross-modal contrastive learning, and next-token prediction to build a deep understanding of video semantics. Combining these objectives lets InternVideo2 perform strongly across a broad spectrum of video and audio tasks.

Methodology and Innovations

InternVideo2 is distinguished by a progressive learning scheme. Its successive training stages build up spatiotemporal perception, semantic alignment across modalities, and world-modeling abilities.

- Progressive Learning Scheme: InternVideo2's training is divided into distinct stages, each targeting a different aspect of video understanding. First, the model learns to reconstruct masked video tokens, which strengthens its spatiotemporal perception. Next, it is trained with multimodal contrastive learning that brings in audio and text for richer semantic understanding. Finally, the video encoder is connected to a large language model and trained with next-token prediction to refine its generative and dialogue capabilities.

- In-depth Spatiotemporal Understanding: InternVideo2 builds on a vision transformer (ViT) encoder and applies a different pretext task at each stage. This gives it the robust spatiotemporal understanding needed to process video inputs effectively.

- Cross-modal Contrastive Learning and Semantic Alignment: Adding audio and text modalities during training improves the alignment between video and these auxiliary signals. It also broadens the range of tasks the model can handle.
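The first stage in the Progressive Learning Scheme bullet, masked video token reconstruction on a ViT, can be illustrated with a minimal Python sketch. It assumes the common video-ViT practice of splitting a clip into non-overlapping tubelets (t frames by p × p pixels). It then hides a random subset of the resulting tokens and scores a prediction only at the hidden positions. The helper names (`num_tubelet_tokens`, `random_mask`, `masked_mse`), the tubelet sizes, the masking ratio, and the plain squared-error loss are all hypothetical choices for illustration. InternVideo2's actual reconstruction targets and masking strategy may differ.

```python
# Illustrative sketch only: helper names, sizes, ratio, and loss are hypothetical,
# not taken from InternVideo2's code.
import random
from typing import List

Vector = List[float]


def num_tubelet_tokens(frames: int, height: int, width: int, t: int, p: int) -> int:
    """Count the non-overlapping t x p x p tubelet tokens a clip is split into."""
    if frames % t or height % p or width % p:
        raise ValueError("clip dimensions must be divisible by the tubelet size")
    return (frames // t) * (height // p) * (width // p)


def random_mask(num_tokens: int, ratio: float, seed: int = 0) -> List[bool]:
    """Mark round(ratio * num_tokens) randomly chosen token positions as masked (True)."""
    rng = random.Random(seed)
    masked = set(rng.sample(range(num_tokens), round(ratio * num_tokens)))
    return [i in masked for i in range(num_tokens)]


def masked_mse(pred: List[Vector], target: List[Vector], mask: List[bool]) -> float:
    """Mean squared error over the elements of masked tokens; visible tokens are ignored."""
    sq_errors = [
        (a - b) ** 2
        for p_vec, t_vec, is_masked in zip(pred, target, mask)
        if is_masked
        for a, b in zip(p_vec, t_vec)
    ]
    if not sq_errors:
        raise ValueError("no masked tokens to score")
    return sum(sq_errors) / len(sq_errors)


# A 16-frame 224x224 clip with 2-frame, 16x16-pixel tubelets (illustrative sizes).
n = num_tubelet_tokens(frames=16, height=224, width=224, t=2, p=16)
mask = random_mask(n, ratio=0.9)
print(n, sum(mask))  # 1568 1411

# Toy 2-D "token features": only the second (masked) token contributes to the loss.
target = [[1.0, 2.0], [3.0, 4.0]]
pred = [[0.0, 0.0], [3.0, 5.0]]
print(masked_mse(pred, target, [False, True]))  # 0.5
```

The first token in the toy example is badly predicted but visible, so it does not affect the loss. This reflects the design choice behind masked reconstruction: the model is only graded on content it had to infer from surrounding context.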

Because its training draws on audio and textual context as well as visual cues, InternVideo2 is well suited to complex multimodal understanding tasks.
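The cross-modal contrastive learning described in the list above is commonly implemented with a symmetric InfoNCE (CLIP-style) loss. The sketch below assumes that form: within a batch, each video embedding should score highest against its own caption embedding, and each caption against its own video. The function names, the 0.07 temperature, and the toy 2-D embeddings are assumptions for illustration, not InternVideo2's published configuration. The same loss could in principle be applied to other modality pairs, such as video and audio.

```python
# Illustrative sketch of a symmetric InfoNCE loss; names and the temperature
# value are assumptions, not InternVideo2's actual configuration.
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def diagonal_cross_entropy(logits: List[List[float]]) -> float:
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the row max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_info_nce(video: List[Vector], text: List[Vector], temperature: float = 0.07) -> float:
    """CLIP-style loss: (video[i], text[i]) are positive pairs, all other pairings are negatives."""
    v = [l2_normalize(x) for x in video]
    t = [l2_normalize(x) for x in text]
    # logits[i][j] = cosine(video i, text j) / temperature
    logits = [[sum(a * b for a, b in zip(vi, tj)) / temperature for tj in t] for vi in v]
    logits_t2v = [list(col) for col in zip(*logits)]  # transpose for the text-to-video direction
    return 0.5 * (diagonal_cross_entropy(logits) + diagonal_cross_entropy(logits_t2v))


video = [[1.0, 0.0], [0.0, 1.0]]
aligned = symmetric_info_nce(video, [[0.9, 0.1], [0.1, 0.9]])
swapped = symmetric_info_nce(video, [[0.1, 0.9], [0.9, 0.1]])
print(aligned < swapped)  # True: matched captions give the lower loss
```

Dividing by a small temperature sharpens the softmax, so that near-miss negatives are penalized more heavily. CLIP, for example, learns this value as a parameter rather than fixing it.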
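The final stage in the Progressive Learning Scheme bullet, next-token prediction, can be sketched under a common setup. Video tokens enter a language model as a prefix, and the model is trained to predict each following text token, with the loss computed on text positions only. `next_token_loss` is a hypothetical helper, and the hand-written logits stand in for a real model's outputs. Restricting the loss to text positions is an assumption here, not a detail taken from the paper.

```python
# Illustrative sketch: a hypothetical helper for video-prefixed next-token loss,
# not InternVideo2's code. Logits would normally come from a language model.
import math
from typing import Sequence


def next_token_loss(
    logits: Sequence[Sequence[float]], text_ids: Sequence[int], num_video_tokens: int
) -> float:
    """Average next-token cross-entropy over the text part of a video-prefixed sequence.

    logits has one row of vocabulary scores per input position (video tokens
    first, then text tokens); row k scores the token expected at position k + 1.
    Video positions are conditioned on but never used as prediction targets.
    """
    if num_video_tokens < 1:
        raise ValueError("need at least one video position before the first text token")
    if len(logits) != num_video_tokens + len(text_ids):
        raise ValueError("expected one logits row per video and text position")
    total = 0.0
    for j, target in enumerate(text_ids):
        row = logits[num_video_tokens + j - 1]  # the position just before text token j
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[target]  # equals -log softmax(row)[target]
    return total / len(text_ids)


# 2 video positions + 3 text tokens, vocabulary of 4: uniform scores give loss log(4).
uniform = [[0.0] * 4 for _ in range(5)]
print(round(next_token_loss(uniform, [1, 2, 3], num_video_tokens=2), 4))  # 1.3863
```

A model that has learned nothing scores every vocabulary entry equally and pays log(V) per token. Training pushes the loss below that baseline by making the text predictions depend on the video prefix.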

Empirical Validation and Performance

In extensive experiments, InternVideo2 delivers strong results on over 65 video and audio tasks. Notably, it achieves state-of-the-art performance in action recognition, video-text understanding, and video-centric dialogue. These results indicate that InternVideo2 can effectively capture and reason over long temporal contexts and complex multimodal data.

- Action Recognition: InternVideo2 sets new state-of-the-art results on action recognition benchmarks. Its architecture and training recipe allow it to classify actions more accurately than its predecessors.

- Video-Text Understanding: On video-text tasks, InternVideo2 aligns visual and textual semantics. This lets it reason jointly over both and produce contextually relevant outputs.

- Video-Centric Dialogue: The model performs strongly in video-centric dialogue. This supports the development of interactive systems that can hold meaningful conversations about video content.

Implications and Future Work

InternVideo2 marks a significant step forward in video understanding, offering a single versatile model that handles a wide array of multimodal tasks. Its success opens up applications ranging from improved content recommendation systems to sophisticated interactive agents.

Looking ahead, there is considerable room to refine InternVideo2's training process and extend its applications. Future work could explore richer multimodal interactions or tackle open challenges in video understanding, building on the foundation InternVideo2 provides.

Conclusion

InternVideo2 is a notable advance in video foundation models, characterized by its progressive learning scheme and robust multimodal understanding. Its strong performance across diverse tasks underscores its effectiveness as a general-purpose tool for video understanding. It has the potential to contribute to both research and practical AI applications.