Overview of VideoLLaMA3: Frontier Multimodal Foundation Models

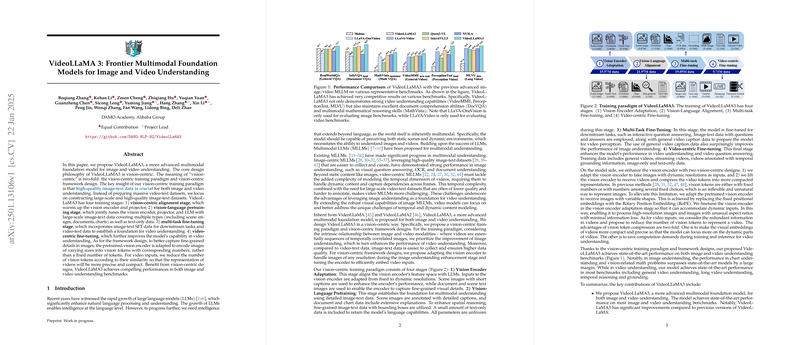

The paper under discussion introduces VideoLLaMA3, an advanced multimodal foundation model designed to improve image and video understanding. The authors articulate a vision-centric approach that emphasizes high-quality image-text data as crucial to both image and video comprehension, shifting away from the conventional reliance on extensive video-text datasets. VideoLLaMA3 is structured around a vision-centric training paradigm with four distinct stages that progressively enhance the model's capability to interpret visual data.

Training proceeds in four stages: first, vision-centric alignment, which warms up the vision encoder and projector; second, vision-language pretraining, which jointly tunes the components on large-scale, diverse image-text datasets; third, multi-task fine-tuning, which adds image-text data for downstream tasks together with video-text data; and finally, video-centric fine-tuning, which further refines video understanding.
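The ordering of the four stages can be written down as a small schedule. The following is only an illustrative sketch: the `Stage` class, the `data` labels, and the helper `first_stage_with_video` are hypothetical names introduced here, not part of the paper or its code. The entries record only what the description above states, and the data label for the last stage is an assumption inferred from its name.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Stage:
    name: str
    purpose: str
    # Data types named in the summary for this stage; empty if none are named.
    data: Tuple[str, ...]


STAGES = (
    Stage("vision-centric alignment",
          "warm up the vision encoder and projector", ()),
    Stage("vision-language pretraining",
          "jointly tune components", ("image-text",)),
    Stage("multi-task fine-tuning",
          "prepare for downstream tasks", ("image-text", "video-text")),
    # Assumption: the summary names no data for this stage; "video-text"
    # is inferred from the stage name only.
    Stage("video-centric fine-tuning",
          "refine video understanding", ("video-text",)),
)


def first_stage_with_video(stages: Tuple[Stage, ...] = STAGES) -> Optional[str]:
    """Return the name of the earliest stage whose data includes video-text."""
    for stage in stages:
        if "video-text" in stage.data:
            return stage.name
    return None


print(first_stage_with_video())  # multi-task fine-tuning
```

Read this way, video-text data enters only at the third stage, which matches the claim that image-text data carries the early part of training.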

The framework of VideoLLaMA3 is designed to capture fine-grained detail. The authors adapt a pretrained vision encoder to accept images of varying sizes, so that the number of vision tokens scales with the input rather than being fixed. For video inputs, the model reduces the number of vision tokens according to their similarity, which makes the video representation more precise and compact and keeps the computational cost down.
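To make the idea of similarity-based token reduction concrete, here is a minimal sketch in plain Python. It is not the authors' method: the summary does not specify the similarity measure, so this example assumes cosine similarity between a patch token and the token at the same position in the previous frame, with an arbitrary threshold of 0.95. The names `cosine` and `keep_mask` are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0.0 else 0.0


def keep_mask(frames: Sequence[Sequence[Vector]],
              threshold: float = 0.95) -> List[List[bool]]:
    """Mark which patch tokens to keep, one boolean per (frame, patch).

    Assumption: a token is redundant when it is nearly identical to the
    token at the same patch position in the previous frame. The first
    frame is always kept in full.
    """
    if not frames:
        return []
    mask = [[True] * len(frames[0])]
    for prev, cur in zip(frames, frames[1:]):
        mask.append([cosine(p, c) <= threshold for p, c in zip(prev, cur)])
    return mask


# Two frames with three patch tokens each (toy 2-dimensional features).
frames = [
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    [[1.0, 0.0], [1.0, 0.0], [2.0, 2.0]],  # only the middle patch changes direction
]
mask = keep_mask(frames)
kept = sum(flag for row in mask for flag in row)
print(mask)  # [[True, True, True], [False, True, False]]
print(kept)  # 4
```

In this toy input, four of the six tokens survive: the whole first frame plus the one patch in the second frame whose content changed. Comparing against the previous frame is one design choice among several; comparing against the last kept token at each position would be another.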

These design choices are reflected in the benchmark results for both image and video understanding. The experiments compare VideoLLaMA3 with preceding models, and it achieves superior results on VideoMME, PerceptionTest, and MLVU for video understanding, and on DocVQA and MathVista for image comprehension. These results highlight the model's strength on tasks that require detailed comprehension of both static and dynamic visual data.

Implications for Future Developments in AI

The research presented in this paper has significant implications for the development of AI systems capable of more nuanced understanding across multiple modalities. The emphasis on high-quality image-text data as a backbone for video comprehension highlights a practical approach toward addressing the complexities inherent in video data.

Practically, the insights from VideoLLaMA3 suggest that future models could benefit from a similar vision-centric approach, where sophisticated image understanding serves as the foundation for robust video analysis capabilities. The efficient repurposing of image-focused datasets could also streamline the development pipeline, reducing reliance on labor-intensive video data curation.

Theoretically, this work underscores the potential for transferring knowledge across modalities within AI systems, providing a roadmap for integrating various visual inputs within a unified architecture. The work invites further exploration into how vision-centric training paradigms can be adapted for other complex data types or combined with additional sensory inputs like audio, enhancing the AI's overall perceptual and reasoning capabilities.

In summary, VideoLLaMA3 represents a significant step forward in multimodal AI. Its methodology for jointly optimizing image and video understanding is well reasoned and sets a precedent for future research and development.