Enhancing Logical Reasoning in AI with Lean: Introducing LeanReasoner

Overview of LeanReasoner

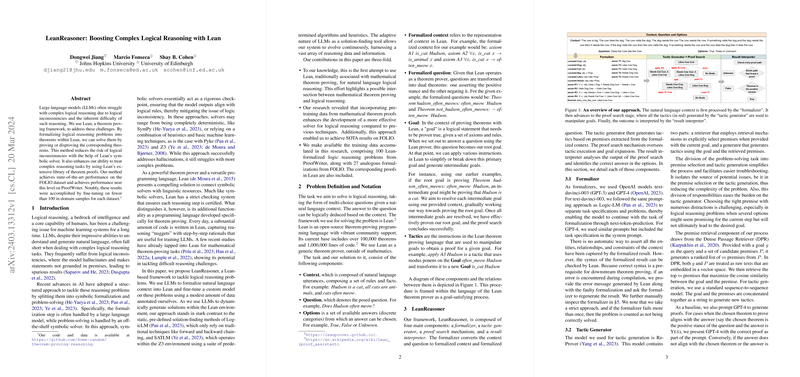

LeanReasoner is a novel framework designed to improve the performance of large language models (LLMs) on complex logical reasoning tasks. It integrates Lean, an interactive theorem prover: each logical reasoning problem is formalized as a theorem, and the system then tries to solve it by proving or disproving that theorem. Because the resulting proofs are checked by Lean's symbolic solver, the approach significantly reduces the risk of logically inconsistent reasoning and handles intricate reasoning tasks more reliably. With this method, LeanReasoner achieves state-of-the-art performance on the FOLIO dataset and near-state-of-the-art performance on ProofWriter, while fine-tuning on fewer than 100 in-domain samples for each dataset.
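To make "formalizing a problem as a theorem" concrete, the following is a minimal sketch of what a formalization might look like for a toy ProofWriter-style question ("Bob is big. All big things are red. Is Bob red?"). It is written in Lean 4 syntax, and every name in it (`Obj`, `Big`, `Red`, `fact1`, `rule1`, `bob_is_red`) is hypothetical; LeanReasoner's actual formalizations may use a different Lean version, structure, and naming.

```lean
-- Hypothetical Lean 4 formalization of a toy reasoning problem.
axiom Obj : Type
axiom Bob : Obj
axiom Big : Obj → Prop
axiom Red : Obj → Prop

axiom fact1 : Big Bob                    -- "Bob is big."
axiom rule1 : ∀ x : Obj, Big x → Red x   -- "All big things are red."

-- Proving this theorem answers the question "Is Bob red?" with True.
theorem bob_is_red : Red Bob := by
  apply rule1   -- reduces the goal `Red Bob` to `Big Bob`
  exact fact1   -- closes `Big Bob` with the stated fact
```

Disproving works the same way in reverse: to show that a claim such as "Bob is not red" (`¬ Red Bob`) is false, one proves its negation, which here follows directly from `bob_is_red`.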

Key Components of LeanReasoner

LeanReasoner encompasses four primary components:

- Formalizer: Uses OpenAI models (GPT-3 and GPT-4) to translate natural-language contexts into formal Lean theorems. It serves as the interface between natural-language input and the symbolic world of theorem proving.

- Tactic Generator: Uses the ReProver model, which retrieves relevant premises and generates proof tactics, to construct proofs of the formalized theorem.

- Proof Search Mechanism: Selects which generated tactics to apply and manages proof construction, growing a proof tree that moves toward a complete proof of the theorem.

- Result Interpreter: Analyzes the output from the proof search to determine the correct answer among the provided options.
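The interplay between the tactic generator, proof search, and result interpreter can be illustrated with a toy, self-contained sketch. It assumes a drastically simplified setting: facts and Horn-style rules are plain strings rather than Lean terms; a hypothetical `propose_tactics` function stands in for ReProver by enumerating matching rules instead of generating tactics with a neural model; proof search is a best-first search (a common choice for model-guided provers, not necessarily the exact procedure in the paper); and the interpreter assumes a three-way True/False/Unknown answer set. All names are illustrative.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Toy Horn-style rule: if all premises hold, the conclusion holds."""
    premises: tuple[str, ...]
    conclusion: str


def negate(atom: str) -> str:
    """Toggle a 'not ' prefix; negated atoms are plain strings in this toy encoding."""
    return atom[len("not "):] if atom.startswith("not ") else "not " + atom


def propose_tactics(goal: str, facts: frozenset[str], rules: list[Rule]) -> list[tuple[float, tuple]]:
    """Hypothetical stand-in for the tactic generator (ReProver in LeanReasoner).

    Returns (cost, step) pairs. A step either closes the goal with a known fact
    ("exact") or replaces it with the premises of a matching rule ("apply").
    """
    candidates: list[tuple[float, tuple]] = []
    if goal in facts:
        candidates.append((0.0, ("exact", goal)))
    for rule in rules:
        if rule.conclusion == goal:
            # Toy heuristic: a step that opens more subgoals costs more.
            candidates.append((float(len(rule.premises)), ("apply", rule)))
    return candidates


def best_first_search(goal: str, facts: frozenset[str], rules: list[Rule],
                      max_expansions: int = 1000) -> bool:
    """Expand the cheapest proof state first; a state is the tuple of open goals."""
    tie = 0  # unique tie-breaker so heapq never compares goal tuples
    frontier: list[tuple[float, int, tuple[str, ...]]] = [(0.0, tie, (goal,))]
    seen: set[tuple[str, ...]] = set()
    expansions = 0
    while frontier and expansions < max_expansions:
        cost, _, goals = heapq.heappop(frontier)
        if not goals:
            return True  # every goal closed: a proof was found
        if goals in seen:
            continue
        seen.add(goals)
        expansions += 1
        first, rest = goals[0], goals[1:]
        for step_cost, (kind, payload) in propose_tactics(first, facts, rules):
            new_goals = rest if kind == "exact" else payload.premises + rest
            tie += 1
            heapq.heappush(frontier, (cost + step_cost, tie, new_goals))
    return False  # frontier exhausted or budget reached without a proof


def interpret(statement: str, facts: frozenset[str], rules: list[Rule]) -> str:
    """Toy result interpreter: prove the statement, else try to prove its negation."""
    if best_first_search(statement, facts, rules):
        return "True"
    if best_first_search(negate(statement), facts, rules):
        return "False"
    return "Unknown"


# Hypothetical formalizer output for a tiny problem.
FACTS = frozenset({"Big(Bob)", "Young(Anne)"})
RULES = [
    Rule(("Big(Bob)",), "Red(Bob)"),       # "If Bob is big, Bob is red."
    Rule(("Red(Bob)",), "not Cold(Bob)"),  # "If Bob is red, Bob is not cold."
]

if __name__ == "__main__":
    for query in ("Red(Bob)", "Cold(Bob)", "Red(Anne)"):
        print(query, "->", interpret(query, FACTS, RULES))
```

Running the script prints `Red(Bob) -> True`, `Cold(Bob) -> False`, and `Red(Anne) -> Unknown`. In a real system, step costs would typically come from the tactic generator's scores, and each step would be checked by Lean rather than by string matching.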

Experimental Setup and Results

The LeanReasoner framework was evaluated on two logical reasoning datasets: ProofWriter and FOLIO. In both cases, the model was fine-tuned on a modest amount of domain-specific annotation, fewer than 100 in-domain samples per dataset.

- ProofWriter: LeanReasoner achieved near-state-of-the-art performance, relying on the rigor of Lean's symbolic solver to work through the dataset's multi-step deductions. Reaching this accuracy with so few in-domain fine-tuning samples underscores the approach's data efficiency.

- FOLIO: The framework achieved state-of-the-art performance, a notable result given FOLIO's more complex logical structure and intricate linguistic constructions. Its success on FOLIO highlights its ability to handle advanced logical reasoning challenges.

Implications and Speculation on Future Developments

LeanReasoner marks a significant advance in combining symbolic solvers with LLMs for logical reasoning. It demonstrates how theorem provers like Lean can strengthen the logical reasoning of LLMs: once a problem has been formalized, every step of the resulting proof is checked against the rules of logic.

This research's implications extend beyond merely enhancing model performance on reasoning tasks. It suggests a promising direction for future AI development, where the fusion of symbolic reasoning and natural language understanding can lead to more reliable, logically consistent AI systems.

Looking ahead, promising avenues include integrating other symbolic solvers, improving the formalization step, and scaling the approach to a broader range of logical reasoning tasks. Training LLMs on datasets tailored specifically to theorem proving could further strengthen their reasoning abilities and potentially lead to breakthroughs in AI's logical reasoning capabilities.

In conclusion, LeanReasoner blends the structured reasoning of symbolic solvers with the flexible language understanding of LLMs. Its results on challenging datasets suggest that this combination is robust, and they offer a glimpse of where AI research in logical reasoning and theorem proving may be heading.