Generative AI for Synthetic Data Generation: A Professional Overview

The paper "Generative AI for Synthetic Data Generation: Methods, Challenges and the Future" by Xu Guo and Yiqiang Chen examines how Generative AI, specifically large language models (LLMs), can be used to create synthetic data. It surveys the methodologies, challenges, and potential applications of LLM-based synthetic data generation.

Methodologies

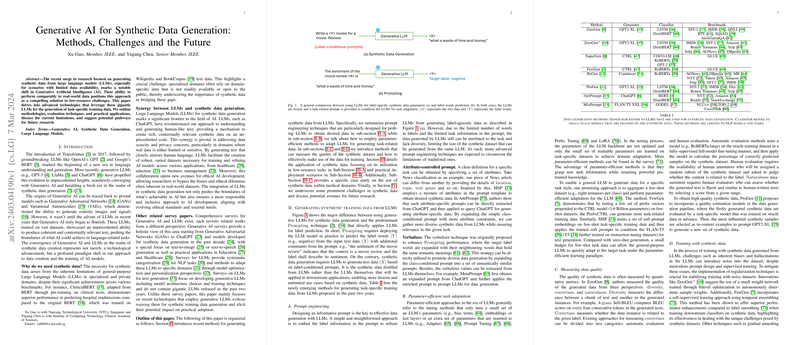

The paper details the advancements in generating synthetic data using LLMs by emphasizing several innovative methodologies. Central to these methods is prompt engineering, wherein prompts are refined to more effectively guide LLMs in producing task-specific data. Techniques such as attribute-controlled prompts and the use of verbalizers are employed to enhance the relevance and diversity of the generated data.
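A minimal sketch of how attribute-controlled prompts and verbalizers might be combined, assuming a sentiment-classification task. The label words, attribute names, and prompt template are hypothetical and chosen for illustration; they are not taken from the paper.

```python
import itertools

# Hypothetical verbalizers: natural-language wordings for each class label.
VERBALIZERS = {
    "positive": ["positive", "favorable"],
    "negative": ["negative", "critical"],
}

# Hypothetical attributes used to vary the requested samples.
ATTRIBUTES = {
    "domain": ["restaurant", "laptop"],
    "length": ["one sentence", "three sentences"],
}

def build_prompts(label):
    """Return one prompt per (verbalizer, attribute combination) for a label."""
    keys = sorted(ATTRIBUTES)
    prompts = []
    for word in VERBALIZERS[label]:
        for values in itertools.product(*(ATTRIBUTES[k] for k in keys)):
            attrs = dict(zip(keys, values))
            prompts.append(
                f"Write a {word} review of a {attrs['domain']} "
                f"in {attrs['length']}."
            )
    return prompts

prompts = build_prompts("positive")
for p in prompts:
    print(p)
```

Each prompt would then be sent to an LLM, and the label used to build the prompt would serve as the label of the generated text. Varying the attributes is one way to push the generated samples toward greater diversity.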

Another aspect the paper addresses is parameter-efficient task adaptation. Methods like FewGen apply parameter-efficient tuning strategies to align a general-purpose LLM with a specific task, using few-shot data to update certain model components rather than the entire model. This approach allows for effective task adaptation while maintaining computational efficiency.
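The general idea of parameter-efficient adaptation can be illustrated with a toy example: the base model stays frozen, and only a few added parameters are fitted to few-shot data. This is a sketch under simplifying assumptions and is not FewGen's actual procedure. The linear "base model", the scale-and-shift adapter, and the data are all hypothetical.

```python
# Frozen "base model": fixed weights standing in for a pretrained LLM.
BASE_WEIGHTS = (2.0, -1.0)

def base_model(x):
    """Frozen forward pass; these weights are never updated."""
    return BASE_WEIGHTS[0] * x[0] + BASE_WEIGHTS[1] * x[1]

def tune_adapter(few_shot, steps=2000, lr=0.05):
    """Fit only a scale and a shift on top of the frozen base output,
    using gradient descent on mean squared error."""
    scale, shift = 1.0, 0.0  # the only trainable parameters
    n = len(few_shot)
    for _ in range(steps):
        grad_scale = grad_shift = 0.0
        for x, y in few_shot:
            h = base_model(x)
            err = scale * h + shift - y
            grad_scale += 2 * err * h / n
            grad_shift += 2 * err / n
        scale -= lr * grad_scale
        shift -= lr * grad_shift
    return scale, shift

# Hypothetical few-shot task data generated as y = 3 * base_model(x) + 1.
few_shot = [((1, 0), 7.0), ((0, 1), -2.0), ((1, 1), 4.0), ((2, 1), 10.0)]
scale, shift = tune_adapter(few_shot)
print(scale, shift)
```

Only two numbers are learned here, while the base weights are untouched. Real parameter-efficient methods follow the same pattern at a much larger scale.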

The paper also discusses methods for ensuring the quality of synthetic data, using metrics for diversity, correctness, and naturalness. Approaches such as quality estimation modules applied during generation show how a pipeline can prioritize high-fidelity synthetic outputs.
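As a rough illustration of quality control, the sketch below filters samples by a quality score and then measures diversity with distinct-n, a commonly used proxy that counts the share of unique n-grams. The summary does not say which metrics the paper uses, so both the metric choice and the `score` field (standing in for the output of a quality estimation module) are assumptions.

```python
def distinct_n(texts, n=2):
    """Fraction of n-grams across all texts that are unique (higher = more diverse)."""
    ngrams = []
    for text in texts:
        tokens = text.lower().split()
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0

def filter_by_quality(samples, score_fn, threshold=0.5):
    """Keep only samples whose quality score meets the threshold."""
    return [s for s in samples if score_fn(s) >= threshold]

# Hypothetical generated samples with precomputed quality scores.
samples = [
    {"text": "the food was great", "score": 0.9},
    {"text": "the food was bad", "score": 0.8},
    {"text": "food food food", "score": 0.2},
]

kept = filter_by_quality(samples, score_fn=lambda s: s["score"], threshold=0.5)
diversity = distinct_n([s["text"] for s in kept], n=2)
print(len(kept), round(diversity, 3))
```

In practice the score could come from a classifier's confidence in the intended label (correctness) or from a language model's fluency estimate (naturalness).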

Finally, the paper considers strategies for training models on synthetic data. These include regularization techniques and other methods that mitigate the noise and biases inherent in the training data.
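Label smoothing is one widely used regularizer for noisy labels and serves here as an example. The summary does not name the specific techniques the paper covers, so this choice is an assumption. The sketch shows that a smoothed target penalizes a confident disagreement with a possibly wrong synthetic label less than a hard target does.

```python
import math

def smooth_labels(label, num_classes, epsilon=0.1):
    """Soft target: 1 - epsilon on the labeled class, epsilon spread over the rest."""
    off = epsilon / (num_classes - 1)
    return [1.0 - epsilon if i == label else off for i in range(num_classes)]

def cross_entropy(probs, target):
    """Cross-entropy between predicted probabilities and a target distribution."""
    return -sum(t * math.log(p) for p, t in zip(probs, target) if t > 0)

# Hypothetical case: the model is confident in class 0,
# but the synthetic label says class 1 (possibly label noise).
probs = [0.98, 0.01, 0.01]
hard = [0.0, 1.0, 0.0]
soft = smooth_labels(1, num_classes=3, epsilon=0.1)

loss_hard = cross_entropy(probs, hard)
loss_soft = cross_entropy(probs, soft)
print(loss_hard > loss_soft)
```

The smaller loss on the suspect example limits how strongly a single noisy label can pull on the model during training.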

Applications and Implications

The paper outlines several applications of synthetic data generation, notably in addressing low-resource and long-tail problems where real data is sparse or unevenly distributed. Synthetic data serves as a valuable asset in these scenarios, providing robust training datasets that contribute to more generalized and accessible AI models.
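For the long-tail case, a simple way to plan generation is to count how many synthetic samples each class needs to match the largest class. The helper below is a hypothetical sketch of that bookkeeping, not a procedure described in the paper.

```python
from collections import Counter

def synthetic_quota(labels, target=None):
    """Number of synthetic samples to generate per class to reach `target`.
    By default, every class is topped up to the size of the largest class."""
    counts = Counter(labels)
    if target is None:
        target = max(counts.values())
    return {label: max(0, target - n) for label, n in counts.items()}

# Hypothetical imbalanced label distribution.
labels = ["common"] * 5 + ["uncommon"] * 2 + ["rare"]
quota = synthetic_quota(labels)
print(quota)
```

The resulting quotas could then drive per-class prompting, with rare classes receiving the most generated examples.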

In practical deployment contexts, synthetic data enables the training of lightweight models suitable for environments where computational resources are constrained. This capacity promotes faster inference and easier integration into real-world applications.

The use cases extend to specialized domains such as medicine, where data privacy concerns limit the availability of real data. Synthetic data facilitates meaningful advancements in medical AI tasks, offering enhanced training opportunities without compromising confidentiality.

Challenges and Future Directions

Despite these potential benefits, the paper acknowledges ongoing challenges in synthetic data generation. Ensuring the quality and diversity of synthetic data remains an open problem, particularly given the hallucinations and inaccuracies inherent in LLM outputs. The paper also scrutinizes the ethical and privacy implications of using synthetic data, calling for robust policy and technical frameworks to safeguard individual rights and data integrity.

These implications call for further research on aligning synthetic data with real-world requirements. Future work involves developing more sophisticated data generation techniques, mitigating bias, and fostering inclusive AI development strategies.

Conclusion

The paper by Guo and Chen provides a comprehensive examination of synthetic data generation with LLMs, presenting both the potential and the hurdles of this evolving field. By outlining current methodologies and future directions, the authors advance the understanding of AI-driven synthetic data generation. The work underscores the need for collaborative efforts to bridge the gap between technological possibilities and practical implementations, so that progress in AI research is ethical, efficient, and inclusive.