Best Practices and Lessons Learned on Synthetic Data

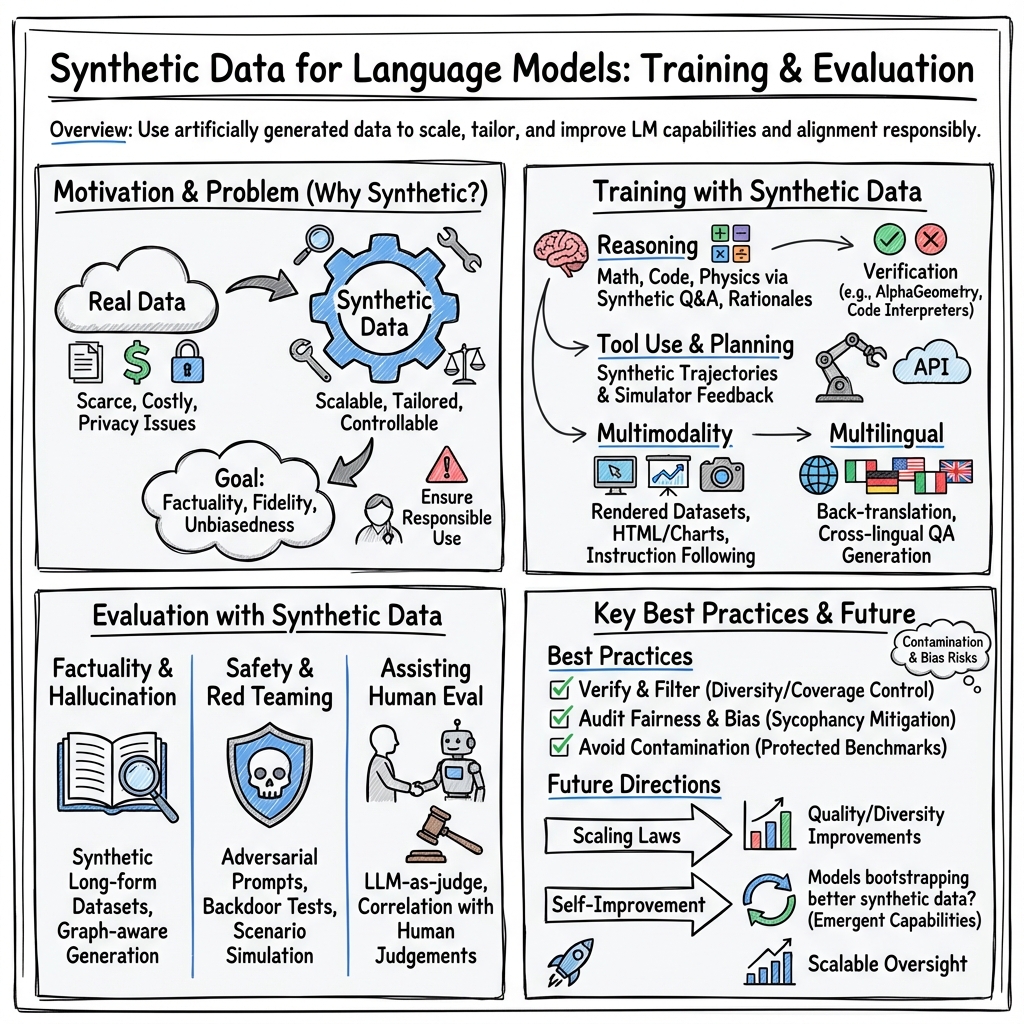

Abstract: The success of AI models relies on the availability of large, diverse, and high-quality datasets, which can be challenging to obtain due to data scarcity, privacy concerns, and high costs. Synthetic data has emerged as a promising solution by generating artificial data that mimics real-world patterns. This paper provides an overview of synthetic data research, discussing its applications, challenges, and future directions. We present empirical evidence from prior art to demonstrate its effectiveness and highlight the importance of ensuring its factuality, fidelity, and unbiasedness. We emphasize the need for responsible use of synthetic data to build more powerful, inclusive, and trustworthy LLMs.


- Exploring collaboration mechanisms for llm agents: A social psychology view. ArXiv preprint, abs/2310.02124, 2023a. URL https://arxiv.org/abs/2310.02124.

- Instruction tuning for large language models: A survey, 2023b. URL https://arxiv.org/abs/2308.10792.

- Siren’s song in the ai ocean: A survey on hallucination in large language models. ArXiv preprint, abs/2309.01219, 2023c. URL https://arxiv.org/abs/2309.01219.

- Llavar: Enhanced visual instruction tuning for text-rich image understanding. ArXiv preprint, abs/2306.17107, 2023d. URL https://arxiv.org/abs/2306.17107.

- Svit: Scaling up visual instruction tuning. ArXiv preprint, abs/2307.04087, 2023. URL https://arxiv.org/abs/2307.04087.

- Gender bias in coreference resolution: Evaluation and debiasing methods. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), pages 15–20, New Orleans, Louisiana, 2018. Association for Computational Linguistics. 10.18653/v1/N18-2003. URL https://aclanthology.org/N18-2003.

- Judging llm-as-a-judge with mt-bench and chatbot arena, 2023.

- The ai economist: Taxation policy design via two-level deep multiagent reinforcement learning. Science advances, 8(18):eabk2607, 2022.

- Mirror-generative neural machine translation. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net, 2020. URL https://openreview.net/forum?id=HkxQRTNYPH.

- Is this the real life? is this just fantasy? the misleading success of simulating social interactions with llms. ArXiv preprint, abs/2403.05020, 2024. URL https://arxiv.org/abs/2403.05020.

- Normbank: A knowledge bank of situational social norms. ArXiv preprint, abs/2305.17008, 2023. URL https://arxiv.org/abs/2305.17008.

- Universal and transferable adversarial attacks on aligned language models. ArXiv preprint, abs/2307.15043, 2023. URL https://arxiv.org/abs/2307.15043.

Explain it Like I'm 14

What this paper is about

This paper is about “synthetic data,” which means data made by computers rather than collected from real life. Think of it like practice worksheets a teacher creates for you: they aren’t from a real test, but they’re designed to look and feel like the real thing so you can learn. The authors explain how synthetic data is used to train and test LLMs (like chatbots), what works well, what can go wrong, and how to use it responsibly.

What questions the paper tries to answer

The paper looks at simple but important questions:

- When and how does synthetic data help LLMs learn better (for math, coding, using tools, planning, images + text, many languages, and following instructions)?

- How can synthetic data be used to evaluate models (check facts, test safety, and help humans judge quality)?

- What are the risks (like spreading mistakes or bias), and how can we avoid them?

- What should researchers do next to make synthetic data safer, higher-quality, and more useful?

How the researchers approached it

Instead of running one big new experiment, the authors reviewed and compared lots of existing studies and real examples. They gathered lessons from many projects that already use synthetic data and organized what worked and what didn’t.

To make the ideas clearer:

- Synthetic data can be made by:

  - Generative models (an AI “writer” or “artist” making new text or images),

  - Translating and re-translating text (called “back-translation,” like translating English to French and then back to English to create training pairs),

  - Simulations (virtual worlds or engines that create realistic tasks and outcomes),

  - Templates and rules (structured ways to produce consistent examples).

- They highlight techniques that check quality, like verifying math steps, running code to see if it works, or fact-checking answers with search or databases.
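The "templates and rules" route, paired with an automatic correctness check, can be sketched in a few lines of Python. This is a minimal illustration, not the paper's method: the question wording, the `OPS` table, the function names, and the simulated 20% error rate are all assumptions made up for this example.

```python
import operator
import random
import re

# Hypothetical rule table: each operation word maps to the function that defines it.
OPS = {"plus": operator.add, "minus": operator.sub, "times": operator.mul}

def make_example(rng):
    """Fill a fixed question template with random operands and compute the answer."""
    word = rng.choice(sorted(OPS))
    a, b = rng.randint(1, 99), rng.randint(1, 99)
    return {"question": f"What is {a} {word} {b}?", "answer": OPS[word](a, b)}

def is_correct(example):
    """Verify an example by re-deriving the answer from the question text alone."""
    m = re.fullmatch(r"What is (\d+) (plus|minus|times) (\d+)\?", example["question"])
    if m is None:
        return False
    a, word, b = int(m.group(1)), m.group(2), int(m.group(3))
    return OPS[word](a, b) == example["answer"]

def build_dataset(n, seed=0, noise=0.2):
    """Generate n examples, corrupt a fraction to mimic generator mistakes, then filter."""
    rng = random.Random(seed)
    raw = []
    for _ in range(n):
        ex = make_example(rng)
        if rng.random() < noise:
            ex["answer"] += 1  # simulated error (assumption, stands in for a faulty generator)
        raw.append(ex)
    kept = [ex for ex in raw if is_correct(ex)]
    return raw, kept

raw, kept = build_dataset(50)
print(f"kept {len(kept)} of {len(raw)} examples after verification")
```

The check works from the question text alone rather than trusting the generator's stored answer, which is what lets it catch the corrupted examples. For code or free-form facts, the same role would be played by running tests or looking things up.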

What they found and why it matters

Here are the main takeaways, kept brief and clear:

- Synthetic data helps models learn many skills:

  - Math and reasoning: Models improve by practicing on computer-made questions and step-by-step solutions, especially when answers are checked for correctness.

  - Coding: Because code can be executed, models can learn from “try-run-fix” loops. Synthetic datasets plus automatic checks help them write better, working programs.

  - Tools and planning: Simulated tasks teach models when to use calculators, search tools, or APIs, and how to break big jobs into smaller steps (planning).

  - Multimodal (images + text): Synthetic image–text pairs (like rendering charts or web pages and asking the model to describe or recreate them) improve how models understand visuals.

  - Multilingual: Back-translation creates training examples for low-resource languages and improves translation and question-answering across languages.

  - Alignment (following instructions and values): Synthetic conversations and feedback can train models to be more helpful and safer, provided the synthetic feedback is done carefully.

- Synthetic data is powerful for evaluation too:

  - Factuality: Automatically built tests can check whether a model sticks to facts and avoids “hallucinations” (confident but wrong answers).

  - Safety: “Red teaming” with synthetic prompts helps find unsafe behaviors at scale.

  - Assisting human evaluation: AI “judges” and code-running environments can speed up and lower the cost of scoring models, often matching human judgments.

- Clear benefits:

  - Scale: You can make lots of data quickly and cheaply.

  - Coverage: You can create rare or tricky cases humans don’t have much data for.

  - Privacy: You can train models without exposing real people’s private info.

  - Balance: You can design data to include underrepresented groups or languages.

- Real risks:

  - Quality and bias: If synthetic data is inaccurate or biased, the model will learn those mistakes.

  - Hallucination: Poorly made synthetic answers can teach models to sound confident even when they’re wrong.

  - Misinformation/deepfakes: Powerful generators can create convincing but false content.

  - Alignment ambiguity: Computer-made “human feedback” may miss human nuances.

  - Test contamination: If synthetic training data accidentally mirrors test questions, evaluations become less trustworthy.

- Best practices the paper emphasizes:

  - Verify and filter synthetic data (e.g., check math, run code, fact-check).

  - Keep it diverse and balanced, not just large.

  - Combine synthetic data with real data when possible.

  - Build better tools to detect test contamination and measure fairness.
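One simple way to look for the test contamination mentioned above is to measure how many word n-grams of each test question reappear in a synthetic training sample. The sketch below uses that heuristic as a stand-in; it is not a method taken from the paper. The function names, the 5-gram window, and the 0.5 threshold are assumptions chosen for illustration.

```python
def ngrams(text, n):
    """Return the set of lowercase word n-grams in a text (whitespace tokenization)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}

def overlap_ratio(candidate, test_item, n=5):
    """Fraction of the test item's n-grams that also occur in the candidate sample."""
    test_grams = ngrams(test_item, n)
    if not test_grams:
        return 0.0  # test item shorter than n words: nothing to compare
    return len(test_grams & ngrams(candidate, n)) / len(test_grams)

def filter_contaminated(synthetic, test_set, n=5, threshold=0.5):
    """Split synthetic samples into (clean, flagged) by their worst overlap with any test item."""
    clean, flagged = [], []
    for sample in synthetic:
        worst = max((overlap_ratio(sample, t, n) for t in test_set), default=0.0)
        (flagged if worst >= threshold else clean).append(sample)
    return clean, flagged

# Made-up data for illustration only.
test_set = ["If a train travels 60 miles in 2 hours, what is its average speed?"]
synthetic = [
    "Practice: if a train travels 60 miles in 2 hours, what is its average speed? Show work.",
    "A baker makes 12 loaves per hour. How many loaves in 3 hours?",
]
clean, flagged = filter_contaminated(synthetic, test_set)
print(len(clean), "clean,", len(flagged), "flagged")
```

Exact n-gram matching misses paraphrased or translated copies of test questions, so a check like this is best treated as a first-pass filter rather than proof that a dataset is clean.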

Why this work matters for the future

The authors suggest four promising directions:

- Understand “scaling laws” for synthetic data: How much synthetic data is enough, and how does quality vs. quantity affect learning?

- Make higher-quality, more controllable data: Use better generators and retrieval tools so synthetic examples are accurate, varied, and grounded in facts.

- Scalable oversight: Use synthetic scenarios to monitor advanced models more efficiently and reliably across many situations.

- Self-improvement: Explore whether models can generate better practice data for themselves over time—improving without constant human help—while keeping guardrails in place.

Simple bottom line

Synthetic data is like a smart practice gym for AI. It can make models better, faster, and safer—but only if the “practice drills” are well-designed, checked, and fair. Used responsibly, synthetic data can help build more capable, inclusive, and trustworthy AI systems. Used carelessly, it can spread errors and biases. The paper maps out how to get the benefits while avoiding the pitfalls.
