Self-Retrieval: An End-to-End LLM-driven Information Retrieval Architecture

Introduction

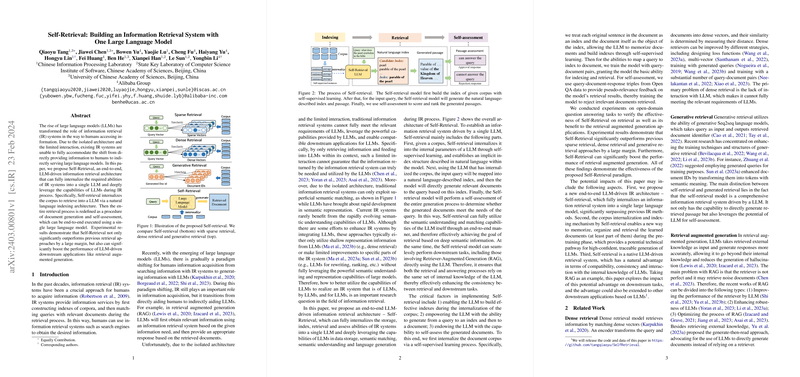

The integration of LLMs with Information Retrieval (IR) systems has been advancing, yet traditional IR systems struggle to meet the requirements that LLMs now place on them. This paper introduces Self-Retrieval, an architecture that internalizes the functions of an IR system entirely within an LLM. Self-Retrieval changes how documents are indexed, retrieved, and assessed, and it draws on the LLM's own abilities so that all of these steps happen inside one model. In experiments, the architecture outperforms conventional retrieval methods and shows potential to improve downstream applications such as retrieval-augmented generation.

Self-Retrieval Architecture

Self-Retrieval is built around three core processes: indexing, retrieval through a natural language index, and self-assessment. First, the document corpus is internalized into the LLM via self-supervised learning, which creates an index described in natural language inside the model. Upon receiving a query, the model generates relevant documents in two steps (first a natural language index, then the document it points to) and finally assesses how relevant each generated document is to the query. Because one model performs every step, retrieval draws on the LLM's full range of capabilities, including semantic understanding and generation, and is carried out end to end.
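The three-stage flow can be sketched as a small orchestration routine. This is a minimal illustration under stated assumptions, not the paper's implementation: the class `SelfRetrievalPipeline` and its stage callables (`generate_indexes`, `generate_document`, `assess`) are hypothetical names, and the toy versions below use word overlap over a three-document corpus as a stand-in for the LLM, which in Self-Retrieval would perform all three stages itself.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class SelfRetrievalPipeline:
    # Hypothetical stage callables; in Self-Retrieval one LLM plays all three roles.
    generate_indexes: Callable[[str, int], List[str]]  # (query, n) -> natural language indexes
    generate_document: Callable[[str, str], str]       # (query, index) -> document text
    assess: Callable[[str, str], float]                # (query, document) -> relevance score

    def retrieve(self, query: str, num_indexes: int = 3, top_k: int = 2) -> List[Tuple[str, float]]:
        scored: Dict[str, float] = {}
        for index in self.generate_indexes(query, num_indexes):  # step 1: query -> index
            document = self.generate_document(query, index)      # step 2: index -> document
            if document not in scored:                           # skip duplicate generations
                scored[document] = self.assess(query, document)  # step 3: self-assessment
        ranked = sorted(scored.items(), key=lambda item: item[1], reverse=True)
        return ranked[:top_k]


# Toy stand-ins (word overlap instead of an LLM) so the sketch runs end to end.
CORPUS = {
    "Eiffel Tower": "The Eiffel Tower is a wrought-iron tower in Paris.",
    "Great Wall": "The Great Wall of China stretches thousands of kilometers.",
    "Louvre": "The Louvre in Paris is the world's most-visited museum.",
}


def tokens(text: str) -> set:
    return set(re.findall(r"\w+", text.lower()))


def toy_generate_indexes(query: str, n: int) -> List[str]:
    # Rank titles by word overlap between the query and title + document.
    overlap = lambda title: len(tokens(query) & tokens(title + " " + CORPUS[title]))
    return sorted(CORPUS, key=overlap, reverse=True)[:n]


def toy_generate_document(query: str, index: str) -> str:
    return CORPUS[index]


def toy_assess(query: str, document: str) -> float:
    # Fraction of query words that appear in the document.
    q = tokens(query)
    return len(q & tokens(document)) / len(q)


pipeline = SelfRetrievalPipeline(toy_generate_indexes, toy_generate_document, toy_assess)
results = pipeline.retrieve("Which tower is in Paris?")
for doc, score in results:
    print(f"{score:.2f}  {doc}")
```

With these stand-ins, the query ranks the Eiffel Tower passage first (score 0.8) and the Louvre passage second (0.6). Keeping the stages as separate callables is only for readability; the point of the architecture is that a single model fills every role.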

Internalization and Indexing

The first step internalizes the document corpus into the LLM, enabling it to build implicit index structures described in natural language. Through self-supervised learning, the model memorizes both the natural language indexes and the documents of the corpus, giving the later retrieval steps a foundation built on the LLM's own understanding of the content.
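As a rough illustration of what self-supervised indexing data could look like, the sketch below turns a corpus into training pairs. The pair format is an assumption, not the paper's recipe: each sentence is mapped to its document's title (used here as a stand-in for the natural language index, also an assumption), and each title is mapped to the full document. A model fine-tuned on such pairs would learn both to recall an index from partial content and to reproduce a document from its index. `split_sentences` and `build_indexing_examples` are hypothetical helpers.

```python
import re
from typing import Dict, List


def split_sentences(text: str) -> List[str]:
    # Naive splitter: break after ., ! or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def build_indexing_examples(corpus: Dict[str, str]) -> List[Dict[str, str]]:
    """Turn {title: document} into hypothetical self-supervised training pairs."""
    examples: List[Dict[str, str]] = []
    for title, document in corpus.items():
        # Partial content -> index: recall which document a sentence belongs to.
        for sentence in split_sentences(document):
            examples.append({"input": sentence, "target": title})
        # Index -> document: reproduce the full text from its index.
        examples.append({"input": title, "target": document})
    return examples


examples = build_indexing_examples(
    {"Eiffel Tower": "The Eiffel Tower is in Paris. It opened in 1889."}
)
for ex in examples:
    print(f"{ex['input']!r} -> {ex['target']!r}")
```

The two pair types mirror the two generation steps used at retrieval time (content to index, index to document); a real setup would also need a prompt template and a tokenizer, which are omitted here.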

Natural Language Index-driven Retrieval

Retrieval first generates a natural language index from the input query and then generates the relevant document from that index. To keep the generated text faithful to the corpus, the model uses constrained decoding, which limits each decoding step to continuations that occur in the internalized documents. This prevents the model from producing text that matches no document and improves retrieval accuracy.
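Constrained decoding of this kind is commonly implemented with a prefix trie over the tokenized corpus: at each step, only tokens that extend some stored sequence are allowed. The sketch below assumes whitespace tokens, greedy search, and a hypothetical scoring function (`make_overlap_scorer`) in place of the LLM's next-token scores. A real system would instead mask the model's logits with the trie and would likely use beam search to return several candidates.

```python
from typing import Callable, Dict, List, Sequence

END = "<eos>"  # end-of-sequence marker stored at the end of every corpus entry


class Trie:
    """Prefix trie over token sequences; each root-to-END path spells a corpus entry."""

    def __init__(self) -> None:
        self.children: Dict[str, "Trie"] = {}

    def insert(self, tokens: Sequence[str]) -> None:
        node = self
        for tok in [*tokens, END]:
            node = node.children.setdefault(tok, Trie())


Scorer = Callable[[List[str], str], float]  # (prefix so far, candidate token) -> score


def constrained_greedy_decode(trie: Trie, score: Scorer) -> List[str]:
    prefix: List[str] = []
    node = trie
    while True:
        allowed = list(node.children)  # only tokens that continue some stored sequence
        if not allowed:
            raise ValueError("empty trie: nothing to decode")
        best = max(allowed, key=lambda tok: score(prefix, tok))
        if best == END:
            return prefix
        prefix.append(best)
        node = node.children[best]


def make_overlap_scorer(query: str) -> Scorer:
    """Hypothetical stand-in for LLM next-token scores: favor tokens found in the query."""
    query_tokens = set(query.lower().split())

    def score(prefix: List[str], tok: str) -> float:
        if tok == END:
            return 0.5  # preferred over tokens absent from the query, not over query tokens
        return 1.0 if tok in query_tokens else 0.0

    return score


trie = Trie()
for entry in ["eiffel tower", "great wall", "tower bridge"]:
    trie.insert(entry.split())

print(constrained_greedy_decode(trie, make_overlap_scorer("tower bridge london")))
print(constrained_greedy_decode(trie, make_overlap_scorer("eiffel wall")))
```

The second query asks for "eiffel wall", which matches no entry; the trie forces the decoder to complete a sequence that actually exists ("eiffel tower") rather than invent one.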

Self-Assessment

Self-Retrieval also includes a self-assessment phase in which the model evaluates how relevant each retrieved document is to the input query. This phase uses pseudo-relevance feedback: the LLM assigns each generated document a score reflecting its judged relevance, and these scores determine the final ranking so that the most relevant documents come first.
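The source does not give a scoring formula, so the following is only one plausible way to turn self-assessment into a ranking. It assumes two hypothetical signals: the log-probability the model assigned when generating each document, and a probability, judged by the same model, that the document is relevant to the query. The combination rule (a sum of log-probabilities weighted by `alpha`) and the names `Candidate` and `self_assess_rerank` are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Candidate:
    document: str
    generation_logprob: float  # assumed: log P(document | query) from the retrieval step


def self_assess_rerank(
    query: str,
    candidates: List[Candidate],
    judge_prob: Callable[[str, str], float],  # hypothetical: P("relevant") judged by the same LLM
    alpha: float = 1.0,                        # hypothetical weight on the self-assessment term
) -> List[Tuple[str, float]]:
    scored = []
    for cand in candidates:
        p_rel = max(judge_prob(query, cand.document), 1e-9)  # clamp to avoid log(0)
        scored.append((cand.document, cand.generation_logprob + alpha * math.log(p_rel)))
    # Highest combined score first.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


judgements = {"doc A": 0.2, "doc B": 0.9}
ranked = self_assess_rerank(
    "who designed the tower?",
    [Candidate("doc A", -1.0), Candidate("doc B", -1.5)],
    judge_prob=lambda q, d: judgements[d],
)
for doc, score in ranked:
    print(f"{score:.3f}  {doc}")
```

Here "doc A" was generated with higher confidence (-1.0 vs. -1.5), but the self-assessment judges it far less relevant (0.2 vs. 0.9), so "doc B" moves to the top. Working in log space keeps the two terms on a comparable scale.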

Experimental Insights

Experiments on open-domain question answering show that Self-Retrieval significantly outperforms existing sparse, dense, and generative retrieval methods. Beyond stronger retrieval, it also substantially improves downstream tasks such as retrieval-augmented generation (RAG), which suggests that retrieval and generation benefit from being handled within the same model.

Theoretical and Practical Implications

Self-Retrieval represents a significant shift in how IR systems and LLMs interact, proposing a unified model that internalizes the retrieval process. Theoretically, it advances our understanding of how LLMs can handle complex retrieval tasks by combining storage, semantic understanding, and document generation within a single model. Practically, it lays the groundwork for IR systems that integrate seamlessly with LLM-driven applications, improving both retrieval accuracy and the efficiency of downstream processes.

Future Directions

Beyond its current results, Self-Retrieval opens new avenues for research, particularly understanding the scaling relationship between corpus size and model parameter count, and extending the model to more domains and tasks. Further work in these areas could clarify the full potential of LLM-driven IR systems and their impact on information access and knowledge discovery.

Conclusion

The Self-Retrieval architecture marks a new approach to information retrieval, in which an LLM's capabilities are used to perform retrieval end to end. By internalizing the entire retrieval process within an LLM, it bridges the gap between traditional IR systems and the adaptive, semantically rich capabilities of modern LLMs, offering a promising direction for future IR system development.

Limitations and Considerations

The paper also acknowledges limitations of the current implementation of Self-Retrieval, chiefly that the optimal scaling between the size of the document corpus and the number of model parameters has yet to be explored. In addition, the model's effectiveness on downstream tasks other than retrieval-augmented generation remains an open area for future research.