RetroLLM: An Integration of Retrieval and Generation in LLMs

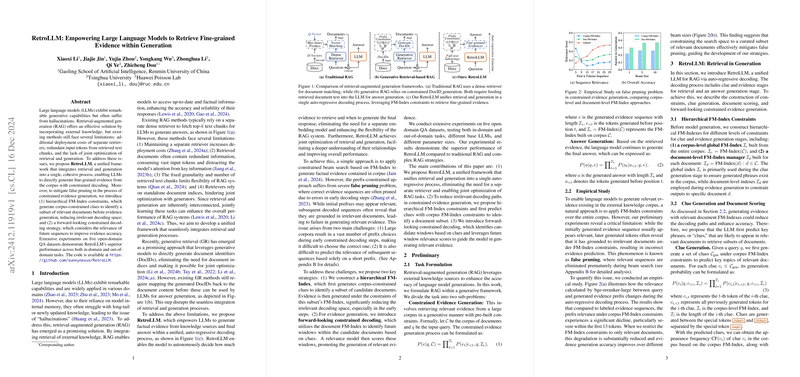

The paper "RetroLLM: Empowering LLMs to Retrieve Fine-grained Evidence within Generation" presents a framework that helps LLMs generate factually grounded responses. Although powerful, these models often hallucinate because they rely on knowledge stored in their own parameters, which is why retrieval-augmented generation (RAG) methods that bring in external information are attractive. Most existing RAG methods, however, have three drawbacks: higher deployment costs because a separate retriever must be run, redundant tokens in the retrieved input, and difficulty optimizing retrieval and generation jointly. RetroLLM addresses these problems by unifying retrieval and generation within a single decoder.

RetroLLM achieves this integration through corpus-constrained decoding. It uses an FM-Index, a compressed full-text index that supports fast substring search, to restrict generation so that the evidence the model produces is text that actually occurs in the corpus. This removes decoding paths that do not correspond to corpus text, which lowers computational cost and makes retrieval more efficient. The paper also introduces a hierarchical FM-Index and a forward-looking constrained decoding strategy to mitigate false pruning, a critical problem in which potentially correct evidence sequences are wrongly discarded during the early stages of decoding.
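To make corpus-constrained decoding concrete, here is a minimal character-level sketch in Python. It builds a toy FM-Index (suffix array, Burrows-Wheeler transform, `C` table, and occurrence counts) over the *reversed* corpus, so that each backward-search step corresponds to appending one token to the generated prefix. The tokens that keep the match range non-empty are exactly the continuations that exist in the corpus; a decoder would mask all other tokens. The names `FMIndex` and `constrain`, the character-level tokens, and the `|` document separator are illustrative assumptions, not RetroLLM's implementation, which works on model tokens over a large corpus.

```python
# Toy FM-Index for corpus-constrained decoding (character-level sketch).
# Assumption: each character is a token; real systems index model token IDs.

SENTINEL = "\x00"  # unique end marker that sorts before every other character


class FMIndex:
    def __init__(self, text: str):
        text += SENTINEL
        # Naive suffix array: fine for a toy corpus, far too slow for real ones.
        sa = sorted(range(len(text)), key=lambda i: text[i:])
        # Burrows-Wheeler transform: the character preceding each sorted suffix.
        self.bwt = "".join(text[i - 1] for i in sa)
        self.n = len(self.bwt)
        self.alphabet = sorted(set(text))
        # C[c] = number of characters in the text strictly smaller than c.
        self.C, total = {}, 0
        for c in self.alphabet:
            self.C[c] = total
            total += text.count(c)
        # occ[c][i] = occurrences of c in bwt[:i] (dense table, no sampling).
        self.occ = {c: [0] * (self.n + 1) for c in self.alphabet}
        for i, ch in enumerate(self.bwt):
            for c in self.alphabet:
                self.occ[c][i + 1] = self.occ[c][i] + (ch == c)

    def extend(self, lo: int, hi: int, c: str) -> tuple[int, int]:
        """Backward-search step: prepend c to the pattern whose range is [lo, hi)."""
        if c not in self.C:
            return 0, 0
        return self.C[c] + self.occ[c][lo], self.C[c] + self.occ[c][hi]


def constrain(index: FMIndex, prefix: str, blocked=("|", SENTINEL)) -> list[str]:
    """Return the tokens that may follow `prefix` so the output stays inside the corpus.

    The index is built over the reversed corpus, so prepending a character in
    backward search is equivalent to appending it to the forward prefix.
    """
    lo, hi = 0, index.n  # the empty pattern matches every suffix
    for c in prefix:
        lo, hi = index.extend(lo, hi, c)
        if lo >= hi:
            return []  # prefix is not a substring of the corpus
    allowed = []
    for c in index.alphabet:
        if c in blocked:  # never cross a document boundary or the end marker
            continue
        new_lo, new_hi = index.extend(lo, hi, c)
        if new_hi > new_lo:
            allowed.append(c)
    return allowed


docs = ["paris is the capital of france", "berlin is the capital of germany"]
index = FMIndex("|".join(docs)[::-1])

print(constrain(index, "the capital of "))          # ['f', 'g']
print(constrain(index, "berlin is the capital o"))  # ['f']
print(constrain(index, "france"))                   # [] (the document ends here)
```

Indexing the reversed text is one standard way to let FM-Index search, which naturally extends a pattern to the left, support left-to-right generation. A production index would replace the dense occurrence tables with a compressed, sampled structure.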

With the hierarchical FM-Index, RetroLLM first generates clues constrained to the corpus, and these clues guide the model toward a relevant subset of documents. The model then generates evidence from that subset, constrained by a forward-looking strategy that estimates the relevance of the text that would follow each candidate continuation. Because the decoder considers where a sequence leads, not only its next tokens, it is less likely to prune correct evidence early, which makes evidence generation more robust.
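The two-stage idea can be illustrated with a deliberately simplified Python sketch. Stage 1 keeps only the documents that contain the generated clues; stage 2 scores each next word that actually occurs in those documents by mixing a model score with the relevance of the window of text that would follow it. This is a loose reading of the hierarchical FM-Index and forward-looking decoding, not the paper's algorithm: the word-level tokens, plain substring search, word-overlap relevance function, hypothetical model scores, and the `alpha` weight are all assumptions.

```python
# Toy sketch of clue-guided document selection plus forward-looking scoring.
# Assumptions (not from the paper): word-level tokens, substring search instead
# of a hierarchical FM-Index, word overlap as the relevance scorer, made-up
# model scores, and a fixed weight `alpha` for mixing the two signals.


def select_documents(docs: list[str], clues: list[str]) -> list[str]:
    """Stage 1: keep only the documents that contain every generated clue."""
    return [d for d in docs if all(clue in d for clue in clues)]


def relevance(window: list[str], query: str) -> float:
    """Hypothetical relevance score: fraction of query words found in the window."""
    q = set(query.split())
    return len(q & set(window)) / max(len(q), 1)


def next_word_scores(docs, prefix, query, model_scores, lookahead=8, alpha=0.5):
    """Stage 2: score each corpus-valid next word by mixing the model's score with
    the best relevance of the future window that the word opens up."""
    lookahead_rel: dict[str, float] = {}
    k = len(prefix)
    for doc in docs:
        words = doc.split()
        for i in range(len(words) - k):
            if words[i:i + k] == prefix:  # prefix occurs here, so words[i + k] is valid
                nxt = words[i + k]
                window = words[i + k:i + k + lookahead]  # text the decoder would commit to
                lookahead_rel[nxt] = max(lookahead_rel.get(nxt, 0.0), relevance(window, query))
    return {w: (1 - alpha) * model_scores.get(w, 0.0) + alpha * r
            for w, r in lookahead_rel.items()}


docs = [
    "the city of berlin is the capital of germany",
    "the river of berlin is the spree",
    "paris is the capital of france",
]
selected = select_documents(docs, clues=["berlin"])  # drops the Paris document
scores = next_word_scores(selected, prefix=["the"], query="capital of germany",
                          model_scores={"river": 0.6, "city": 0.4})  # hypothetical scores
print(max(scores, key=scores.get))  # city
```

On its own, the hypothetical model score prefers `river`, but the window after `city` contains every query word, so the look-ahead term keeps that path alive. Avoiding this kind of premature pruning is the motivation behind the forward-looking strategy.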

The paper validates RetroLLM empirically on several open-domain question-answering (QA) datasets, where it outperforms both direct-generation and retrieval-augmented generation baselines. It achieves higher accuracy and F1 scores while consuming significantly fewer tokens: its reported token efficiency is roughly 2.1x that of traditional RAG methods such as Iter-RetGen, and it also scores better on both precision and recall.
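Because the comparison is stated in terms of precision, recall, and F1, a short sketch of the token-overlap F1 commonly used to score open-domain QA answers may help. It assumes SQuAD-style scoring with simplified normalization (lowercasing and whitespace splitting only); the paper's exact evaluation script may normalize answers differently.

```python
from collections import Counter


def token_f1(prediction: str, gold: str) -> float:
    """Token-overlap F1 between a predicted and a gold answer (simplified normalization)."""
    pred = prediction.lower().split()
    ref = gold.lower().split()
    common = Counter(pred) & Counter(ref)  # multiset intersection of tokens
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)  # share of predicted tokens that are correct
    recall = overlap / len(ref)      # share of gold tokens that were produced
    return 2 * precision * recall / (precision + recall)


print(token_f1("the city of Berlin", "Berlin"))  # 0.4
```

A verbose answer such as "the city of Berlin" keeps full recall but loses precision, so F1 rewards answers that are both complete and concise.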

The framework has both practical and theoretical implications. Practically, RetroLLM reduces infrastructure complexity and improves efficiency in settings that require factual generation. Theoretically, it challenges the conventional separation of retrieval and generation, suggesting that the two could be integrated more deeply in LLM architectures.

Future directions suggested by this work include integrating reasoning into the generation sequence as well, which points toward truly end-to-end generative retrieval systems. Techniques such as speculative decoding could also improve RetroLLM's scalability and efficiency, broadening its applicability.

In conclusion, RetroLLM represents a significant stride in optimizing knowledge retrieval and utilization in LLMs, with promising avenues for advancing both the efficacy and efficiency of AI systems dealing with dynamic and knowledge-intensive tasks.