Evaluating LLMs: Introducing the \texttt{FAC$^2$E} Framework

The Concept Behind \texttt{FAC$^2$E}

In light of the remarkable advancements in LLMs, our ability to evaluate these models comprehensively becomes crucial. Traditional benchmarks often focus on overall task performance, which, while useful, does not fully capture the nuanced capabilities of LLMs. To address this gap, we introduce \texttt{FAC$^2$E} - a framework designed for the Fine-grained and Cognition-grounded evaluation of LLMs' Capabilities. Distinctively, \texttt{FAC$^2$E} leverages a multi-dimensional approach to differentiate between language-related and cognition-related capabilities, allowing for a more nuanced understanding of these complex models.

Capabilities and Their Axes

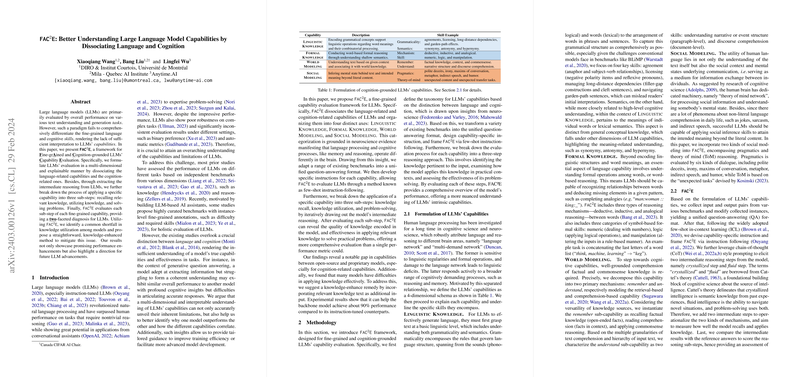

\texttt{FAC$^2$E} categorizes LLM capabilities into four axes:

- \textsc{Linguistic Knowledge} focuses on grammatical and semantic aspects.

- \textsc{Formal Knowledge} assesses models on their ability to conduct symbolic reasoning.

- \textsc{World Modeling} evaluates comprehension and application of factual and commonsense knowledge.

- \textsc{Social Modeling} looks into inferencing mental states and beyond-literal contents comprehension.

Each capability axis encompasses various specific skills crucial for understanding natural language and solving tasks that require cognition.

Framework Components and Evaluation Process

\texttt{FAC$^2$E} performs an evaluation by breaking down tasks into three steps: recalling relevant knowledge, utilizing knowledge, and problem-solving. This process enables a more granular assessment of LLMs, pinpointing their strengths and weaknesses in each step. Through this framework, \texttt{FAC$^2$E} not only assesses the effectiveness of knowledge recall but also the model's ability to apply this knowledge contextually.

Insights and Implications

Preliminary evaluations using \texttt{FAC$^2$E} reveal significant findings:

- Knowledge Utilization Gap: There's a pronounced shortfall in how models utilize knowledge, despite demonstrating strong recall abilities. This gap suggests a potential area for enhancing LLMs by focusing on improving knowledge application mechanisms within the models.

- Distinction between Language and Cognition: The framework's results underscore the importance of treating language processing and cognitive processing as distinct capabilities within LLMs. This distinction has profound implications on how we approach model training and evaluation moving forward.

- Direction for Future Development: The insights provided by \texttt{FAC$^2$E} not only highlight current limitations but also offer clear pathways for future advancements in LLM research. Specifically, a focused approach on improving knowledge utilization could drive the next wave of breakthroughs in the field.

Conclusion

In conclusion, the \texttt{FAC$^2$E} framework represents a significant step forward in our quest to better understand and evaluate LLMs. By adopting a nuanced approach that accounts for both language and cognition-related capabilities, \texttt{FAC$^2$E} offers the research community a robust tool for dissecting the complexities of modern LLMs. Through continued refinement and application of this framework, we can anticipate not only more sophisticated evaluations but also targeted improvements in LLM performance and reliability.